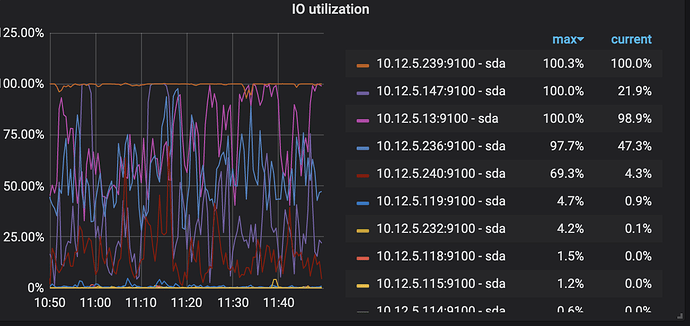

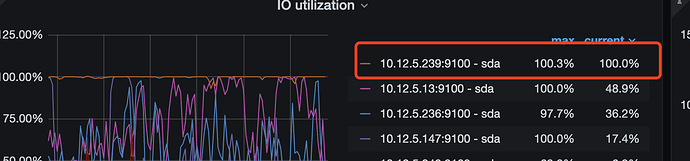

The TiKV panels of the Overview dashboard are below:

test-cluster-Overview_2021-07-01T06_33_17.941Z.json (1.4 MB)

Here is the store information:

```json
{
  "count": 6,
  "stores": [
    {
      "store": {
        "id": 24478148,
        "address": "10.12.5.236:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.236:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625060753,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150052300593698,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "2.929TiB",
        "used_size": "2.336TiB",
        "leader_count": 28173,
        "leader_weight": 2,
        "leader_score": 14086.5,
        "leader_size": 2287717,
        "region_count": 81160,
        "region_weight": 2,
        "region_score": 3279733.5,
        "region_size": 6559467,
        "sending_snap_count": 3,
        "start_ts": "2021-06-30T13:45:53Z",
        "last_heartbeat_ts": "2021-07-01T14:34:12.300593698Z",
        "uptime": "24h48m19.300593698s"
      }
    },
    {
      "store": {
        "id": 24480822,
        "address": "10.12.5.239:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.239:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1624981287,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150058184653393,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "3.34TiB",
        "used_size": "2.456TiB",
        "leader_count": 28175,
        "leader_weight": 2,
        "leader_score": 14087.5,
        "leader_size": 2119216,
        "region_count": 92540,
        "region_weight": 2,
        "region_score": 3593884,
        "region_size": 7187768,
        "sending_snap_count": 2,
        "receiving_snap_count": 1,
        "start_ts": "2021-06-29T15:41:27Z",
        "last_heartbeat_ts": "2021-07-01T14:34:18.184653393Z",
        "uptime": "46h52m51.184653393s"
      }
    },
    {
      "store": {
        "id": 24590972,
        "address": "10.12.5.240:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.240:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1624981319,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150052511248317,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "3.732TiB",
        "used_size": "2.002TiB",
        "leader_count": 28177,
        "leader_weight": 2,
        "leader_score": 14088.5,
        "leader_size": 2257348,
        "region_count": 79045,
        "region_weight": 2,
        "region_score": 3137037,
        "region_size": 6274074,
        "start_ts": "2021-06-29T15:41:59Z",
        "last_heartbeat_ts": "2021-07-01T14:34:12.511248317Z",
        "uptime": "46h52m13.511248317s"
      }
    },
    {
      "store": {
        "id": 38833310,
        "address": "10.12.5.147:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.147:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1624981158,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150055810678172,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "3.538TiB",
        "used_size": "2.103TiB",
        "leader_count": 28177,
        "leader_weight": 2,
        "leader_score": 14088.5,
        "leader_size": 2185865,
        "region_count": 76711,
        "region_weight": 2,
        "region_score": 3037499.5,
        "region_size": 6074999,
        "sending_snap_count": 1,
        "start_ts": "2021-06-29T15:39:18Z",
        "last_heartbeat_ts": "2021-07-01T14:34:15.810678172Z",
        "uptime": "46h54m57.810678172s"
      }
    },
    {
      "store": {
        "id": 262397455,
        "address": "10.12.5.13:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.13:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625010184,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150057591985664,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "4.672TiB",
        "used_size": "1.222TiB",
        "leader_count": 14089,
        "leader_weight": 1,
        "leader_score": 14089,
        "leader_size": 1137581,
        "region_count": 46467,
        "region_weight": 1,
        "region_score": 3532790,
        "region_size": 3532790,
        "sending_snap_count": 1,
        "receiving_snap_count": 1,
        "start_ts": "2021-06-29T23:43:04Z",
        "last_heartbeat_ts": "2021-07-01T14:34:17.591985664Z",
        "uptime": "38h51m13.591985664s"
      }
    },
    {
      "store": {
        "id": 268391998,
        "address": "10.12.5.119:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.119:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1624981378,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1625150059176263312,
        "state_name": "Up"
      },
      "status": {
        "capacity": "320TiB",
        "available": "281.7TiB",
        "used_size": "1.221TiB",
        "leader_count": 14091,
        "leader_weight": 1,
        "leader_score": 14091,
        "leader_size": 1028339,
        "region_count": 46785,
        "region_weight": 1,
        "region_score": 3532367,
        "region_size": 3532367,
        "start_ts": "2021-06-29T15:42:58Z",
        "last_heartbeat_ts": "2021-07-01T14:34:19.176263312Z",
        "uptime": "46h51m21.176263312s"
      }
    }
  ]
}
```
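The scores in this output can be cross-checked by hand. The Python sketch below is an illustration, not PD's actual code. It assumes that `leader_score = leader_count / leader_weight` and that, while a store has plenty of free disk, `region_score = region_size / region_weight`. PD's real region-score formula also adjusts for low disk space, which is not modeled here. With the figures above, both relations hold for all six stores. PD's balance schedulers compare these weighted scores rather than raw counts, so the two weight-1 stores (10.12.5.13 and 10.12.5.119) carry roughly half the leaders and regions of the weight-2 stores while ending up with comparable scores.

```python
# Fields copied from the store output above; all other fields are omitted.
STORES = {
    24478148: {"leader_count": 28173, "leader_weight": 2, "leader_score": 14086.5,
               "region_size": 6559467, "region_weight": 2, "region_score": 3279733.5},
    24480822: {"leader_count": 28175, "leader_weight": 2, "leader_score": 14087.5,
               "region_size": 7187768, "region_weight": 2, "region_score": 3593884},
    24590972: {"leader_count": 28177, "leader_weight": 2, "leader_score": 14088.5,
               "region_size": 6274074, "region_weight": 2, "region_score": 3137037},
    38833310: {"leader_count": 28177, "leader_weight": 2, "leader_score": 14088.5,
               "region_size": 6074999, "region_weight": 2, "region_score": 3037499.5},
    262397455: {"leader_count": 14089, "leader_weight": 1, "leader_score": 14089,
                "region_size": 3532790, "region_weight": 1, "region_score": 3532790},
    268391998: {"leader_count": 14091, "leader_weight": 1, "leader_score": 14091,
                "region_size": 3532367, "region_weight": 1, "region_score": 3532367},
}


def leader_score(s: dict) -> float:
    # Leader count normalized by the store's leader weight.
    return s["leader_count"] / s["leader_weight"]


def region_score(s: dict) -> float:
    # Simplified region score: region size normalized by region weight.
    # Assumption: the store has ample free space, so PD's low-space
    # adjustment is not applied.
    return s["region_size"] / s["region_weight"]


for store_id, s in STORES.items():
    print(f"{store_id:>10}  leader {leader_score(s):>9.1f} (reported {s['leader_score']})"
          f"  region {region_score(s):>11.1f} (reported {s['region_score']})")

# Spread between the highest and lowest region score across stores.
scores = [region_score(s) for s in STORES.values()]
print(f"region score max/min ratio: {max(scores) / min(scores):.3f}")
```

If a store's recomputed score ever diverges from the reported one, that store has most likely entered PD's low-space scoring range, which this simplified sketch deliberately ignores.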