[TiDB version] v4.0.6

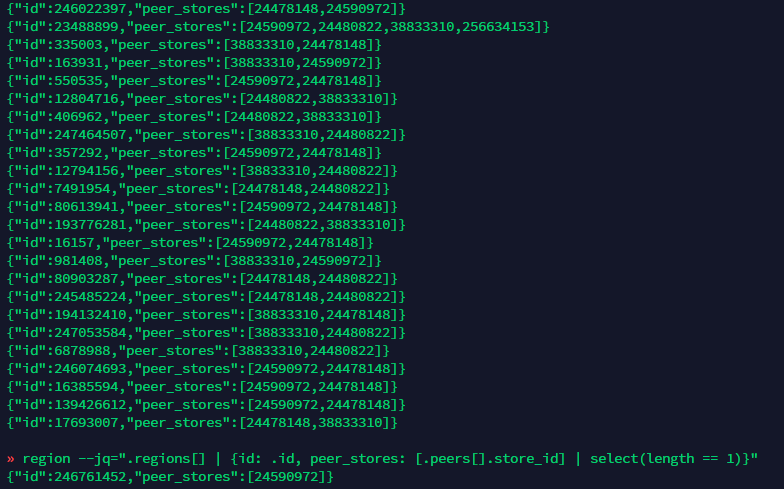

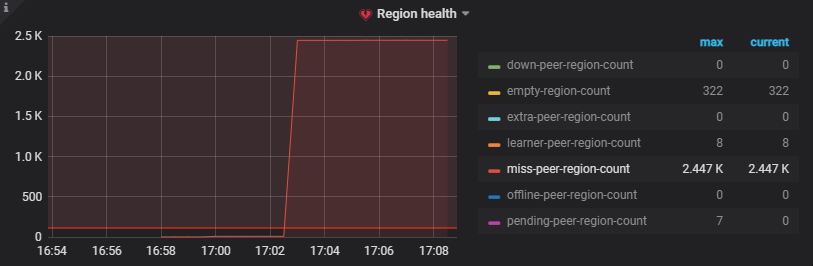

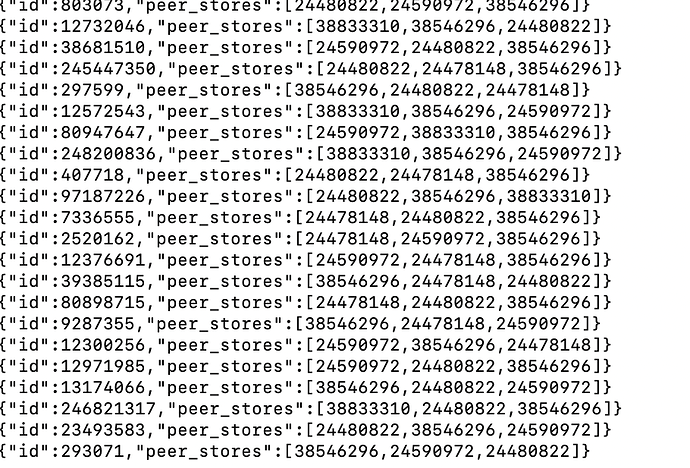

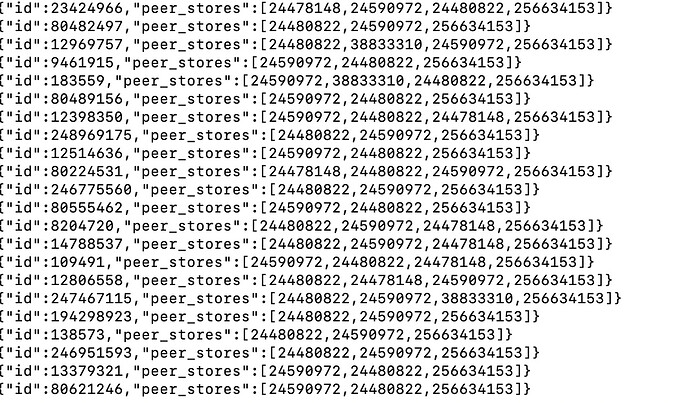

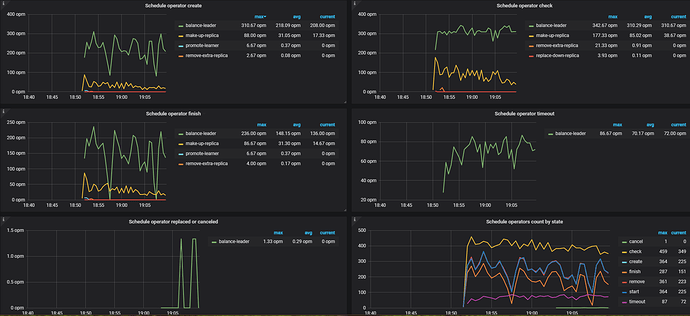

Two nodes had been stuck in Pending Offline. Today I saw that the leader count on both had dropped to 0, so I removed the two of them directly with `scale-in --force`.

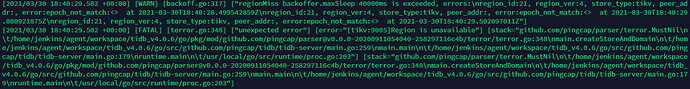

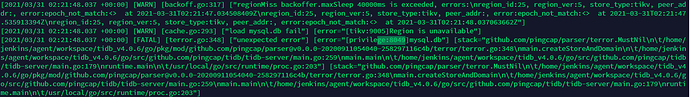

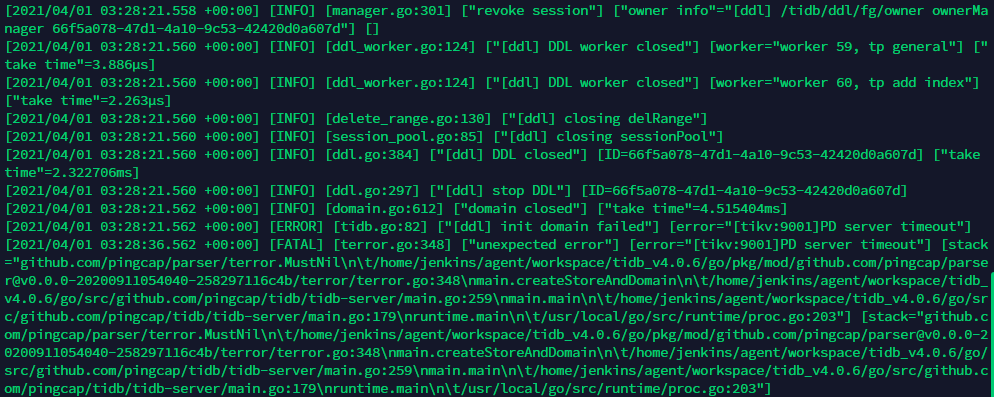

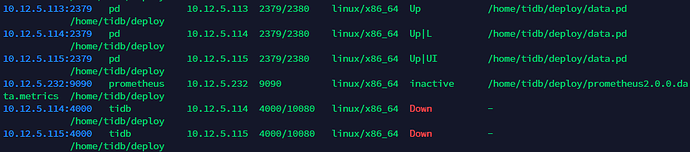

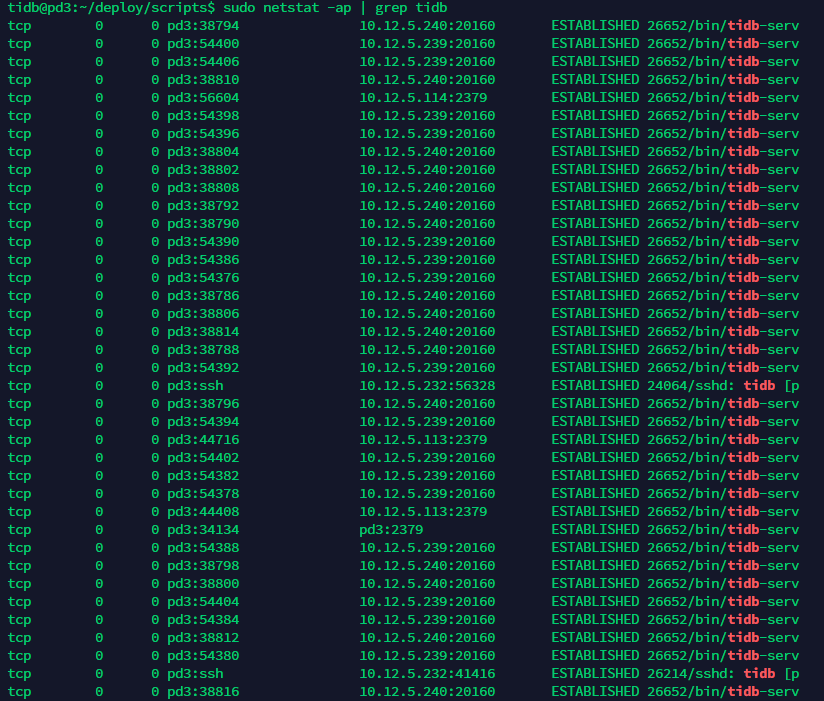

I then tried restarting the cluster and found that the TiDB nodes could not start.

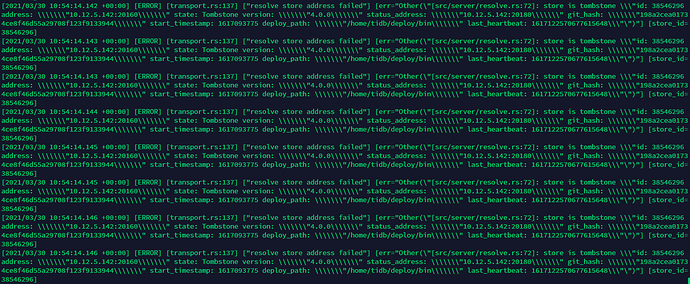

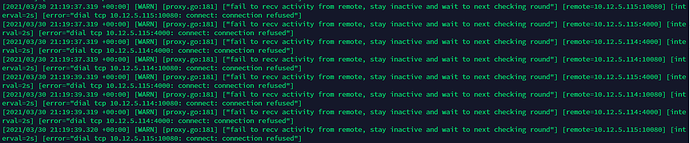

At the same time, errors also appeared in the TiKV node logs:

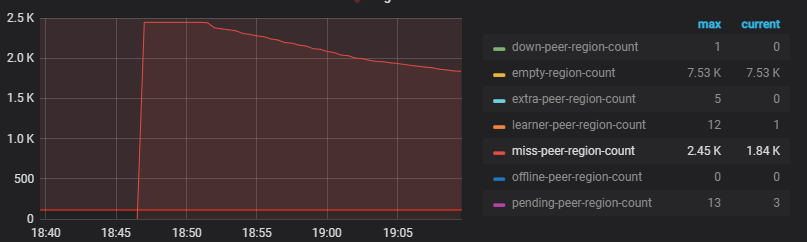

In the store output, the leader count of most nodes has dropped to 0:

    {
      "count": 6,
      "stores": [
        {
          "store": {
            "id": 38833310,
            "address": "10.12.5.147:20160",
            "version": "4.0.6",
            "status_address": "10.12.5.147:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617101326,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617129874605847067,
            "state_name": "Up"
          },
          "status": {
            "capacity": "0B",
            "available": "0B",
            "used_size": "0B",
            "leader_count": 0,
            "leader_weight": 2,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 94661,
            "region_weight": 2,
            "region_score": 8681,
            "region_size": 17362,
            "start_ts": "2021-03-30T10:48:46Z",
            "last_heartbeat_ts": "2021-03-30T18:44:34.605847067Z",
            "uptime": "7h55m48.605847067s"
          }
        },
        {
          "store": {
            "id": 256634153,
            "address": "10.12.5.11:20160",
            "state": 1,
            "version": "4.0.6",
            "status_address": "10.12.5.11:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617003174,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617056393120558835,
            "state_name": "Offline"
          },
          "status": {
            "capacity": "0B",
            "available": "0B",
            "used_size": "0B",
            "leader_count": 0,
            "leader_weight": 1,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 0,
            "region_weight": 1,
            "region_score": 0,
            "region_size": 0,
            "start_ts": "2021-03-29T07:32:54Z",
            "last_heartbeat_ts": "2021-03-29T22:19:53.120558835Z",
            "uptime": "14h46m59.120558835s"
          }
        },
        {
          "store": {
            "id": 256634687,
            "address": "10.12.5.12:20160",
            "version": "4.0.6",
            "status_address": "10.12.5.12:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617101516,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617102184699004054,
            "state_name": "Up"
          },
          "status": {
            "capacity": "2.952TiB",
            "available": "2.929TiB",
            "used_size": "14.98GiB",
            "leader_count": 421,
            "leader_weight": 1,
            "leader_score": 421,
            "leader_size": 30916,
            "region_count": 463,
            "region_weight": 1,
            "region_score": 30916,
            "region_size": 30916,
            "start_ts": "2021-03-30T10:51:56Z",
            "last_heartbeat_ts": "2021-03-30T11:03:04.699004054Z",
            "uptime": "11m8.699004054s"
          }
        },
        {
          "store": {
            "id": 24478148,
            "address": "10.12.5.236:20160",
            "version": "4.0.6",
            "status_address": "10.12.5.236:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617101400,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617128494296419927,
            "state_name": "Up"
          },
          "status": {
            "capacity": "0B",
            "available": "0B",
            "used_size": "0B",
            "leader_count": 0,
            "leader_weight": 2,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 95298,
            "region_weight": 2,
            "region_score": 5293,
            "region_size": 10586,
            "start_ts": "2021-03-30T10:50:00Z",
            "last_heartbeat_ts": "2021-03-30T18:21:34.296419927Z",
            "uptime": "7h31m34.296419927s"
          }
        },
        {
          "store": {
            "id": 24480822,
            "address": "10.12.5.239:20160",
            "version": "4.0.6",
            "status_address": "10.12.5.239:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617101450,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617128502506331755,
            "state_name": "Up"
          },
          "status": {
            "capacity": "0B",
            "available": "0B",
            "used_size": "0B",
            "leader_count": 2,
            "leader_weight": 2,
            "leader_score": 1,
            "leader_size": 117,
            "region_count": 86212,
            "region_weight": 2,
            "region_score": 7610,
            "region_size": 15220,
            "start_ts": "2021-03-30T10:50:50Z",
            "last_heartbeat_ts": "2021-03-30T18:21:42.506331755Z",
            "uptime": "7h30m52.506331755s"
          }
        },
        {
          "store": {
            "id": 24590972,
            "address": "10.12.5.240:20160",
            "version": "4.0.6",
            "status_address": "10.12.5.240:20180",
            "git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",
            "start_timestamp": 1617101530,
            "deploy_path": "/home/tidb/deploy/bin",
            "last_heartbeat": 1617101423422266377,
            "state_name": "Disconnected"
          },
          "status": {
            "capacity": "5.952TiB",
            "available": "4.033TiB",
            "used_size": "1.829TiB",
            "leader_count": 0,
            "leader_weight": 2,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 84954,
            "region_weight": 2,
            "region_score": 13013.5,
            "region_size": 26027,
            "start_ts": "2021-03-30T10:52:10Z",
            "last_heartbeat_ts": "2021-03-30T10:50:23.422266377Z"
          }
        }
      ]
    }
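
To make the store output above easier to scan, here is a minimal Python sketch that reduces it to one row per store and flags stores that still hold regions but have no leaders. The function names `summarize_stores` and `stores_without_leaders` are hypothetical helpers written for this post, not part of pd-ctl or TiUP, and `SAMPLE` is a trimmed copy of two entries from the output above. It assumes the JSON keeps the `stores[].store` / `stores[].status` layout shown here.

```python
import json

# Trimmed copy of two entries from the store output above (assumption: same layout).
SAMPLE = """
{
  "count": 2,
  "stores": [
    {"store": {"address": "10.12.5.147:20160", "state_name": "Up"},
     "status": {"leader_count": 0, "region_count": 94661}},
    {"store": {"address": "10.12.5.12:20160", "state_name": "Up"},
     "status": {"leader_count": 421, "region_count": 463}}
  ]
}
"""


def summarize_stores(store_json):
    """Return (address, state_name, leader_count, region_count) for each store."""
    data = json.loads(store_json)
    rows = []
    for entry in data.get("stores", []):
        store = entry.get("store", {})
        status = entry.get("status", {})
        rows.append((
            store.get("address"),
            store.get("state_name"),
            status.get("leader_count", 0),
            status.get("region_count", 0),
        ))
    return rows


def stores_without_leaders(rows):
    """Keep stores that still report regions but currently hold no leaders."""
    return [row for row in rows if row[2] == 0 and row[3] > 0]


if __name__ == "__main__":
    for address, state, leaders, regions in summarize_stores(SAMPLE):
        print(f"{address:22} {state:14} leaders={leaders:<6} regions={regions}")
```

Pasting the full output in place of `SAMPLE` gives a quick view of which stores report regions but no leaders, which is the symptom described in this post.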