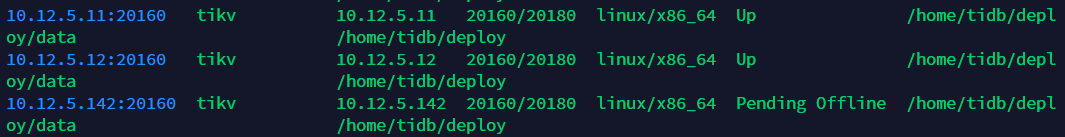

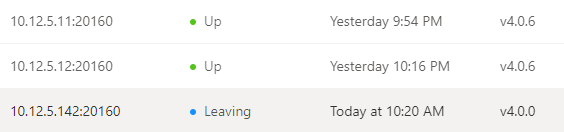

[TiDB version] The cluster as a whole runs v4.0.6. The node whose scale-in failed and is stuck in the Pending state runs v4.0.0.

[Problem description]

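1. The logs on several TiKV nodes reported communication errors with node 142 (10.12.5.142), so we decided to scale in node 142.

2. Before doing so, we scaled out two new TiKV nodes. The existing TiKV nodes each have 6 TB of storage; because the servers were short on space, the two new nodes each have only 3 TB.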

Version information:

Store information:

{

"count": 7,

"stores": [

{

"store": {

"id": 38833310,

"address": "10.12.5.147:20160",

"version": "4.0.6",

"status_address": "10.12.5.147:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616988548,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617018682473799047,

"state_name": "Down"

},

"status": {

"capacity": "5.952TiB",

"available": "3.723TiB",

"used_size": "2.055TiB",

"leader_count": 16269,

"leader_weight": 2,

"leader_score": 8134.5,

"leader_size": 0,

"region_count": 94626,

"region_weight": 2,

"region_score": 21985.5,

"region_size": 43971,

"start_ts": "2021-03-29T03:29:08Z",

"last_heartbeat_ts": "2021-03-29T11:51:22.473799047Z",

"uptime": "8h22m14.473799047s"

}

},

{

"store": {

"id": 256634153,

"address": "10.12.5.11:20160",

"version": "4.0.6",

"status_address": "10.12.5.11:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616939696,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617023398668237519,

"state_name": "Up"

},

"status": {

"capacity": "2.952TiB",

"available": "2.938TiB",

"used_size": "6.693GiB",

"leader_count": 121,

"leader_weight": 1,

"leader_score": 121,

"leader_size": 6913,

"region_count": 221,

"region_weight": 1,

"region_score": 14566,

"region_size": 14566,

"start_ts": "2021-03-28T13:54:56Z",

"last_heartbeat_ts": "2021-03-29T13:09:58.668237519Z",

"uptime": "23h15m2.668237519s"

}

},

{

"store": {

"id": 256634687,

"address": "10.12.5.12:20160",

"version": "4.0.6",

"status_address": "10.12.5.12:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616940967,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617023397542814654,

"state_name": "Up"

},

"status": {

"capacity": "2.952TiB",

"available": "2.938TiB",

"used_size": "6.268GiB",

"leader_count": 109,

"leader_weight": 1,

"leader_score": 109,

"leader_size": 7992,

"region_count": 212,

"region_weight": 1,

"region_score": 14426,

"region_size": 14426,

"start_ts": "2021-03-28T14:16:07Z",

"last_heartbeat_ts": "2021-03-29T13:09:57.542814654Z",

"uptime": "22h53m50.542814654s"

}

},

{

"store": {

"id": 24478148,

"address": "10.12.5.236:20160",

"version": "4.0.6",

"status_address": "10.12.5.236:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616989770,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617023394532020087,

"state_name": "Up"

},

"status": {

"capacity": "5.952TiB",

"available": "2.905TiB",

"used_size": "1.947TiB",

"leader_count": 44626,

"leader_weight": 2,

"leader_score": 22313,

"leader_size": 0,

"region_count": 95285,

"region_weight": 2,

"region_score": 227874,

"region_size": 455748,

"start_ts": "2021-03-29T03:49:30Z",

"last_heartbeat_ts": "2021-03-29T13:09:54.532020087Z",

"uptime": "9h20m24.532020087s"

}

},

{

"store": {

"id": 24480822,

"address": "10.12.5.239:20160",

"version": "4.0.6",

"status_address": "10.12.5.239:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616921467,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617017458404523405,

"state_name": "Down"

},

"status": {

"capacity": "5.952TiB",

"available": "3.915TiB",

"used_size": "1.966TiB",

"leader_count": 28636,

"leader_weight": 2,

"leader_score": 14318,

"leader_size": 541234,

"region_count": 86207,

"region_weight": 2,

"region_score": 580823,

"region_size": 1161646,

"start_ts": "2021-03-28T08:51:07Z",

"last_heartbeat_ts": "2021-03-29T11:30:58.404523405Z",

"uptime": "26h39m51.404523405s"

}

},

{

"store": {

"id": 24590972,

"address": "10.12.5.240:20160",

"version": "4.0.6",

"status_address": "10.12.5.240:20180",

"git_hash": "ca2475bfbcb49a7c34cf783596acb3edd05fc88f",

"start_timestamp": 1616933090,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617017452350247709,

"state_name": "Down"

},

"status": {

"capacity": "5.952TiB",

"available": "4.049TiB",

"used_size": "1.827TiB",

"leader_count": 10734,

"leader_weight": 2,

"leader_score": 5367,

"leader_size": 732622,

"region_count": 84959,

"region_weight": 2,

"region_score": 631075.5,

"region_size": 1262151,

"start_ts": "2021-03-28T12:04:50Z",

"last_heartbeat_ts": "2021-03-29T11:30:52.350247709Z",

"uptime": "23h26m2.350247709s"

}

},

{

"store": {

"id": 38546296,

"address": "10.12.5.142:20160",

"state": 1,

"version": "4.0.0",

"status_address": "10.12.5.142:20180",

"git_hash": "198a2cea01734ce8f46d55a29708f123f9133944",

"start_timestamp": 1616984419,

"deploy_path": "/home/tidb/deploy/bin",

"last_heartbeat": 1617006478833672808,

"state_name": "Offline"

},

"status": {

"capacity": "5.952TiB",

"available": "3.586TiB",

"used_size": "1.87TiB",

"leader_count": 0,

"leader_weight": 2,

"leader_score": 0,

"leader_size": 0,

"region_count": 86647,

"region_weight": 2,

"region_score": 465899.5,

"region_size": 931799,

"receiving_snap_count": 1,

"start_ts": "2021-03-29T02:20:19Z",

"last_heartbeat_ts": "2021-03-29T08:27:58.833672808Z",

"uptime": "6h7m39.833672808s"

}

}

]

}
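To make the store output above easier to read at a glance, here is a minimal sketch that groups the stores by `state_name`. It assumes the JSON has already been loaded into a Python dict (for example with `json.load`); the helper name `summarize_stores` and the abridged sample data are illustrative only and are not part of any TiDB tool.

```python
from collections import defaultdict


def summarize_stores(doc):
    """Group stores by state_name.

    Returns {state_name: [(address, version, leader_count, region_count), ...]}.
    """
    by_state = defaultdict(list)
    for entry in doc.get("stores", []):
        store = entry.get("store", {})
        status = entry.get("status", {})
        by_state[store.get("state_name", "Unknown")].append(
            (
                store.get("address"),
                store.get("version"),
                status.get("leader_count", 0),
                status.get("region_count", 0),
            )
        )
    return dict(by_state)


# Abridged sample: two of the seven stores from the output above.
sample = {
    "count": 2,
    "stores": [
        {
            "store": {"address": "10.12.5.11:20160", "version": "4.0.6", "state_name": "Up"},
            "status": {"leader_count": 121, "region_count": 221},
        },
        {
            "store": {"address": "10.12.5.142:20160", "version": "4.0.0", "state_name": "Offline"},
            "status": {"leader_count": 0, "region_count": 86647},
        },
    ],
}

for state, stores in sorted(summarize_stores(sample).items()):
    print(f"{state}: {len(stores)} store(s)")
    for address, version, leaders, regions in stores:
        print(f"  {address} v{version} leaders={leaders} regions={regions}")
```

Applied to the full output, this grouping shows three stores as Up (10.12.5.11, 10.12.5.12, 10.12.5.236), three as Down (10.12.5.147, 10.12.5.239, 10.12.5.240), and 10.12.5.142 as Offline with 0 leaders but still 86647 regions.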

3. The new nodes are on the cluster's local network and cannot access the Internet.

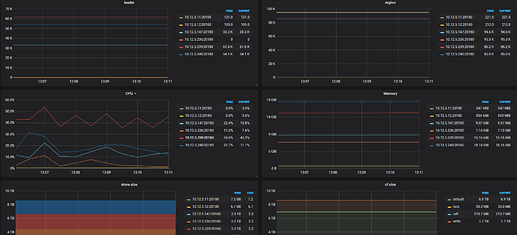

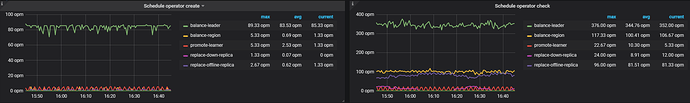

4. In the day since the scale-out, almost no data has been balanced onto the new nodes, and their CPU and memory usage is very low.

5. Meanwhile, TiKV node 142, which is being taken offline, has also been stuck in Pending for a day.