I've added 2 nodes. I'll keep an eye on whether the regions rebalance.

You can just add machines whenever you want? Jealous!


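Have you considered upgrading the cluster recently? v3.0 is quite old. Its region score algorithm supports fewer factors than v5.x, and some of the weight parameters only came into official production use in v5.x.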
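As a rough illustration of what "region score" means in the `store` output later in this thread: to my understanding, in the v3.0 scheduler a store that still has plenty of free space gets a region score that is essentially its total region size divided by its region weight. The sketch below models only that high-free-space case, an assumption that fits these stores (1.8 TiB available out of 1.9 TiB). The low-space amplification PD applies as disks fill up is deliberately omitted, and `region_score_high_space` is a hypothetical helper name, not a PD API.

```python
# Simplified sketch (assumption): PD v3.0 region score while a store still has
# plenty of free space, i.e. score = region_size / region_weight.
# The low-space branch, which inflates the score as the disk fills, is omitted.


def region_score_high_space(region_size_mib: float, region_weight: float = 1.0) -> float:
    """Approximate region score for a store in the high-free-space stage."""
    # Guard against a zero or negative weight; the 1e-6 floor is an assumption.
    weight = max(region_weight, 1e-6)
    return region_size_mib / weight


# region_size for store 192.168.1.89, copied from the pd-ctl `store` output.
print(region_score_high_space(614891, 1))
```

Because the weight sits in the denominator, giving a store a larger `region_weight` lowers its score, which should make PD move more regions onto it.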

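We had planned to upgrade, but this cluster carries a very heavy business load. We were afraid that if the upgrade went wrong we'd have no way to recover, and that would be game over.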

Right, in production, stability comes first.

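Going from your version to v5.x jumps two major versions, and there will certainly be a break-in period after the upgrade, so the risk really is high. If you do go ahead, you'll need a solid contingency plan, plus offline rehearsals and offline test runs on the target version beforehand.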

If we do upgrade, I'm thinking it would be better to just build a new TiDB cluster and migrate the data over. That's probably the safer option.

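Yes, that's also a valid upgrade approach if you have the resources. Whichever approach you take, though, validate the target version in advance: newer versions change some configuration defaults, and some options are deprecated or replaced by new ones.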

After adding the TiKV nodes, are the regions evenly distributed now?

The store that had a high region count is still high.

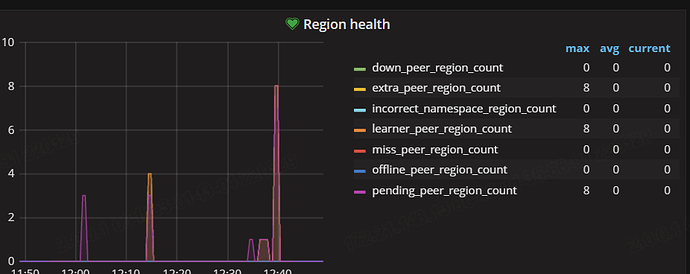

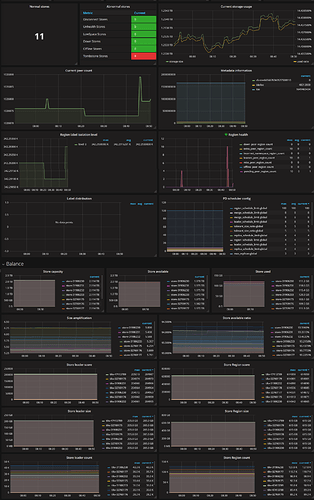

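1. Please share the PD monitoring dashboards. It looks like the metrics above couldn't be exported; a long-screenshot tool might make them easier to capture.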

2. Please also share the `store` output from pd-ctl.

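After adding the two servers, overall cluster performance went up, GC performance improved along with it, and storage space was freed. However, the monitoring shows that the region count hasn't dropped, and regions are still concentrated on certain stores.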

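I tried this command on my cluster: the 3.0 parameters don't include `empty-region`.

When I ran it earlier it didn't respond, and I assumed there were simply too many empty regions.

Only later did I find out that `region check empty` is not a valid command.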

Output of pd-ctl `store`:

```json
{
  "count": 11,
  "stores": [
    {
      "store": {
        "id": 32769177,
        "address": "192.168.1.235:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 35723,
        "leader_weight": 1,
        "leader_score": 204980,
        "leader_size": 204980,
        "region_count": 110714,
        "region_weight": 1,
        "region_score": 614900,
        "region_size": 614900,
        "start_ts": "2021-07-15T15:08:58+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:31.853533313+08:00",
        "uptime": "6425h36m33.853533313s"
      }
    },
    {
      "store": {
        "id": 31906231,
        "address": "192.168.1.90:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 27826,
        "leader_weight": 1,
        "leader_score": 204966,
        "leader_size": 204966,
        "region_count": 88596,
        "region_weight": 1,
        "region_score": 614884,
        "region_size": 614884,
        "start_ts": "2021-08-03T12:46:43+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:36.254699426+08:00",
        "uptime": "5971h58m53.254699426s"
      }
    },
    {
      "store": {
        "id": 32769175,
        "address": "192.168.1.231:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 33362,
        "leader_weight": 1,
        "leader_score": 204973,
        "leader_size": 204973,
        "region_count": 92222,
        "region_weight": 1,
        "region_score": 614893,
        "region_size": 614893,
        "start_ts": "2022-03-26T01:08:23+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:29.160587819+08:00",
        "uptime": "343h37m6.160587819s"
      }
    },
    {
      "store": {
        "id": 32769179,
        "address": "192.168.1.236:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 30044,
        "leader_weight": 1,
        "leader_score": 204964,
        "leader_size": 204964,
        "region_count": 89203,
        "region_weight": 1,
        "region_score": 614901,
        "region_size": 614901,
        "start_ts": "2022-03-23T01:13:58+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:32.815957478+08:00",
        "uptime": "415h31m34.815957478s"
      }
    },
    {
      "store": {
        "id": 47413789,
        "address": "192.168.1.13:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 27588,
        "leader_weight": 1,
        "leader_score": 204984,
        "leader_size": 204984,
        "region_count": 90882,
        "region_weight": 1,
        "region_score": 614946,
        "region_size": 614946,
        "start_ts": "2022-03-29T15:40:26+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:36.400363526+08:00",
        "uptime": "257h5m10.400363526s"
      }
    },
    {
      "store": {
        "id": 31906233,
        "address": "192.168.1.87:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 31015,
        "leader_weight": 1,
        "leader_score": 204941,
        "leader_size": 204941,
        "region_count": 82425,
        "region_weight": 1,
        "region_score": 614800,
        "region_size": 614800,
        "start_ts": "2021-12-19T00:22:00+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:36.281968841+08:00",
        "uptime": "2672h23m36.281968841s"
      }
    },
    {
      "store": {
        "id": 32769176,
        "address": "192.168.1.229:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 28193,
        "leader_weight": 1,
        "leader_score": 204959,
        "leader_size": 204959,
        "region_count": 70401,
        "region_weight": 1,
        "region_score": 614856,
        "region_size": 614856,
        "start_ts": "2021-07-03T14:58:25+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:30.408115684+08:00",
        "uptime": "6713h47m5.408115684s"
      }
    },
    {
      "store": {
        "id": 47413788,
        "address": "192.168.1.11:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 27948,
        "leader_weight": 1,
        "leader_score": 204925,
        "leader_size": 204925,
        "region_count": 89302,
        "region_weight": 1,
        "region_score": 614838,
        "region_size": 614838,
        "start_ts": "2022-03-29T15:40:26+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:30.413265265+08:00",
        "uptime": "257h5m4.413265265s"
      }
    },
    {
      "store": {
        "id": 31906230,
        "address": "192.168.1.89:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 43317,
        "leader_weight": 1,
        "leader_score": 204963,
        "leader_size": 204963,
        "region_count": 127002,
        "region_weight": 1,
        "region_score": 614891,
        "region_size": 614891,
        "start_ts": "2022-04-07T14:39:43+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:28.023875928+08:00",
        "uptime": "42h5m45.023875928s"
      }
    },
    {
      "store": {
        "id": 31906232,
        "address": "192.168.1.88:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 35346,
        "leader_weight": 1,
        "leader_score": 204955,
        "leader_size": 204955,
        "region_count": 98868,
        "region_weight": 1,
        "region_score": 614890,
        "region_size": 614890,
        "start_ts": "2021-01-15T20:45:56+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:35.600645991+08:00",
        "uptime": "10763h59m39.600645991s"
      }
    },
    {
      "store": {
        "id": 32769178,
        "address": "192.168.1.230:20160",
        "version": "3.0.0-beta",
        "state_name": "Up"
      },
      "status": {
        "capacity": "1.9 TiB",
        "available": "1.8 TiB",
        "leader_count": 21931,
        "leader_weight": 1,
        "leader_score": 204966,
        "leader_size": 204966,
        "region_count": 87264,
        "region_weight": 1,
        "region_score": 614929,
        "region_size": 614929,
        "start_ts": "2021-04-02T14:51:09+08:00",
        "last_heartbeat_ts": "2022-04-09T08:45:27.863231401+08:00",
        "uptime": "8921h54m18.863231401s"
      }
    }
  ]
}
```
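To make the "scores match, counts don't" observation concrete, the short script below takes `region_count` and `region_score` for the 11 stores from the output above and compares how far apart the extremes are. It's a quick sketch for reading this particular output, not a PD tool; `relative_spread` is a hypothetical helper defined here.

```python
# (address, region_count, region_score) copied from the pd-ctl `store` output above.
STORES = [
    ("192.168.1.235", 110714, 614900),
    ("192.168.1.90", 88596, 614884),
    ("192.168.1.231", 92222, 614893),
    ("192.168.1.236", 89203, 614901),
    ("192.168.1.13", 90882, 614946),
    ("192.168.1.87", 82425, 614800),
    ("192.168.1.229", 70401, 614856),
    ("192.168.1.11", 89302, 614838),
    ("192.168.1.89", 127002, 614891),
    ("192.168.1.88", 98868, 614890),
    ("192.168.1.230", 87264, 614929),
]


def relative_spread(values):
    """(max - min) / min: how far apart the extremes are, relative to the smallest."""
    lo, hi = min(values), max(values)
    return (hi - lo) / lo


count_spread = relative_spread([count for _, count, _ in STORES])
score_spread = relative_spread([score for _, _, score in STORES])
print(f"region_count spread: {count_spread:.1%}")
print(f"region_score spread: {score_spread:.3%}")
```

On these numbers the region scores differ by only a few hundredths of a percent while the region counts differ by roughly 80%, which suggests PD considers the stores balanced by size even though the counts are not.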

Could this have something to do with them being virtual machines?

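- Looking at the stores, the scores are basically the same, but leader count and region count differ. You can first check the docs to see whether there's a hotspot issue.
  https://docs.pingcap.com/zh/tidb/v3.0/pd-scheduling-best-practices#leaderregion-分布不均衡
- Look at the regions with the heaviest read/write traffic at the time the problem occurs, check their sizes, and rule out large regions that haven't been split.
  https://docs.pingcap.com/zh/tidb/v3.0/pd-scheduling-best-practices#热点调度状态
- Version 3.0 is very old, and things like the store score calculation have been adjusted repeatedly in newer versions. If at all possible, upgrade to a newer version.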
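Following up on the point about region sizes: since the scores are balanced but the counts are not, dividing each store's `region_size` by its `region_count` shows which stores hold many small regions and which hold fewer, larger ones. This sketch assumes `region_size` is reported in MiB (PD's usual unit for approximate region size); `avg_region_size_mib` is a hypothetical helper, and the numbers are copied from the `store` output in this thread.

```python
# (address, region_count, region_size) copied from the pd-ctl `store` output.
# Assumption: region_size is the sum of approximate region sizes, in MiB.
STORES = [
    ("192.168.1.235", 110714, 614900),
    ("192.168.1.90", 88596, 614884),
    ("192.168.1.231", 92222, 614893),
    ("192.168.1.236", 89203, 614901),
    ("192.168.1.13", 90882, 614946),
    ("192.168.1.87", 82425, 614800),
    ("192.168.1.229", 70401, 614856),
    ("192.168.1.11", 89302, 614838),
    ("192.168.1.89", 127002, 614891),
    ("192.168.1.88", 98868, 614890),
    ("192.168.1.230", 87264, 614929),
]


def avg_region_size_mib(region_size: int, region_count: int) -> float:
    """Average region size on a store in MiB; 0.0 for a store with no regions."""
    return region_size / region_count if region_count else 0.0


# List stores from most to fewest regions, with their average region size.
for addr, count, size in sorted(STORES, key=lambda s: s[1], reverse=True):
    print(f"{addr:>15}  regions={count:>7}  avg={avg_region_size_mib(size, count):5.2f} MiB")
```

With these numbers, 192.168.1.89 averages under 5 MiB per region while 192.168.1.229 averages over 8 MiB, so the high-count store mostly holds small regions. That may be worth cross-checking against the empty-region discussion earlier in the thread.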
