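To help us respond efficiently, please provide the following information when asking a question. Clearly described problems are given priority.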

- 【TiDB version】: 3.1.0-beta.1

- 【Problem description】:

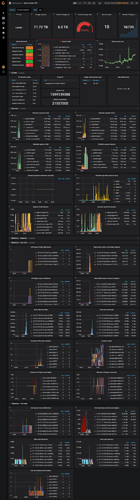

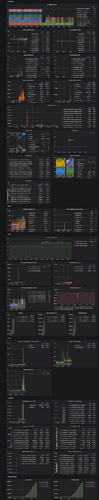

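The database has recently been under heavy read and write load, and performance has been poor. Operations fail with `tikv timeout` or `region is unavailable` errors.

The TiKV nodes are frequently in an unstable state. For example, the current states are: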

    tidb@three:~/tidb-ansible/resources/bin$ ./pd-ctl -u "http://10.12.5.113:2379" store|grep state_name
    "state_name": "Up"
    "state_name": "Up"
    "state_name": "Disconnected"
    "state_name": "Up"
    "state_name": "Down"
    "state_name": "Disconnected"
    "state_name": "Disconnected"
    "state_name": "Disconnected"
    "state_name": "Up"
    "state_name": "Up"
    "state_name": "Disconnected"
    "state_name": "Down"
    "state_name": "Down"

    tidb@three:~/tidb-ansible/resources/bin$ ./pd-ctl -u "http://10.12.5.113:2379" store

    {
      "count": 13,
      "stores": [
        {
          "store": { "id": 335855, "address": "10.12.5.230:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "884.9GiB", "available": "199GiB", "leader_count": 9390, "leader_weight": 1, "leader_score": 1043499, "leader_size": 1043499, "region_count": 17293, "region_weight": 1, "region_score": 940071725.5503502, "region_size": 1643391, "start_ts": "2020-08-23T02:10:16Z", "last_heartbeat_ts": "2020-08-23T02:46:07.227896007Z", "uptime": "35m51.227896007s" }
        },
        {
          "store": { "id": 484920, "address": "10.12.5.223:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "884.9GiB", "available": "203.2GiB", "leader_count": 11427, "leader_weight": 1, "leader_score": 1213914, "leader_size": 1213914, "region_count": 18726, "region_weight": 1, "region_score": 914446997.4288011, "region_size": 1770229, "start_ts": "2020-08-23T02:09:57Z", "last_heartbeat_ts": "2020-08-23T02:46:21.554811523Z", "uptime": "36m24.554811523s" }
        },
        {
          "store": { "id": 17388737, "address": "10.12.5.214:20160", "version": "3.1.0-beta.1", "state_name": "Disconnected" },
          "status": { "capacity": "884.9GiB", "available": "193.8GiB", "leader_count": 18, "leader_weight": 1, "leader_score": 1355, "leader_size": 1355, "region_count": 15416, "region_weight": 1, "region_score": 971808761.6416945, "region_size": 1261973, "start_ts": "2020-08-23T02:43:19Z", "last_heartbeat_ts": "2020-08-23T02:43:30.469837181Z", "uptime": "11.469837181s" }
        },
        {
          "store": { "id": 407938, "address": "10.12.5.221:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "884.9GiB", "available": "428.9GiB", "leader_count": 2279, "leader_weight": 1, "leader_score": 1155710, "leader_size": 1155710, "region_count": 2634, "region_weight": 1, "region_score": 1182197, "region_size": 1182197, "sending_snap_count": 1, "start_ts": "2020-08-23T02:09:32Z", "last_heartbeat_ts": "2020-08-23T02:46:15.375248999Z", "uptime": "36m43.375248999s" }
        },
        {
          "store": { "id": 665678, "address": "127.0.0.1:20160", "version": "3.1.0-beta.1", "state_name": "Down" },
          "status": { "leader_weight": 1, "region_weight": 1, "start_ts": "1970-01-01T00:00:00Z" }
        },
        {
          "store": { "id": 640552, "address": "10.12.5.224:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "884.9GiB", "available": "201.1GiB", "leader_count": 9736, "leader_weight": 1, "leader_score": 1086020, "leader_size": 1086020, "region_count": 18895, "region_weight": 1, "region_score": 927204434.4555326, "region_size": 1657957, "start_ts": "2020-08-23T02:10:04Z", "last_heartbeat_ts": "2020-08-23T02:46:23.339818555Z", "uptime": "36m19.339818555s" }
        },
        {
          "store": { "id": 2026701, "address": "10.12.5.227:20160", "version": "3.1.0-beta.1", "state_name": "Disconnected" },
          "status": { "capacity": "884.9GiB", "available": "198.6GiB", "leader_count": 2774, "leader_weight": 1, "leader_score": 292477, "leader_size": 292477, "region_count": 18189, "region_weight": 1, "region_score": 942237682.7523708, "region_size": 1502493, "start_ts": "2020-08-23T02:11:10Z", "last_heartbeat_ts": "2020-08-23T02:26:06.180979812Z", "uptime": "14m56.180979812s" }
        },
        {
          "store": { "id": 6506926, "address": "10.12.5.231:20160", "version": "3.1.0-beta.1", "state_name": "Disconnected" },
          "status": { "capacity": "1007GiB", "available": "311.1GiB", "leader_count": 8196, "leader_weight": 1, "leader_score": 1000551, "leader_size": 1000551, "region_count": 10832, "region_weight": 1, "region_score": 489578759.9719987, "region_size": 2138334, "start_ts": "2020-08-23T02:09:53Z", "last_heartbeat_ts": "2020-08-23T02:24:24.524230709Z", "uptime": "14m31.524230709s" }
        },
        {
          "store": { "id": 10968962, "address": "10.12.5.233:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "884.9GiB", "available": "199.6GiB", "leader_count": 6514, "leader_weight": 1, "leader_score": 745635, "leader_size": 745635, "region_count": 14893, "region_weight": 1, "region_score": 936286208.6025124, "region_size": 2306434, "start_ts": "2020-08-23T02:10:19Z", "last_heartbeat_ts": "2020-08-23T02:46:22.090152418Z", "uptime": "36m3.090152418s" }
        },
        {
          "store": { "id": 6506924, "address": "10.12.5.229:20160", "version": "3.1.0-beta.1", "state_name": "Down" },
          "status": { "capacity": "1007GiB", "available": "223.2GiB", "leader_count": 2, "leader_weight": 1, "leader_score": 152, "leader_size": 152, "region_count": 20252, "region_weight": 1, "region_score": 957450920.5420499, "region_size": 734939, "start_ts": "2020-08-23T02:10:01Z", "last_heartbeat_ts": "2020-08-23T02:10:11.957212698Z", "uptime": "10.957212698s" }
        },
        {
          "store": { "id": 6506925, "address": "10.12.5.228:20160", "version": "3.1.0-beta.1", "state_name": "Down" },
          "status": { "capacity": "1007GiB", "available": "222.5GiB", "leader_weight": 1, "region_count": 19097, "region_weight": 1, "region_score": 961158063.6551847, "region_size": 430576, "start_ts": "2020-08-23T02:09:49Z", "last_heartbeat_ts": "2020-08-23T02:09:59.935448794Z", "uptime": "10.935448794s" }
        },
        {
          "store": { "id": 407940, "address": "10.12.5.220:20160", "version": "3.1.0-beta.1", "state_name": "Up" },
          "status": { "capacity": "865.2GiB", "available": "197GiB", "leader_count": 14264, "leader_weight": 1, "leader_score": 1448249, "leader_size": 1448249, "region_count": 18524, "region_weight": 1, "region_score": 925391192.2735996, "region_size": 1906767, "start_ts": "2020-08-23T02:09:39Z", "last_heartbeat_ts": "2020-08-23T02:46:20.320183413Z", "uptime": "36m41.320183413s" }
        },
        {
          "store": { "id": 1597655, "address": "10.12.5.226:20160", "version": "3.1.0-beta.1", "state_name": "Disconnected" },
          "status": { "capacity": "835.7GiB", "available": "188.7GiB", "leader_count": 4064, "leader_weight": 1, "leader_score": 400408, "leader_size": 400408, "region_count": 13935, "region_weight": 1, "region_score": 935338040.0638361, "region_size": 1155387, "start_ts": "2020-08-23T02:10:41Z", "last_heartbeat_ts": "2020-08-23T02:21:35.213273663Z", "uptime": "10m54.213273663s" }
        }
      ]
    }
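To make the `pd-ctl store` output easier to read at a glance, it could be summarized with a small script. The following is only a sketch: `summarize_stores` and `SAMPLE` are hypothetical names, and `SAMPLE` is a trimmed two-store excerpt of the output above (its `count` is adjusted to match the excerpt). It assumes the JSON has the `stores[].store` / `stores[].status` layout shown in this post.

```python
import json
from collections import Counter

# Trimmed excerpt of the `pd-ctl store` output (2 of the 13 stores, most fields omitted).
SAMPLE = """
{
  "count": 2,
  "stores": [
    {"store": {"id": 335855, "address": "10.12.5.230:20160", "state_name": "Up"},
     "status": {"last_heartbeat_ts": "2020-08-23T02:46:07.227896007Z"}},
    {"store": {"id": 6506924, "address": "10.12.5.229:20160", "state_name": "Down"},
     "status": {"last_heartbeat_ts": "2020-08-23T02:10:11.957212698Z"}}
  ]
}
"""


def summarize_stores(pd_store_json):
    """Tally stores by state_name and list every store that is not Up."""
    stores = json.loads(pd_store_json)["stores"]
    counts = Counter(s["store"]["state_name"] for s in stores)
    not_up = [
        (
            s["store"]["id"],
            s["store"]["address"],
            s["store"]["state_name"],
            # Some stores (e.g. one that never reported) have no heartbeat field.
            s["status"].get("last_heartbeat_ts", "n/a"),
        )
        for s in stores
        if s["store"]["state_name"] != "Up"
    ]
    return counts, not_up


if __name__ == "__main__":
    counts, not_up = summarize_stores(SAMPLE)
    print(dict(counts))
    for row in not_up:
        print(row)
```

To run it against the real cluster, the full JSON printed by `./pd-ctl -u "http://10.12.5.113:2379" store` could be saved to a file and its contents passed to `summarize_stores` in place of `SAMPLE`.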

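In addition, `no space` errors occur frequently, even though the disk actually has more than 100 GB of free space. I usually have to clear the logs (a few GB) and delete the `last_tikv.toml` file, after which `df -h` on the disk shows that free space is available.

Given the problems above, I would like to know what is causing the poor performance and how to resolve it.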
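When a "no space" error appears while `df -h` still shows free space, it may help to record free blocks and free inodes together at the moment of the error. The sketch below uses Python's standard `os.statvfs`; `space_report` is a hypothetical helper, and the path passed to it is a placeholder, since the TiKV data directory is not given in this post. Whether inodes are involved here is an assumption to be checked, not a diagnosis.

```python
import os


def space_report(path):
    """Return free/total space in GiB and free/total inodes for the filesystem holding `path`."""
    st = os.statvfs(path)
    gib = 2 ** 30
    return {
        "free_gib": st.f_bavail * st.f_frsize / gib,   # space available to non-root users
        "total_gib": st.f_blocks * st.f_frsize / gib,
        "free_inodes": st.f_favail,
        "total_inodes": st.f_files,
    }


if __name__ == "__main__":
    # Placeholder path: replace "." with the TiKV data directory on the affected node.
    for key, value in space_report(".").items():
        print(f"{key}: {value}")
```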

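If your question concerns performance tuning or troubleshooting, please download the script and run it. Be sure to select all of the terminal output, then copy, paste, and upload it.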