-

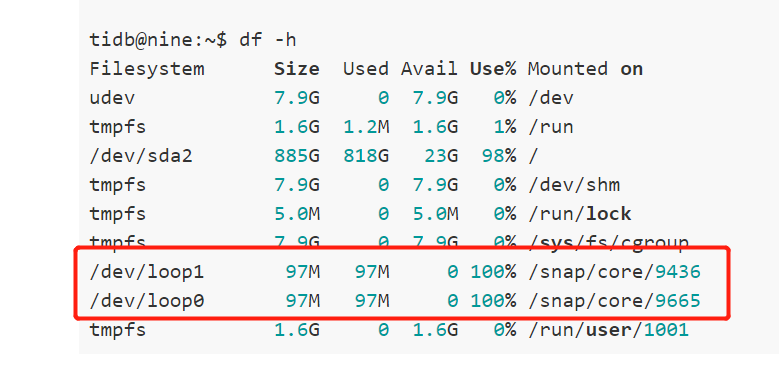

Output of df -h on the TiKV node that fails to start:

tidb@nine:~$ df -h

Filesystem Size Used Avail Use% Mounted on

udev 7.9G 0 7.9G 0% /dev

tmpfs 1.6G 1.2M 1.6G 1% /run

/dev/sda2 885G 818G 23G 98% /

tmpfs 7.9G 0 7.9G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 7.9G 0 7.9G 0% /sys/fs/cgroup

/dev/loop1 97M 97M 0 100% /snap/core/9436

/dev/loop0 97M 97M 0 100% /snap/core/9665

tmpfs 1.6G 0 1.6G 0% /run/user/1001
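In the output above, the root filesystem (/dev/sda2) is at 98% with 23G available, while the two /snap/core mounts read 100% only because they are read-only squashfs images. As a minimal sketch for checking this across nodes, the following Python parses df -h text and lists mounts at or above a usage threshold. The function names, the 90% threshold, and the ignore_prefixes parameter are assumptions made for illustration; they are not part of any TiDB tooling, and a nearly full disk is only one possible reason for the TiKV node failing to start.

```python
def parse_df(text):
    """Parse `df -h` output into dicts; the header and blank lines are skipped."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly 6 fields; the header has 7 ("Mounted on").
        if len(parts) != 6 or not parts[4].endswith("%"):
            continue
        try:
            use_pct = int(parts[4].rstrip("%"))
        except ValueError:
            continue
        rows.append({
            "filesystem": parts[0],
            "size": parts[1],
            "used": parts[2],
            "avail": parts[3],
            "use_pct": use_pct,
            "mount": parts[5],
        })
    return rows


def nearly_full(rows, threshold=90, ignore_prefixes=("/snap/",)):
    """Return (mount, use_pct) for mounts at or above the threshold.

    threshold and ignore_prefixes are illustrative defaults (assumptions).
    """
    return [
        (r["mount"], r["use_pct"])
        for r in rows
        if r["use_pct"] >= threshold and not r["mount"].startswith(ignore_prefixes)
    ]


# A few rows copied from the df -h output in this post.
SAMPLE = """\
Filesystem Size Used Avail Use% Mounted on
udev 7.9G 0 7.9G 0% /dev
/dev/sda2 885G 818G 23G 98% /
/dev/loop1 97M 97M 0 100% /snap/core/9436
"""

print(nearly_full(parse_df(SAMPLE)))  # [('/', 98)]
```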

-

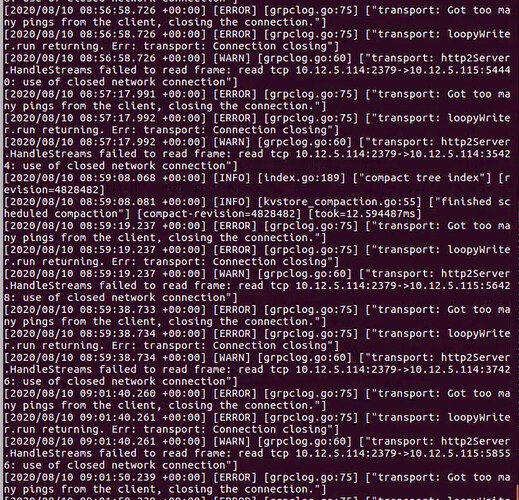

tidb.log from the TiDB node that fails to start:

[2020/08/08 19:47:52.183 +08:00] [WARN] [client_batch.go:223] ["init create streaming fail"] [target=10.12.5.221:20160] [error="context deadline exceeded"]

[2020/08/08 19:47:52.183 +08:00] [INFO] [region_cache.go:937] ["mark store's regions need be refill"] [store=10.12.5.221:20160]

[2020/08/08 19:47:52.183 +08:00] [INFO] [region_cache.go:430] ["switch region peer to next due to send request fail"] [current="region ID: 73, meta: id:73 start_key:\"t\\200\\000\\000\\000\\000\\000\\000!\" end_key:\"t\\200\\000\\000\\000\\000\\000\\000#\" region_epoch:<conf_ver:114 version:17 > peers:<id:15427350 store_id:484920 > peers:<id:17093307 store_id:407938 > , peer: id:17093307 store_id:407938 , addr: 10.12.5.221:20160, idx: 1"] [needReload=false] [error="context deadline exceeded"] [errorVerbose="context deadline exceeded\

github.com/pingcap/errors.AddStack

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/errors.go:174

github.com/pingcap/errors.Trace

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/juju_adaptor.go:15

github.com/pingcap/tidb/store/tikv.sendBatchRequest

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:585

github.com/pingcap/tidb/store/tikv.(*rpcClient).SendRequest

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/client.go:287

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).sendReqToRegion

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:169

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).SendReqCtx

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:133

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:696

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:653

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:481

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357"]

[2020/08/08 19:47:53.739 +08:00] [INFO] [region_cache.go:564] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=0] [regionID=73]

[2020/08/08 19:47:54.239 +08:00] [INFO] [region_cache.go:324] ["invalidate current region, because others failed on same store"] [region=73] [store=10.12.5.221:20160]

[2020/08/08 19:47:54.239 +08:00] [INFO] [coprocessor.go:754] ["[TIME_COP_PROCESS] resp_time:7.557614143s txnStartTS:418614386337513478 region_id:73 store_addr:10.12.5.223:20160 backoff_ms:39244 backoff_types:[regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss]"]

[2020/08/08 19:47:54.741 +08:00] [INFO] [region_cache.go:564] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=0] [regionID=73]

[2020/08/08 19:48:00.241 +08:00] [WARN] [client_batch.go:223] ["init create streaming fail"] [target=10.12.5.221:20160] [error="context deadline exceeded"]

[2020/08/08 19:48:00.241 +08:00] [INFO] [region_cache.go:937] ["mark store's regions need be refill"] [store=10.12.5.221:20160]

[2020/08/08 19:48:00.241 +08:00] [INFO] [region_cache.go:430] ["switch region peer to next due to send request fail"] [current="region ID: 73, meta: id:73 start_key:\"t\\200\\000\\000\\000\\000\\000\\000!\" end_key:\"t\\200\\000\\000\\000\\000\\000\\000#\" region_epoch:<conf_ver:114 version:17 > peers:<id:15427350 store_id:484920 > peers:<id:17093307 store_id:407938 > , peer: id:17093307 store_id:407938 , addr: 10.12.5.221:20160, idx: 1"] [needReload=false] [error="context deadline exceeded"] [errorVerbose="context deadline exceeded\

github.com/pingcap/errors.AddStack

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/errors.go:174

github.com/pingcap/errors.Trace

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/juju_adaptor.go:15

github.com/pingcap/tidb/store/tikv.sendBatchRequest

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:585

github.com/pingcap/tidb/store/tikv.(*rpcClient).SendRequest

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/client.go:287

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).sendReqToRegion

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:169

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).SendReqCtx

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:133

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:696

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:653

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run

\t/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:481

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357"]

[2020/08/08 19:48:00.242 +08:00] [WARN] [backoff.go:304] ["tikvRPC backoffer.maxSleep 40000ms is exceeded, errors:\

epoch_not_match:<> at 2020-08-08T19:47:54.240007952+08:00

not leader: region_id:73 , ctx: region ID: 73, meta: id:73 start_key:"t\200\000\000\000\000\000\000!" end_key:"t\200\000\000\000\000\000\000#" region_epoch:<conf_ver:114 version:17 > peers:<id:15427350 store_id:484920 > peers:<id:17093307 store_id:407938 > , peer: id:15427350 store_id:484920 , addr: 10.12.5.223:20160, idx: 0 at 2020-08-08T19:47:54.741462916+08:00

send tikv request error: context deadline exceeded, ctx: region ID: 73, meta: id:73 start_key:"t\200\000\000\000\000\000\000!" end_key:"t\200\000\000\000\000\000\000#" region_epoch:<conf_ver:114 version:17 > peers:<id:15427350 store_id:484920 > peers:<id:17093307 store_id:407938 > , peer: id:17093307 store_id:407938 , addr: 10.12.5.221:20160, idx: 1, try next peer later at 2020-08-08T19:48:00.24215013+08:00"]

2020/08/08 19:48:00.242 terror.go:342: [fatal] [tikv:9005]Region is unavailable

github.com/pingcap/errors.AddStack

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/errors.go:174

github.com/pingcap/errors.Trace

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/pkg/mod/github.com/pingcap/errors@v0.11.4/juju_adaptor.go:15

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).onSendFail

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:229

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).sendReqToRegion

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:172

github.com/pingcap/tidb/store/tikv.(*RegionRequestSender).SendReqCtx

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/region_request.go:133

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:696

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:653

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run

/home/jenkins/agent/workspace/tidb_v3.1.0-beta.1/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:481

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357
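The log above repeatedly reports "init create streaming fail" against 10.12.5.221:20160 before the backoff limit is exceeded and TiDB exits with "Region is unavailable". To see at a glance which TiKV addresses a TiDB instance cannot reach, a rough sketch like the following counts those warnings per target. The regular expression is written against the line format shown in this post only, and the function name is hypothetical; other TiDB versions may format these lines differently.

```python
import re
from collections import Counter

# Matches the WARN line format seen in this post's tidb.log (an assumption
# about the format; adjust if your version logs these fields differently).
PATTERN = re.compile(
    r'\["init create streaming fail"\] \[target=([^\]]+)\] \[error="([^"]*)"\]'
)


def failed_targets(log_text):
    """Count connection failures per (target address, error message)."""
    counts = Counter()
    for match in PATTERN.finditer(log_text):
        counts[(match.group(1), match.group(2))] += 1
    return counts


# Two WARN lines and one INFO line copied from the log in this post.
SAMPLE_LOG = '''
[2020/08/08 19:47:52.183 +08:00] [WARN] [client_batch.go:223] ["init create streaming fail"] [target=10.12.5.221:20160] [error="context deadline exceeded"]
[2020/08/08 19:47:52.183 +08:00] [INFO] [region_cache.go:937] ["mark store's regions need be refill"] [store=10.12.5.221:20160]
[2020/08/08 19:48:00.241 +08:00] [WARN] [client_batch.go:223] ["init create streaming fail"] [target=10.12.5.221:20160] [error="context deadline exceeded"]
'''

for (target, error), n in failed_targets(SAMPLE_LOG).items():
    print(target, error, n)
```

In this excerpt the only failing target is 10.12.5.221:20160, which is consistent with the store that pd-ctl reports as Down.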

- Overview → PD-related monitoring

Because the services cannot start, no information can be obtained from Grafana.

The following information was obtained from pd-ctl:

tidb@three:~/tidb-ansible/resources/bin$ ./pd-ctl -u "http://10.12.5.113:2379" member

{

"header": {

"cluster_id": 6807312755917103041

},

"members": [

{

"name": "pd_pd3",

"member_id": 2579653654541892389,

"peer_urls": [

"http://10.12.5.115:2380"

],

"client_urls": [

"http://10.12.5.115:2379"

]

},

{

"name": "pd_pd2",

"member_id": 3717199249823848643,

"peer_urls": [

"http://10.12.5.114:2380"

],

"client_urls": [

"http://10.12.5.114:2379"

]

},

{

"name": "pd_pd1",

"member_id": 4691481983733508901,

"peer_urls": [

"http://10.12.5.113:2380"

],

"client_urls": [

"http://10.12.5.113:2379"

]

}

],

"leader": {

"name": "pd_pd1",

"member_id": 4691481983733508901,

"peer_urls": [

"http://10.12.5.113:2380"

],

"client_urls": [

"http://10.12.5.113:2379"

]

},

"etcd_leader": {

"name": "pd_pd1",

"member_id": 4691481983733508901,

"peer_urls": [

"http://10.12.5.113:2380"

],

"client_urls": [

"http://10.12.5.113:2379"

]

}

}

tidb@three:~/tidb-ansible/resources/bin$ ./pd-ctl -u "http://10.12.5.113:2379" store

{

"count": 12,

"stores": [

{

"store": {

"id": 2026701,

"address": "10.12.5.227:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "884.9GiB",

"available": "215GiB",

"leader_count": 8392,

"leader_weight": 1,

"leader_score": 937689,

"leader_size": 937689,

"region_count": 17443,

"region_weight": 1,

"region_score": 843405288.182425,

"region_size": 1967586,

"start_ts": "2020-08-07T15:03:27Z",

"last_heartbeat_ts": "2020-08-08T11:51:59.300228709Z",

"uptime": "20h48m32.300228709s"

}

},

{

"store": {

"id": 6506925,

"address": "10.12.5.228:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "1007GiB",

"available": "244.7GiB",

"leader_count": 7887,

"leader_weight": 1,

"leader_score": 938577,

"leader_size": 938577,

"region_count": 17611,

"region_weight": 1,

"region_score": 843185057.8181653,

"region_size": 2013469,

"start_ts": "2020-08-07T15:03:43Z",

"last_heartbeat_ts": "2020-08-08T11:51:55.484376468Z",

"uptime": "20h48m12.484376468s"

}

},

{

"store": {

"id": 10968962,

"address": "10.12.5.233:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "884.9GiB",

"available": "215.2GiB",

"leader_count": 8278,

"leader_weight": 1,

"leader_score": 937689,

"leader_size": 937689,

"region_count": 15276,

"region_weight": 1,

"region_score": 841956941.3070436,

"region_size": 1711320,

"start_ts": "2020-08-07T15:02:56Z",

"last_heartbeat_ts": "2020-08-08T11:51:55.634135924Z",

"uptime": "20h48m59.634135924s"

}

},

{

"store": {

"id": 665678,

"address": "127.0.0.1:20160",

"version": "3.1.0-beta.1",

"state_name": "Down"

},

"status": {

"leader_weight": 1,

"region_weight": 1,

"start_ts": "1970-01-01T00:00:00Z"

}

},

{

"store": {

"id": 640552,

"address": "10.12.5.224:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "884.9GiB",

"available": "215.2GiB",

"leader_count": 9180,

"leader_weight": 1,

"leader_score": 938467,

"leader_size": 938467,

"region_count": 19311,

"region_weight": 1,

"region_score": 841943870.6920581,

"region_size": 1956940,

"start_ts": "2020-08-07T15:03:23Z",

"last_heartbeat_ts": "2020-08-08T11:51:54.455860075Z",

"uptime": "20h48m31.455860075s"

}

},

{

"store": {

"id": 6506924,

"address": "10.12.5.229:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "1007GiB",

"available": "244.6GiB",

"leader_count": 9255,

"leader_weight": 1,

"leader_score": 938570,

"leader_size": 938570,

"region_count": 19089,

"region_weight": 1,

"region_score": 843320363.6070805,

"region_size": 2004782,

"start_ts": "2020-08-07T15:03:43Z",

"last_heartbeat_ts": "2020-08-08T11:51:56.195575776Z",

"uptime": "20h48m13.195575776s"

}

},

{

"store": {

"id": 335855,

"address": "10.12.5.230:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "884.9GiB",

"available": "215.2GiB",

"leader_count": 8001,

"leader_weight": 1,

"leader_score": 938633,

"leader_size": 938633,

"region_count": 17089,

"region_weight": 1,

"region_score": 842085247.7981482,

"region_size": 2001698,

"start_ts": "2020-08-07T15:02:07Z",

"last_heartbeat_ts": "2020-08-08T11:51:55.988218953Z",

"uptime": "20h49m48.988218953s"

}

},

{

"store": {

"id": 407938,

"address": "10.12.5.221:20160",

"version": "3.1.0-beta.1",

"state_name": "Down"

},

"status": {

"leader_weight": 1,

"region_count": 1346,

"region_weight": 1,

"region_score": 16,

"region_size": 16,

"start_ts": "1970-01-01T00:00:00Z"

}

},

{

"store": {

"id": 407940,

"address": "10.12.5.220:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "865.2GiB",

"available": "210.5GiB",

"leader_count": 9032,

"leader_weight": 1,

"leader_score": 937662,

"leader_size": 937662,

"region_count": 18397,

"region_weight": 1,

"region_score": 841403337.9166226,

"region_size": 1910555,

"start_ts": "2020-08-07T15:01:43Z",

"last_heartbeat_ts": "2020-08-08T11:51:54.024402611Z",

"uptime": "20h50m11.024402611s"

}

},

{

"store": {

"id": 484920,

"address": "10.12.5.223:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "884.9GiB",

"available": "215.2GiB",

"leader_count": 9083,

"leader_weight": 1,

"leader_score": 937628,

"leader_size": 937628,

"region_count": 18707,

"region_weight": 1,

"region_score": 841935338.1643195,

"region_size": 1936273,

"start_ts": "2020-08-07T15:03:12Z",

"last_heartbeat_ts": "2020-08-08T11:51:54.713647796Z",

"uptime": "20h48m42.713647796s"

}

},

{

"store": {

"id": 6506926,

"address": "10.12.5.231:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "1007GiB",

"available": "245GiB",

"leader_count": 7659,

"leader_weight": 1,

"leader_score": 938559,

"leader_size": 938559,

"region_count": 13842,

"region_weight": 1,

"region_score": 841210712.7905145,

"region_size": 1632447,

"start_ts": "2020-08-07T15:01:54Z",

"last_heartbeat_ts": "2020-08-08T11:51:53.469129485Z",

"uptime": "20h49m59.469129485s"

}

},

{

"store": {

"id": 1597655,

"address": "10.12.5.226:20160",

"version": "3.1.0-beta.1",

"state_name": "Up"

},

"status": {

"capacity": "835.7GiB",

"available": "203GiB",

"leader_count": 9212,

"leader_weight": 1,

"leader_score": 938610,

"leader_size": 938610,

"region_count": 16542,

"region_weight": 1,

"region_score": 843726572.5291586,

"region_size": 1629098,

"start_ts": "2020-08-07T15:03:37Z",

"last_heartbeat_ts": "2020-08-08T11:51:58.167808167Z",

"uptime": "20h48m21.167808167s"

}

}

]

}
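Of the 12 stores in the output above, 10 are Up and 2 are Down: store 665678 (registered as 127.0.0.1:20160) and store 407938 (10.12.5.221:20160, still holding 1346 regions). With this many stores it is easy to miss one by eye, so here is a small sketch that filters the JSON printed by pd-ctl store for stores that are not Up. The function name is hypothetical and the sample is trimmed from the output in this post; it assumes the field layout shown here (store.state_name, status.region_count).

```python
import json


def stores_not_up(pd_store_json):
    """Return (id, address, state_name, region_count) for every store not in state Up."""
    data = json.loads(pd_store_json)
    result = []
    for entry in data.get("stores", []):
        store = entry["store"]
        status = entry.get("status", {})
        if store.get("state_name") != "Up":
            # region_count is absent when PD tracks no regions for the store.
            result.append((
                store["id"],
                store["address"],
                store["state_name"],
                status.get("region_count", 0),
            ))
    return result


# Trimmed copy of three entries from the pd-ctl store output in this post.
SAMPLE_JSON = """
{
  "count": 3,
  "stores": [
    {"store": {"id": 2026701, "address": "10.12.5.227:20160", "state_name": "Up"},
     "status": {"region_count": 17443}},
    {"store": {"id": 665678, "address": "127.0.0.1:20160", "state_name": "Down"},
     "status": {"leader_weight": 1, "region_weight": 1}},
    {"store": {"id": 407938, "address": "10.12.5.221:20160", "state_name": "Down"},
     "status": {"region_count": 1346, "region_size": 16}}
  ]
}
"""

for row in stores_not_up(SAMPLE_JSON):
    print(row)
```

In practice the input would be the saved output of the pd-ctl store command shown above rather than an inline string.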

tidb@three:~/tidb-ansible/resources/bin$ ./pd-ctl -u "http://10.12.5.113:2379" health

[

{

"name": "pd_pd3",

"member_id": 2579653654541892389,

"client_urls": [

"http://10.12.5.115:2379"

],

"health": true

},

{

"name": "pd_pd2",

"member_id": 3717199249823848643,

"client_urls": [

"http://10.12.5.114:2379"

],

"health": true

},

{

"name": "pd_pd1",

"member_id": 4691481983733508901,

"client_urls": [

"http://10.12.5.113:2379"

],

"health": true

}

]