raft_221.log (2.6 MB)

Are the logs you sent the LOG files under the raft and db directories? The file name is literally LOG. Thanks.

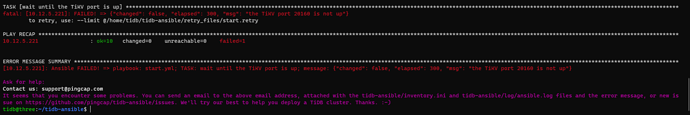

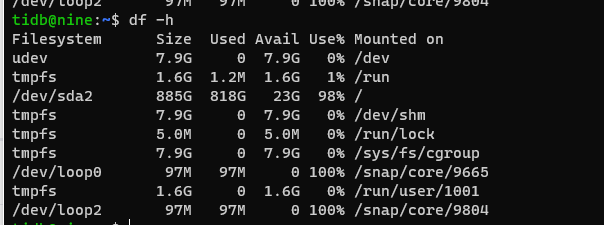

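Could you copy a 1 GB file onto the disk where the data directory is mounted, to verify that the free space is actually usable? The Out Of Space error is returned by the operating system, so there is little the application itself can do about it. If convenient, please also share the output of the following commands: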

```
df -h
mount
```
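As a rough scripted version of the "copy a 1 GB file" check, the sketch below prints what the filesystem reports as free, then actually writes the requested number of bytes (followed by `fsync`) into a temporary file in the target directory, treating `ENOSPC` as "the space is not really usable". The function name `check_writable_space` and its parameters are hypothetical, not part of any TiDB/TiKV tool. It writes random bytes so that a compressing filesystem cannot shrink the test file.

```python
import errno
import os
import shutil
import tempfile


def check_writable_space(directory: str, size_bytes: int, chunk: int = 1 << 20) -> bool:
    """Try to really write `size_bytes` into a temp file under `directory`.

    Returns True if the write and fsync succeed, False on ENOSPC.
    Any other OSError is re-raised. The temp file is always removed.
    """
    usage = shutil.disk_usage(directory)
    print(f"total={usage.total} used={usage.used} free={usage.free}")

    try:
        fd, path = tempfile.mkstemp(dir=directory, prefix="space-check-")
    except OSError as e:
        if e.errno == errno.ENOSPC:  # cannot even create the file
            return False
        raise

    buf = os.urandom(chunk)  # random data, so compression cannot cheat
    try:
        with os.fdopen(fd, "wb") as f:
            remaining = size_bytes
            while remaining > 0:
                n = min(chunk, remaining)
                f.write(buf[:n])
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # force the data to the device
        return True
    except OSError as e:
        if e.errno == errno.ENOSPC:
            return False
        raise
    finally:
        os.remove(path)
```

For example, `check_writable_space("/home/tidb/deploy/data", 1 << 30)` would attempt a 1 GiB write on the data mount used in this thread.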

Copying a 2 GB file succeeds.

Has the TiKV on 221 been restarted recently? Could you upload its log from the most recent startup onward?

Is there anything in tikv_stderr.log?

```text
note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.
thread 'main' panicked at 'invalid auto generated configuration file /home/tidb/deploy/data/last_tikv.toml, err expected an equals, found eof at line 445', src/config.rs:1500:13
stack backtrace:
   0: backtrace::backtrace::libunwind::trace
             at /cargo/registry/src/github.com-1ecc6299db9ec823/backtrace-0.3.29/src/backtrace/libunwind.rs:88
   1: backtrace::backtrace::trace_unsynchronized
             at /cargo/registry/src/github.com-1ecc6299db9ec823/backtrace-0.3.29/src/backtrace/mod.rs:66
   2: std::sys_common::backtrace::_print
             at src/libstd/sys_common/backtrace.rs:47
   3: std::sys_common::backtrace::print
             at src/libstd/sys_common/backtrace.rs:36
   4: std::panicking::default_hook::{{closure}}
             at src/libstd/panicking.rs:198
   5: std::panicking::default_hook
             at src/libstd/panicking.rs:212
   6: std::panicking::rust_panic_with_hook
             at src/libstd/panicking.rs:475
   7: std::panicking::continue_panic_fmt
             at src/libstd/panicking.rs:382
   8: std::panicking::begin_panic_fmt
             at src/libstd/panicking.rs:337
   9: tikv::config::TiKvConfig::from_file::{{closure}}
             at src/config.rs:1500
  10: cmd::setup::validate_and_persist_config
             at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libcore/result.rs:766
  11: tikv_server::main
             at cmd/src/bin/tikv-server.rs:156
  12: std::rt::lang_start::{{closure}}
             at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/rt.rs:64
  13: main
  14: __libc_start_main
  15: <unknown>
note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.
```

Could you upload the file /home/tidb/deploy/data/last_tikv.toml?
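To check the auto-generated file locally before uploading it, one option is to parse it with Python's TOML parser `tomllib` (standard library since Python 3.11; the third-party `tomli` package offers the same API on older versions). This is only an approximation: TiKV uses a Rust TOML parser, so the error text will differ from "expected an equals, found eof", but a file truncated mid-line, as the panic suggests, will typically fail in both. `validate_toml` is a hypothetical helper name.

```python
try:
    import tomllib  # standard library, Python 3.11+
except ModuleNotFoundError:  # older Pythons: `pip install tomli` provides the same API
    import tomli as tomllib
from typing import Optional


def validate_toml(path: str) -> Optional[str]:
    """Return None if `path` parses as TOML, otherwise a short error description."""
    try:
        with open(path, "rb") as f:  # tomllib requires a binary file object
            tomllib.load(f)
    except tomllib.TOMLDecodeError as e:
        return f"TOML syntax error: {e}"
    except UnicodeDecodeError as e:  # tomllib decodes the bytes as UTF-8 first
        return f"not valid UTF-8: {e}"
    return None
```

Running `print(validate_toml("/home/tidb/deploy/data/last_tikv.toml"))` prints `None` for a file that parses, or the parser's complaint otherwise.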

```toml
log-level = "info"
log-file = "/home/tidb/deploy/log/tikv.log"
log-rotation-timespan = "1d"
panic-when-unexpected-key-or-data = false

[readpool.storage]
high-concurrency = 12
normal-concurrency = 12
low-concurrency = 12
max-tasks-per-worker-high = 2000
max-tasks-per-worker-normal = 2000
max-tasks-per-worker-low = 2000
stack-size = "10MiB"

[readpool.coprocessor]
high-concurrency = 8
normal-concurrency = 8
low-concurrency = 8
max-tasks-per-worker-high = 2000
max-tasks-per-worker-normal = 2000
max-tasks-per-worker-low = 2000
stack-size = "10MiB"

[server]
addr = "0.0.0.0:20160"
advertise-addr = "10.12.5.221:20160"
status-addr = "10.12.5.221:20180"
status-thread-pool-size = 1
grpc-compression-type = "none"
grpc-concurrency = 4
grpc-concurrent-stream = 1024
grpc-raft-conn-num = 1
grpc-memory-pool-quota = 9223372036854775807
grpc-stream-initial-window-size = "2MiB"
grpc-keepalive-time = "10s"
grpc-keepalive-timeout = "3s"
concurrent-send-snap-limit = 32
concurrent-recv-snap-limit = 32
end-point-recursion-limit = 1000
end-point-stream-channel-size = 8
end-point-batch-row-limit = 64
end-point-stream-batch-row-limit = 128
end-point-enable-batch-if-possible = true
end-point-request-max-handle-duration = "1m"
snap-max-write-bytes-per-sec = "100MiB"
snap-max-total-size = "0KiB"
stats-concurrency = 1
heavy-load-threshold = 300
heavy-load-wait-duration = "1ms"

[server.labels]

[storage]
data-dir = "/home/tidb/deploy/data"
gc-ratio-threshold = 1.1
max-key-size = 4096
scheduler-notify-capacity = 10240
scheduler-concurrency = 2048000
scheduler-worker-pool-size = 4
scheduler-pending-write-threshold = "100MiB"

[storage.block-cache]
shared = true
capacity = "8GiB"
num-shard-bits = 6
strict-capacity-limit = false
high-pri-pool-ratio = 0.0
memory-allocator = "nodump"

[pd]
endpoints = ["10.12.5.113:2379", "10.12.5.114:2379", "10.12.5.115:2379"]
retry-interval = "300ms"
retry-max-count = 9223372036854775807
retry-log-every = 10

[metric]
interval = "15s"
address = ""
job = "tikv"

[raftstore]
sync-log = false
prevote = true
raftdb-path = ""
capacity = 1209462790553
raft-base-tick-interval = "1s"
raft-heartbeat-ticks = 2
raft-election-timeout-ticks = 10
raft-min-election-timeout-ticks = 0
raft-max-election-timeout-ticks = 0
raft-max-size-per-msg = "1MiB"
raft-max-inflight-msgs = 256
raft-entry-max-size = "8MiB"
raft-log-gc-tick-interval = "10s"
raft-log-gc-threshold = 50
raft-log-gc-count-limit = 73728
raft-log-gc-size-limit = "72MiB"
raft-entry-cache-life-time = "30s"
raft-reject-transfer-leader-duration = "3s"
split-region-check-tick-interval = "10s"
region-split-check-diff = "6MiB"
region-compact-check-interval = "5m"
clean-stale-peer-delay = "10m"
region-compact-check-step = 100
region-compact-min-tombstones = 10000
region-compact-tombstones-percent = 30
pd-heartbeat-tick-interval = "1m"
pd-store-heartbeat-tick-interval = "10s"
snap-mgr-gc-tick-interval = "1m"
snap-gc-timeout = "4h"
lock-cf-compact-interval = "10m"
lock-cf-compact-bytes-threshold = "256MiB"
notify-capacity = 40960
messages-per-tick = 4096
max-peer-down-duration = "5m"
max-leader-missing-duration = "2h"
abnormal-leader-missing-duration = "10m"
peer-stale-state-check-interval = "5m"
leader-transfer-max-log-lag = 10
snap-apply-batch-size = "10MiB"
consistency-check-interval = "0s"
report-region-flow-interval = "1m"
raft-store-max-leader-lease = "9s"
right-derive-when-split = true
allow-remove-leader = false
merge-max-log-gap = 10
merge-check-tick-interval = "10s"
use-delete-range = false
cleanup-import-sst-interval = "10m"
local-read-batch-size = 1024
apply-max-batch-size = 1024
apply-pool-size = 2
store-max-batch-size = 1024
store-pool-size = 2
future-poll-size = 1
hibernate-regions = false

[coprocessor]
split-region-on-table = false
batch-split-limit = 10
region-max-size = "144MiB"
region-split-size = "96MiB"
region-max-keys = 1440000
region-split-keys = 960000

[rocksdb]
wal-recovery-mode = 2
wal-dir = ""
wal-ttl-seconds = 0
wal-size-limit = "0KiB"
max-total-wal-size = "4GiB"
max-background-jobs = 6
max-manifest-file-size = "128MiB"
create-if-missing = true
max-open-files = 40960
enable-statistics = true
stats-dump-period = "10m"
compaction-readahead-size = "0KiB"
info-log-max-size = "1GiB"
info-log-roll-time = "0s"
info-log-keep-log-file-num = 10
info-log-dir = ""
rate-bytes-per-sec = "0KiB"
rate-limiter-mode = 2
auto-tuned = false
bytes-per-sync = "1MiB"
wal-bytes-per-sync = "512KiB"
max-sub-compactions = 1
writable-file-max-buffer-size = "1MiB"
use-direct-io-for-flush-and-compaction = false
enable-pipelined-write = true

[rocksdb.defaultcf]
block-size = "64KiB"
block-cache-size = "4009MiB"
disable-block-cache = false
cache-index-and-filter-blocks = true
pin-l0-filter-and-index-blocks = true
use-bloom-filter = true
optimize-filters-for-hits = true
whole-key-filtering = true
bloom-filter-bits-per-key = 10
block-based-bloom-filter = false
read-amp-bytes-per-bit = 0
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MiB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "512MiB"
target-file-size-base = "8MiB"
level0-file-num-compaction-trigger = 4
level0-slowdown-writes-trigger = 20
level0-stop-writes-trigger = 36
max-compaction-bytes = "2GiB"
compaction-pri = 3
dynamic-level-bytes = true
num-levels = 7
max-bytes-for-level-multiplier = 10
compaction-style = 0
disable-auto-compactions = false
soft-pending-compaction-bytes-limit = "64GiB"
hard-pending-compaction-bytes-limit = "256GiB"
force-consistency-checks = true
prop-size-index-distance = 4194304
prop-keys-index-distance = 40960
enable-doubly-skiplist = false

[rocksdb.defaultcf.titan]
min-blob-size = "1KiB"
blob-file-compression = "lz4"
blob-cache-size = "0KiB"
min-gc-batch-size = "16MiB"
max-gc-batch-size = "64MiB"
discardable-ratio = 0.5
sample-ratio = 0.1
merge-small-file-threshold = "8MiB"
blob-run-mode = "normal"

[rocksdb.writecf]
block-size = "64KiB"
block-cache-size = "2405MiB"
disable-block-cache = false
cache-index-and-filter-blocks = true
pin-l0-filter-and-index-blocks = true
use-bloom-filter = true
optimize-filters-for-hits = false
whole-key-filtering = false
bloom-filter-bits-per-key = 10
block-based-bloom-filter = false
read-amp-bytes-per-bit = 0
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MiB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "512MiB"
target-file-size-base = "8MiB"
level0-file-num-compaction-trigger = 4
level0-slowdown-writes-trigger = 20
level0-stop-writes-trigger = 36
max-compaction-bytes = "2GiB"
compaction-pri = 3
dynamic-level-bytes = true
num-levels = 7
max-bytes-for-level-multiplier = 10
compaction-style = 0
disable-auto-compactions = false
soft-pending-compaction-bytes-limit = "64GiB"
hard-pending-compaction-bytes-limit = "256GiB"
force-consistency-checks = true
prop-size-index-distance = 4194304
prop-keys-index-distance = 40960
enable-doubly-skiplist = false

[rocksdb.writecf.titan]
min-blob-size = "1KiB"
blob-file-compression = "lz4"
blob-cache-size = "0KiB"
min-gc-batch-size = "16MiB"
max-gc-batch-size = "64MiB"
discardable-ratio = 0.5
sample-ratio = 0.1
merge-small-file-threshold = "8MiB"
blob-run-mode = "read-only"

[rocksdb.lockcf]
block-size = "16KiB"
block-cache-size = "320MiB"
disable-block-cache = false
cache-index-and-filter-blocks = true
pin-l0-filter-and-index-blocks = true
use-bloom-filter = true
optimize-filters-for-hits = false
whole-key-filtering = true
bloom-filter-bits-per-key = 10
block-based-bloom-filter = false
read-amp-bytes-per-bit = 0
compression-per-level = ["no", "no", "no", "no", "no", "no", "no"]
write-buffer-size = "128MiB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "128MiB"
target-file-size-base = "8MiB"
level0-file-num-compaction-trigger = 1
level0-slowdown-writes-trigger = 20
level0-stop-writes-trigger = 36
max-compaction-bytes = "2GiB"
compaction-pri = 0
dynamic-level-bytes = true
num-levels = 7
max-bytes-for-level-multiplier = 10
compaction-style = 0
disable-auto-compactions = false
soft-pending-compaction-bytes-limit = "64GiB"
hard-pending-compaction-bytes-limit = "256GiB"
force-consistency-checks = true
prop-size-index-distance = 4194304
prop-keys-index-distance = 40960
enable-doubly-skiplist = false

[rocksdb.lockcf.titan]
min-blob-size = "1KiB"
blob-file-compression = "lz4"
blob-cache-size = "0KiB"
min-gc-batch-size = "16MiB"
max-gc-batch-size = "64MiB"
discardable-ratio = 0.5
sample-ratio = 0.1
merge-small-file-threshold = "8MiB"
blob-run-mode = "read-only"

[rocksdb.raftcf]
block-size = "16KiB"
block-cache-size = "128MiB"
disable-block-cache = false
cache-index-and-filter-blocks = true
pin-l0-filter-and-index-blocks = true
use-bloom-filter = true
optimize-filters-for-hits = true
whole-key-filtering = true
bloom-filter-bits-per-key = 10
block-based-bloom-filter = false
read-amp-bytes-per-bit = 0
compression-per-level = ["no", "no", "no", "no", "no", "no", "no"]
write-buffer-size = "128MiB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "128MiB"
target-file-size-base = "8MiB"
level0-file-num-compaction-trigger = 1
level0-slowdown-writes-trigger = 20
level0-stop-writes-trigger = 36
max-compaction-bytes = "2GiB"
compaction-pri = 0
dynamic-level-bytes = true
num-levels = 7
max-bytes-for-level-multiplier = 10
compaction-style = 0
disable-auto-compactions = false
soft-pending-compaction-bytes-limit = "64GiB"
hard-pending-compaction-bytes-limit = "256GiB"
force-consistency-checks = true
prop-size-index-distance = 4194304
prop-keys-index-distance = 40960
enable-doubly-skiplist = false

[rocksdb.raftcf.titan]
min-blob-size = "1KiB"
blob-file-compression = "lz4"
blob-cache-size = "0KiB"
min-gc-batch-size = "16MiB"
max-gc-batch-size = "64MiB"
discardable-ratio = 0.5
sample-ratio = 0.1
merge-small-file-threshold = "8MiB"
blob-run-mode = "read-only"

[rocksdb.titan]
enabled = false
dirname = ""
disable-gc = false
max-background-gc = 1
purge-obsolete-files-period = "10s"

[raftdb]
wal-recovery-mode = 2
wal-dir = ""
wal-ttl-seconds = 0
wal-size-limit = "0KiB"
max-total-wal-size = "4GiB"
max-background-jobs = 4
max-manifest-file-size = "20MiB"
create-if-missing = true
max-open-files = 40960
enable-statistics = true
stats-dump-period = "10m"
compaction-readahead-size = "0KiB"
info-log-max-size = "1GiB"
info-log-roll-time = "0s"
info-log-keep-log-file-num = 10
info-log-dir = ""
max-sub-compactions = 2
writable-file-max-buffer-size = "1MiB"
use-direct-io-for-flush-and-compaction = false
enable-pipelined-write = true
allow-concurrent-memtable-write = false
bytes-per-sync = "1MiB"
wal-bytes-per-sync = "512KiB"

[raftdb.defaultcf]
block-size = "64KiB"
block-cache-size = "320MiB"
disable-block-cache = false
cache-index-and-filter-blocks = true
pin-l0-filter-and-index-blocks = true
use-bloom-filter = false
optimize-filters-for-hits = true
whole-key-filtering = true
bloom-filter-bits-per-key = 10
block-based-bloom-filter = false
read-amp-bytes-per-bit = 0
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MiB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "512MiB"
target-file-size-base = "8MiB"
level0-file-num-compaction-trigger = 4
level0-slowdown-writes-trigger = 20
level0-stop-writes-trigger = 36
max-compaction-bytes = "2GiB"
compaction-pri = 0
dynamic-level-bytes = true
num-levels = 7
max-bytes-for-level-multiplier = 10
compaction-style = 0
disable-auto-compactions = false
soft-pending-compaction-bytes-limit = "64GiB"
hard-pending-compaction-bytes-limit = "256GiB"
force-consistency-checks = true
prop-size-index-distance = 4194304
prop-keys-index-distance = 40960
enable-doubly-skiplist = false

[raftdb.defaultcf.titan]
min-blob-size = "1KiB"
blob-file-compression = "lz4"
blob-cache-size = "0KiB"
min-gc-batch-size = "16MiB"
max-gc-batch-size = "64MiB"
discardable-ratio = 0.5
sample-ratio = 0.1
merge-small-file-threshold = "8MiB"
blob-run-mode = "normal"

[security]
ca-path = ""
cert-path = ""
key-path = ""
cipher-file = ""

[import]
import-dir = "/tmp/tikv/import"
num-threads = 8
num-import-jobs = 8
num-import-sst-jobs = 2
```

I can't reproduce that panic stack with the config you pasted. Could you upload the file itself instead of copying and pasting its contents? One possibility is that the original file contains invisible characters that were lost in the copy-paste.
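Following up on the invisible-character theory, a small scanner can list every control, format, or non-ASCII whitespace character with its line and column, which could then be compared with the line number in the panic message (445). This is a sketch under stated assumptions: `find_suspicious_chars` is a hypothetical name, and what counts as "suspicious" (Unicode categories C*, Zl/Zp, non-ASCII spaces, and U+FFFD replacement characters standing in for invalid UTF-8 bytes) is a choice made here, not anything TiKV defines. NUL bytes, for instance, would be reported as `U+0000`.

```python
import unicodedata


def find_suspicious_chars(path: str) -> list[tuple[int, int, str]]:
    """Return (line, column, description) for each suspicious character.

    Lines and columns are 1-based. Tab and CR are allowed. Invalid UTF-8
    bytes are decoded with errors="replace" and reported as U+FFFD.
    """
    with open(path, "rb") as f:
        text = f.read().decode("utf-8", errors="replace")

    hits = []
    for lineno, line in enumerate(text.split("\n"), start=1):
        for col, ch in enumerate(line, start=1):
            if ch in "\t\r":
                continue
            cat = unicodedata.category(ch)
            if (
                ch == "\ufffd"                      # undecodable byte(s)
                or cat.startswith("C")              # control, format (e.g. zero-width), unassigned...
                or cat in ("Zl", "Zp")              # Unicode line/paragraph separators
                or (cat == "Zs" and ch != " ")      # non-ASCII spaces such as U+00A0
            ):
                name = unicodedata.name(ch, "")     # control chars have no name
                hits.append((lineno, col, f"U+{ord(ch):04X} {name}".strip()))
    return hits
```

A possible invocation: `for line, col, what in find_suspicious_chars("/home/tidb/deploy/data/last_tikv.toml"): print(line, col, what)`. An empty result would suggest the file is plain text and the problem lies elsewhere, for example plain truncation.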

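Most likely the disk was full at the time, so neither the SST files nor this file could be written. After space was freed, SST files could be written again, but this file had already been corrupted, which is why TiKV cannot start. If you have not changed the configuration, simply deleting this file should let it start.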
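If you would rather not delete the file outright, a cautious variant is to rename it out of the way, so that a copy remains available for the upload requested earlier. This assumes, as the reply does, that the configuration was not edited by hand (the panic message itself calls the file "auto generated"). `move_aside` and the `.corrupt-<timestamp>` suffix are hypothetical choices, not anything TiKV expects.

```python
import os
import time
from typing import Optional


def move_aside(path: str) -> Optional[str]:
    """Rename `path` to `<path>.corrupt-<unix-timestamp>` instead of deleting it.

    Returns the new path, or None if `path` does not exist. Because the
    backup stays in the same directory (same filesystem), os.replace is an
    atomic rename on POSIX systems.
    """
    if not os.path.exists(path):
        return None
    backup = f"{path}.corrupt-{int(time.time())}"
    os.replace(path, backup)
    return backup
```

For example, `move_aside("/home/tidb/deploy/data/last_tikv.toml")` before starting TiKV again.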

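Thank you very much, it is working normally now. One more question: since the TiKV disks currently have very little free space, how can I speed up storage rebalancing in TiDB?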

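- We suggest scaling out first and then scaling in, so that the disks on all TiKV nodes are consistent (for example, the same capacity).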

- See the following document:

https://docs.pingcap.com/zh/tidb/stable/pd-scheduling-best-practices#pd-调度策略最佳实践
