OK!

If switching back to v4.0.2 fixes the uneven leader distribution, please reply here to let us know. Thanks.

@rleungx Is there an issue filed for this? How should we go about troubleshooting it?

OK. I haven't tested it yet, but I'll definitely reply once I have results.


@rleungx @Yisaer @户口舟亢 @5kbpers-PingCAP

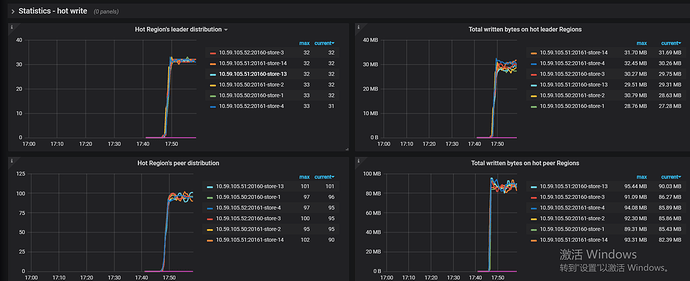

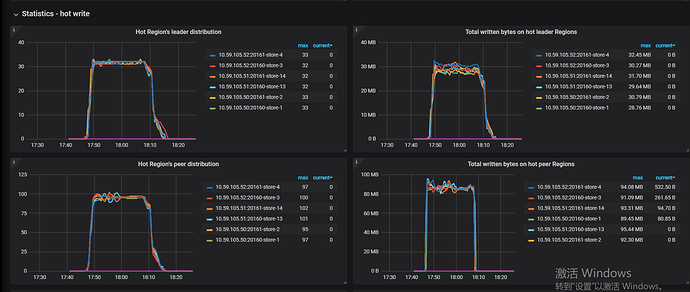

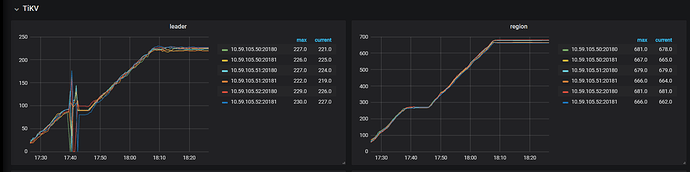

Sorry for the late reply. Conclusion: on v4.0.2, leader regions and hot regions are very evenly balanced. See the charts below.

1. Version:

v4.0.2

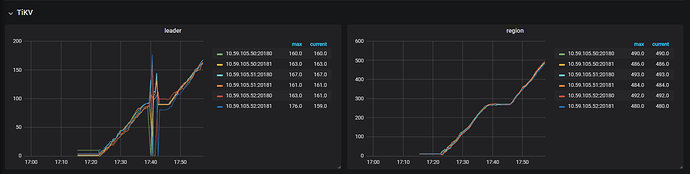

2. Leader region and hot region charts:

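In the charts above, I ran a stress test from 17:20 to 17:40 and did a reload at 17:40. I then started another stress test at 17:47. (It looks like the earlier monitoring data disappeared after the PD leader switched?)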

2. Topology:

TiDB Version: v4.0.2

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
|----|------|------|-------|---------|--------|----------|------------|
| 10.59.105.70:9093 | alertmanager | 10.59.105.70 | 9093/9094 | linux/x86_64 | Up | /data/alertmanager/data | /data/alertmanager |
| 10.59.105.70:3000 | grafana | 10.59.105.70 | 3000 | linux/x86_64 | Up | - | /data/grafana |
| 10.59.105.60:2379 | pd | 10.59.105.60 | 2379/2380 | linux/x86_64 | Up\|L | /data/pd/data | /data/pd |
| 10.59.105.61:2379 | pd | 10.59.105.61 | 2379/2380 | linux/x86_64 | Up\|UI | /data/pd/data | /data/pd |
| 10.59.105.62:2379 | pd | 10.59.105.62 | 2379/2380 | linux/x86_64 | Up | /data/pd/data | /data/pd |
| 10.59.105.70:9090 | prometheus | 10.59.105.70 | 9090 | linux/x86_64 | Up | /data/prometheus/data | /data/prometheus |
| 10.59.105.60:4000 | tidb | 10.59.105.60 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb |
| 10.59.105.61:4000 | tidb | 10.59.105.61 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb |
| 10.59.105.62:4000 | tidb | 10.59.105.62 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb |
| 10.59.105.70:9000 | tiflash | 10.59.105.70 | 9000/8123/3930/20170/20292/8234 | linux/x86_64 | Up | /data/tiflash/data | /data/tiflash |
| 10.59.105.50:20160 | tikv | 10.59.105.50 | 20160/20180 | linux/x86_64 | Up | /data/tikv/20160/deploy/store | /data/tikv/20160/deploy |
| 10.59.105.50:20161 | tikv | 10.59.105.50 | 20161/20181 | linux/x86_64 | Up | /data/tikv/20161/deploy/store | /data/tikv/20161/deploy |
| 10.59.105.51:20160 | tikv | 10.59.105.51 | 20160/20180 | linux/x86_64 | Up | /data/tikv/20160/deploy/store | /data/tikv/20160/deploy |
| 10.59.105.51:20161 | tikv | 10.59.105.51 | 20161/20181 | linux/x86_64 | Up | /data/tikv/20161/deploy/store | /data/tikv/20161/deploy |
| 10.59.105.52:20160 | tikv | 10.59.105.52 | 20160/20180 | linux/x86_64 | Up | /data/tikv/20160/deploy/store | /data/tikv/20160/deploy |
| 10.59.105.52:20161 | tikv | 10.59.105.52 | 20161/20181 | linux/x86_64 | Up | /data/tikv/20161/deploy/store | /data/tikv/20161/deploy |

3. Topology configuration details:

    global:
      user: tidb
      ssh_port: 22
      deploy_dir: deploy
      data_dir: data
      os: linux
      arch: amd64
    monitored:
      node_exporter_port: 9100
      blackbox_exporter_port: 9115
      deploy_dir: deploy/monitor-9100
      data_dir: data/monitor-9100
      log_dir: deploy/monitor-9100/log
    server_configs:
      tidb:
        binlog.enable: false
        binlog.ignore-error: false
        experimental.allow-auto-random: true
        experimental.allow-expression-index: true
        log.slow-threshold: 300
        performance.committer-concurrency: 16384
        prepared-plan-cache.enabled: false
        tikv-client.grpc-connection-count: 128
      tikv:
        log-level: warn
        raftdb.bytes-per-sync: 512MB
        raftdb.defaultcf.compression-per-level:
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        raftdb.wal-bytes-per-sync: 256MB
        raftdb.writable-file-max-buffer-size: 512MB
        raftstore.apply-pool-size: 5
        raftstore.hibernate-regions: true
        raftstore.messages-per-tick: 40960
        raftstore.raft-base-tick-interval: 2s
        raftstore.raft-entry-max-size: 32MB
        raftstore.raft-max-inflight-msgs: 8192
        raftstore.store-pool-size: 4
        raftstore.sync-log: false
        readpool.coprocessor.use-unified-pool: true
        readpool.storage.use-unified-pool: false
        readpool.unified.max-thread-count: 13
        rocksdb.bytes-per-sync: 512MB
        rocksdb.defaultcf.compression-per-level:
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        rocksdb.defaultcf.level0-slowdown-writes-trigger: 64
        rocksdb.defaultcf.level0-stop-writes-trigger: 64
        rocksdb.defaultcf.max-write-buffer-number: 10
        rocksdb.defaultcf.min-write-buffer-number-to: 1
        rocksdb.lockcf.compression-per-level:
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        rocksdb.lockcf.level0-slowdown-writes-trigger: 64
        rocksdb.lockcf.level0-stop-writes-trigger: 64
        rocksdb.lockcf.max-write-buffer-number: 10
        rocksdb.lockcf.min-write-buffer-number-to: 1
        rocksdb.raftcf.compression-per-level:
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        rocksdb.raftcf.level0-slowdown-writes-trigger: 64
        rocksdb.raftcf.level0-stop-writes-trigger: 64
        rocksdb.raftcf.max-write-buffer-number: 10
        rocksdb.raftcf.min-write-buffer-number-to: 1
        rocksdb.wal-bytes-per-sync: 256MB
        rocksdb.writable-file-max-buffer-size: 512MB
        rocksdb.writecf.compression-per-level:
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        - zstd
        rocksdb.writecf.level0-slowdown-writes-trigger: 64
        rocksdb.writecf.level0-stop-writes-trigger: 64
        rocksdb.writecf.max-write-buffer-number: 10
        rocksdb.writecf.min-write-buffer-number-to: 1
        storage.block-cache.capacity: 24GB
        storage.scheduler-worker-pool-size: 4
      pd:
        replication.enable-placement-rules: true
        replication.location-labels:
        - host
        schedule.leader-schedule-limit: 8
        schedule.region-schedule-limit: 2048
        schedule.replica-schedule-limit: 256
      tiflash:
        logger.level: info
        path_realtime_mode: false
      tiflash-learner: {}
      pump: {}
      drainer: {}
      cdc: {}
    tidb_servers:
    - host: 10.59.105.60
      ssh_port: 22
      port: 4000
      status_port: 10080
      deploy_dir: /data/tidb
      log_dir: /data/tidb/log
      arch: amd64
      os: linux
    - host: 10.59.105.61
      ssh_port: 22
      port: 4000
      status_port: 10080
      deploy_dir: /data/tidb
      log_dir: /data/tidb/log
      arch: amd64
      os: linux
    - host: 10.59.105.62
      ssh_port: 22
      port: 4000
      status_port: 10080
      deploy_dir: /data/tidb
      log_dir: /data/tidb/log
      arch: amd64
      os: linux
    tikv_servers:
    - host: 10.59.105.50
      ssh_port: 22
      port: 20160
      status_port: 20180
      deploy_dir: /data/tikv/20160/deploy
      data_dir: /data/tikv/20160/deploy/store
      log_dir: /data/tikv/20160/deploy/log
      numa_node: "0"
      config:
        server.labels:
          host: h50
      arch: amd64
      os: linux
    - host: 10.59.105.50
      ssh_port: 22
      port: 20161
      status_port: 20181
      deploy_dir: /data/tikv/20161/deploy
      data_dir: /data/tikv/20161/deploy/store
      log_dir: /data/tikv/20161/deploy/log
      numa_node: "1"
      config:
        server.labels:
          host: h50
      arch: amd64
      os: linux
    - host: 10.59.105.51
      ssh_port: 22
      port: 20160
      status_port: 20180
      deploy_dir: /data/tikv/20160/deploy
      data_dir: /data/tikv/20160/deploy/store
      log_dir: /data/tikv/20160/deploy/log
      numa_node: "0"
      config:
        server.labels:
          host: h51
      arch: amd64
      os: linux
    - host: 10.59.105.51
      ssh_port: 22
      port: 20161
      status_port: 20181
      deploy_dir: /data/tikv/20161/deploy
      data_dir: /data/tikv/20161/deploy/store
      log_dir: /data/tikv/20161/deploy/log
      numa_node: "1"
      config:
        server.labels:
          host: h51
      arch: amd64
      os: linux
    - host: 10.59.105.52
      ssh_port: 22
      port: 20160
      status_port: 20180
      deploy_dir: /data/tikv/20160/deploy
      data_dir: /data/tikv/20160/deploy/store
      log_dir: /data/tikv/20160/deploy/log
      numa_node: "0"
      config:
        server.labels:
          host: h52
      arch: amd64
      os: linux
    - host: 10.59.105.52
      ssh_port: 22
      port: 20161
      status_port: 20181
      deploy_dir: /data/tikv/20161/deploy
      data_dir: /data/tikv/20161/deploy/store
      log_dir: /data/tikv/20161/deploy/log
      numa_node: "1"
      config:
        server.labels:
          host: h52
      arch: amd64
      os: linux
    tiflash_servers:
    - host: 10.59.105.70
      ssh_port: 22
      tcp_port: 9000
      http_port: 8123
      flash_service_port: 3930
      flash_proxy_port: 20170
      flash_proxy_status_port: 20292
      metrics_port: 8234
      deploy_dir: /data/tiflash
      data_dir: /data/tiflash/data
      log_dir: /data/tiflash/log
      arch: amd64
      os: linux
    pd_servers:
    - host: 10.59.105.60
      ssh_port: 22
      name: pd-10.59.105.60-2379
      client_port: 2379
      peer_port: 2380
      deploy_dir: /data/pd
      data_dir: /data/pd/data
      log_dir: /data/pd/log
      arch: amd64
      os: linux
    - host: 10.59.105.61
      ssh_port: 22
      name: pd-10.59.105.61-2379
      client_port: 2379
      peer_port: 2380
      deploy_dir: /data/pd
      data_dir: /data/pd/data
      log_dir: /data/pd/log
      arch: amd64
      os: linux
    - host: 10.59.105.62
      ssh_port: 22
      name: pd-10.59.105.62-2379
      client_port: 2379
      peer_port: 2380
      deploy_dir: /data/pd
      data_dir: /data/pd/data
      log_dir: /data/pd/log
      arch: amd64
      os: linux

4. `config show` output:

    » config show
    {
      "replication": {
        "enable-placement-rules": "true",
        "location-labels": "host",
        "max-replicas": 3,
        "strictly-match-label": "false"
      },
      "schedule": {
        "enable-cross-table-merge": "false",
        "enable-debug-metrics": "false",
        "enable-location-replacement": "true",
        "enable-make-up-replica": "true",
        "enable-one-way-merge": "false",
        "enable-remove-down-replica": "true",
        "enable-remove-extra-replica": "true",
        "enable-replace-offline-replica": "true",
        "high-space-ratio": 0.7,
        "hot-region-cache-hits-threshold": 3,
        "hot-region-schedule-limit": 4,
        "leader-schedule-limit": 8,
        "leader-schedule-policy": "count",
        "low-space-ratio": 0.8,
        "max-merge-region-keys": 200000,
        "max-merge-region-size": 20,
        "max-pending-peer-count": 16,
        "max-snapshot-count": 3,
        "max-store-down-time": "30m0s",
        "merge-schedule-limit": 8,
        "patrol-region-interval": "100ms",
        "region-schedule-limit": 2048,
        "replica-schedule-limit": 256,
        "scheduler-max-waiting-operator": 5,
        "split-merge-interval": "1h0m0s",
        "store-limit-mode": "manual",
        "tolerant-size-ratio": 0
      }
    }
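The config above sets `"leader-schedule-policy": "count"`. Below is a simplified sketch of how a store's leader score can be thought of under the two policies. It assumes the score is the leader count (policy `count`) or leader size (policy `size`) divided by `leader_weight`, floored at a tiny value. This is an illustration of the idea, not PD's actual code. `MIN_WEIGHT` and `leader_score` are hypothetical names.

```python
# Simplified illustration (assumption, not PD source): with policy "count" a
# store's leader score is its leader count divided by its leader weight; with
# policy "size" it is the total size of the regions it leads divided by weight.

MIN_WEIGHT = 1e-6  # hypothetical floor so a zero weight does not divide by zero


def leader_score(policy, leader_count, leader_size, leader_weight=1.0):
    """Return a store's leader score under the given leader-schedule-policy."""
    weight = max(leader_weight, MIN_WEIGHT)
    if policy == "count":
        return leader_count / weight
    if policy == "size":
        return leader_size / weight
    raise ValueError("unknown leader-schedule-policy: %s" % policy)


# Store 14 in the pd-ctl output: 212 leaders, leader_size 17212, weight 1.
print(leader_score("count", 212, 17212))  # 212.0
print(leader_score("size", 212, 17212))   # 17212.0
```

With `leader_weight` left at 1, the `count` score equals `leader_count`. This matches the `leader_score` values that `pd-ctl store` reports for this cluster.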

5. Store information from pd-ctl:

    » store
    {
      "count": 7,
      "stores": [
        {
          "store": {
            "id": 14,
            "address": "10.59.105.51:20161",
            "labels": [
              {
                "key": "host",
                "value": "h51"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.51:20181",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793233,
            "deploy_path": "/data/tikv/20161/deploy/bin",
            "last_heartbeat": 1596794773328138574,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.71TiB",
            "used_size": "2.557GiB",
            "leader_count": 212,
            "leader_weight": 1,
            "leader_score": 212,
            "leader_size": 17212,
            "region_count": 642,
            "region_weight": 1,
            "region_score": 51774,
            "region_size": 51774,
            "start_ts": "2020-08-07T17:40:33+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:13.328138574+08:00",
            "uptime": "25m40.328138574s"
          }
        },
        {
          "store": {
            "id": 55,
            "address": "10.59.105.70:3930",
            "labels": [
              {
                "key": "engine",
                "value": "tiflash"
              }
            ],
            "version": "v4.0.2",
            "peer_address": "10.59.105.70:20170",
            "status_address": "10.59.105.70:20292",
            "git_hash": "8dee36744ae9ecfe9dda0522fef1634e37d23e87",
            "start_timestamp": 1596793148,
            "deploy_path": "/data/tiflash/bin/tiflash",
            "last_heartbeat": 1596794769110115702,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.719TiB",
            "available": "1.63TiB",
            "used_size": "11.5KiB",
            "leader_count": 0,
            "leader_weight": 1,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 0,
            "region_weight": 1,
            "region_score": 0,
            "region_size": 0,
            "start_ts": "2020-08-07T17:39:08+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:09.110115702+08:00",
            "uptime": "27m1.110115702s"
          }
        },
        {
          "store": {
            "id": 1,
            "address": "10.59.105.50:20160",
            "labels": [
              {
                "key": "host",
                "value": "h50"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.50:20180",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793174,
            "deploy_path": "/data/tikv/20160/deploy/bin",
            "last_heartbeat": 1596794765469741794,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.711TiB",
            "used_size": "2.847GiB",
            "leader_count": 212,
            "leader_weight": 1,
            "leader_score": 212,
            "leader_size": 16516,
            "region_count": 646,
            "region_weight": 1,
            "region_score": 51476,
            "region_size": 51476,
            "start_ts": "2020-08-07T17:39:34+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:05.469741794+08:00",
            "uptime": "26m31.469741794s"
          }
        },
        {
          "store": {
            "id": 2,
            "address": "10.59.105.50:20161",
            "labels": [
              {
                "key": "host",
                "value": "h50"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.50:20181",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793192,
            "deploy_path": "/data/tikv/20161/deploy/bin",
            "last_heartbeat": 1596794772465424513,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.709TiB",
            "used_size": "2.609GiB",
            "leader_count": 217,
            "leader_weight": 1,
            "leader_score": 217,
            "leader_size": 17185,
            "region_count": 639,
            "region_weight": 1,
            "region_score": 51566,
            "region_size": 51566,
            "start_ts": "2020-08-07T17:39:52+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:12.465424513+08:00",
            "uptime": "26m20.465424513s"
          }
        },
        {
          "store": {
            "id": 3,
            "address": "10.59.105.52:20160",
            "labels": [
              {
                "key": "host",
                "value": "h52"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.52:20180",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793308,
            "deploy_path": "/data/tikv/20160/deploy/bin",
            "last_heartbeat": 1596794768716744394,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.71TiB",
            "used_size": "2.847GiB",
            "leader_count": 214,
            "leader_weight": 1,
            "leader_score": 214,
            "leader_size": 17415,
            "region_count": 652,
            "region_weight": 1,
            "region_score": 51492,
            "region_size": 51492,
            "start_ts": "2020-08-07T17:41:48+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:08.716744394+08:00",
            "uptime": "24m20.716744394s"
          }
        },
        {
          "store": {
            "id": 4,
            "address": "10.59.105.52:20161",
            "labels": [
              {
                "key": "host",
                "value": "h52"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.52:20181",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793327,
            "deploy_path": "/data/tikv/20161/deploy/bin",
            "last_heartbeat": 1596794767721506475,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.709TiB",
            "used_size": "2.903GiB",
            "leader_count": 216,
            "leader_weight": 1,
            "leader_score": 216,
            "leader_size": 18041,
            "region_count": 633,
            "region_weight": 1,
            "region_score": 51550,
            "region_size": 51550,
            "start_ts": "2020-08-07T17:42:07+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:07.721506475+08:00",
            "uptime": "24m0.721506475s"
          }
        },
        {
          "store": {
            "id": 13,
            "address": "10.59.105.51:20160",
            "labels": [
              {
                "key": "host",
                "value": "h51"
              }
            ],
            "version": "4.0.2",
            "status_address": "10.59.105.51:20180",
            "git_hash": "98ee08c587ab47d9573628aba6da741433d8855c",
            "start_timestamp": 1596793212,
            "deploy_path": "/data/tikv/20160/deploy/bin",
            "last_heartbeat": 1596794773139089171,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.718TiB",
            "available": "1.712TiB",
            "used_size": "3.197GiB",
            "leader_count": 214,
            "leader_weight": 1,
            "leader_score": 214,
            "leader_size": 16673,
            "region_count": 643,
            "region_weight": 1,
            "region_score": 51268,
            "region_size": 51268,
            "start_ts": "2020-08-07T17:40:12+08:00",
            "last_heartbeat_ts": "2020-08-07T18:06:13.139089171+08:00",
            "uptime": "26m1.139089171s"
          }
        }
      ]
    }
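To put a number on "very evenly balanced", here is a small sketch that reads `pd-ctl store` JSON and reports the spread of `leader_count` across the TiKV stores. It assumes TiFlash stores can be recognized by the `engine=tiflash` label, as in the output above. `STORE_JSON` is a trimmed copy of that output, and the helper names are hypothetical.

```python
import json

# Trimmed copy of the `pd-ctl store` output above: only id, labels and
# leader_count are kept for each of the seven stores.
STORE_JSON = """
{"count": 7, "stores": [
  {"store": {"id": 14, "labels": [{"key": "host", "value": "h51"}]}, "status": {"leader_count": 212}},
  {"store": {"id": 55, "labels": [{"key": "engine", "value": "tiflash"}]}, "status": {"leader_count": 0}},
  {"store": {"id": 1, "labels": [{"key": "host", "value": "h50"}]}, "status": {"leader_count": 212}},
  {"store": {"id": 2, "labels": [{"key": "host", "value": "h50"}]}, "status": {"leader_count": 217}},
  {"store": {"id": 3, "labels": [{"key": "host", "value": "h52"}]}, "status": {"leader_count": 214}},
  {"store": {"id": 4, "labels": [{"key": "host", "value": "h52"}]}, "status": {"leader_count": 216}},
  {"store": {"id": 13, "labels": [{"key": "host", "value": "h51"}]}, "status": {"leader_count": 214}}
]}
"""


def tikv_leader_counts(doc):
    """Map store id -> leader_count, skipping stores labelled engine=tiflash."""
    counts = {}
    for entry in doc["stores"]:
        labels = {lbl["key"]: lbl["value"] for lbl in entry["store"].get("labels", [])}
        if labels.get("engine") == "tiflash":
            continue  # the TiFlash store holds no leaders here (leader_count 0)
        counts[entry["store"]["id"]] = entry["status"].get("leader_count", 0)
    return counts


def leader_spread(counts):
    """Return (min, max, max - min) of the per-store leader counts."""
    lo, hi = min(counts.values()), max(counts.values())
    return lo, hi, hi - lo


counts = tikv_leader_counts(json.loads(STORE_JSON))
print(counts)                 # {14: 212, 1: 212, 2: 217, 3: 214, 4: 216, 13: 214}
print(leader_spread(counts))  # (212, 217, 5)
```

On this snapshot the six TiKV stores differ by at most 5 leaders (212 to 217). The same functions could be fed the full output saved from, for example, `pd-ctl -u http://10.59.105.60:2379 store`.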

6. Schedulers on PD:

    » scheduler show
    [
      "balance-leader-scheduler",
      "balance-region-scheduler",
      "label-scheduler",
      "balance-hot-region-scheduler"
    ]

7. Placement rules:

    » config placement-rules show
    [
      {
        "group_id": "pd",
        "id": "default",
        "start_key": "",
        "end_key": "",
        "role": "voter",
        "count": 3,
        "location_labels": [
          "host"
        ]
      }
    ]
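The default rule asks for 3 voters isolated by the `host` label, and the cluster has exactly three hosts (h50, h51, h52) with two TiKV stores each. So each region should keep one peer per host. Summing `region_count` per host label checks this. The sketch below uses the values copied from the store output above and leaves out the TiFlash store. `region_count` comes from heartbeats and can lag, so other snapshots may not match exactly.

```python
from collections import defaultdict

# (store id, host label, region_count) copied from the pd-ctl store output;
# the TiFlash store (id 55, no host label, 0 regions) is left out.
STORES = [
    (14, "h51", 642), (1, "h50", 646), (2, "h50", 639),
    (3, "h52", 652), (4, "h52", 633), (13, "h51", 643),
]


def peers_per_host(stores):
    """Sum region peers per host label."""
    totals = defaultdict(int)
    for _store_id, host, region_count in stores:
        totals[host] += region_count
    return dict(totals)


print(peers_per_host(STORES))  # {'h51': 1285, 'h50': 1285, 'h52': 1285}
```

Every host holds 1285 peers. That equals the sum of `leader_count` over the TiKV stores (each region has exactly one leader), so in this snapshot each of the 1285 regions has one replica on every host.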

8. Full PD monitoring:

The Filter target panel in the monitoring doesn't show balance-leader either.


Thanks for the feedback.

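The uneven leader distribution described in the posts above was caused by a leader-balance change introduced in v4.0.3, and it was fixed in v4.0.5. The corresponding entry is as follows: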


Thanks a lot!
