TiDB version: v3.0.11

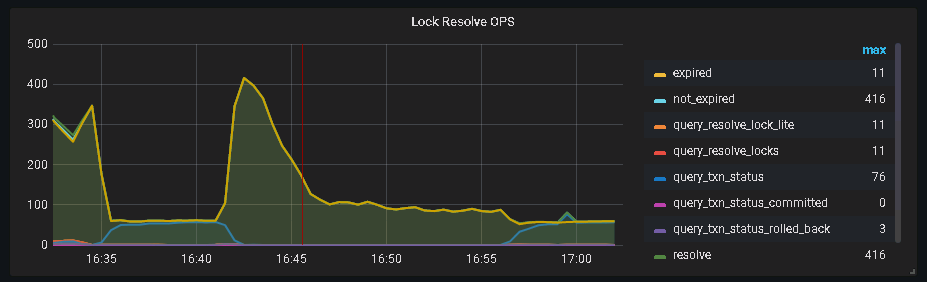

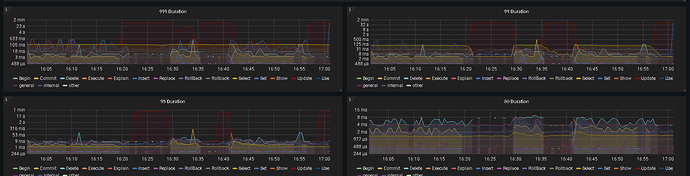

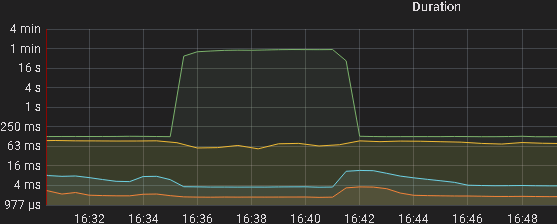

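During a certain time window, a large number of updates like the one below became slow. They all update by id, and we found no concurrent conflicts on the same id. The slow log only shows the binlog write as slow. Pump currently runs on a server with 56 cores, 256 GB of RAM, and SSD storage. Data conflicts are not severe. So far we have no good direction for further investigation.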

*************************** 394. row ***************************

Time: 2020-07-09 16:39:52.860977

Txn_start_ts: 417931972074799124

User: uic

Host: 10.204.9.202

Conn_ID: 2761922

Query_time: 41.745752207

Parse_time: 0.000040567

Compile_time: 0.000044442

Prewrite_time: 41.744269941

Binlog_prewrite_time: 41.744283277

Commit_time: 0

Get_commit_ts_time: 0

Commit_backoff_time: 41.722

Backoff_types: [txnLock

Resolve_lock_time: 0.007564717

Local_latch_wait_time: 0

Write_keys: 3

Write_size: 676

Prewrite_region: 3

Txn_retry: 0

Process_time: 0

Wait_time: 0

Backoff_time: 0

LockKeys_time: 0

Request_count: 0

Total_keys: 0

Process_keys: 0

DB: uic

Index_names:

Is_internal: 0

Digest: 2f2abcc1729c7cec9284548a61e5179d5e4fde4da523f5c9d1924922d5ddcd13

Stats:

Cop_proc_avg: 0

Cop_proc_p90: 0

Cop_proc_max: 0

Cop_proc_addr:

Cop_wait_avg: 0

Cop_wait_p90: 0

Cop_wait_max: 0

Cop_wait_addr:

Mem_max: 0

Succ: 0

Plan: Point_Get_1 root 1 table:uic_user, handle:38378511638990848

Plan_digest: 0cea232deba5ed3a5351e923015f60176bbddc64d94f4bd8d68b522e419b6729

Prev_stmt:

Query: update uic_user SET extend_attr = '{"defaultPaymentType":"1","boxShowModeTipTimes":"1","courier_growth_score":"4909","courier_feature_rank":"2","courier_effective_time":"20200726","boxShowMode":"2","courier_growth_rank":"3","showBoxTypeModePage":"1","boxShowModeGray":"2"}', update_time = '2020-07-09 16:35:08.065' where id = 38378511638990848;
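
To compare the timing fields in a record like the one above, here is a minimal Python sketch that parses the `Key: value` lines and computes how much of `Query_time` is covered by `Commit_backoff_time`. The helper names `parse_slow_row` and `backoff_share` are hypothetical, and the sketch assumes both fields are in seconds, which the values in this record suggest.

```python
import re

def parse_slow_row(text):
    """Parse `Key: value` lines of a vertical-format slow query row into a dict.

    Hypothetical helper: lines that do not look like `Key: value`
    (for example the row separator made of asterisks) are skipped.
    """
    fields = {}
    for line in text.splitlines():
        m = re.match(r"^\s*([A-Za-z_0-9]+):\s*(.*)$", line)
        if m:
            fields[m.group(1)] = m.group(2).strip()
    return fields

def backoff_share(fields):
    """Fraction of Query_time covered by Commit_backoff_time (both assumed seconds)."""
    query_time = float(fields["Query_time"])
    backoff = float(fields["Commit_backoff_time"])
    return backoff / query_time if query_time > 0 else 0.0

# Values copied from the record above.
sample = """
*************************** 394. row ***************************
Query_time: 41.745752207
Prewrite_time: 41.744269941
Binlog_prewrite_time: 41.744283277
Commit_backoff_time: 41.722
Backoff_types: [txnLock
"""

row = parse_slow_row(sample)
share = backoff_share(row)
print(f"backoff share of query time: {share:.2%}")
```

If the share is close to 100%, as it appears to be for this row, it may be worth checking whether the time attributed to prewrite and binlog prewrite is mostly spent in `txnLock` backoff rather than in Pump itself.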