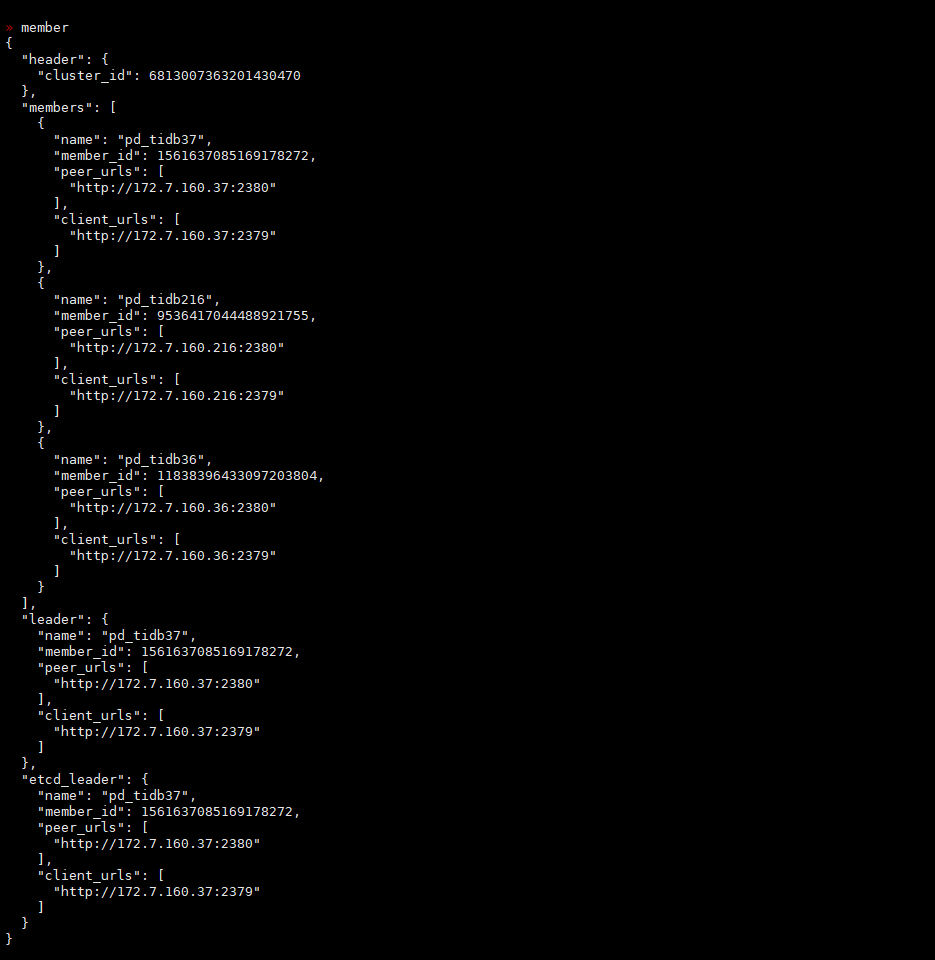

The cluster has 3 PD, 3 TiDB, and 3 TiKV nodes.

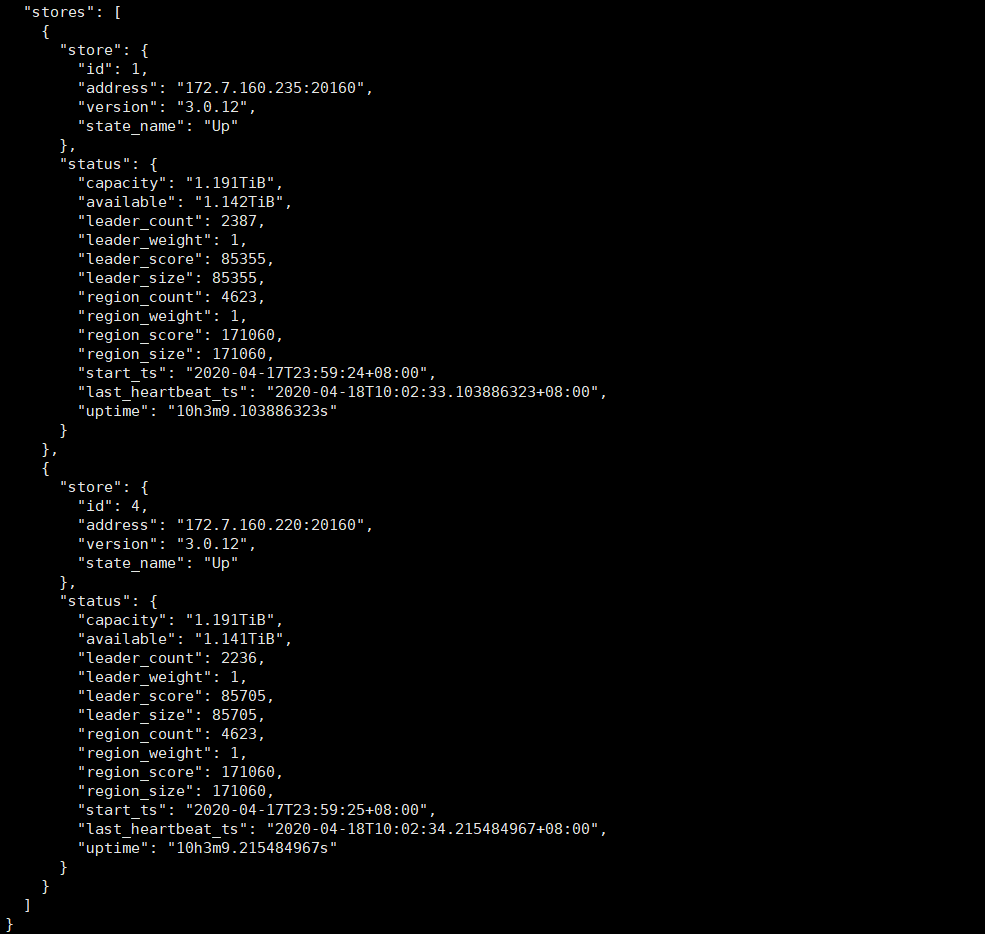

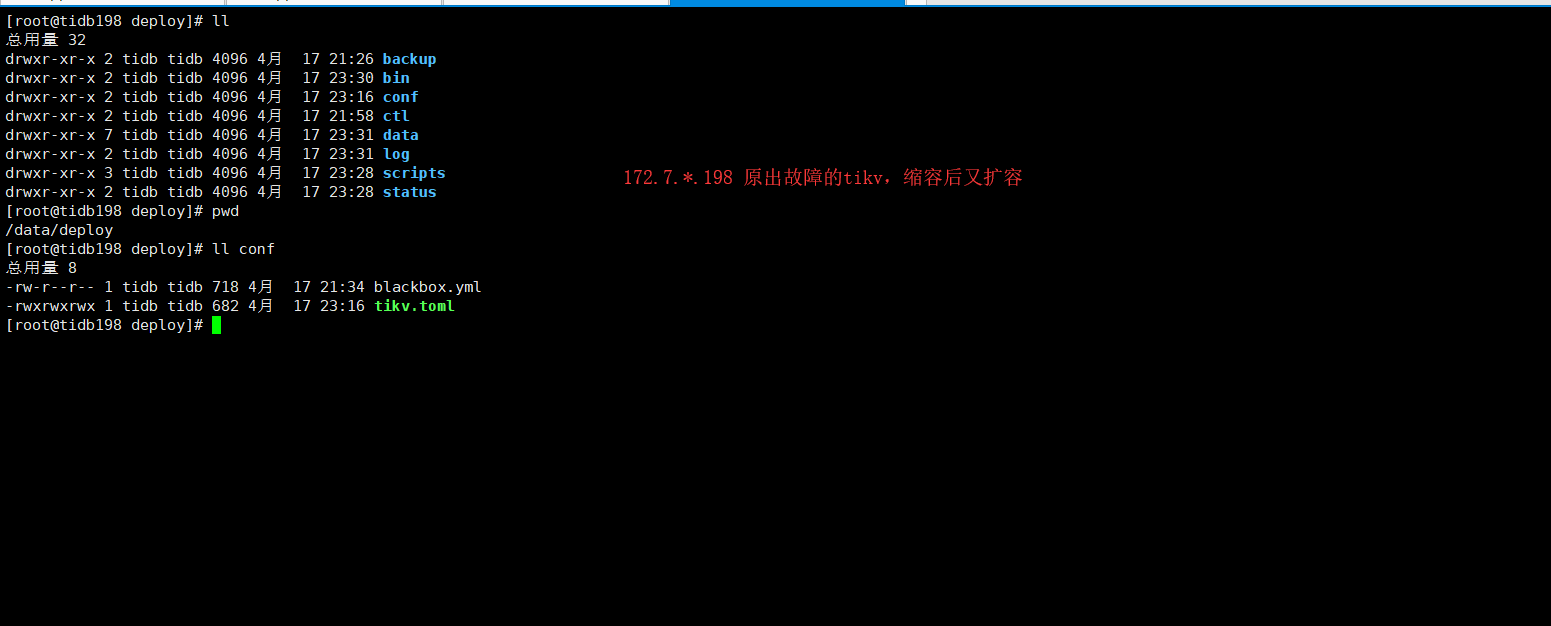

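One of the TiKV nodes went down and could not be started again. I followed the documented scale-in procedure exactly: I scaled the node in first, and once that finished, store 5 showed the Tombstone state. I then scaled out as described in the documentation, but after the scale-out the node fails to start with the following error: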

[172.7.160.198]: Ansible FAILED! => playbook: start.yml; TASK: wait until the TiKV port is up; message: {"changed": false, "elapsed": 300, "msg": "the TiKV port 20160 is not up"}

The end of tikv.log:

[2020/04/18 00:38:32.463 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=addr-resolver]

[2020/04/18 00:38:32.501 +08:00] [INFO] [scheduler.rs:257] ["Scheduler::new is called to initialize the transaction scheduler"]

[2020/04/18 00:38:32.711 +08:00] [INFO] [scheduler.rs:278] ["Scheduler::new is finished, the transaction scheduler is initialized"]

[2020/04/18 00:38:32.711 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=gc-worker]

[2020/04/18 00:38:32.711 +08:00] [INFO] [mod.rs:722] ["Storage started."]

[2020/04/18 00:38:32.713 +08:00] [INFO] [server.rs:148] ["listening on addr"] [addr=0.0.0.0:20160]

[2020/04/18 00:38:32.713 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=region-collector-worker]

[2020/04/18 00:38:32.713 +08:00] [INFO] [node.rs:333] ["start raft store thread"] [store_id=19931]

[2020/04/18 00:38:32.713 +08:00] [INFO] [store.rs:800] ["start store"] [takes=36.867µs] [merge_count=0] [applying_count=0] [tombstone_count=0] [region_count=0] [store_id=19931]

[2020/04/18 00:38:32.713 +08:00] [INFO] [store.rs:852] ["cleans up garbage data"] [takes=11.403µs] [garbage_range_count=1] [store_id=19931]

[2020/04/18 00:38:32.714 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=split-check]

[2020/04/18 00:38:32.714 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=snapshot-worker]

[2020/04/18 00:38:32.714 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=raft-gc-worker]

[2020/04/18 00:38:32.714 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=compact-worker]

[2020/04/18 00:38:32.715 +08:00] [INFO] [future.rs:131] ["starting working thread"] [worker=pd-worker]

[2020/04/18 00:38:32.715 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=consistency-check]

[2020/04/18 00:38:32.715 +08:00] [INFO] [mod.rs:334] ["starting working thread"] [worker=cleanup-sst]

[2020/04/18 00:38:32.715 +08:00] [WARN] [store.rs:1118] ["set thread priority for raftstore failed"] [error="Os { code: 13, kind: PermissionDenied, message: \"Permission denied\" }"]

[2020/04/18 00:38:32.715 +08:00] [INFO] [node.rs:161] ["put store to PD"] [store="id: 19931 address: \"172.7.160.235:20160\" version: \"3.0.12\""]

[2020/04/18 00:38:32.716 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.716 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.716 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.716 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.717 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.717 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.717 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.717 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.718 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.718 +08:00] [ERROR] [util.rs:327] ["request failed"] [err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: Some(\"duplicated store address: id:19931 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , already registered by id:1 address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" \") }))"]

[2020/04/18 00:38:32.718 +08:00] [FATAL] [server.rs:264] ["failed to start node: Other(\"[src/pd/util.rs:335]: fail to request\")"]
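The repeated ERROR lines all carry the same message: the new store (id 19931) tries to register the address 172.7.160.235:20160, which PD reports as already registered by store id 1. As a rough sketch for pulling those values out of such a line, something like the following could be used. The helper name `find_duplicate_store` and the regular expression are my own illustration, based only on the message layout in the log above; other TiKV/PD versions may format it differently.

```python
import re

# Pattern for the PD error text as it appears in the tikv.log excerpt above.
# Assumption: the field order (id, address, "already registered by id") is
# taken from this one log and is not a documented, stable format.
DUP_RE = re.compile(
    r'duplicated store address: id:(?P<new_id>\d+) '
    r'address:\\*"(?P<addr>[^"\\]+)\\*".*?'
    r'already registered by id:(?P<old_id>\d+)'
)

# One of the ERROR lines from the log, kept verbatim as a raw string.
SAMPLE = (
    r'[2020/04/18 00:38:32.716 +08:00] [ERROR] [util.rs:327] ["request failed"] '
    r'[err="Grpc(RpcFailure(RpcStatus { status: Unknown, details: '
    r'Some(\"duplicated store address: id:19931 '
    r'address:\\\"172.7.160.235:20160\\\" version:\\\"3.0.12\\\" , '
    r'already registered by id:1 address:\\\"172.7.160.235:20160\\\" '
    r'version:\\\"3.0.12\\\" \") }))"]'
)


def find_duplicate_store(line):
    """Return (new_id, address, old_id) for a duplicated-address error, else None."""
    m = DUP_RE.search(line)
    if m is None:
        return None
    return int(m.group("new_id")), m.group("addr"), int(m.group("old_id"))


if __name__ == "__main__":
    print(find_duplicate_store(SAMPLE))
```

For the line above this yields new store id 19931, address 172.7.160.235:20160, and existing store id 1, so the conflict is with store 1 and not with store 5, the one seen in Tombstone state.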

Did I do something wrong in the procedure? Thanks!