【概述】 两张千万级的表join

【背景】 版本升级到V5.2.2

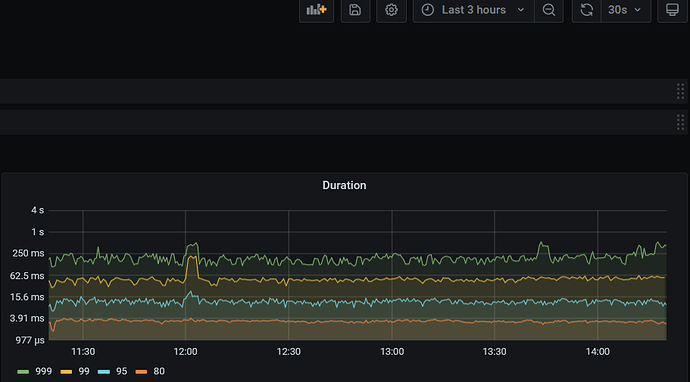

【现象】 业务和数据库现象

【问题】 SQL执行变得极端

【业务影响】 慢慢慢

【TiDB 版本】 V5.2.2

SELECT

count(1)

FROM

t1ags

LEFT JOIN t2 ar ON ags.t2_id = ar.t2_id

WHERE

ags.status IN (0, 1, 2, 4)

AND ags.merchant_id = 'dwj'

AND ags.create_time >= date_format('2021-10-22 00:00:00', '%Y-%m-%d')

AND ags.create_time < date_format('2021-12-22 00:00:00', '%Y-%m-%d');

id task estRows operator info actRows execution info memory disk

HashAgg_8 root 1 funcs:count(1)->Column#46 1 time:17h35m53.9s, loops:2, partial_worker:{wall_time:17h35m53.818665882s, concurrency:5, task_num:276506, tot_wait:87h59m25.534435281s, tot_exec:3.365149708s, tot_time:87h59m29.092939118s, max:17h35m53.818623181s, p95:17h35m53.818623181s}, final_worker:{wall_time:17h35m53.91090043s, concurrency:5, task_num:5, tot_wait:87h59m29.093287161s, tot_exec:92.266445ms, tot_time:87h59m29.1855608s, max:17h35m53.910800171s, p95:17h35m53.910800171s} 5.55 KB N/A

└─IndexJoin_13 root 8584973.13 left outer join, inner:IndexReader_12, outer key:dbdbdb.t1.t2_id, inner key:dbdbdb.t2.t2_id, equal cond:eq(dbdbdb.t1.t2_id, dbdbdb.t2.t2_id) 8848173 time:17h35m52.3s, loops:276507, inner:{total:87h11m9s, concurrency:5, task:276505, construct:5h18m16.8s, fetch:81h52m46s, build:5.74s}, probe:9.51s 1.59 GB N/A

├─IndexLookUp_35 root 8567764.50 8848173 time:6.3s, loops:276508, index_task: {total_time: 2h0m4.2s, fetch_handle: 1.76s, build: 1.25ms, wait: 2h0m2.4s}, table_task: {total_time: 10h0m21s, num: 453, concurrency: 5} 163.6 MB N/A

│ ├─IndexRangeScan_32 cop[tikv] 9154137.14 table:ags, index:idx_t1_3(create_time, phone), range:[2021-10-22 00:00:00,2021-12-22 00:00:00), keep order:false 9094286 time:627.4ms, loops:8928, cop_task: {num: 10, max: 652.8ms, min: 346.8ms, avg: 485.9ms, p95: 652.8ms, max_proc_keys: 1073274, p95_proc_keys: 1073274, tot_proc: 4.6s, tot_wait: 31ms, rpc_num: 10, rpc_time: 4.86s, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:590ms, min:324ms, p80:479ms, p95:590ms, iters:8926, tasks:10}, scan_detail: {total_process_keys: 9094286, total_keys: 9094296, rocksdb: {delete_skipped_count: 244, key_skipped_count: 9094530, block: {cache_hit_count: 77, read_count: 7896, read_byte: 72.0 MB}}} N/A N/A

│ └─Selection_34 cop[tikv] 8567764.50 eq(dbdbdb.t1.merchant_id, "dwj"), in(dbdbdb.t1.status, 0, 1, 2, 4) 8848173 time:22.2s, loops:11998, cop_task: {num: 588, max: 142.3ms, min: 529.8μs, avg: 42.9ms, p95: 75.3ms, max_proc_keys: 20992, p95_proc_keys: 20480, tot_proc: 22.3s, tot_wait: 269ms, rpc_num: 588, rpc_time: 25.2s, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:88ms, min:0s, p80:50ms, p95:63ms, iters:11557, tasks:588}, scan_detail: {total_process_keys: 9094286, total_keys: 9531493, rocksdb: {delete_skipped_count: 292982, key_skipped_count: 19120129, block: {cache_hit_count: 1961079, read_count: 49014, read_byte: 793.7 MB}}} N/A N/A

│ └─TableRowIDScan_33 cop[tikv] 9154137.14 table:ags, keep order:false 9094286 tikv_task:{proc max:83ms, min:0s, p80:47ms, p95:60ms, iters:11557, tasks:588} N/A N/A

└─IndexReader_12 root 1 index:IndexRangeScan_11 3563472 time:66h25m17.9s, loops:553011, cop_task: {num: 2997713, max: 1.64s, min: 134.4μs, avg: 812.3μs, p95: 1.41ms, max_proc_keys: 7, p95_proc_keys: 2, tot_proc: 7m30.9s, tot_wait: 7m44.3s, rpc_num: 2997713, rpc_time: 39m30.1s, copr_cache_hit_ratio: 0.00} 841 Bytes N/A

└─IndexRangeScan_11 cop[tikv] 1 table:ar, index:PRIMARY(t2_id), range: decided by [eq(dbdbdb.t2.t2_id, dbdbdb.t1.t2_id)], keep order:false