【TiDB Environment】

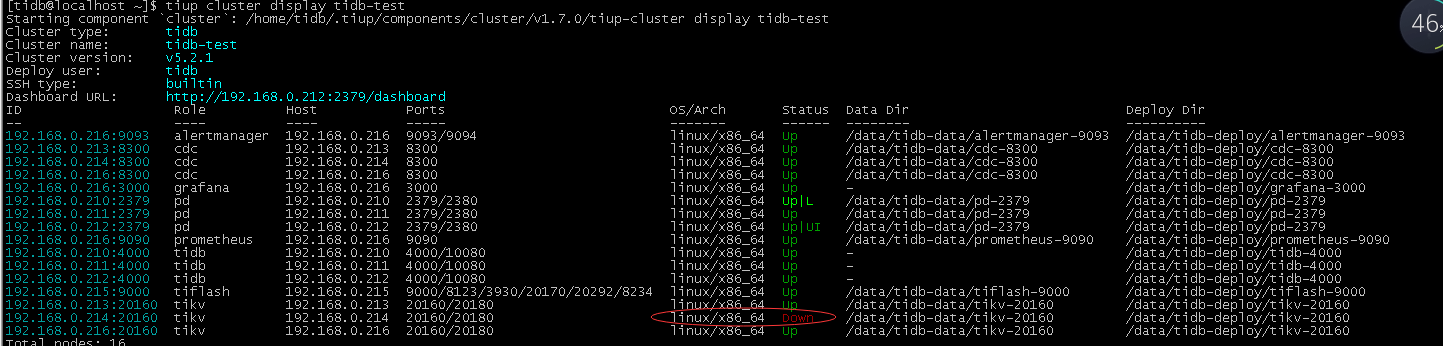

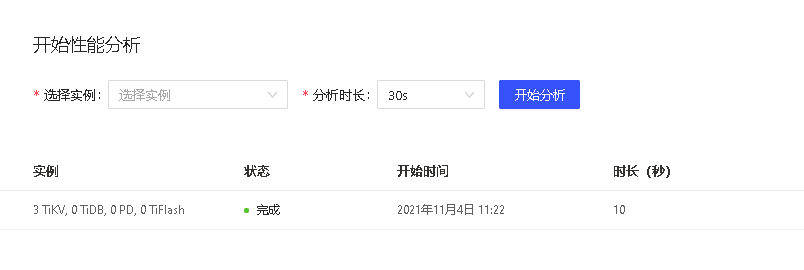

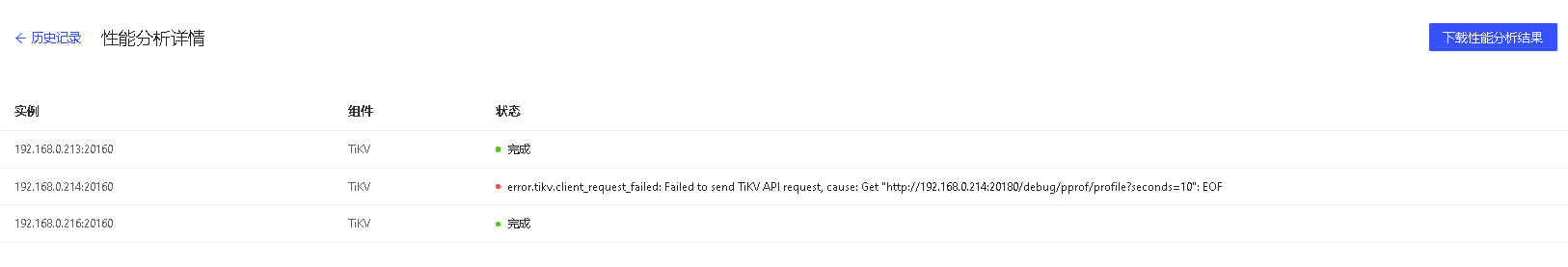

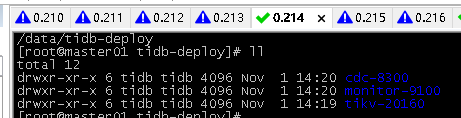

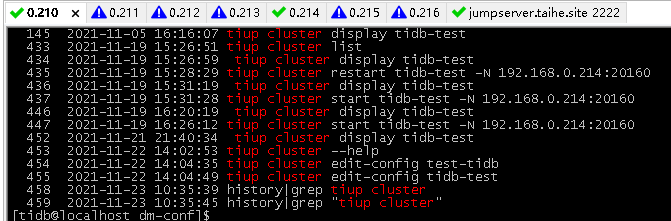

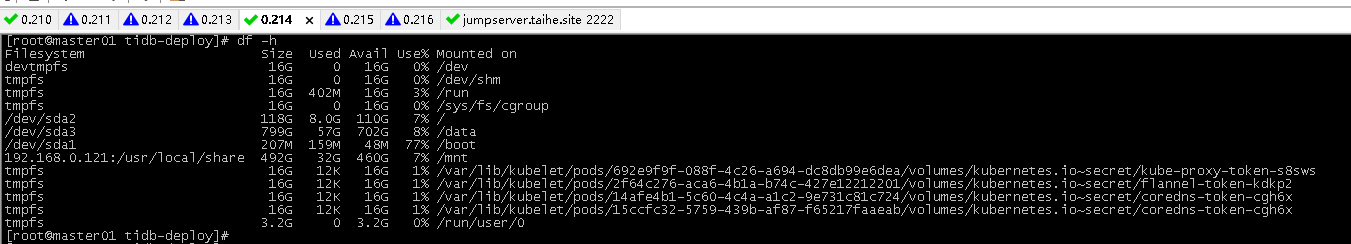

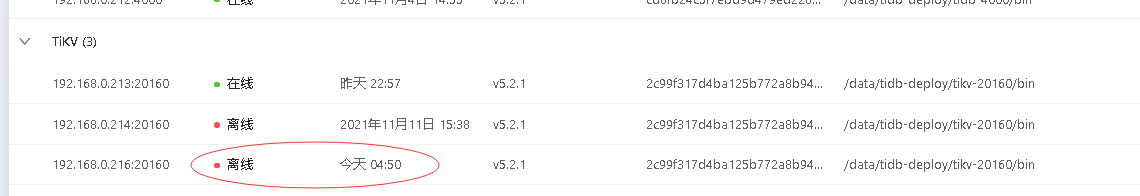

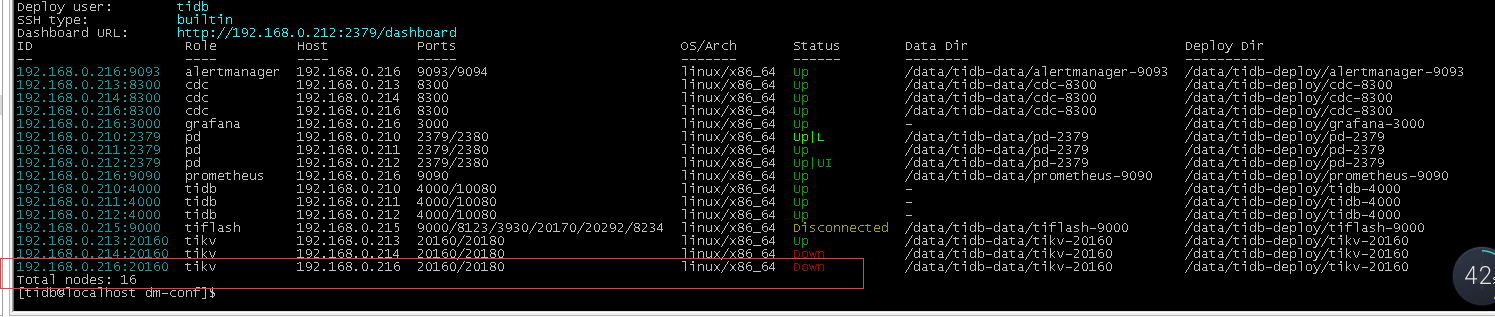

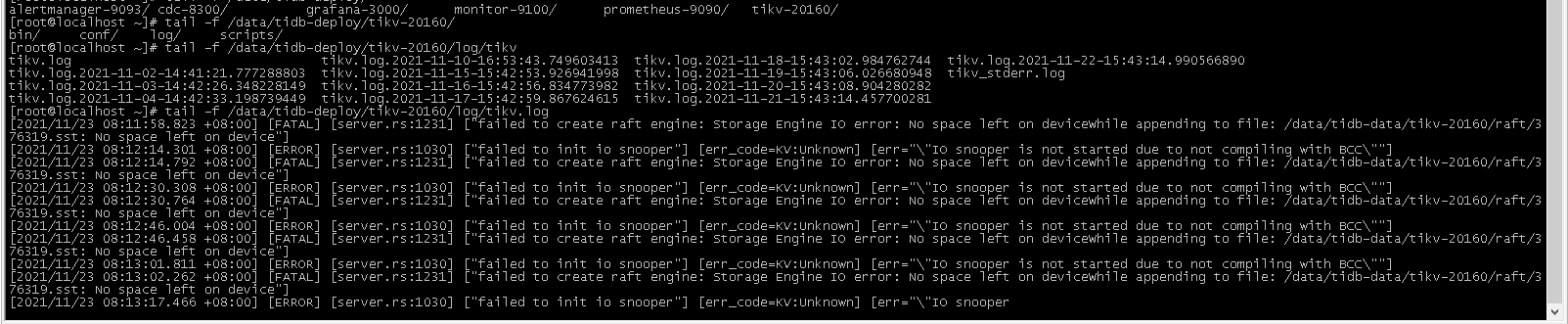

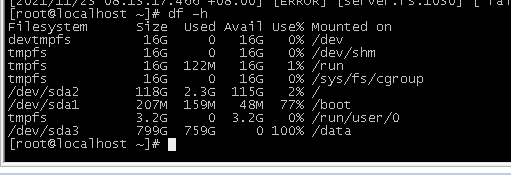

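【Overview】: The cluster has 3 TiKV nodes. One of them suddenly went down, and three attempts to restart it have all failed.

【Background】: No operations were performed beforehand; the node just went down on its own.

【Symptoms】: TiDB can still be connected to; only one TiKV node is down.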

【Problem】:

【Business Impact】:

【TiDB Version】:

【Attachments】:

- Relevant logs

- Configuration files

- Grafana monitoring (https://metricstool.pingcap.com/)

tiup-cluster-debug-2021-11-19-15-53-55.log (276.9 KB) tiup-cluster-debug-2021-11-19-15-43-58.log (436.9 KB) tiup-cluster-debug-2021-11-19-15-33-44.log (438.2 KB)

```
{
  "error": true,
  "message": "error.api.other: record not found",
  "code": "error.api.other",
  "full_text": "error.api.other: record not found\
at github.com/pingcap/tidb-dashboard/pkg/apiserver/utils.NewAPIError()\
\t/nfs/cache/mod/github.com/pingcap/tidb-dashboard@v0.0.0-20210826074103-29034af68525/pkg/apiserver/utils/error.go:67\
at github.com/pingcap/tidb-dashboard/pkg/apiserver/utils.MWHandleErrors.func1()\
\t/nfs/cache/mod/github.com/pingcap/tidb-dashboard@v0.0.0-20210826074103-29034af68525/pkg/apiserver/utils/error.go:96\
at github.com/gin-gonic/gin.(*Context).Next()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/context.go:147\
at github.com/gin-contrib/gzip.Gzip.func2()\
\t/nfs/cache/mod/github.com/gin-contrib/gzip@v0.0.1/gzip.go:47\
at github.com/gin-gonic/gin.(*Context).Next()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/context.go:147\
at github.com/gin-gonic/gin.RecoveryWithWriter.func1()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/recovery.go:83\
at github.com/gin-gonic/gin.(*Context).Next()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/context.go:147\
at github.com/gin-gonic/gin.(*Engine).handleHTTPRequest()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/gin.go:403\
at github.com/gin-gonic/gin.(*Engine).ServeHTTP()\
\t/nfs/cache/mod/github.com/gin-gonic/gin@v1.5.0/gin.go:364\
at github.com/pingcap/tidb-dashboard/pkg/apiserver.(*Service).handler()\
\t/nfs/cache/mod/github.com/pingcap/tidb-dashboard@v0.0.0-20210826074103-29034af68525/pkg/apiserver/apiserver.go:208\
at net/http.HandlerFunc.ServeHTTP()\
\t/usr/local/go/src/net/http/server.go:2069\
at github.com/pingcap/tidb-dashboard/pkg/utils.(*ServiceStatus).NewStatusAwareHandler.func1()\
\t/nfs/cache/mod/github.com/pingcap/tidb-dashboard@v0.0.0-20210826074103-29034af68525/pkg/utils/service_status.go:79\
at net/http.HandlerFunc.ServeHTTP()\
\t/usr/local/go/src/net/http/server.go:2069\
at net/http.(*ServeMux).ServeHTTP()\
\t/usr/local/go/src/net/http/server.go:2448\
at go.etcd.io/etcd/embed.(*accessController).ServeHTTP()\
\t/nfs/cache/mod/go.etcd.io/etcd@v0.5.0-alpha.5.0.20191023171146-3cf2f69b5738/embed/serve.go:359\
at net/http.serverHandler.ServeHTTP()\
\t/usr/local/go/src/net/http/server.go:2887\
at net/http.(*conn).serve()\
\t/usr/local/go/src/net/http/server.go:1952\
at runtime.goexit()\
\t/usr/local/go/src/runtime/asm_amd64.s:1371"
}
```