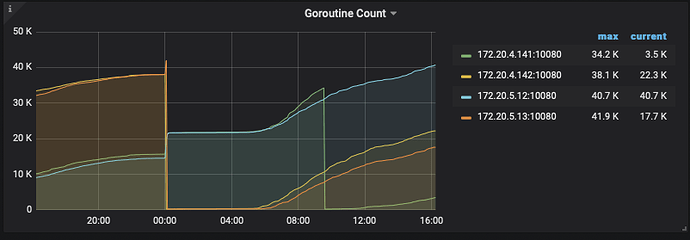

The goroutine count in monitoring is also rising continuously:

From our analysis of the goroutines so far, the stuck goroutines all end up here in grpc:

goroutine 32574899 [select, 346 minutes]:

google.golang.org/grpc.newClientStream.func5(0xc000792000, 0xc02e28b9e0, 0x38d3be0, 0xc05116b680)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/pkg/mod/google.golang.org/grpc@v1.26.0/stream.go:319 +0xd7

created by google.golang.org/grpc.newClientStream

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/pkg/mod/google.golang.org/grpc@v1.26.0/stream.go:318 +0x9a5

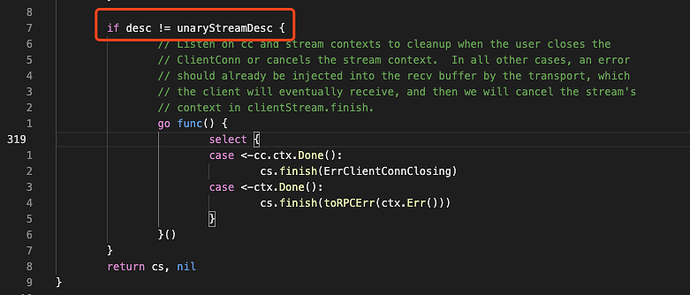

And stream.go:319 looks like this:

In other words, this is only triggered by streaming grpc calls, and the caller of this grpc call should be in store/tikv/coprocessor.go:

goroutine 32711125 [select, 344 minutes]:

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).sendToRespCh(0xc03d40b5f0, 0xc0a7ff8e10, 0xc0954cd560, 0xc057f17401, 0xc0e3936400)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:687 +0xd2

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleCopResponse(0xc03d40b5f0, 0xc02221e080, 0xc0a6be2cd0, 0xc0a7ff8e10, 0x0, 0x0, 0x0, 0x0, 0xc03d3b36c0, 0xc0954cd560, ...)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:1201 +0x60d

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleCopStreamResult(0xc03d40b5f0, 0xc02221e080, 0xc0a6be2cd0, 0xc0557326c0, 0xc03d3b36c0, 0xc0954cd560, 0x1008e0, 0x0, 0x0, 0x0, ...)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:1055 +0x16e

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce(0xc03d40b5f0, 0xc02221e080, 0xc03d3b36c0, 0xc0954cd560, 0x0, 0xc0e36a0608, 0x11baeab, 0xc038414508, 0x0)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:902 +0x676

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask(0xc03d40b5f0, 0x38d3be0, 0xc053231aa0, 0xc03d3b36c0, 0xc0954cd560)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:808 +0x1aa

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run(0xc03d40b5f0, 0x38d3be0, 0xc053231aa0)

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:538 +0xeb

created by github.com/pingcap/tidb/store/tikv.(*copIterator).open

/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:580 +0x99

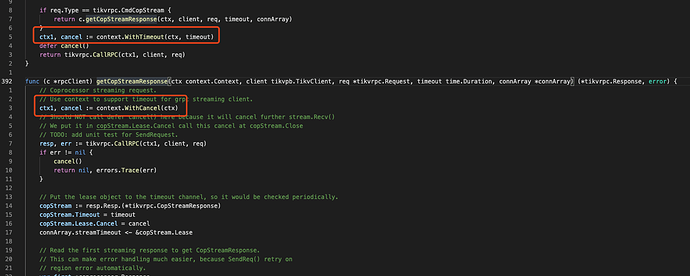

However, we could not find the code that initiates the rpc call in coprocessor.go. The code we suspect is in store/tikv/client.go, lines 392-427:

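As shown in the figure, getCopStreamResponse does not use context.WithTimeout the way an ordinary unary call does. Instead it uses context.WithCancel, which has no timeout, and the timeout parameter is applied to the copStream object further down. We have not yet figured out what that object is for.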

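Digging any deeper is very hard for us at our current level. Are there any PingCAP QA or R&D engineers here? This issue should be reproducible: set a relatively small memory limit and run the Stream statistics multiple times.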