Bug Report

TiCDC has two problems

【TiDB Version】7.1.0

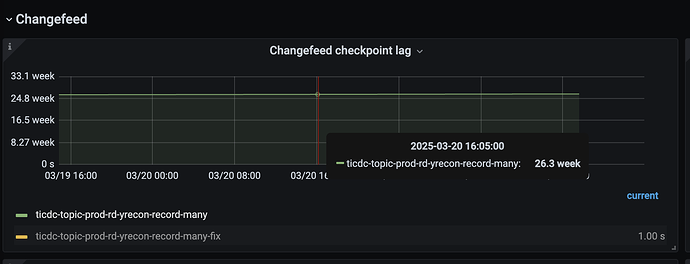

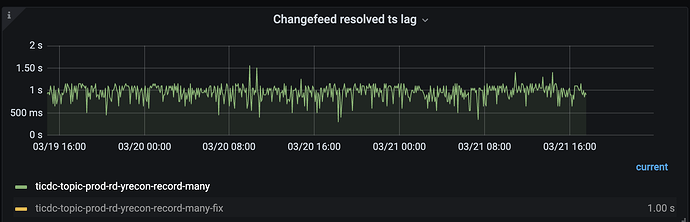

【Impact】TiCDC replication is not advancing

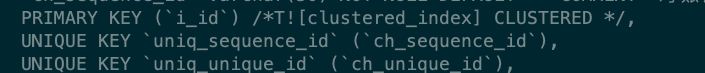

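Problem 1: The changefeed reports errors, and as a result some table data is not replicated (1 of the 5 tables is not being replicated).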

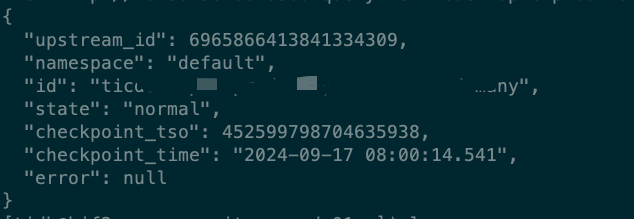

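The TSO is not advancing; it is still a TSO from several months ago. (The changefeed state is normal and no alert was raised, although in theory there should have been an alert.)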

[2025/03/21 16:51:02.061 +08:00] [WARN] [metrics_collector.go:96] ["Get Kafka brokers failed, use historical brokers to collect kafka broker level metrics"] [namespace=default] [changefeed=ticdc-topic] [role=processor] [duration=157.575642ms] [error="[CDC:ErrReachMaxTry]reach maximum try: 3, error: kafka: tried to use a client that was closed: kafka: tried to use a client that was closed"] [errorVerbose="[CDC:ErrReachMaxTry]reach maximum try: 3, error: kafka: tried to use a client that was closed: kafka: tried to use a client that was closed\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/errors.go:174\ngithub.com/pingcap/errors.(*Error).GenWithStackByArgs\n\tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/normalize.go:164\ngithub.com/pingcap/tiflow/pkg/retry.run\n\tgithub.com/pingcap/tiflow/pkg/retry/retry_with_opt.go:69\ngithub.com/pingcap/tiflow/pkg/retry.Do\n\tgithub.com/pingcap/tiflow/pkg/retry/retry_with_opt.go:34\ngithub.com/pingcap/tiflow/pkg/sink/kafka.(*saramaAdminClient).queryClusterWithRetry\n\tgithub.com/pingcap/tiflow/pkg/sink/kafka/admin.go:92\ngithub.com/pingcap/tiflow/pkg/sink/kafka.(*saramaAdminClient).GetAllBrokers\n\tgithub.com/pingcap/tiflow/pkg/sink/kafka/admin.go:130\ngithub.com/pingcap/tiflow/pkg/sink/kafka.(*saramaMetricsCollector).updateBrokers\n\tgithub.com/pingcap/tiflow/pkg/sink/kafka/metrics_collector.go:94\ngithub.com/pingcap/tiflow/pkg/sink/kafka.(*saramaMetricsCollector).Run\n\tgithub.com/pingcap/tiflow/pkg/sink/kafka/metrics_collector.go:85\nruntime.goexit\n\truntime/asm_amd64.s:1598"]

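Problem 2: After following the official solution, TiCDC cannot recognize the Kafka version, even though I have confirmed that the Kafka version (2.12-2.2.1) is correct.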

-- Sink configuration

protocol=canal-json&max-message-bytes=67108864&replication-factor=2&partition-num=8&kafka-version=2.12

Error: ErrKafkaInvalidVersion]invalid kafka version: invalid version `2.12
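One thing that may be worth checking for Problem 2. In Apache Kafka release names such as `kafka_2.12-2.2.1`, `2.12` is the Scala version the build targets and `2.2.1` is the Kafka version itself. The error text above looks like the message produced by Sarama's `ParseKafkaVersion`. Assuming TiCDC passes `kafka-version` to that function, it expects a three-part `major.minor.patch` string for Kafka 1.0 and later, so `2.12` would be rejected while `2.2.1` would not. This is an unconfirmed hypothesis.

The Go sketch below illustrates the idea. It splits the release name and checks the `kafka-version` query parameter of a sink URI against the x.y.z pattern. The regex only approximates Sarama's check. The broker address `127.0.0.1:9092`, the topic `example-topic`, and the helper names are hypothetical; only the query parameters come from the configuration above.

```go
package main

import (
	"fmt"
	"net/url"
	"regexp"
	"strings"
)

// kafkaVersionPattern approximates the major.minor.patch form accepted for
// Kafka 1.0+ (an assumption; 0.x versions use a four-part form, not covered).
var kafkaVersionPattern = regexp.MustCompile(`^\d+\.\d+\.\d+$`)

// splitKafkaDistName splits a release name like "kafka_2.12-2.2.1" into
// the Scala build version and the Kafka version.
func splitKafkaDistName(name string) (string, string, error) {
	if !strings.HasPrefix(name, "kafka_") {
		return "", "", fmt.Errorf("unexpected release name %q", name)
	}
	scala, kafka, ok := strings.Cut(strings.TrimPrefix(name, "kafka_"), "-")
	if !ok {
		return "", "", fmt.Errorf("unexpected release name %q", name)
	}
	return scala, kafka, nil
}

// kafkaVersionParam returns the kafka-version query parameter of a sink URI
// and whether it has the x.y.z shape.
func kafkaVersionParam(sinkURI string) (string, bool, error) {
	u, err := url.Parse(sinkURI)
	if err != nil {
		return "", false, err
	}
	v := u.Query().Get("kafka-version")
	return v, kafkaVersionPattern.MatchString(v), nil
}

func main() {
	scala, kafka, err := splitKafkaDistName("kafka_2.12-2.2.1")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("Scala build: %s, Kafka version: %s\n", scala, kafka)

	// Hypothetical broker address and topic; the query string is from the post.
	const query = "protocol=canal-json&max-message-bytes=67108864&replication-factor=2&partition-num=8"
	for _, v := range []string{"2.12", kafka} {
		uri := "kafka://127.0.0.1:9092/example-topic?" + query + "&kafka-version=" + v
		got, ok, err := kafkaVersionParam(uri)
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("kafka-version=%s -> x.y.z form: %v\n", got, ok)
	}
}
```

Running it prints `Scala build: 2.12, Kafka version: 2.2.1`, then reports `2.12` as not matching and `2.2.1` as matching. If the hypothesis holds, trying `kafka-version=2.2.1` in the sink URI would be the next step.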