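[TiDB environment] Production / Test / PoC

[TiDB version]

[Reproduction steps] What operations were performed before the problem appeared

[Problem: symptoms and impact]

[Resource configuration] Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of that page

[Attachments: screenshots / logs / monitoring]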

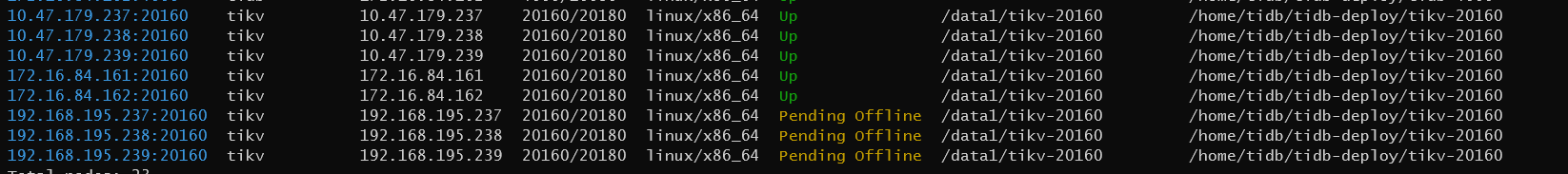

There are 8 TiKV nodes. I scaled in 3 of them, and they have been stuck in the Pending Offline state ever since.

Checking with pd-ctl:

```
» store 1
{
  "store": {
    "id": 1,
    "address": "192.168.195.238:20160",
    "version": "6.5.2",
    "peer_address": "192.168.195.238:20160",
    "status_address": "192.168.195.238:20180",
    "git_hash": "a29f525cec48a801e9d8b1748356a88385bcfd33",
    "start_timestamp": 1708917830,
    "deploy_path": "/home/tidb/tidb-deploy/tikv-20160/bin",
    "last_heartbeat": 1708929042072904460,
    "node_state": 2,
    "state_name": "Offline"
  },
  "status": {
    "capacity": "1.718TiB",
    "available": "1.603TiB",
    "used_size": "8.775GiB",
    "leader_count": 1,
    "leader_weight": 1,
    "leader_score": 1,
    "leader_size": 4,
    "region_count": 6,
    "region_weight": 1,
    "region_score": 24.55325300705284,
    "region_size": 21,
    "slow_score": 1,
    "start_ts": "2024-02-26T11:23:50+08:00",
    "last_heartbeat_ts": "2024-02-26T14:30:42.07290446+08:00",
    "uptime": "3h6m52.07290446s"
  }
}
```

Restarting TiKV didn't help either. How can I fix this?

```
[2024/02/26 14:24:31.883 +08:00] [WARN] [kv.rs:958] ["call GetStoreSafeTS failed"] [err=Grpc(RemoteStopped)]
[2024/02/26 14:30:33.484 +08:00] [INFO] [util.rs:598] ["connecting to PD endpoint"] [endpoints=http://10.47.179.239:2379]
[2024/02/26 14:30:35.880 +08:00] [WARN] [kv.rs:958] ["call GetStoreSafeTS failed"] [err=Grpc(RemoteStopped)]
```

The TiKV log keeps showing this. Does it matter?

```
pd-ctl operator add remove-peer 167326 8
```

After running this, I checked region 167326 again:

```
» region 167326
{
  "id": 167326,
  "start_key": "7480000000000000FFE000000000000000F8",
  "end_key": "7480000000000000FFE100000000000000F8",
  "epoch": {
    "conf_ver": 66,
    "version": 171
  },
  "peers": [
    {
      "id": 167327,
      "store_id": 9,
      "role_name": "Voter"
    },
    {
      "id": 167328,
      "store_id": 2,
      "role_name": "Voter"
    }
  ],
  "leader": {
    "id": 167328,
    "store_id": 2,
    "role_name": "Voter"
  },
  "cpu_usage": 0,
  "written_bytes": 162,
  "read_bytes": 0,
  "written_keys": 3,
  "read_keys": 0,
  "approximate_size": 1,
  "approximate_keys": 0
}
```

Does this mean the region now has only 2 replicas? Shouldn't it have 3 to be normal?
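You can answer this from the pd-ctl output by counting the Voter peers in the region and comparing that number with the replica count you expect. The minimal sketch below assumes PD's default `max-replicas` of 3. Ranges governed by a placement policy may expect a different number; for example, `FOLLOWERS=4` means 1 leader plus 4 followers. The helper names are hypothetical, and the sample is a trimmed copy of the region 167326 output.

```python
import json

# Assumption: the cluster uses PD's default max-replicas of 3.
EXPECTED_REPLICAS = 3


def voter_count(region_json: str) -> int:
    """Count peers whose role is Voter in `pd-ctl region <id>` output (hypothetical helper)."""
    region = json.loads(region_json)
    # Assumption: a peer without role_name is treated as a Voter.
    return sum(1 for p in region.get("peers", []) if p.get("role_name", "Voter") == "Voter")


def is_under_replicated(region_json: str, expected: int = EXPECTED_REPLICAS) -> bool:
    """True if the region has fewer voters than the expected replica count."""
    return voter_count(region_json) < expected


# Trimmed copy of the region 167326 output from this thread.
sample = """{
  "id": 167326,
  "peers": [
    {"id": 167327, "store_id": 9, "role_name": "Voter"},
    {"id": 167328, "store_id": 2, "role_name": "Voter"}
  ],
  "leader": {"id": 167328, "store_id": 2, "role_name": "Voter"}
}"""

print(voter_count(sample), is_under_replicated(sample))  # 2 True
```

If PD is healthy and the replica requirements can still be satisfied, it should normally add a replacement peer on its own. A region that stays at 2 voters is therefore worth checking against the label and placement constraints.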

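Experts, is it OK to schedule manually with `pd-ctl operator add remove-peer` like this? Will doing it this way reduce the replicas from 3 to 2? Or is there another way to solve this?

region_count hasn't changed for several hours, and the TiKV stores are still Pending Offline, so I tried removing a replica manually.

If the leaders (primary replicas) are not on the nodes being taken offline, you could consider removing those nodes manually with `pd-ctl store delete <store_id>`.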
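The suggestion above depends on one condition: the store should hold no leaders. A quick pre-check can read `leader_count` from the `pd-ctl store <store_id>` JSON. The sketch below is a hypothetical helper, and its two samples are trimmed copies of the store 1 and store 8 outputs in this thread. It only reports the counts. It does not decide for you whether `store delete` is appropriate.

```python
import json

# Trimmed copies of the `pd-ctl store 1` and `pd-ctl store 8` outputs from this thread.
STORE_1 = '{"store": {"id": 1, "state_name": "Offline"}, "status": {"leader_count": 1, "region_count": 6}}'
STORE_8 = '{"store": {"id": 8, "state_name": "Offline"}, "status": {"leader_count": 0, "region_count": 5}}'


def leader_free(store_json: str) -> bool:
    """True if the store reports zero Region leaders (hypothetical pre-check)."""
    status = json.loads(store_json).get("status", {})
    return status.get("leader_count", 0) == 0


def summary(store_json: str) -> str:
    """One-line summary of the store's state, leader count and region count."""
    info = json.loads(store_json)
    store, status = info["store"], info.get("status", {})
    return (f"store {store['id']} ({store['state_name']}): "
            f"leaders={status.get('leader_count', 0)}, regions={status.get('region_count', 0)}")


for raw in (STORE_1, STORE_8):
    print(summary(raw), "-> leader-free" if leader_free(raw) else "-> still has leaders")
```

A store with no leaders can still hold follower replicas, as store 8 does with `region_count` 5. Those replicas still have to be moved elsewhere before the store can become Tombstone.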

```
» store delete 8
Success!
» store 8
{
  "store": {
    "id": 8,
    "address": "192.168.195.237:20160",
    "version": "6.5.2",
    "peer_address": "192.168.195.237:20160",
    "status_address": "192.168.195.237:20180",
    "git_hash": "a29f525cec48a801e9d8b1748356a88385bcfd33",
    "start_timestamp": 1708928437,
    "deploy_path": "/home/tidb/tidb-deploy/tikv-20160/bin",
    "last_heartbeat": 1708932378530704092,
    "node_state": 2,
    "state_name": "Offline"
  },
  "status": {
    "capacity": "1.718TiB",
    "available": "1.608TiB",
    "used_size": "4.024GiB",
    "leader_count": 0,
    "leader_weight": 1,
    "leader_score": 0,
    "leader_size": 0,
    "region_count": 5,
    "region_weight": 1,
    "region_score": 18.702520077290437,
    "region_size": 16,
    "slow_score": 1,
    "start_ts": "2024-02-26T14:20:37+08:00",
    "last_heartbeat_ts": "2024-02-26T15:26:18.530704092+08:00",
    "uptime": "1h5m41.530704092s"
  }
}
```

It doesn't seem to have had any effect. The log still shows only this:

```
[2024/02/26 15:28:13.885 +08:00] [WARN] [kv.rs:958] ["call GetStoreSafeTS failed"] [err=Grpc(RemoteStopped)]
[2024/02/26 15:30:34.428 +08:00] [INFO] [util.rs:598] ["connecting to PD endpoint"] [endpoints=http://10.47.179.239:2379]
[2024/02/26 15:31:15.880 +08:00] [WARN] [kv.rs:958] ["call GetStoreSafeTS failed"] [err=Grpc(RemoteStopped)]
```

小龙虾爱大龙虾

(Minghao Ren)


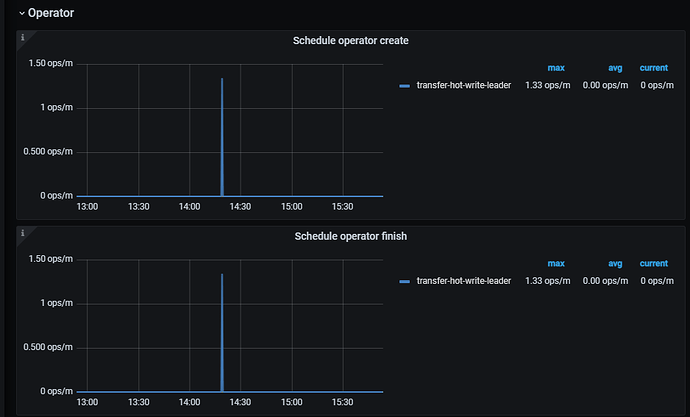

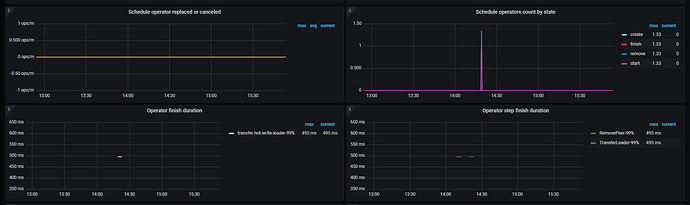

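Check the PD dashboard in Grafana, which shows the offline progress. Also check whether the region count on these 3 offline nodes is decreasing. Once it drops to 0, the 3 nodes have finished going offline.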
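Following that advice, you can also track progress outside Grafana. Take a few `region_count` readings from `pd-ctl store <store_id>` over time and compare the first reading with the last. The sketch below is a hypothetical helper: the store IDs 101, 102 and 103 and their counts are made up for illustration. It only classifies each store as done, draining, or not decreasing.

```python
from typing import Dict, List


def offline_progress(snapshots: List[Dict[int, int]]) -> Dict[int, str]:
    """Classify offline stores from region_count snapshots ordered oldest first.

    Each snapshot maps store_id -> region_count (hypothetical input format).
    """
    first, last = snapshots[0], snapshots[-1]
    result: Dict[int, str] = {}
    for store_id, count in last.items():
        if count == 0:
            result[store_id] = "done"            # nothing left to migrate
        elif count < first.get(store_id, count):
            result[store_id] = "draining"        # regions are moving off
        else:
            result[store_id] = "not decreasing"  # stalled (or even growing)
    return result


# Hypothetical readings taken an hour apart.
readings = [{101: 6, 102: 5, 103: 4}, {101: 6, 102: 3, 103: 0}]
print(offline_progress(readings))
```

A store that stays "not decreasing" for hours, like the ones in this thread, usually means PD is not generating operators for it. The next step is to find out why.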

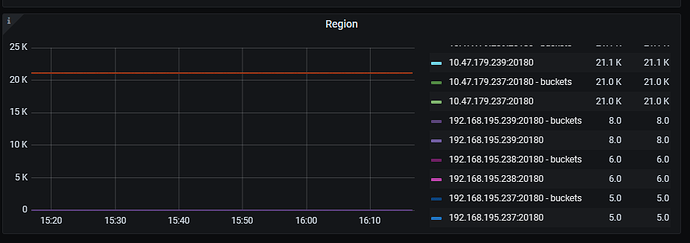

Are these the panels you mean? The region count doesn't seem to be changing either.

小龙虾爱大龙虾

(Minghao Ren)


It looks like no balance scheduling operators are being generated. Can you check whether those 3 nodes still have regions on them?
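pd-ctl can list the regions that still have a peer on a given store with `region store <store_id>`. The sketch below assumes that output is JSON shaped like `{"count": N, "regions": [...]}`, where each region carries a `leader` object. Please verify this against your pd-ctl version. The helper name and the region IDs 1001 and 1002 in the sample are hypothetical.

```python
import json
from typing import List, Tuple


def regions_on_store(output: str, store_id: int) -> List[Tuple[int, bool]]:
    """Return (region_id, leader_is_on_this_store) pairs.

    Assumes the `pd-ctl region store <store_id>` output shape {"count": N, "regions": [...]}.
    """
    data = json.loads(output)
    pairs: List[Tuple[int, bool]] = []
    for region in data.get("regions") or []:  # tolerate a missing or null list
        leader_store = (region.get("leader") or {}).get("store_id")
        pairs.append((region["id"], leader_store == store_id))
    return pairs


# Hypothetical output for store 8; the region IDs are made up for illustration.
sample = json.dumps({
    "count": 2,
    "regions": [
        {"id": 1001, "peers": [{"store_id": 8}, {"store_id": 2}, {"store_id": 9}], "leader": {"store_id": 2}},
        {"id": 1002, "peers": [{"store_id": 8}, {"store_id": 3}, {"store_id": 9}], "leader": {"store_id": 8}},
    ],
})

for region_id, leader_here in regions_on_store(sample, 8):
    print(region_id, "leader on this store" if leader_here else "follower on this store")
```

The region IDs this returns can then be inspected one by one with `pd-ctl region <id>` to see which placement constraints they are under.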

小龙虾爱大龙虾

(Minghao Ren)


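Have you set up any labels or placement rules? Can those requirements still be satisfied after the nodes go offline?

Yes, I have. But according to `show placement`, no policy uses the labels of the 3 nodes being taken offline.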
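To make that question concrete, check whether the stores that remain after scale-in can still host every replica a policy asks for. The sketch below is a rough, hypothetical feasibility check for `SCHEDULE="MAJORITY_IN_PRIMARY"`. It makes three assumptions: one store per host, at most one replica per host, and that a majority of voters must sit in the primary region. The host names h1 to h5 and their region labels are made up, because the real host-to-region mapping is not shown in this thread. The follower count is passed explicitly, since policies without `FOLLOWERS` fall back to a default you should confirm for your version.

```python
from typing import Dict, List, Set


def can_place(stores: List[Dict[str, str]], primary: str, regions: List[str], followers: int) -> bool:
    """Rough check that a MAJORITY_IN_PRIMARY policy still fits the remaining stores."""
    replicas = followers + 1          # 1 leader + N followers
    majority = replicas // 2 + 1      # voters that must live in the primary region
    hosts: Dict[str, Set[str]] = {}
    for store in stores:
        hosts.setdefault(store["region"], set()).add(store["host"])
    in_primary = len(hosts.get(primary, set()))
    in_allowed = sum(len(hosts.get(r, set())) for r in set(regions))
    return in_primary >= majority and in_allowed >= replicas


# Hypothetical remaining stores after scale-in (names and labels are made up).
REMAINING = [
    {"host": "h1", "region": "a"},
    {"host": "h2", "region": "a"},
    {"host": "h3", "region": "a"},
    {"host": "h4", "region": "b"},
    {"host": "h5", "region": "b"},
]

print(can_place(REMAINING, "a", ["a", "b"], 4))  # like p1: 5 replicas, 3 in region a
print(can_place(REMAINING, "b", ["a", "b"], 2))  # like p2: 3 replicas, 2 in region b
```

If a check like this fails for any policy, or for PD's default replication rule, PD cannot find a target store for the peers on the offline nodes. Those stores then stay in Offline.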

| Target | Placement | Scheduling_State |
|---|---|---|
| POLICY p1 | `PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY" FOLLOWERS=4` | NULL |
| POLICY p-other | `PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY"` | NULL |
| POLICY p-test | `PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY"` | NULL |
| POLICY p2 | `PRIMARY_REGION="b" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY" FOLLOWERS=2` | NULL |

`show placement labels;`

| Key | Values |
|---|---|
| host | `["m179-237", "m179-238", "m179-239", "m195-237", "m195-238", "m195-239", "m84-161", "m84-162"]` |
| region | `["c", "a", "b"]` |
| zone | `["c", "a", "b"]` |

3 rows in set (0.06 sec)

```yaml
tikv_servers:
  - host: 192.168.195.237
    ssh_port: 56789
    port: 20160
    status_port: 20180
    deploy_dir: /home/tidb/tidb-deploy/tikv-20160
    data_dir: /data1/tikv-20160
    log_dir: /home/tidb/tidb-deploy/tikv-20160/log
    offline: true
    config:
      server.labels:
        host: m195-237
        region: c
        zone: c
    arch: amd64
    os: linux
```

That is the current label and placement configuration.
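The claim that no policy applies to the offline nodes can be checked mechanically. Parse each `Placement` string from `show placement`, then test whether it references the offline store's `region` label value (`c` for 192.168.195.237). The sketch below is a hypothetical helper that uses the four policies listed in this thread. It only inspects the policy text, so it says nothing about other placement rules or PD's default replication settings.

```python
import re
from typing import Dict, List

# Placement strings copied from the `show placement` output in this thread.
POLICIES = {
    "p1": 'PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY" FOLLOWERS=4',
    "p-other": 'PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY"',
    "p-test": 'PRIMARY_REGION="a" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY"',
    "p2": 'PRIMARY_REGION="b" REGIONS="a,b" SCHEDULE="MAJORITY_IN_PRIMARY" FOLLOWERS=2',
}


def parse_placement(placement: str) -> Dict[str, str]:
    """Parse KEY="value" and KEY=value pairs from a Placement string."""
    return {k: v.strip('"') for k, v in re.findall(r'(\w+)=("[^"]*"|\S+)', placement)}


def policies_using_region(policies: Dict[str, str], region_value: str) -> List[str]:
    """Names of policies whose REGIONS or PRIMARY_REGION mention region_value."""
    hits = []
    for name, placement in policies.items():
        opts = parse_placement(placement)
        allowed = opts.get("REGIONS", "").split(",") + [opts.get("PRIMARY_REGION", "")]
        if region_value in allowed:
            hits.append(name)
    return hits


print(policies_using_region(POLICIES, "c"))  # region label of the offline store
print(policies_using_region(POLICIES, "b"))
```

An empty result for `c` matches what was reported here. It also means the remaining replicas of policy-governed data must fit entirely into regions `a` and `b`.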