【TiDB environment】Production

【TiDB version】TiDB deployed with k8s + KubeSphere + TiDB Operator

【Reproduction steps】Add a TiKV pod

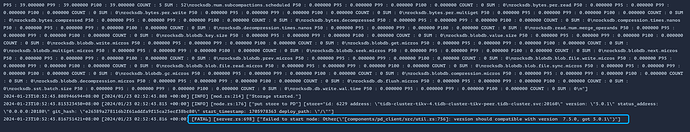

【Problem: symptoms and impact】`[FATAL] [server.rs:698] ["failed to start node: Other("[components/pd_client/src/util.rs:756]: version should compatible with version 7.5.0, got 5.0.1")"]`
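The error comes from TiKV's PD client: the new store reported version 5.0.1 and was judged incompatible with the cluster version 7.5.0, so it refused to start. The sketch below illustrates that kind of gate. The rule it uses (a store is accepted if it is newer than the cluster version or on the same major.minor line) is an assumption for illustration, not PD's verified implementation; check the PD source for the exact rule.

```python
from typing import Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """Parse 'v7.5.0' or '7.5.0' into (major, minor, patch); any '-suffix' is dropped."""
    core = text.lstrip("v").split("-")[0]
    major, minor, patch = (int(part) for part in core.split("."))
    return major, minor, patch


def store_is_compatible(cluster_version: str, store_version: str) -> bool:
    """ASSUMED simplified rule: accept a store that is newer than the cluster,
    or that shares the cluster's major.minor release line."""
    cluster = parse_version(cluster_version)
    store = parse_version(store_version)
    return cluster < store or cluster[:2] == store[:2]


if __name__ == "__main__":
    for store in ("5.0.1", "7.5.0", "7.5.1"):
        verdict = "ok" if store_is_compatible("7.5.0", store) else "rejected"
        print(f"store {store} joining a 7.5.0 cluster: {verdict}")
```

Under this assumed rule a 5.0.1 store is rejected by a 7.5.0 cluster, which matches the FATAL line: the fix is to get the TiKV image onto the cluster's version rather than to relax the check.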

【Resource configuration】

【Attachments: screenshots/logs/monitoring】

Are the component versions inconsistent?

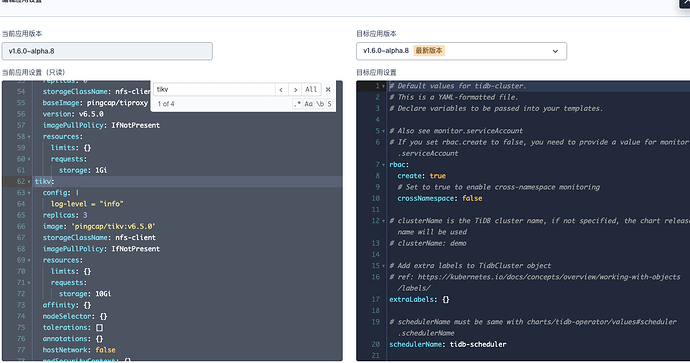

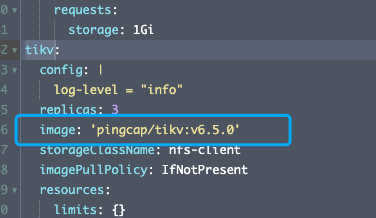

Hi, where can I configure the TiKV version? I deployed with TiDB Operator (v1.6.0-alpha.8), using the default configuration throughout.

Try switching all images to 7.5.

| TiDB version | Compatible TiDB Operator versions |
|---|---|
| dev | dev |
| TiDB >= 7.1 | 1.5 (recommended), 1.4 |
| 6.5 <= TiDB < 7.1 | 1.5, 1.4 (recommended), 1.3 |
| 5.4 <= TiDB < 6.5 | 1.4, 1.3 (recommended) |
| 5.1 <= TiDB < 5.4 | 1.4, 1.3 (recommended), 1.2 |
| 3.0 <= TiDB < 5.1 | 1.4, 1.3 (recommended), 1.2, 1.1 |
| 2.1 <= TiDB < 3.0 | 1.0 (no longer maintained) |
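The table can be turned into a small lookup for checking a planned upgrade. This is only a transcription of the rows above (the `dev` row is left out); `COMPAT_TABLE` and `operator_versions` are hypothetical names for this sketch. Note that the operator release used in this thread (v1.6.0-alpha.8) does not appear in the table.

```python
from typing import List, Optional, Tuple

Version = Tuple[int, int]

# (lower bound inclusive, upper bound exclusive or None, compatible operators, recommended)
# Transcribed from the compatibility table above; the "dev" row is omitted.
COMPAT_TABLE: List[Tuple[Version, Optional[Version], List[str], Optional[str]]] = [
    ((7, 1), None, ["1.5", "1.4"], "1.5"),
    ((6, 5), (7, 1), ["1.5", "1.4", "1.3"], "1.4"),
    ((5, 4), (6, 5), ["1.4", "1.3"], "1.3"),
    ((5, 1), (5, 4), ["1.4", "1.3", "1.2"], "1.3"),
    ((3, 0), (5, 1), ["1.4", "1.3", "1.2", "1.1"], "1.3"),
    ((2, 1), (3, 0), ["1.0"], None),  # 1.0 is no longer maintained
]


def operator_versions(tidb_version: str) -> Tuple[List[str], Optional[str]]:
    """Return (compatible operator versions, recommended one) for a TiDB version."""
    major, minor = (int(part) for part in tidb_version.lstrip("v").split(".")[:2])
    version = (major, minor)
    for low, high, compatible, recommended in COMPAT_TABLE:
        if version >= low and (high is None or version < high):
            return compatible, recommended
    raise ValueError(f"TiDB {tidb_version} is not covered by the table")


if __name__ == "__main__":
    for v in ("v7.5.0", "v6.5.0", "v5.0.1"):
        print(v, operator_versions(v))
```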

OK 👌 thanks for the reply 🙏 I'll give it a try.

Replace the 5.x version with 7.x.

Hi, thanks for the reply 🙏 Is it safe to change image versions freely in a production environment? Could data be lost?

The images in the YAML config for TiKV, TiDB, and PD are all v6.5.0, but after the pod starts, TiKV somehow ends up on 5.0.1.

Run `kubectl describe pod` and check whether the TiKV image really is 5.0.1.

```
Name:             tidb-cluster-tikv-4
Namespace:        tidb-cluster
Priority:         0
Node:             node3/192.168.0.7
Start Time:       Tue, 23 Jan 2024 18:04:29 +0800
Labels:           app.kubernetes.io/component=tikv
                  app.kubernetes.io/instance=tidb-cluster
                  app.kubernetes.io/managed-by=tidb-operator
                  app.kubernetes.io/name=tidb-cluster
                  controller-revision-hash=tidb-cluster-tikv-5cc88d8bbf
                  statefulset.kubernetes.io/pod-name=tidb-cluster-tikv-4
                  tidb.pingcap.com/cluster-id=7310155728820563327
Annotations:      cni.projectcalico.org/containerID: 908b4d126bc04b62d689a52c3ede5b03fb4e46b076c851a86365b3c9ea505fd3
                  cni.projectcalico.org/podIP: 10.233.92.49/32
                  cni.projectcalico.org/podIPs: 10.233.92.49/32
                  prometheus.io/path: /metrics
                  prometheus.io/port: 20180
                  prometheus.io/scrape: true
Status:           Running
IP:               10.233.92.49
IPs:
  IP:           10.233.92.49
Controlled By:  StatefulSet/tidb-cluster-tikv
Containers:
  tikv:
    Container ID:  containerd://5533c31def1bedbeee0ff9862cdbc5e66a3ef1f59804a98ca21d9e309e9fd345
    Image:         pingcap/tikv
    Image ID:      docker.io/pingcap/tikv@sha256:2b0992519eb2cabdf22291a7066c0ab5cb93373825366c5b6cf97b273eb2cb53
    Port:          20160/TCP
    Host Port:     0/TCP
    Command:
      /bin/sh
      /usr/local/bin/tikv_start_script.sh
    State:          Waiting
      Reason:       CrashLoopBackOff
    Last State:     Terminated
      Reason:       Error
      Exit Code:    1
      Started:      Wed, 24 Jan 2024 10:59:13 +0800
      Finished:     Wed, 24 Jan 2024 10:59:13 +0800
    Ready:          False
    Restart Count:  202
    Environment:
      NAMESPACE:              tidb-cluster (v1:metadata.namespace)
      CLUSTER_NAME:           tidb-cluster
      HEADLESS_SERVICE_NAME:  tidb-cluster-tikv-peer
      CAPACITY:               0
      TZ:                     UTC
    Mounts:
      /etc/podinfo from annotations (ro)
      /etc/tikv from config (ro)
      /usr/local/bin from startup-script (ro)
      /var/lib/tikv from tikv (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2hhv5 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  tikv:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  tikv-tidb-cluster-tikv-4
    ReadOnly:   false
  annotations:
    Type:  DownwardAPI (a volume populated by information about the pod)
    Items:
      metadata.annotations -> annotations
  config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      tidb-cluster-tikv-1c8d5543
    Optional:  false
  startup-script:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      tidb-cluster-tikv-1c8d5543
    Optional:  false
  kube-api-access-2hhv5:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason   Age                     From     Message
  Warning  BackOff  2m39s (x4655 over 16h)  kubelet  Back-off restarting failed container
```

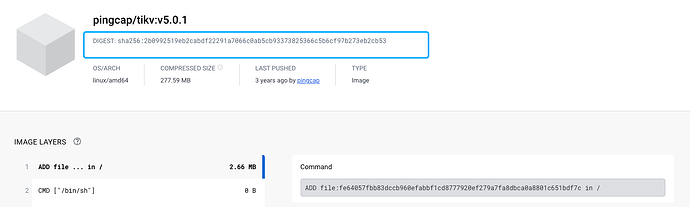

It doesn't seem to show the specific image version, only a hash (the sha256 digest).

It really is 5.0.1; the sha256 matches.

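In the TidbCluster, don't put a version in the image paths; instead, specify a single version in `spec.version`. Something like the example below, where I removed everything else and kept only the images and the version. That way all components use the same version. The example is taken from: https://github.com/pingcap/tidb-operator/blob/master/examples/basic-random-password/tidb-cluster.yaml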

```yaml
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basic
spec:
  version: v7.1.1
  helper:
    image: alpine:3.16.0
  pd:
    baseImage: pingcap/pd
  tikv:
    baseImage: pingcap/tikv
  tidb:
    baseImage: pingcap/tidb
```
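As I understand the tidb-operator source (worth verifying against the operator release you run), each component's image is built from `baseImage` plus a tag taken from the component's own `version` or, if that is unset, from `spec.version`; when `baseImage` is set it takes priority over the older `image` field, and an empty version yields an untagged reference. A Python sketch of that assumed rule, with plain dicts standing in for the parsed TidbCluster `spec` (`effective_image` is a hypothetical name):

```python
from typing import Any, Dict


def effective_image(spec: Dict[str, Any], component: str) -> str:
    """Sketch of the ASSUMED operator rule for resolving spec.<component>'s image."""
    comp = spec.get(component) or {}
    image = comp.get("image", "")
    base_image = comp.get("baseImage", "")
    if base_image:  # assumed: baseImage takes priority over the `image` field
        version = comp.get("version")
        if version is None:  # no per-component version: fall back to spec.version
            version = spec.get("version", "")
        image = f"{base_image}:{version}" if version else base_image
    return image


if __name__ == "__main__":
    spec = {
        "version": "v7.1.1",
        "pd": {"baseImage": "pingcap/pd"},
        "tikv": {"baseImage": "pingcap/tikv"},
        "tidb": {"baseImage": "pingcap/tidb"},
    }
    for name in ("pd", "tikv", "tidb"):
        print(name, effective_image(spec, name))
```

With the example above every component resolves to `...:v7.1.1`. If `spec.version` is empty while `baseImage` is set (the `last-applied-configuration` posted further down in this thread has `"version":""`), the sketch returns a bare `pingcap/tikv`, which is what the `Image:` line in the `kubectl describe` output shows.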

Great, thanks for the reply! I'll try it.

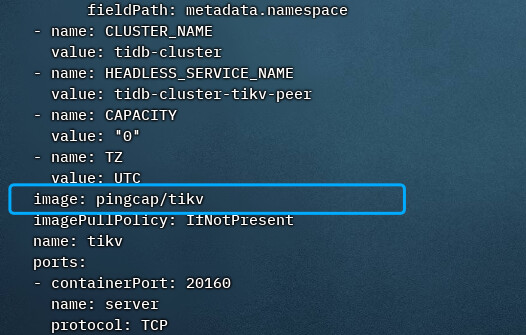

Hi, yesterday I followed this config and moved `version` up to the top level of `spec`, but it still didn't take effect; TiKV is still on 5.0.1.

That's a bit odd.

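- Check the StatefulSet: run `kubectl get sts xxx-tikv -n xxx -oyaml` and see whether the TiKV image is the right version. If it is, move on to the pod.

- Check the pod: run `kubectl get pod xxx-tikv-x -n xxx -oyaml` and look at the image. If that is also correct, go to the node.

- Log in to the node the pod runs on and run `docker image list` to see whether the TiKV image was tagged wrongly. If so, delete that image directly with `docker rmi xxx`.

- Then recreate the pod.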
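The first two checks can be scripted by reading `kubectl get sts ... -o json` and `kubectl get pod ... -o json` output, so the image requested by the StatefulSet template, the pod spec, and what the kubelet actually runs sit side by side. The field paths are standard Kubernetes ones; the container name `tikv` comes from the describe output, and the sample dicts are hand-written stand-ins (hypothetical, not real output). Also note that the `Container ID: containerd://...` line suggests the nodes run containerd rather than Docker, in which case `crictl images` and `crictl rmi` are the node-level equivalents of the `docker` commands above.

```python
from typing import Any, Dict, List, Optional


def find_container(entries: List[Dict[str, Any]], name: str) -> Dict[str, Any]:
    """Return the entry called `name` from a containers/containerStatuses list, or {}."""
    return next((c for c in entries if c.get("name") == name), {})


def image_chain(sts: Dict[str, Any], pod: Dict[str, Any], name: str = "tikv") -> Dict[str, Optional[str]]:
    """Collect the image as seen by the StatefulSet template, the pod spec and the kubelet."""
    template = sts["spec"]["template"]["spec"].get("containers", [])
    running = find_container(pod.get("status", {}).get("containerStatuses", []), name)
    return {
        "statefulset": find_container(template, name).get("image"),
        "pod": find_container(pod["spec"].get("containers", []), name).get("image"),
        "running": running.get("image"),
        "imageID": running.get("imageID"),
    }


if __name__ == "__main__":
    # Hypothetical stand-ins for `kubectl get ... -o json`; image strings taken from this thread.
    sts = {"spec": {"template": {"spec": {"containers": [{"name": "tikv", "image": "pingcap/tikv"}]}}}}
    pod = {
        "spec": {"containers": [{"name": "tikv", "image": "pingcap/tikv"}]},
        "status": {"containerStatuses": [{
            "name": "tikv",
            "image": "docker.io/pingcap/tikv:latest",
            "imageID": "docker.io/pingcap/tikv@sha256:2b0992519eb2cabdf22291a7066c0ab5cb93373825366c5b6cf97b273eb2cb53",
        }]},
    }
    for layer, value in image_chain(sts, pod).items():
        print(f"{layer:12} {value}")
```

In real use, the dicts would come from `json.loads` on the output of e.g. `kubectl get sts tidb-cluster-tikv -n tidb-cluster -o json`. The first layer where the image looks wrong tells you whether to look at the TidbCluster/operator, the pod, or the node.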

I checked as you suggested. The image in `kubectl get pod` is:

```yaml
image: docker.io/pingcap/tikv:latest
imageID: docker.io/pingcap/tikv@sha256:2b0992519eb2cabdf22291a7066c0ab5cb93373825366c5b6cf97b273eb2cb53
```

I compared this sha256 on Docker Hub, and it is the 5.0.1 image.

But the other TiKV pods look like this:

```yaml
image: docker.io/pingcap/tikv:latest
imageID: docker.io/pingcap/tikv@sha256:d2adb67c75e9d25dda8c8c367c1db269e079dfd2f8427c8aff0ff44ec1c1be09
```

The TiKV pods on the other nodes run fine; only two particular nodes have this problem.

Then I deleted and recreated the problematic TiKV pods, but they still come up with the same sha256 and version (5.0.1).
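Since every pod reports the same `image` (`...:latest`) but different `imageID`s, grouping pods by `imageID` is a quick way to see which nodes are the odd ones out. A minimal sketch over the structure of `kubectl get pods -n tidb-cluster -o json` (`items[].metadata.name`, `spec.nodeName`, `status.containerStatuses[].imageID`); the two digests are from this thread, but the pod-to-node layout in the sample is hypothetical apart from `tidb-cluster-tikv-4` on `node3`.

```python
from collections import defaultdict
from typing import Any, Dict, List


def group_by_image_id(pod_list: Dict[str, Any], container: str = "tikv") -> Dict[str, List[str]]:
    """Map each imageID to the 'pod@node' entries whose `container` runs it."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for pod in pod_list.get("items", []):
        where = f"{pod['metadata']['name']}@{pod['spec'].get('nodeName', '?')}"
        for status in pod.get("status", {}).get("containerStatuses", []):
            if status.get("name") == container:
                groups[status.get("imageID", "")].append(where)
    return dict(groups)


def minority_groups(groups: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return every group smaller than the largest one: the likely odd nodes out."""
    if not groups:
        return {}
    largest = max(len(pods) for pods in groups.values())
    return {image_id: pods for image_id, pods in groups.items() if len(pods) < largest}


if __name__ == "__main__":
    OLD = "docker.io/pingcap/tikv@sha256:2b0992519eb2cabdf22291a7066c0ab5cb93373825366c5b6cf97b273eb2cb53"
    NEW = "docker.io/pingcap/tikv@sha256:d2adb67c75e9d25dda8c8c367c1db269e079dfd2f8427c8aff0ff44ec1c1be09"

    def fake_pod(name: str, node: str, image_id: str) -> Dict[str, Any]:
        # Hypothetical pod entry with only the fields the sketch reads.
        return {"metadata": {"name": name}, "spec": {"nodeName": node},
                "status": {"containerStatuses": [{"name": "tikv", "imageID": image_id}]}}

    pods = {"items": [
        fake_pod("tidb-cluster-tikv-0", "node1", NEW),
        fake_pod("tidb-cluster-tikv-1", "node2", NEW),
        fake_pod("tidb-cluster-tikv-2", "node4", NEW),
        fake_pod("tidb-cluster-tikv-3", "node5", OLD),
        fake_pod("tidb-cluster-tikv-4", "node3", OLD),
    ]}
    for image_id, where in minority_groups(group_by_image_id(pods)).items():
        print(image_id.split("@", 1)[1], "->", ", ".join(where))
```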

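- `latest` is definitely wrong, and `latest` on Docker Hub isn't 5.0.1 either. Could the Docker registry on these two nodes be pointed at a third-party mirror?

- Check whether the version in the StatefulSet is correct. If even that is wrong, the operator needs a look. A running image should never have a version like `latest`.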
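Where `latest` comes from: an image reference without a tag is resolved as `:latest`, and a Docker Hub short name such as `pingcap/tikv` gets a `docker.io/` prefix (single-segment names like `busybox` also get `library/`). Combined with `imagePullPolicy: IfNotPresent`, a node that already holds some older `pingcap/tikv:latest` will reuse it instead of pulling, which would be consistent with two nodes running a different digest from the rest. The sketch below is a simplified normalizer for illustration only; the real reference grammar (docker/distribution) also handles digests and other edge cases it ignores.

```python
def normalize_image(ref: str) -> str:
    """Simplified Docker image reference normalization (digests are not handled)."""
    name, tag = ref, "latest"
    # A ':' is a tag separator only if it comes after the last '/';
    # otherwise it belongs to a registry host:port.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    if not rest:
        # Single-segment name: an official Docker Hub image under library/.
        name = f"docker.io/library/{name}"
    elif "." not in first and ":" not in first and first != "localhost":
        # First segment is not a registry host, so the image lives on Docker Hub.
        name = f"docker.io/{name}"
    return f"{name}:{tag}"


if __name__ == "__main__":
    for ref in ("pingcap/tikv", "pingcap/tikv:v7.5.0", "busybox:1.34.1"):
        print(f"{ref:22} -> {normalize_image(ref)}")
```

`pingcap/tikv` (the `Image:` from the describe output) becomes `docker.io/pingcap/tikv:latest`, exactly the `image:` reported by `kubectl get pod`, so the fix is to make sure the resolved image carries an explicit version tag.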

Here is the full content of my `tidb-cluster` TidbCluster resource (CR):

```yaml
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: >
      {"apiVersion":"pingcap.com/v1alpha1","kind":"TidbCluster","metadata":{"annotations":{"kubesphere.io/creator":"admin","meta.helm.sh/release-name":"tidb-cluster","meta.helm.sh/release-namespace":"tidb-cluster","pingcap.com/ha-topology-key":"kubernetes.io/hostname","pingcap.com/pd.tidb-cluster-pd.sha":"cfa0d77a","pingcap.com/tidb.tidb-cluster-tidb.sha":"866b9771","pingcap.com/tikv.tidb-cluster-tikv.sha":"1c8d5543"},"labels":{"app.kubernetes.io/component":"tidb-cluster","app.kubernetes.io/instance":"tidb-cluster","app.kubernetes.io/managed-by":"Helm","app.kubernetes.io/name":"tidb-cluster","app.kubesphere.io/instance":"tidb-cluster","helm.sh/chart":"tidb-cluster-v1.6.0-alpha.8"},"name":"tidb-cluster","namespace":"tidb-cluster"},"spec":{"discovery":{},"enablePVReclaim":false,"helper":{"image":"busybox:1.34.1"},"imagePullPolicy":"IfNotPresent","pd":{"affinity":{},"baseImage":"pingcap/pd","enableDashboardInternalProxy":true,"hostNetwork":false,"image":"pingcap/pd:v6.5.0","imagePullPolicy":"IfNotPresent","maxFailoverCount":3,"replicas":3,"requests":{"storage":"1Gi"},"startTimeout":30,"storageClassName":"nfs-client"},"pvReclaimPolicy":"Retain","schedulerName":"tidb-scheduler","services":[{"name":"pd","type":"ClusterIP"}],"tidb":{"affinity":{},"baseImage":"pingcap/tidb","binlogEnabled":false,"hostNetwork":false,"image":"pingcap/tidb:v6.5.0","imagePullPolicy":"IfNotPresent","maxFailoverCount":3,"replicas":2,"separateSlowLog":true,"slowLogTailer":{"image":"busybox:1.33.0","imagePullPolicy":"IfNotPresent","limits":{"cpu":"100m","memory":"50Mi"},"requests":{"cpu":"20m","memory":"5Mi"}},"tlsClient":{}},"tikv":{"affinity":{},"baseImage":"pingcap/tikv","hostNetwork":false,"image":"pingcap/tikv:v6.5.0","imagePullPolicy":"IfNotPresent","maxFailoverCount":3,"replicas":3,"requests":{"storage":"10Gi"},"scalePolicy":{"scaleInParallelism":1,"scaleOutParallelism":1},"storageClassName":"nfs-client"},"timezone":"UTC","tiproxy":{"baseImage":"pingcap/tiproxy","imagePullPolicy":"IfNotPresent","replicas":0,"requests":{"storage":"1Gi"},"storageClassName":"nfs-client","version":"v6.5.0"},"tlsCluster":{},"version":""}}
    kubesphere.io/creator: admin
    meta.helm.sh/release-name: tidb-cluster
    meta.helm.sh/release-namespace: tidb-cluster
    pingcap.com/ha-topology-key: kubernetes.io/hostname
    pingcap.com/pd.tidb-cluster-pd.sha: cfa0d77a
    pingcap.com/tidb.tidb-cluster-tidb.sha: 866b9771
    pingcap.com/tikv.tidb-cluster-tikv.sha: 1c8d5543
  labels:
    app.kubernetes.io/component: tidb-cluster
    app.kubernetes.io/instance: tidb-cluster
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: tidb-cluster
    app.kubesphere.io/instance: tidb-cluster
    helm.sh/chart: tidb-cluster-v1.6.0-alpha.8
  name: tidb-cluster
  namespace: tidb-cluster
spec:
  discovery: {}
  enablePVReclaim: false
  version: v7.5.0
  helper:
    image: 'busybox:1.34.1'
  imagePullPolicy: IfNotPresent
  pd:
    affinity: {}
    baseImage: pingcap/pd
    enableDashboardInternalProxy: true
    hostNetwork: false
    imagePullPolicy: IfNotPresent
    maxFailoverCount: 3
    replicas: 3
    requests:
      storage: 1Gi
    startTimeout: 30
    storageClassName: nfs-client
  pvReclaimPolicy: Retain
  schedulerName: tidb-scheduler
  services:
    - name: pd
      type: ClusterIP
  tidb:
    affinity: {}
    baseImage: pingcap/tidb
    binlogEnabled: false
    hostNetwork: false
    imagePullPolicy: IfNotPresent
    maxFailoverCount: 3
    replicas: 2
    separateSlowLog: true
    slowLogTailer:
      image: 'busybox:1.33.0'
      imagePullPolicy: IfNotPresent
      limits:
        cpu: 100m
        memory: 50Mi
      requests:
        cpu: 20m
        memory: 5Mi
    tlsClient: {}
  tikv:
    affinity: {}
    baseImage: pingcap/tikv
    image: 'pingcap/tikv'
    hostNetwork: false
    imagePullPolicy: IfNotPresent
    maxFailoverCount: 3
    replicas: 3
    requests:
      storage: 20Gi
    scalePolicy:
      scaleInParallelism: 1
      scaleOutParallelism: 1
    storageClassName: nfs-client
  timezone: Asia/Shanghai
  tiproxy:
    baseImage: pingcap/tiproxy
    imagePullPolicy: IfNotPresent
    replicas: 0
    requests:
      storage: 1Gi
    storageClassName: nfs-client
    version: v6.5.0
  tlsCluster: {}
```
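A quick heuristic pass over the image/version fields of a TidbCluster `spec` can surface settings that let components drift apart. This is a hypothetical checker, not an operator feature, and its "keep only one" advice assumes the `baseImage` + `spec.version` approach suggested earlier in this thread. Run on the fields of the CR above, it flags the untagged `spec.tikv.image: 'pingcap/tikv'` set alongside `baseImage`, and `spec.tiproxy.version: v6.5.0` differing from `spec.version: v7.5.0` (TiProxy has `replicas: 0` here, so that one is harmless for now).

```python
from typing import Any, Dict, List

COMPONENTS = ("pd", "tikv", "tidb", "tiproxy")


def find_version_drift(spec: Dict[str, Any]) -> List[str]:
    """Heuristic checks for TidbCluster spec settings that can split component versions."""
    findings: List[str] = []
    cluster_version = spec.get("version", "")
    if not cluster_version:
        findings.append("spec.version is empty; images may be pulled without a tag (:latest)")
    for name in COMPONENTS:
        comp = spec.get(name) or {}
        own = comp.get("version")
        if own and own != cluster_version:
            findings.append(f"spec.{name}.version={own} differs from spec.version={cluster_version}")
        image = comp.get("image")
        if image:
            # Only the last path segment can carry the tag (a ':' earlier may be host:port).
            if ":" not in image.rsplit("/", 1)[-1]:
                findings.append(f"spec.{name}.image={image!r} has no tag")
            if comp.get("baseImage"):
                findings.append(f"spec.{name}.image is set alongside baseImage; keep only one")
    return findings


if __name__ == "__main__":
    # Only the image/version fields of the CR posted above.
    posted = {
        "version": "v7.5.0",
        "pd": {"baseImage": "pingcap/pd"},
        "tidb": {"baseImage": "pingcap/tidb"},
        "tikv": {"baseImage": "pingcap/tikv", "image": "pingcap/tikv"},
        "tiproxy": {"baseImage": "pingcap/tiproxy", "version": "v6.5.0"},
    }
    for finding in find_version_drift(posted):
        print("-", finding)
```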