./tikv-ctl --db /data/tidb-data/tikv-20160/db recreate-region -p '10.30.xx.xx:2379' -r 9783357

initing empty region 10113953 with peer_id 10113954…

Debugger::recreate_region: "[src/server/debug.rs:639]: "[src/server/debug.rs:664]: region still exists id: 10113953 start_key: 7480000000000000FF375F698000000000FF0000040380000000FF0D2F659003800000FF0000000002038000FF00009043FDAD0000FD end_key: 7480000000000008FF875F72FC00000019FF18E0020000000000FA region_epoch { conf_ver: 1 version: 15792 } peers { id: 10113954 store_id: 8009882 }""
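Comparing the error text with the PD view of region 9783357 (the pd-ctl output below), `recreate-region` appears to keep the same start/end keys, take a new region ID and peer ID, bump the epoch `version` by one (15791 to 15792), reset `conf_ver` to 1, and place a single peer on the local store (8009882). As far as I can tell from the TiKV source (treat this as an assumption, not a confirmed description), `Debugger::recreate_region` then scans the local region states and fails with "region still exists" if a non-tombstone local region has exactly the same start and end keys, or "region overlap with" if the ranges merely intersect. The Python below is a simplified, hypothetical model of those two steps; `recreated_region`, `check_recreate` and the constant name are made up for illustration and are not TiKV APIs.

```python
from dataclasses import dataclass, replace

# Assumed conf_ver of a freshly recreated region (the value seen in the error text).
INIT_EPOCH_CONF_VER = 1


@dataclass(frozen=True)
class Peer:
    id: int
    store_id: int


@dataclass(frozen=True)
class Region:
    id: int
    start_key: bytes  # boundary key bytes as stored in region metadata
    end_key: bytes    # b"" means "no upper bound"
    conf_ver: int
    version: int
    peers: tuple = ()


def recreated_region(pd_region: Region, new_region_id: int,
                     new_peer_id: int, local_store_id: int) -> Region:
    """Hypothetical model: same key range, new IDs, version + 1,
    conf_ver reset, exactly one peer on the local store."""
    return replace(
        pd_region,
        id=new_region_id,
        conf_ver=INIT_EPOCH_CONF_VER,
        version=pd_region.version + 1,
        peers=(Peer(new_peer_id, local_store_id),),
    )


def overlaps(a: Region, b: Region) -> bool:
    """True if the half-open ranges [start, end) intersect."""
    a_starts_before_b_ends = b.end_key == b"" or a.start_key < b.end_key
    b_starts_before_a_ends = a.end_key == b"" or b.start_key < a.end_key
    return a_starts_before_b_ends and b_starts_before_a_ends


def check_recreate(new: Region, live_local_regions: list) -> None:
    """Reject the new region if a live (non-tombstone) local region covers its range."""
    for existing in live_local_regions:
        if not overlaps(existing, new):
            continue
        if (existing.start_key, existing.end_key) == (new.start_key, new.end_key):
            raise RuntimeError(f"region still exists {new}")
        raise RuntimeError(f"region overlap with {existing}")


# Values from the post. Simplification: the PD view of region 9783357 stands in
# for the local copy (peer 9783358) that is assumed to still be live on store 8009882.
START = bytes.fromhex("7480000000000000FF375F698000000000FF0000040380000000FF0D2F659003800000FF0000000002038000FF00009043FDAD0000FD")
END = bytes.fromhex("7480000000000008FF875F72FC00000019FF18E0020000000000FA")
pd_region = Region(9783357, START, END, 8012, 15791,
                   (Peer(9783358, 8009882), Peer(9783359, 6), Peer(9783360, 8009881)))
new_region = recreated_region(pd_region, 10113953, 10113954, 8009882)
try:
    check_recreate(new_region, [pd_region])
except RuntimeError as err:
    print(err)
```

If this model is right, a live local copy of region 9783357 with the identical key range on store 8009882 would by itself be enough to produce the "region still exists" error.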

Here is the region information:

» region 9783357
{
  "id": 9783357,
  "start_key": "7480000000000000FF375F698000000000FF0000040380000000FF0D2F659003800000FF0000000002038000FF00009043FDAD0000FD",
  "end_key": "7480000000000008FF875F72FC00000019FF18E0020000000000FA",
  "epoch": {
    "conf_ver": 8012,
    "version": 15791
  },
  "peers": [
    {
      "id": 9783358,
      "store_id": 8009882,
      "role_name": "Voter"
    },
    {
      "id": 9783359,
      "store_id": 6,
      "role_name": "Voter"
    },
    {
      "id": 9783360,
      "store_id": 8009881,
      "role_name": "Voter"
    },
    {
      "id": 10113880,
      "store_id": 1,
      "role": 1,
      "role_name": "Learner",
      "is_learner": true
    }
  ],
  "leader": {
    "id": 9783359,
    "store_id": 6,
    "role_name": "Voter"
  },
  "down_peers": [
    {
      "down_seconds": 4967,
      "peer": {
        "id": 9783360,
        "store_id": 8009881,
        "role_name": "Voter"
      }
    },
    {
      "down_seconds": 317,
      "peer": {
        "id": 10113880,
        "store_id": 1,
        "role": 1,
        "role_name": "Learner",
        "is_learner": true
      }
    }
  ],
  "pending_peers": [
    {
      "id": 10113880,
      "store_id": 1,
      "role": 1,
      "role_name": "Learner",
      "is_learner": true
    }
  ],
  "written_bytes": 0,
  "read_bytes": 0,
  "written_keys": 0,
  "read_keys": 0,
  "approximate_size": 1,
  "approximate_keys": 0
}
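The `start_key` and `end_key` above are memcomparable-encoded TiDB keys: the raw key is split into 8-byte groups, each followed by a marker byte equal to 0xFF minus the number of zero padding bytes in that group. Decoding them shows which tables the region covers. The Python below is a minimal, best-effort sketch under that assumption; it only understands `t{table_id}_r{handle}` row keys and `t{table_id}_i{index_id}` index keys whose indexed values are integers (flag byte 3), and the helper names are made up for illustration. On the two keys above it should report that the region starts inside index 4 of table ID 55 and ends at a row key of table ID 2183.

```python
import struct

SIGN_MASK = 1 << 63
INT_FLAG = 3  # assumed flag byte for a comparable int64 datum in index keys


def decode_group_bytes(hex_key: str) -> bytes:
    """Undo the 8-byte-group encoding: each group is 8 data bytes followed by
    a marker 0xFF - pad; a padded group terminates the key."""
    data = bytes.fromhex(hex_key)
    out = bytearray()
    for i in range(0, len(data) - 8, 9):
        group, marker = data[i:i + 8], data[i + 8]
        pad = 0xFF - marker
        if pad > 8:
            raise ValueError(f"bad marker 0x{marker:02X} at offset {i + 8}")
        out += group[:8 - pad]
        if pad:
            return bytes(out)
    raise ValueError("key ended without a terminating group")


def decode_int(b: bytes) -> int:
    """Comparable int64: big-endian with the sign bit flipped."""
    (u,) = struct.unpack(">Q", b)
    u ^= SIGN_MASK
    return u - (1 << 64) if u & SIGN_MASK else u


def describe_key(hex_key: str) -> dict:
    """Best-effort description of a TiDB table key."""
    if not hex_key:
        return {"unbounded": True}  # an empty end key means +infinity
    raw = decode_group_bytes(hex_key)
    if len(raw) < 11 or raw[:1] != b"t":
        return {"raw": raw.hex()}
    info = {"table_id": decode_int(raw[1:9])}
    tag, rest = raw[9:11], raw[11:]
    if tag == b"_r" and len(rest) >= 8:
        info["row_handle"] = decode_int(rest[:8])
    elif tag == b"_i" and len(rest) >= 8:
        info["index_id"] = decode_int(rest[:8])
        values, rest = [], rest[8:]
        # Only int datums are decoded; anything else is left as raw hex.
        while len(rest) >= 9 and rest[0] == INT_FLAG:
            values.append(decode_int(rest[1:9]))
            rest = rest[9:]
        info["index_ints"] = values
        if rest:
            info["undecoded"] = rest.hex()
    return info


START_KEY = "7480000000000000FF375F698000000000FF0000040380000000FF0D2F659003800000FF0000000002038000FF00009043FDAD0000FD"
END_KEY = "7480000000000008FF875F72FC00000019FF18E0020000000000FA"
print("start_key:", describe_key(START_KEY))
print("end_key:  ", describe_key(END_KEY))
```

The decoded table IDs can then be looked up in the `TIDB_TABLE_ID` column of `INFORMATION_SCHEMA.TABLES` to see which tables are affected by this region.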

It still doesn't seem to work.

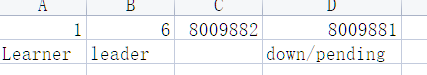

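Store 8009881 ("store_id": 8009881) has never shown up in tiup, although pd-ctl can still see it, and store 8009882 ("store_id": 8009882) has been stuck in the Pending Offline state the whole time.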
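Putting the store states next to the region output: region 9783357 has three voters (on stores 8009882, 6 and 8009881) plus a learner on store 1, and PD already lists the voter on 8009881 and the learner as down. A Raft group can only make progress while a majority of its voters is reachable. The Python below is a small sketch over the JSON above; `quorum_report` is a hypothetical helper, not a pd-ctl feature, and which stores count as unavailable is an input you choose. It compares two assumed scenarios: only store 8009881 lost, or store 8009882 also stopped (for example while its TiKV is shut down to run `tikv-ctl --db` against its data directory).

```python
def quorum_report(region: dict, unavailable_stores: set) -> dict:
    """Count voters that are neither on an unavailable store nor listed in down_peers."""
    voters = [p for p in region["peers"] if not p.get("is_learner", False)]
    down_ids = {d["peer"]["id"] for d in region.get("down_peers", [])}
    alive = [p for p in voters
             if p["store_id"] not in unavailable_stores and p["id"] not in down_ids]
    needed = len(voters) // 2 + 1  # Raft majority of voters
    return {
        "voters": len(voters),
        "alive_voters": sorted(p["store_id"] for p in alive),
        "majority_needed": needed,
        "has_quorum": len(alive) >= needed,
    }


# Peers and down_peers copied from the pd-ctl output for region 9783357.
region_9783357 = {
    "peers": [
        {"id": 9783358, "store_id": 8009882},
        {"id": 9783359, "store_id": 6},
        {"id": 9783360, "store_id": 8009881},
        {"id": 10113880, "store_id": 1, "is_learner": True},
    ],
    "down_peers": [
        {"peer": {"id": 9783360, "store_id": 8009881}},
        {"peer": {"id": 10113880, "store_id": 1, "is_learner": True}},
    ],
}

# Hypothetical scenarios; the sets of unavailable stores are assumptions.
print(quorum_report(region_9783357, {8009881}))
print(quorum_report(region_9783357, {8009881, 8009882}))
```

Under the second assumption only the voter on store 6 remains, which is below the majority of 2 out of 3.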