It is a SAS SSD.

See these references:

This explains why empty regions appear.

Study the best practices:

https://docs.pingcap.com/zh/tidb/stable/pd-scheduling-best-practices#region-merge-速度慢

The FAQ describes some troubleshooting steps for handling this.

Yes.

Your system has 66K+ regions, of which 4K+ are empty. You could clean up the empty regions first and see what effect that has.
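To see how large the empty-region share really is, a region dump can be tallied with a few lines of Python. This is only a minimal sketch: it assumes the dump is JSON with a `regions` list whose entries carry an `approximate_size` field in MB (omitted when zero), which is how PD region output is commonly shaped, but the field names and the 1 MB threshold are assumptions to verify against your PD version. The sample data is made up.

```python
import json


def summarize_empty_regions(region_dump, max_size_mb=1):
    """Count regions whose approximate size is at or below max_size_mb.

    ASSUMPTION: region_dump is a JSON string shaped like
    {"regions": [{"id": ..., "approximate_size": ...}, ...]}
    and a missing approximate_size means 0.
    """
    regions = json.loads(region_dump).get("regions") or []
    empty_ids = [
        region["id"]
        for region in regions
        if region.get("approximate_size", 0) <= max_size_mb
    ]
    return {"total": len(regions), "empty": len(empty_ids), "empty_ids": empty_ids}


# Hypothetical dump with made-up region IDs and sizes, for illustration only.
SAMPLE_DUMP = json.dumps({
    "regions": [
        {"id": 101, "approximate_size": 96},
        {"id": 102},                          # size omitted, treated as 0
        {"id": 103, "approximate_size": 1},
    ]
})

if __name__ == "__main__":
    print(summarize_empty_regions(SAMPLE_DUMP))
```

Running the same function before and after the cleanup gives a simple way to confirm that the empty regions are actually being merged.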

I just went through the TiKV log you sent and found two main problems:

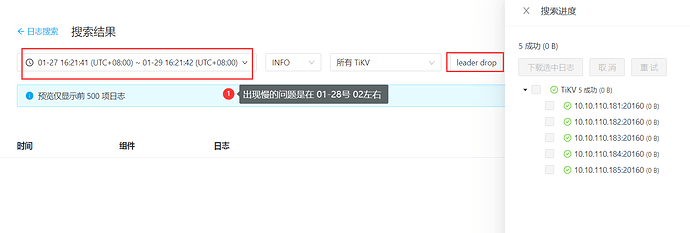

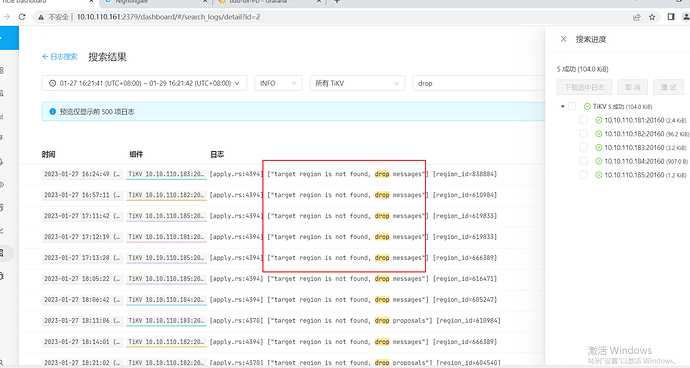

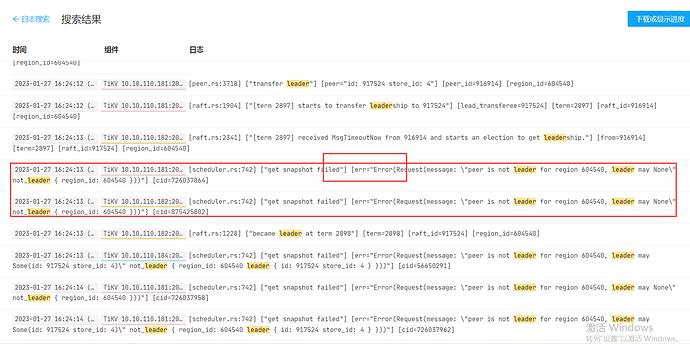

1. Before 10:30 this morning, the leaders in your TiKV were switching frequently. The relevant log entries are:

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:2212] [“received votes response”] [term=62] [type=MsgRequestPreVoteResponse] [approvals=2] [rejections=0] [from=183511] [vote=true] [raft_id=183512] [region_id=183509]

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:1144] [“became candidate at term 63”] [term=63] [raft_id=183512] [region_id=183509]

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:1299] [“broadcasting vote request”] [to=“[183510, 183511]”] [log_index=86] [log_term=62] [term=63] [type=MsgRequestVote] [raft_id=183512] [region_id=183509]

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:2212] [“received votes response”] [term=54] [type=MsgRequestPreVoteResponse] [approvals=2] [rejections=0] [from=771278] [vote=true] [raft_id=771279] [region_id=771277]

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:1144] [“became candidate at term 55”] [term=55] [raft_id=771279] [region_id=771277]

[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:1299] [“broadcasting vote request”] [to=“[771280, 771278]”] [log_index=77] [log_term=54] [term=55] [type=MsgRequestVote] [raft_id=771279] [region_id=771277]

[2023/01/28 14:12:38.670 +08:00] [Info] [subscription_manager.rs:395] [“backup stream: on_modify_observe”] [op=“Stop { region: id: 183509 start_key: 7480000000000001FFD45F72800000010BFFE298A30000000000FA end_key: 7480000000000001FFD45F72800000010BFFEF343D0000000000FA region_epoch { conf_ver: 1547 version: 6273 } peers { id: 183510 store_id: 5 } peers { id: 183511 store_id: 13009 } peers { id: 183512 store_id: 1 } }”]

[2023/01/28 14:12:38.670 +08:00] [Warn] [subscription_track.rs:143] [“trying to deregister region not registered”] [region_id=183509]

[2023/01/28 14:12:38.670 +08:00] [Info] [subscription_manager.rs:395] [“backup stream: on_modify_observe”] [op=“Stop { region: id: 771277 start_key: 7480000000000002FF305F698000000000FF0000010419AF176CFF7700000003800000FF0095ABD509000000FC end_key: 7480000000000002FF305F698000000000FF0000010419AF176DFFF000000003800000FF0095BBB339000000FC region_epoch { conf_ver: 12683 version: 3737 } peers { id: 771278 store_id: 13009 } peers { id: 771279 store_id: 1 } peers { id: 771280 store_id: 5 } }”]

[2023/01/28 14:12:38.670 +08:00] [Warn] [subscription_track.rs:143] [“trying to deregister region not registered”] [region_id=771277]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1550] [“starting a new election”] [term=47] [raft_id=828463] [region_id=828461]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1170] [“became pre-candidate at term 47”] [term=47] [raft_id=828463] [region_id=828461]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1299] [“broadcasting vote request”] [to=“[828464, 828462]”] [log_index=61] [log_term=47] [term=47] [type=MsgRequestPreVote] [raft_id=828463] [region_id=828461]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1550] [“starting a new election”] [term=59] [raft_id=162564] [region_id=162562]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1170] [“became pre-candidate at term 59”] [term=59] [raft_id=162564] [region_id=162562]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1299] [“broadcasting vote request”] [to=“[162565, 162563]”] [log_index=96] [log_term=59] [term=59] [type=MsgRequestPreVote] [raft_id=162564] [region_id=162562]

[2023/01/28 14:12:38.678 +08:00] [Info] [subscription_manager.rs:395] [“backup stream: on_modify_observe”] [op=“Stop { region: id: 828461 start_key: 7480000000000002FF305F698000000000FF0000010419AF2524FF5C00000003800000FF00F711D844000000FC end_key: 7480000000000002FF305F698000000000FF0000010419AF2525FFC400000003800000FF00F71FECB6000000FC region_epoch { conf_ver: 14747 version: 5424 } peers { id: 828462 store_id: 13009 } peers { id: 828463 store_id: 1 } peers { id: 828464 store_id: 5 } }”]

[2023/01/28 14:12:38.678 +08:00] [Warn] [subscription_track.rs:143] [“trying to deregister region not registered”] [region_id=828461]

[2023/01/28 14:12:38.678 +08:00] [Info] [subscription_manager.rs:395] [“backup stream: on_modify_observe”] [op=“Stop { region: id: 162562 start_key: 7480000000000001FFD45F7280000000B9FF2DD62B0000000000FA end_key: 7480000000000001FFD45F7280000000B9FF3A7C8F0000000000FA region_epoch { conf_ver: 1481 version: 4600 } peers { id: 162563 store_id: 13009 } peers { id: 162564 store_id: 1 } peers { id: 162565 store_id: 5 } }”]

[2023/01/28 14:12:38.678 +08:00] [Warn] [subscription_track.rs:143] [“trying to deregister region not registered”] [region_id=162562]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1550] [“starting a new election”] [term=64] [raft_id=35217] [region_id=35216]

[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1170] [“became pre-candidate at term 64”] [term=64] [raft_id=35217] [region_id=35216]

…

[2023/01/28 20:51:42.620 +08:00] [Info] [pd.rs:1521] [“try to transfer leader”] [to_peers=“[]”] [to_peer=“id: 540046 store_id: 5”] [from_peer=“id: 540047 store_id: 1”] [region_id=540044]

[2023/01/28 20:51:42.620 +08:00] [Info] [pd.rs:1521] [“try to transfer leader”] [to_peers=“[]”] [to_peer=“id: 298220 store_id: 5”] [from_peer=“id: 312372 store_id: 1”] [region_id=234735]

2. After 10:30, a large number of warnings like the following appeared (prewrite and commit are very slow):

[2023/01/29 10:39:48.521 +08:00] [Warn] [scheduler.rs:1088] [“[region 936743] scheduler handle command: prewrite, ts: 439080710397231107”] [takes=1892]

[2023/01/29 10:39:48.521 +08:00] [Warn] [scheduler.rs:1088] [“[region 936743] scheduler handle command: commit, ts: 439080710292373508”] [takes=2117]
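Both patterns are easier to judge when counted rather than eyeballed. The sketch below is one possible way to do that, not an official tool: it tallies election-related lines per region and collects the slow scheduler commands. The regular expressions are derived only from the lines pasted above, so they are assumptions about the log format; the function and constant names are made up for illustration, and the unit of `takes` is not stated in the excerpt.

```python
import re
from collections import Counter

# Raft log lines in the excerpt carry a [region_id=...] field.
REGION_ID_RE = re.compile(r"\[region_id=(\d+)\]")
# Slow-command warnings embed the region and command inside the message text.
SLOW_CMD_RE = re.compile(
    r"\[region (\d+)\] scheduler handle command: (\w+), ts: \d+.*?\[takes=(\d+)\]"
)
# "became pre-candidate" lines match neither marker, so they are not counted.
ELECTION_MARKERS = ("starting a new election", "became candidate at term")


def summarize(lines):
    """Return (elections, slow).

    elections: Counter of election-related log lines per region_id.
    slow: list of (region_id, command, takes) tuples for slow commands.
    """
    elections = Counter()
    slow = []
    for line in lines:
        slow_match = SLOW_CMD_RE.search(line)
        if slow_match:
            region_id, command, takes = slow_match.groups()
            slow.append((int(region_id), command, int(takes)))
            continue
        if any(marker in line for marker in ELECTION_MARKERS):
            region_match = REGION_ID_RE.search(line)
            if region_match:
                elections[int(region_match.group(1))] += 1
    return elections, slow


# Four lines copied from the excerpt above (curly quotes kept as pasted).
SAMPLE = [
    "[2023/01/28 14:12:38.670 +08:00] [Info] [raft.rs:1144] [“became candidate at term 63”] [term=63] [raft_id=183512] [region_id=183509]",
    "[2023/01/28 14:12:38.678 +08:00] [Info] [raft.rs:1550] [“starting a new election”] [term=47] [raft_id=828463] [region_id=828461]",
    "[2023/01/29 10:39:48.521 +08:00] [Warn] [scheduler.rs:1088] [“[region 936743] scheduler handle command: prewrite, ts: 439080710397231107”] [takes=1892]",
    "[2023/01/29 10:39:48.521 +08:00] [Warn] [scheduler.rs:1088] [“[region 936743] scheduler handle command: commit, ts: 439080710292373508”] [takes=2117]",
]

if __name__ == "__main__":
    elections, slow = summarize(SAMPLE)
    print("regions with the most election lines:", elections.most_common(5))
    print("slowest commands:", sorted(slow, key=lambda item: item[2], reverse=True)[:5])
```

Fed with the full log instead of `SAMPLE`, the output shows whether the elections are spread across many regions or concentrated in a few, and which regions see the slowest prewrite and commit commands.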

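1. Check the monitoring for the last week and see whether there are leader drops.

2. You should still check the disks; I keep suspecting they are the problem. The disks are SAS SSDs.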

Look at one week of data, and make sure the time when the slowness occurred falls inside the monitored range:

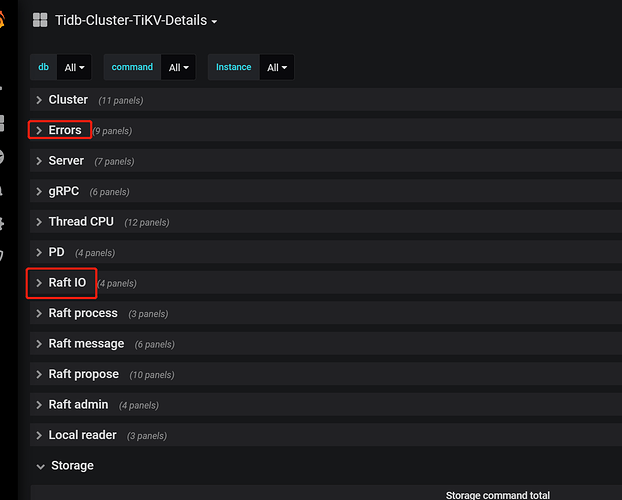

- tikv-details ---- raft message ---- messages

- blackbox_exporter ---- ping latency

- pd ---- operator ---- schedule operator create

- pd ---- statistics-balance ---- store leader score/size/count

When writes suddenly become slow, a few monitoring points are worth watching:

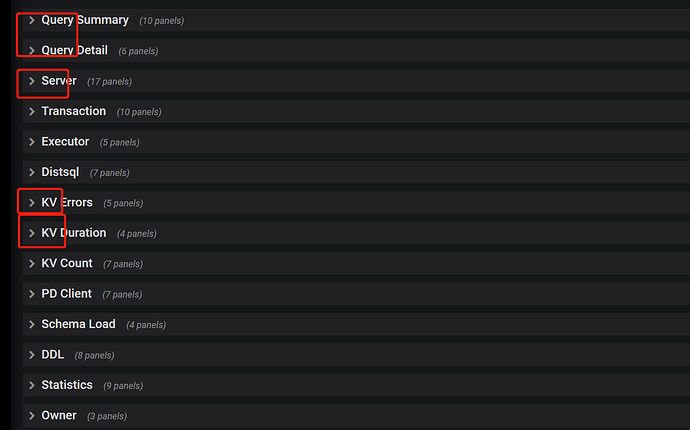

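1. Under the monitoring dashboard:

First confirm whether the problem is really caused by writes. It is entirely possible that reads are exhausting the IO.

2. Next, look at:

Abnormal cluster writes also show up as raftstore errors.

3. Finally, check:

whether the failed query OPM metric under it shows anything abnormal.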

Hope this helps.

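On the business side, the workload is mainly data writes. After increasing the amount of data per batch write, performance improved somewhat.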

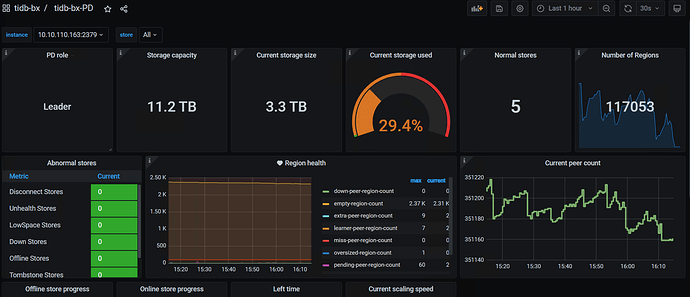

From his monitoring, it looks like:

- Judging from the empty regions, the workload probably runs TRUNCATE or DROP PARTITION operations.

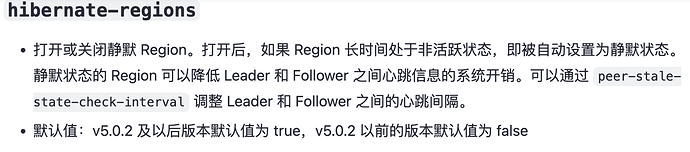

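- TiKV holds a large number of regions; consider increasing the heartbeat interval and enabling hibernate region.

How exactly should this be adjusted? Please advise.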

- Hibernate region is enabled by default in 6.5. For the heartbeat, tune the peer-stale-state-check-interval parameter.

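- Poor disk IO performance will certainly make prewrite and commit slow. That slowness in turn makes the scheduler queue pile up and affects peer heartbeats and communication. The two feed each other in a vicious cycle, so frequent leader switches are to be expected; the whole thing has avalanched.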

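- Taking your other posts into account, I personally think that improving your disk performance should resolve most of the problems raised in those posts.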

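- Scaling out does not mean adding disks, which does nothing about a single TiKV node holding too many regions. It means adding TiKV nodes so that each node holds fewer regions; only that relieves the pressure on a single node. The more regions a node holds, the more resources it spends maintaining peer heartbeats.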
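The effect of adding nodes on the per-node region count is simple arithmetic. The sketch below assumes that peers are spread evenly across stores and that the total number of region peers stays constant. The 3-store figure is inferred from the store IDs (1, 5, 13009) visible in the pasted logs, and the 20K-per-store target is the diagnostic guideline quoted elsewhere in this thread; both are assumptions, not measured values.

```python
def stores_needed(regions_per_store, current_stores, target_regions_per_store):
    """Estimate how many stores keep each store at or below the target.

    ASSUMPTION: peers are balanced evenly and the total peer count is fixed.
    """
    total_peers = regions_per_store * current_stores
    # Integer ceiling division, avoids floating-point rounding.
    return (total_peers + target_regions_per_store - 1) // target_regions_per_store


if __name__ == "__main__":
    # 66K regions on each of 3 stores, aiming for at most 20K per store.
    print(stores_needed(66_000, 3, 20_000))
```

Merging the empty regions lowers the total first, so the real number of nodes required may be smaller than this estimate.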

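The version is already 6.5. I will try adding TiKV nodes. Thank you very much for your reply.

The bottleneck right now is most likely the disk. Once IO performance improves, the too-many-regions problem may no longer be reported, so the disk should be fixed first.

It depends on your plan; doing it directly through scale-out and scale-in also works.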

Learning from this. I have not run into it before, and it took me a really long time to read through. Hope it helps me later.

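A question: in the monitoring, storage used is at 56.9%, yet each TiKV already holds 66K regions, while the diagnostics recommend staying below 20K, and the best practices in the documentation call for TiKV storage of 1 TB or more. Does this mean storage generally has to be kept at a very low utilization?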