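[TiDB environment] Production / Test / PoC

[TiDB version] v6.1.0

[Steps to reproduce]

1. Use TiCDC to replicate data to a downstream TiDB cluster.

2. Status of the upstream cluster: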

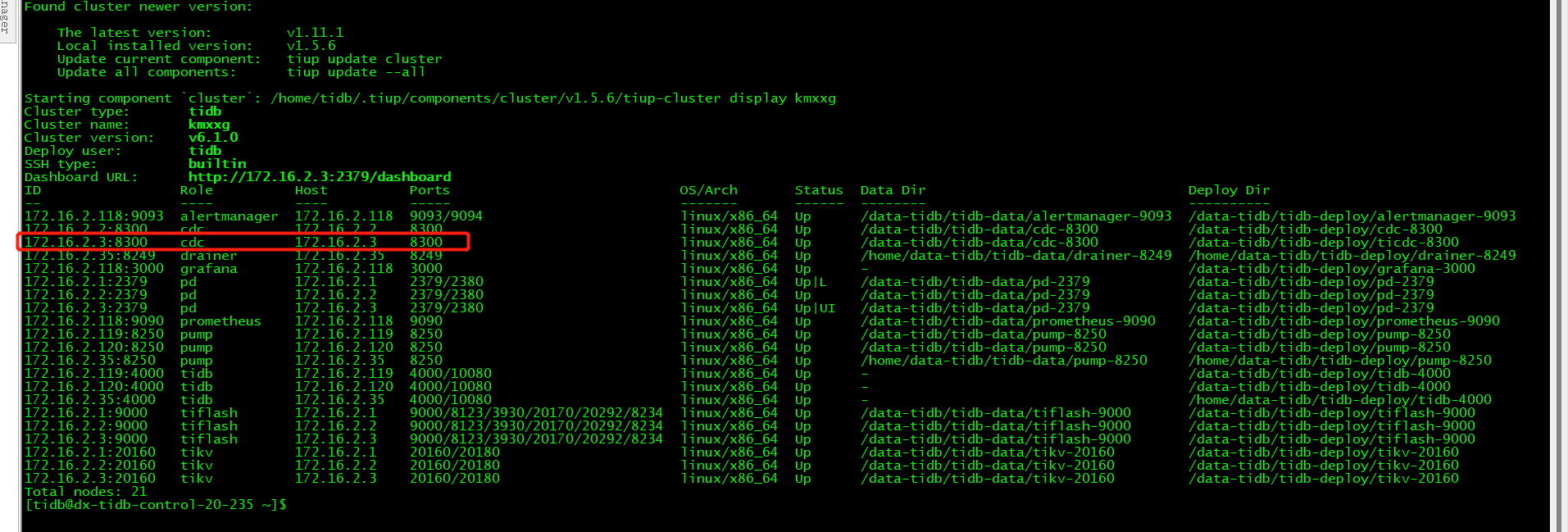

The red box marks the CDC node in use.

3. Schema of the replicated table:

CREATE TABLE `ads_test_pushuo_file` (

`crticle_content_id` bigint(20) NOT NULL,

`filename` varchar(100) NOT NULL,

`content` text DEFAULT NULL,

PRIMARY KEY (`crticle_content_id`) /*T![clustered_index] NONCLUSTERED */,

UNIQUE KEY `primary_index` (`crticle_content_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

4. Replication command

Log in to the server that hosts the 172.16.2.3:8300 node and run:

/data-tidb/tidb-deploy/ticdc-8300/bin/cdc cli changefeed create --pd=http://172.16.2.13:2379 --sink-uri="tidb://root:playlist6@172.1.1.12:4000/" --changefeed-id="ads-test-file" --config kmxxg_content_security.toml

5. Contents of the configuration file (kmxxg_content_security.toml):

case-sensitive = true

enable-old-value = true

[filter]

rules = ['kmxxg_content_security.ads_test_pushuo_file']

[mounter]

worker-num = 16

[sink]

protocol = "default"

[cyclic-replication]

enable = false

replica-id = 1

filter-replica-ids = [2,3]

sync-ddl = true
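As a quick sanity check on the `[filter]` rule above, the following is a minimal sketch of how a plain `schema.table` rule could be compared against the table name. It only approximates TiCDC's table-filter matching with Python's `fnmatch` wildcards and does not handle exclusion rules (`!`), quoted identifiers, or regular expressions. The helper `rule_matches` is hypothetical and is not part of TiCDC.

```python
from fnmatch import fnmatchcase


def rule_matches(rule: str, schema: str, table: str, case_sensitive: bool = True) -> bool:
    """Approximate check of one 'schema.table' filter rule (hypothetical helper)."""
    # Split the rule at the first dot into a schema pattern and a table pattern.
    rule_schema, _, rule_table = rule.partition(".")
    if not case_sensitive:
        rule_schema, rule_table = rule_schema.lower(), rule_table.lower()
        schema, table = schema.lower(), table.lower()
    # Both the schema part and the table part have to match.
    return fnmatchcase(schema, rule_schema) and fnmatchcase(table, rule_table)


# Rule copied from the configuration file in this post.
rules = ["kmxxg_content_security.ads_test_pushuo_file"]

# Assumption: the table lives in the schema kmxxg_content_security.
matched = any(
    rule_matches(r, "kmxxg_content_security", "ads_test_pushuo_file") for r in rules
)
print("table matched by filter:", matched)
```

With `case-sensitive = true`, a schema or table name that differs only in letter case would not match under this sketch, so it is worth confirming the exact names with `SHOW TABLES` on the upstream cluster.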

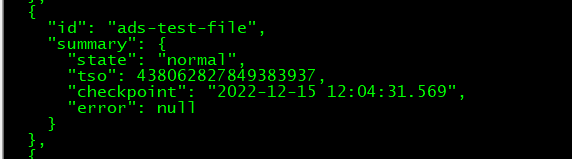

6. Check the changefeed status:

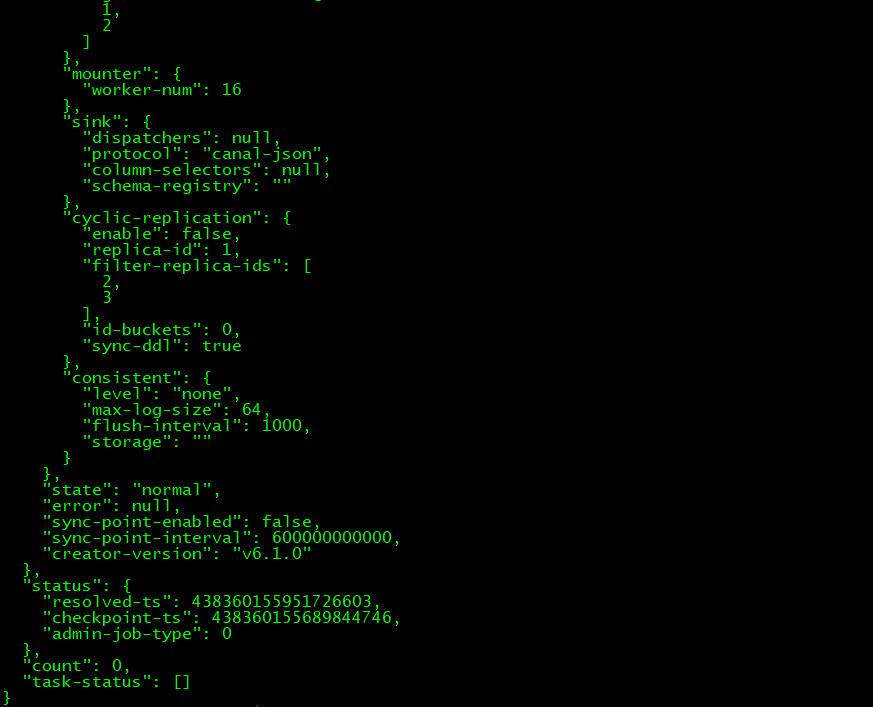

7. Check the changefeed details:

{

"info": {

"upstream-id": 0,

"sink-uri": "tidb://root:playlist6@172.1.1.12:4000/",

"opts": {},

"create-time": "2022-12-13T14:45:10.378326772+08:00",

"start-ts": 438020056014913540,

"target-ts": 0,

"admin-job-type": 0,

"sort-engine": "unified",

"sort-dir": "",

"config": {

"case-sensitive": true,

"enable-old-value": true,

"force-replicate": false,

"check-gc-safe-point": true,

"filter": {

"rules": [

"kmxxg_content_security.ads_test_pushuo_file"

],

"ignore-txn-start-ts": null

},

"mounter": {

"worker-num": 16

},

"sink": {

"dispatchers": null,

"protocol": "default",

"column-selectors": null,

"schema-registry": ""

},

"cyclic-replication": {

"enable": false,

"replica-id": 1,

"filter-replica-ids": [

2,

3

],

"id-buckets": 0,

"sync-ddl": true

},

"consistent": {

"level": "none",

"max-log-size": 64,

"flush-interval": 1000,

"storage": ""

}

},

"state": "normal",

"error": null,

"sync-point-enabled": false,

"sync-point-interval": 600000000000,

"creator-version": "v6.1.0"

},

"status": {

"resolved-ts": 438062843066580994,

"checkpoint-ts": 438062843066580994,

"admin-job-type": 0

},

"count": 0,

"task-status": []

}
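To tell whether the changefeed is actually making progress, it can help to turn the `start-ts` and `checkpoint-ts` values above into wall-clock times. The sketch below assumes the usual TiDB TSO layout, in which the low 18 bits are a logical counter and the remaining high bits are a physical timestamp in milliseconds. The function name `tso_to_utc` is introduced here for illustration only.

```python
from datetime import datetime, timezone

TSO_LOGICAL_BITS = 18  # assumed layout: low 18 bits hold the logical counter


def tso_to_utc(tso: int) -> datetime:
    """Convert a TSO into a UTC datetime using its physical (millisecond) part."""
    physical_ms = tso >> TSO_LOGICAL_BITS
    return datetime.fromtimestamp(physical_ms / 1000, tz=timezone.utc)


# Values copied from the changefeed details in this post.
start_ts = 438020056014913540
checkpoint_ts = 438062843066580994

start_time = tso_to_utc(start_ts)
checkpoint_time = tso_to_utc(checkpoint_ts)

print("start-ts      :", start_time.isoformat())
print("checkpoint-ts :", checkpoint_time.isoformat())
print("checkpoint is ahead of start by:", checkpoint_time - start_time)
```

The decoded `start-ts` should fall close to the `create-time` shown above (allowing for the +08:00 offset). If the checkpoint is well ahead of it and keeps advancing while the downstream table stays empty, the changefeed itself is probably not stalled, and the empty `"task-status": []` together with the filter rule would be the next things to examine.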

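[Problem: symptoms and impact]

1. Insert rows into ads_test_pushuo_file in the upstream cluster.

2. Query ads_test_pushuo_file in the downstream cluster: no new rows appear.

Judging from the configuration, what is preventing the data from being replicated?

[Resource configuration]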

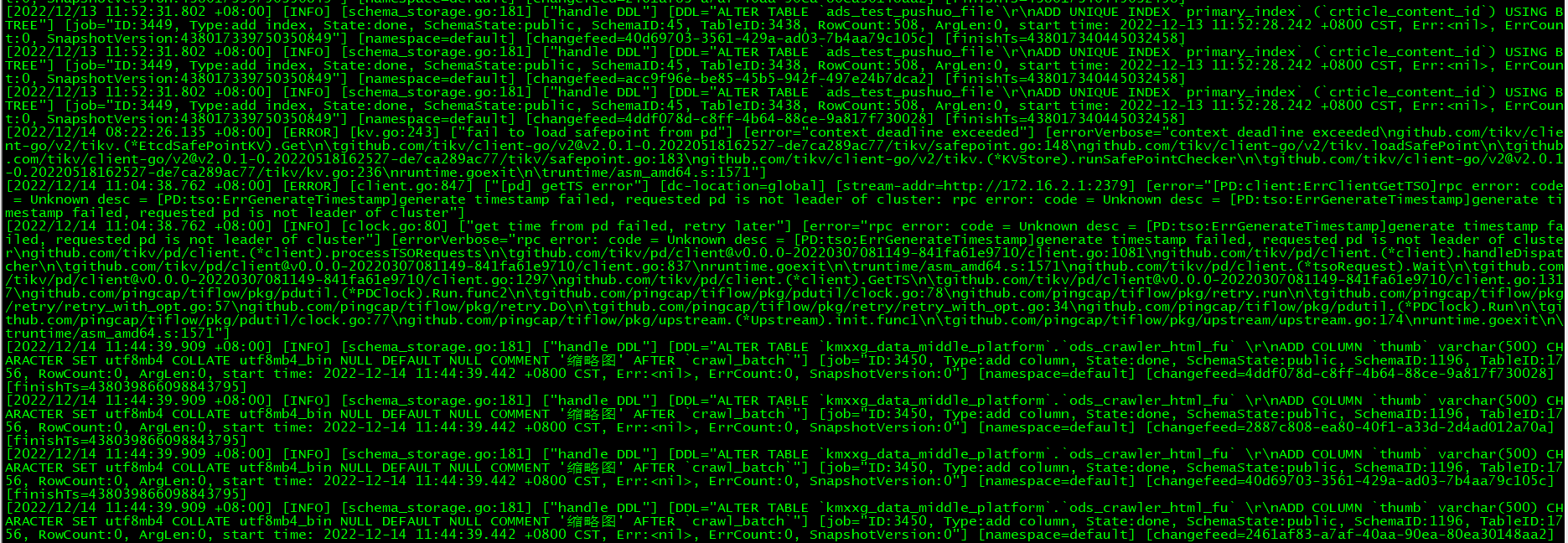

[Attachments: screenshots / logs / monitoring]