379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

config file conf/tidb.toml contained unknown configuration options: pessimistic-txn.ttl, txn-local-latches

(The warning above appears 14 times in a row.)

{"level":"warn","ts":"2022-09-27T14:08:42.823+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-1711b413-4d7f-4133-9cbe-2025c9d24aab/192.168.3.33:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-09-27T14:08:48.687+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-1711b413-4d7f-4133-9cbe-2025c9d24aab/192.168.3.33:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-09-27T14:08:52.691+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-1711b413-4d7f-4133-9cbe-2025c9d24aab/192.168.3.33:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

{"level":"warn","ts":"2022-09-27T14:08:54.691+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-1711b413-4d7f-4133-9cbe-2025c9d24aab/192.168.3.33:2379","attempt":1,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

The TiDB cluster crashes and restarts after running for a while. Can the cause be determined from this log?

goroutine 17816 [IO wait]:

internal/poll.runtime_pollWait(0x7f2463815370, 0x72, 0xffffffffffffffff)

/usr/local/go/src/runtime/netpoll.go:184 +0x55

internal/poll.(*pollDesc).wait(0xc0bfd06698, 0x72, 0x4000, 0x4000, 0xffffffffffffffff)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:87 +0x45

internal/poll.(*pollDesc).waitRead(...)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:92

internal/poll.(*FD).Read(0xc0bfd06680, 0xc01116a000, 0x4000, 0x4000, 0x0, 0x0, 0x0)

/usr/local/go/src/internal/poll/fd_unix.go:169 +0x1cf

net.(*netFD).Read(0xc0bfd06680, 0xc01116a000, 0x4000, 0x4000, 0xc01415b5c0, 0x2b584d8, 0xc087925790)

/usr/local/go/src/net/fd_unix.go:202 +0x4f

net.(*conn).Read(0xc09f780a48, 0xc01116a000, 0x4000, 0x4000, 0x0, 0x0, 0x0)

/usr/local/go/src/net/net.go:184 +0x68

bufio.(*Reader).Read(0xc0a6754ae0, 0xc0235b28ac, 0x4, 0x4, 0xc0718702b8, 0x36a86a0, 0xc0008ae380)

/usr/local/go/src/bufio/bufio.go:226 +0x26a

github.com/pingcap/tidb/server.bufferedReadConn.Read(...)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/buffered_read_conn.go:30

io.ReadAtLeast(0x36f0fe0, 0xc0dd672940, 0xc0235b28ac, 0x4, 0x4, 0x4, 0xc0002ae5a0, 0x1d, 0x1d)

/usr/local/go/src/io/io.go:310 +0x87

io.ReadFull(...)

/usr/local/go/src/io/io.go:329

github.com/pingcap/tidb/server.(*packetIO).readOnePacket(0xc0dd672960, 0x2b57506, 0xc000714e10, 0x3f9be0edc9a52daa, 0x116f318, 0x1c40f98bbe8387)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/packetio.go:79 +0x86

github.com/pingcap/tidb/server.(*packetIO).readPacket(0xc0dd672960, 0x16dcc754d3, 0x524b160, 0x0, 0x524b160, 0x0)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/packetio.go:105 +0x32

github.com/pingcap/tidb/server.(*clientConn).readPacket(...)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/conn.go:285

github.com/pingcap/tidb/server.(*clientConn).Run(0xc087925790, 0x3742b20, 0xc0a0ab0db0)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/conn.go:689 +0x1b0

github.com/pingcap/tidb/server.(*Server).onConn(0xc00905de40, 0xc087925790)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/server.go:415 +0xb12

created by github.com/pingcap/tidb/server.(*Server).Run

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/server.go:333 +0x709

goroutine 20328 [IO wait]:

internal/poll.runtime_pollWait(0x7f24633f3880, 0x72, 0xffffffffffffffff)

/usr/local/go/src/runtime/netpoll.go:184 +0x55

internal/poll.(*pollDesc).wait(0xc21141f198, 0x72, 0x4000, 0x4000, 0xffffffffffffffff)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:87 +0x45

internal/poll.(*pollDesc).waitRead(...)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:92

internal/poll.(*FD).Read(0xc21141f180, 0xc2129b6000, 0x4000, 0x4000, 0x0, 0x0, 0x0)

/usr/local/go/src/internal/poll/fd_unix.go:169 +0x1cf

net.(*netFD).Read(0xc21141f180, 0xc2129b6000, 0x4000, 0x4000, 0xc05d8595c0, 0x2b586d4, 0xc2118c3790)

/usr/local/go/src/net/fd_unix.go:202 +0x4f

net.(*conn).Read(0xc211a83ee0, 0xc2129b6000, 0x4000, 0x4000, 0x0, 0x0, 0x0)

/usr/local/go/src/net/net.go:184 +0x68

bufio.(*Reader).Read(0xc09a7379e0, 0xc2357ed5a8, 0x4, 0x4, 0xc20b164fa8, 0x0, 0xc0008ae380)

/usr/local/go/src/bufio/bufio.go:226 +0x26a

github.com/pingcap/tidb/server.bufferedReadConn.Read(...)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/buffered_read_conn.go:30

io.ReadAtLeast(0x36f0fe0, 0xc2128f69e0, 0xc2357ed5a8, 0x4, 0x4, 0x4, 0xc0002ae5a0, 0x1d, 0x1d)

/usr/local/go/src/io/io.go:310 +0x87

io.ReadFull(...)

/usr/local/go/src/io/io.go:329

github.com/pingcap/tidb/server.(*packetIO).readOnePacket(0xc2128f6a00, 0x2b57355, 0xc000715290, 0x3eff4880de72f8ac, 0x116f318, 0x1c40fa40c5ae89)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/packetio.go:79 +0x86

github.com/pingcap/tidb/server.(*packetIO).readPacket(0xc2128f6a00, 0x1791ce7fd5, 0x524b160, 0x0, 0x524b160, 0x0)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/packetio.go:105 +0x32

github.com/pingcap/tidb/server.(*clientConn).readPacket(...)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/conn.go:285

github.com/pingcap/tidb/server.(*clientConn).Run(0xc2118c3790, 0x3742b20, 0xc2118a5740)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/conn.go:689 +0x1b0

github.com/pingcap/tidb/server.(*Server).onConn(0xc00905de40, 0xc2118c3790)

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/server.go:415 +0xb12

created by github.com/pingcap/tidb/server.(*Server).Run

/home/jenkins/agent/workspace/tidb_v4.0.4/go/src/github.com/pingcap/tidb/server/server.go:333 +0x709

{"level":"warn","ts":"2022-09-27T14:01:11.177+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = Unavailable desc = etcdserver: leader changed"}

{"level":"warn","ts":"2022-09-27T14:08:48.597+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-09-27T14:08:54.230+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = Unavailable desc = etcdserver: leader changed"}

{"level":"warn","ts":"2022-09-27T14:17:29.586+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = Unavailable desc = etcdserver: leader changed"}

{"level":"warn","ts":"2022-09-27T14:17:32.107+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = Unavailable desc = etcdserver: leader changed"}

{"level":"warn","ts":"2022-09-27T14:17:34.598+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":1,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-09-27T14:17:39.584+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":1,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-09-27T14:35:49.116+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-19ec2cb5-f953-484d-bfe1-3a6d8347549a/192.168.3.33:2379","attempt":0,"error":"rpc error: code = NotFound desc = etcdserver: requested lease not found"}

Version: v4.0.4
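
For anyone triaging a log like the one above, a quick tally of the etcd client warnings by gRPC status code can show whether timeouts or leader changes dominate. This is only a minimal sketch: the function names are made up for illustration, the sample lines are shortened copies of entries from this post, and the quote normalization assumes the forum may have turned straight quotes into curly ones.

```python
import json
import re
from collections import Counter

# Matches the gRPC status code inside the "error" field, e.g. "code = Unavailable".
CODE_RE = re.compile(r"code = (\w+)")


def normalize_quotes(line: str) -> str:
    """Turn curly double quotes back into straight ones so the line parses as JSON."""
    return line.replace("\u201c", '"').replace("\u201d", '"')


def tally_grpc_codes(lines):
    """Count JSON warning lines by the gRPC status code in their error message."""
    counts = Counter()
    for raw in lines:
        line = normalize_quotes(raw.strip())
        if not line.startswith("{"):
            continue  # skip stack traces and other non-JSON lines
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or otherwise malformed entries
        match = CODE_RE.search(entry.get("error", ""))
        if match:
            counts[match.group(1)] += 1
    return counts


# Shortened sample lines (hypothetical input; fields trimmed for brevity).
sample = [
    '{"level":"warn","ts":"2022-09-27T14:08:52.691+0800","attempt":0,'
    '"error":"rpc error: code = Unavailable desc = transport is closing"}',
    "{“level”:“warn”,“ts”:“2022-09-27T14:08:54.691+0800”,“attempt”:1,"
    "“error”:“rpc error: code = DeadlineExceeded desc = context deadline exceeded”}",
    "goroutine 17816 [IO wait]:",
]

print(tally_grpc_codes(sample))
```

In practice the input would be the lines of the tidb-server stderr or log file rather than a hard-coded list.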

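Is this a newly deployed cluster, or were configuration parameters changed recently? Try removing the two options pessimistic-txn.ttl and txn-local-latches and see whether that helps.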

endpoint://client-1711b413-4d7f-4133-9cbe-2025c9d24aab/192.168.3.33:2379

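What machine is this? Why does the target show both an ID string and an IP address?

This node is unstable and cannot serve requests. Please check it.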

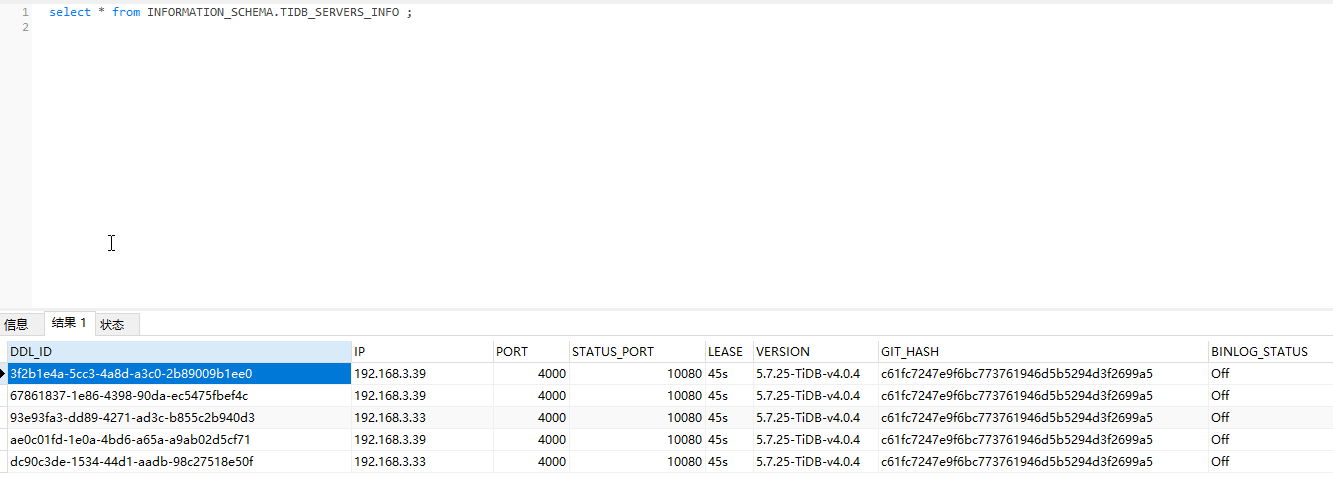

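Also, please share the cluster configuration.

The cluster has been in use for a long time.

Are the entries here duplicated?

On a healthy cluster, PD shows the same kind of messages.

Before the restarts began, did you change any configuration parameters, or scale any components in or out? Or did the frequent restarts start without any changes at all?

Did you set these two options?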

https://docs.pingcap.com/zh/tidb/v4.0/tidb-configuration-file#txn-local-latches

Why does PD have only two nodes? Aren't you worried about split-brain?

The Raft protocol needs at least 3 nodes. This setup is absurd; a single node would be better than this.
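
To make the node-count point concrete: PD embeds etcd, which uses Raft, and Raft needs a majority of voting members to make progress. The sketch below only does the arithmetic (the function names are illustrative). It suggests that two members tolerate no failures, the same as one member, so the practical risk with two PD nodes is losing quorum whenever either node goes down.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting nodes."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many nodes can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(f"{n} member(s): quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

With 3 members the cluster can lose one node and keep serving, which is why an odd member count of at least 3 is the usual recommendation.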

It had always been working fine before.

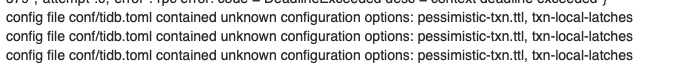

Check the OS logs.

[Tue Sep 27 18:00:26 2022] Out of memory: Kill process 11036 (tidb-server) score 939 or sacrifice child

[Tue Sep 27 18:00:26 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 18:00:26 2022] Out of memory: Kill process 11037 (tidb-server) score 939 or sacrifice child

[Tue Sep 27 18:00:26 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 18:00:26 2022] Out of memory: Kill process 11055 (tidb-server) score 939 or sacrifice child

[Tue Sep 27 18:10:49 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 18:10:49 2022] Out of memory: Kill process 11347 (tidb-server) score 939 or sacrifice child

[Tue Sep 27 18:23:04 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 18:23:04 2022] Out of memory: Kill process 11986 (tidb-server) score 936 or sacrifice child

[Tue Sep 27 19:06:07 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 19:06:07 2022] Out of memory: Kill process 12735 (tidb-server) score 939 or sacrifice child

[Tue Sep 27 23:31:04 2022] [] out_of_memory+0x4b6/0x4f0

[Tue Sep 27 23:31:04 2022] Out of memory: Kill process 15815 (tidb-server) score 937 or sacrifice child

[Wed Sep 28 00:35:14 2022] [] out_of_memory+0x4b6/0x4f0

[Wed Sep 28 00:35:14 2022] Out of memory: Kill process 31055 (tidb-server) score 935 or sacrifice child
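
The kernel lines above show tidb-server being killed by the OOM killer again and again. A small script can pull out the kill events and the gaps between them, which helps correlate the restarts with workload. This is a rough sketch, assuming the lines have the human-readable timestamp format shown above (it looks like `dmesg -T` output) and an English/C locale for day and month names; the names `OOM_RE` and `parse_oom_kills` are made up for this example.

```python
import re
from datetime import datetime

# Matches lines such as:
# [Tue Sep 27 18:10:49 2022] Out of memory: Kill process 11347 (tidb-server) score 939 or sacrifice child
OOM_RE = re.compile(
    r"\[(?P<ts>[^\]]+)\] Out of memory: Kill process (?P<pid>\d+) "
    r"\((?P<name>[^)]+)\) score (?P<score>\d+)"
)


def parse_oom_kills(lines):
    """Return (timestamp, pid, process name, score) for every OOM kill line."""
    kills = []
    for line in lines:
        m = OOM_RE.search(line)
        if m:
            # %a and %b are locale-dependent; this assumes English names.
            when = datetime.strptime(m.group("ts"), "%a %b %d %H:%M:%S %Y")
            kills.append((when, int(m.group("pid")), m.group("name"), int(m.group("score"))))
    return kills


# Three lines copied from the log above.
sample = [
    "[Tue Sep 27 18:10:49 2022] [] out_of_memory+0x4b6/0x4f0",
    "[Tue Sep 27 18:10:49 2022] Out of memory: Kill process 11347 (tidb-server) score 939 or sacrifice child",
    "[Tue Sep 27 18:23:04 2022] Out of memory: Kill process 11986 (tidb-server) score 936 or sacrifice child",
]

kills = parse_oom_kills(sample)
for earlier, later in zip(kills, kills[1:]):
    gap = later[0] - earlier[0]
    print(f"{later[2]} pid {later[1]} killed {gap} after pid {earlier[1]}")
```

If the kills line up with heavy queries, the next step would probably be to look at tidb-server memory usage and any memory-related limits on that host.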