【TiDB environment】Production

【TiDB version】v4.0.0-rc.2

【Problem encountered】

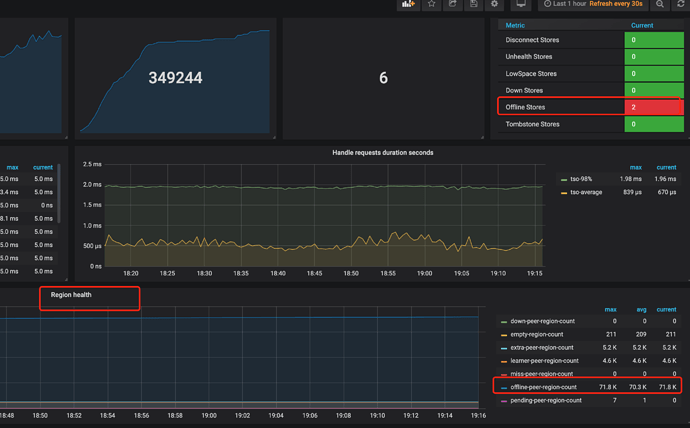

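ReplicaChecker is not generating any Operators to handle the offline Stores.

We replaced hardware by first scaling out TiKV with 2 new machines and then scaling in 2 old machines; there are 8 TiKV instances in total. The old machines are now in the Offline state, but ReplicaChecker is not scheduling any Operators to migrate the regions that now show up as offline-peer-region.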
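To make the expected behaviour concrete, here is a minimal sketch under simplifying assumptions: a region with a peer on an Offline store should get a replace-offline-replica operator (move that replica to another store) or, if the region already has more peers than `max-replicas`, a remove-extra-replica operator. The function name `pick_replica_operator` and its decision order are hypothetical; PD's real replica checker also looks at down peers, location labels, store limits and operator limits.

```python
# Hypothetical, heavily simplified model of how a replica checker might pick
# an operator for one region; it is NOT PD's actual implementation.

def pick_replica_operator(peer_store_ids, store_states, max_replicas=3):
    """Return (operator_kind, store_id) for one region, or (None, None).

    peer_store_ids: store IDs holding a peer of the region.
    store_states:   mapping of store ID -> state name ("Up", "Offline", ...).
    """
    offline = [s for s in peer_store_ids if store_states.get(s) == "Offline"]
    if offline:
        if len(peer_store_ids) > max_replicas:
            # Already over-replicated: dropping the offline peer is enough.
            return ("remove-extra-replica", offline[0])
        # Otherwise the offline replica has to be moved to another store.
        return ("replace-offline-replica", offline[0])
    if len(peer_store_ids) < max_replicas:
        return ("make-up-replica", None)
    if len(peer_store_ids) > max_replicas:
        return ("remove-extra-replica", None)
    return (None, None)


states = {1: "Up", 2: "Up", 3: "Up", 7: "Offline"}
print(pick_replica_operator([1, 2, 7], states))     # ('replace-offline-replica', 7)
print(pick_replica_operator([1, 2, 3, 7], states))  # ('remove-extra-replica', 7)
```

In this model every region touching store 7 yields an operator, which is why an empty replace-offline-replica / remove-extra-replica panel points to something outside the per-region decision (limits, checker not running, and so on).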

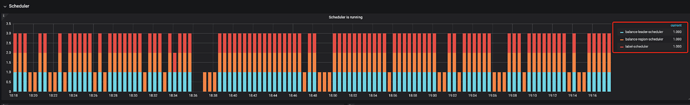

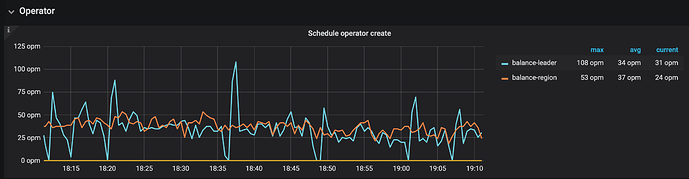

The dashboard shows only balance-region and balance-leader operators; there are no replace-offline-replica or remove-extra-replica operators at all.

According to the documentation, ReplicaChecker cannot be disabled; only balance-leader-scheduler and balance-region-scheduler can be turned off.

Could someone please take a look at how to get ReplicaChecker to process these offline-peer-region regions? Thanks in advance.

【Steps to reproduce】

Run TiKV scale-in. The 2 TiKV stores show status Offline, but no data is migrated off them.
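The Offline state and the remaining region count of each store can be checked from PD's store list, for example with `pd-ctl store` or the HTTP endpoint `/pd/api/v1/stores`. The sketch below assumes the response layout `stores[].store.id`, `stores[].store.state_name` and `stores[].status.region_count` (verify against your PD version) and uses made-up sample data. The helper name `offline_stores` is hypothetical.

```python
import json


def offline_stores(stores_json):
    """Return {store_id: region_count} for stores whose state_name is "Offline"."""
    doc = json.loads(stores_json)
    result = {}
    for item in doc.get("stores", []):
        store = item.get("store", {})
        status = item.get("status", {})
        if store.get("state_name") == "Offline":
            result[store["id"]] = status.get("region_count", 0)
    return result


# Made-up sample shaped like an assumed /pd/api/v1/stores response.
sample = """{"count": 3, "stores": [
  {"store": {"id": 1, "state_name": "Up"}, "status": {"region_count": 1200}},
  {"store": {"id": 7, "state_name": "Offline"}, "status": {"region_count": 950}},
  {"store": {"id": 8, "state_name": "Offline"}, "status": {"region_count": 940}}
]}"""
print(offline_stores(sample))  # {7: 950, 8: 940}
```

Feeding it the output of `curl http://<pd-address>/pd/api/v1/stores` a few minutes apart shows whether data is actually leaving the Offline stores: if their region counts never decrease, nothing is being migrated.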

PD configuration:

    {
      "replication": {
        "enable-placement-rules": "false",
        "location-labels": "",
        "max-replicas": 3,
        "strictly-match-label": "false"
      },
      "schedule": {
        "enable-cross-table-merge": "false",
        "enable-debug-metrics": "true",
        "enable-location-replacement": "true",
        "enable-make-up-replica": "true",
        "enable-one-way-merge": "false",
        "enable-remove-down-replica": "true",
        "enable-remove-extra-replica": "true",
        "enable-replace-offline-replica": "true",
        "high-space-ratio": 0.8,
        "hot-region-cache-hits-threshold": 3,
        "hot-region-schedule-limit": 4,
        "leader-schedule-limit": 4,
        "leader-schedule-policy": "count",
        "low-space-ratio": 0.9,
        "max-merge-region-keys": 200000,
        "max-merge-region-size": 20,
        "max-pending-peer-count": 16,
        "max-snapshot-count": 8,
        "max-store-down-time": "30m0s",
        "merge-schedule-limit": 8,
        "patrol-region-interval": "100ms",
        "region-schedule-limit": 32,
        "replica-schedule-limit": 64,
        "scheduler-max-waiting-operator": 5,
        "split-merge-interval": "1h0m0s",
        "store-balance-rate": 15,
        "store-limit-mode": "manual",
        "tolerant-size-ratio": 0
      }
    }
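A configuration like this can also be screened for switches that would suppress these operators. This is a minimal sketch, assuming that `enable-replace-offline-replica`, `enable-remove-extra-replica` and a positive `replica-schedule-limit` are the relevant gates; store limits and other factors are not covered. The helper name `replica_checker_blockers` is hypothetical. Applied to the values posted here, it reports nothing.

```python
import json


def replica_checker_blockers(config_json):
    """Return settings that would keep offline-store operators from being
    created (simplified, assumed rules; not an exhaustive PD check)."""
    schedule = json.loads(config_json).get("schedule", {})
    problems = []
    for key in ("enable-replace-offline-replica", "enable-remove-extra-replica"):
        # The posted config shows these switches as strings such as "true";
        # str() also accepts real JSON booleans.
        if str(schedule.get(key, "true")).lower() != "true":
            problems.append(key + " is disabled")
    if int(schedule.get("replica-schedule-limit", 0)) <= 0:
        problems.append("replica-schedule-limit is not positive")
    return problems


# Relevant keys copied from the posted PD configuration.
posted = """{"schedule": {
    "enable-remove-extra-replica": "true",
    "enable-replace-offline-replica": "true",
    "replica-schedule-limit": 64}}"""
print(replica_checker_blockers(posted))  # []
```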

【Symptoms and impact】

No Operators are migrating data off the offline TiKV Stores.

【Attachments】