
【Overview】 Scenario and problem summary

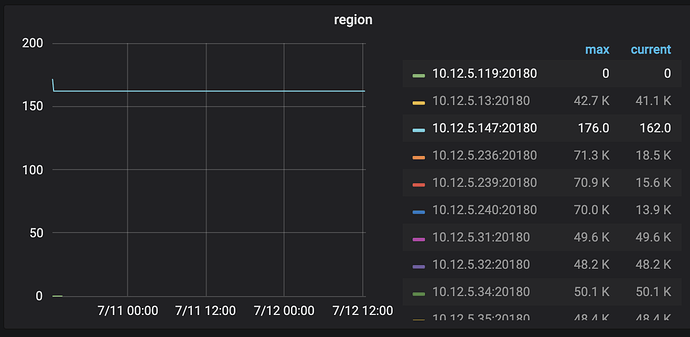

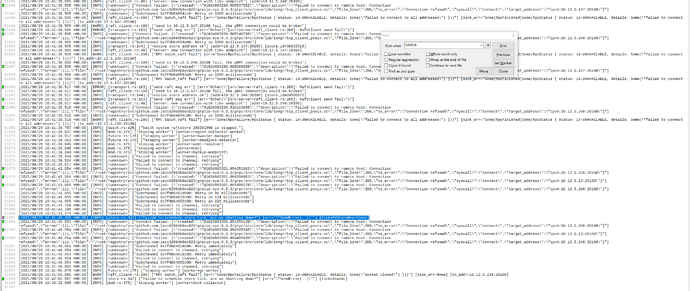

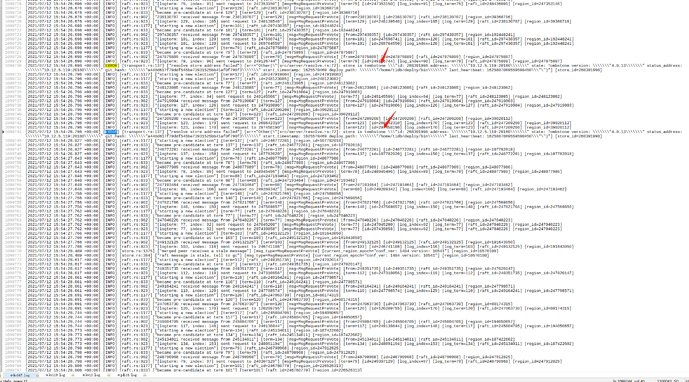

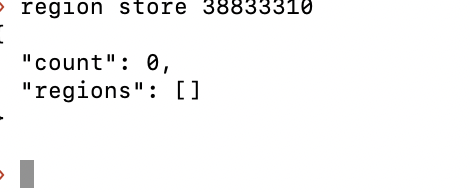

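The cluster is being scaled in, and node 147 has been stuck in the Pending Offline state. Its region count stopped decreasing with 176 regions remaining. Running the PD command `region store 38833310` (38833310 is the store ID of node 147) showed 14 regions. After I moved those regions off the store with transfer-region, PD reported 0 regions for it, and the region count in the monitoring dropped by 14 as well. The count is now stuck at 162 and no longer moves.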
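The check described above (which regions still have a peer on the store being taken offline) can be scripted. This is only a minimal sketch: it assumes the `region store` output is JSON with a top-level `regions` array whose entries carry `id` and `peers[].store_id`. Those field names are an assumption and are not shown in this post; `regions_on_store` is a hypothetical helper, and the sample data is made up.

```python
import json
from typing import List


def regions_on_store(region_json: str, store_id: int) -> List[int]:
    """Return the IDs of regions that still have a peer on store_id.

    Assumes the JSON shape {"regions": [{"id": ..., "peers": [{"store_id": ...}]}]}.
    """
    data = json.loads(region_json)
    ids = []
    for region in data.get("regions") or []:
        peers = region.get("peers") or []
        if any(peer.get("store_id") == store_id for peer in peers):
            ids.append(region["id"])
    return sorted(ids)


# Made-up sample in the assumed shape; this is NOT real output from the cluster.
sample_regions = json.dumps({
    "count": 2,
    "regions": [
        {"id": 101, "peers": [{"id": 1, "store_id": 38833310},
                              {"id": 2, "store_id": 534172077}]},
        {"id": 102, "peers": [{"id": 3, "store_id": 534172210}]},
    ],
})

print(regions_on_store(sample_regions, 38833310))
```

Running the same check before and after each transfer would show whether the list for store 38833310 is actually shrinking.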

Store information

{
  "count": 10,
  "stores": [
    {
      "store": {
        "id": 534172077,
        "address": "10.12.5.31:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.31:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625607322,
        "deploy_path": "/home/tidb/deploy/tikv-20180/bin",
        "last_heartbeat": 1626063677867766687,
        "state_name": "Up"
      },
      "status": {
        "capacity": "3.444TiB",
        "available": "1.394TiB",
        "used_size": "2.038TiB",
        "leader_count": 18273,
        "leader_weight": 1,
        "leader_score": 18273,
        "leader_size": 1767721,
        "region_count": 49656,
        "region_weight": 1,
        "region_score": 4828311,
        "region_size": 4828311,
        "start_ts": "2021-07-06T21:35:22Z",
        "last_heartbeat_ts": "2021-07-12T04:21:17.867766687Z",
        "uptime": "126h45m55.867766687s"
      }
    },
    {
      "store": {
        "id": 534204559,
        "address": "10.12.5.34:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.34:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625625018,
        "deploy_path": "/home/tidb/deploy/tikv-20180/bin",
        "last_heartbeat": 1626063678056973929,
        "state_name": "Up"
      },
      "status": {
        "capacity": "3.444TiB",
        "available": "1.389TiB",
        "used_size": "2.042TiB",
        "leader_count": 18266,
        "leader_weight": 1,
        "leader_score": 18266,
        "leader_size": 1786799,
        "region_count": 50183,
        "region_weight": 1,
        "region_score": 4844400,
        "region_size": 4844400,
        "start_ts": "2021-07-07T02:30:18Z",
        "last_heartbeat_ts": "2021-07-12T04:21:18.056973929Z",
        "uptime": "121h51m0.056973929s"
      }
    },
    {
      "store": {
        "id": 24478148,
        "address": "10.12.5.236:20160",
        "state": 1,
        "version": "4.0.13",
        "status_address": "10.12.5.236:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625578141,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1626063680893274157,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "4.611TiB",
        "used_size": "646.2GiB",
        "leader_count": 455,
        "leader_weight": 2,
        "leader_score": 227.5,
        "leader_size": 50740,
        "region_count": 18465,
        "region_weight": 2,
        "region_score": 896308.5,
        "region_size": 1792617,
        "start_ts": "2021-07-06T13:29:01Z",
        "last_heartbeat_ts": "2021-07-12T04:21:20.893274157Z",
        "uptime": "134h52m19.893274157s"
      }
    },
    {
      "store": {
        "id": 262397455,
        "address": "10.12.5.13:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.13:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625972309,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1626063682423247624,
        "state_name": "Up"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "4.408TiB",
        "used_size": "1.472TiB",
        "leader_count": 18270,
        "leader_weight": 1,
        "leader_score": 18270,
        "leader_size": 1770619,
        "region_count": 41208,
        "region_weight": 1,
        "region_score": 4129335,
        "region_size": 4129335,
        "start_ts": "2021-07-11T02:58:29Z",
        "last_heartbeat_ts": "2021-07-12T04:21:22.423247624Z",
        "uptime": "25h22m53.423247624s"
      }
    },
    {
      "store": {
        "id": 534172210,
        "address": "10.12.5.32:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.32:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625607928,
        "deploy_path": "/home/tidb/deploy/tikv-20180/bin",
        "last_heartbeat": 1626063678426610555,
        "state_name": "Up"
      },
      "status": {
        "capacity": "3.444TiB",
        "available": "1.407TiB",
        "used_size": "2.022TiB",
        "leader_count": 18264,
        "leader_weight": 1,
        "leader_score": 18264,
        "leader_size": 1776709,
        "region_count": 48242,
        "region_weight": 1,
        "region_score": 4787261,
        "region_size": 4787261,
        "start_ts": "2021-07-06T21:45:28Z",
        "last_heartbeat_ts": "2021-07-12T04:21:18.426610555Z",
        "uptime": "126h35m50.426610555s"
      }
    },
    {
      "store": {
        "id": 534402022,
        "address": "10.12.5.35:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.35:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625756847,
        "deploy_path": "/home/tidb/deploy/tikv-20180/bin",
        "last_heartbeat": 1626063685879831998,
        "state_name": "Up"
      },
      "status": {
        "capacity": "3.444TiB",
        "available": "1.365TiB",
        "used_size": "2.065TiB",
        "leader_count": 18263,
        "leader_weight": 1,
        "leader_score": 18263,
        "leader_size": 1744583,
        "region_count": 48435,
        "region_weight": 1,
        "region_score": 24401539.15261078,
        "region_size": 4779096,
        "sending_snap_count": 6,
        "start_ts": "2021-07-08T15:07:27Z",
        "last_heartbeat_ts": "2021-07-12T04:21:25.879831998Z",
        "uptime": "85h13m58.879831998s"
      }
    },
    {
      "store": {
        "id": 534414223,
        "address": "10.12.5.36:20160",
        "version": "4.0.13",
        "status_address": "10.12.5.36:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625727955,
        "deploy_path": "/home/tidb/deploy/tikv-20180/bin",
        "last_heartbeat": 1626063685177102463,
        "state_name": "Up"
      },
      "status": {
        "capacity": "3.444TiB",
        "available": "1.419TiB",
        "used_size": "2.013TiB",
        "leader_count": 18264,
        "leader_weight": 1,
        "leader_score": 18264,
        "leader_size": 1902987,
        "region_count": 48760,
        "region_weight": 1,
        "region_score": 4833216,
        "region_size": 4833216,
        "sending_snap_count": 1,
        "start_ts": "2021-07-08T07:05:55Z",
        "last_heartbeat_ts": "2021-07-12T04:21:25.177102463Z",
        "uptime": "93h15m30.177102463s"
      }
    },
    {
      "store": {
        "id": 24480822,
        "address": "10.12.5.239:20160",
        "state": 1,
        "version": "4.0.13",
        "status_address": "10.12.5.239:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625578218,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1626063679251086555,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "5.222TiB",
        "used_size": "561.7GiB",
        "leader_count": 547,
        "leader_weight": 2,
        "leader_score": 273.5,
        "leader_size": 55834,
        "region_count": 15558,
        "region_weight": 2,
        "region_score": 780357.5,
        "region_size": 1560715,
        "start_ts": "2021-07-06T13:30:18Z",
        "last_heartbeat_ts": "2021-07-12T04:21:19.251086555Z",
        "uptime": "134h51m1.251086555s"
      }
    },
    {
      "store": {
        "id": 24590972,
        "address": "10.12.5.240:20160",
        "state": 1,
        "version": "4.0.13",
        "status_address": "10.12.5.240:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625578262,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1626063683722675545,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "5.214TiB",
        "used_size": "512.6GiB",
        "leader_count": 686,
        "leader_weight": 2,
        "leader_score": 343,
        "leader_size": 71494,
        "region_count": 13789,
        "region_weight": 2,
        "region_score": 696865,
        "region_size": 1393730,
        "start_ts": "2021-07-06T13:31:02Z",
        "last_heartbeat_ts": "2021-07-12T04:21:23.722675545Z",
        "uptime": "134h50m21.722675545s"
      }
    },
    {
      "store": {
        "id": 38833310,
        "address": "10.12.5.147:20160",
        "state": 1,
        "version": "4.0.13",
        "status_address": "10.12.5.147:20180",
        "git_hash": "a448d617f79ddf545be73931525bb41af0f790f3",
        "start_timestamp": 1625578102,
        "deploy_path": "/home/tidb/deploy/bin",
        "last_heartbeat": 1626063684247104886,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "5.952TiB",
        "available": "5.606TiB",
        "used_size": "20.73GiB",
        "leader_count": 0,
        "leader_weight": 2,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 10,
        "region_weight": 2,
        "region_score": 172.5,
        "region_size": 345,
        "start_ts": "2021-07-06T13:28:22Z",
        "last_heartbeat_ts": "2021-07-12T04:21:24.247104886Z",
        "uptime": "134h53m2.247104886s"
      }
    }
  ]
}
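To make the store listing easier to scan, the stores that are not in the `Up` state can be pulled out along with their remaining leader and region counts. The following is a rough sketch rather than an official tool: `draining_stores` is a hypothetical helper, it assumes the listing is valid JSON (straight quotes), and the sample is trimmed to three stores and to the fields it reads, with values copied from the listing.

```python
import json
from typing import List, Tuple


def draining_stores(store_json: str) -> List[Tuple[str, str, int, int]]:
    """Return (address, state_name, leader_count, region_count) for every
    store whose state_name is not "Up", fewest remaining regions first."""
    data = json.loads(store_json)
    rows = []
    for item in data["stores"]:
        store, status = item["store"], item["status"]
        if store.get("state_name") != "Up":
            rows.append((
                store["address"],
                store["state_name"],
                status.get("leader_count", 0),
                status.get("region_count", 0),
            ))
    return sorted(rows, key=lambda row: row[3])


# Trimmed sample: only three of the ten stores, only the fields used here.
sample_stores = json.dumps({
    "count": 3,
    "stores": [
        {"store": {"id": 534172077, "address": "10.12.5.31:20160", "state_name": "Up"},
         "status": {"leader_count": 18273, "region_count": 49656}},
        {"store": {"id": 24478148, "address": "10.12.5.236:20160", "state_name": "Offline"},
         "status": {"leader_count": 455, "region_count": 18465}},
        {"store": {"id": 38833310, "address": "10.12.5.147:20160", "state_name": "Offline"},
         "status": {"leader_count": 0, "region_count": 10}},
    ],
})

for row in draining_stores(sample_stores):
    print(row)
```

Applied to the full listing, this would return the four `Offline` stores; store 38833310 (10.12.5.147) comes first, with 0 leaders and a `region_count` of 10.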

【背景】做过哪些操作

【现象】业务和数据库现象

【业务影响】

【TiDB 版本】

【附件】

- 相关日志 和 监控

-

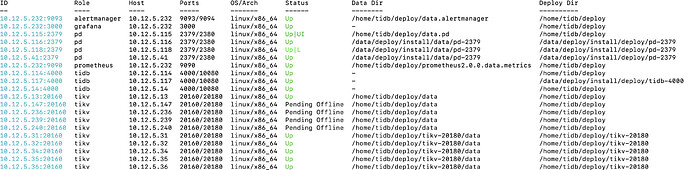

TiUP Cluster Display 信息

-

TiUP Cluster Edit Config 信息

-

TiDB- Overview 监控

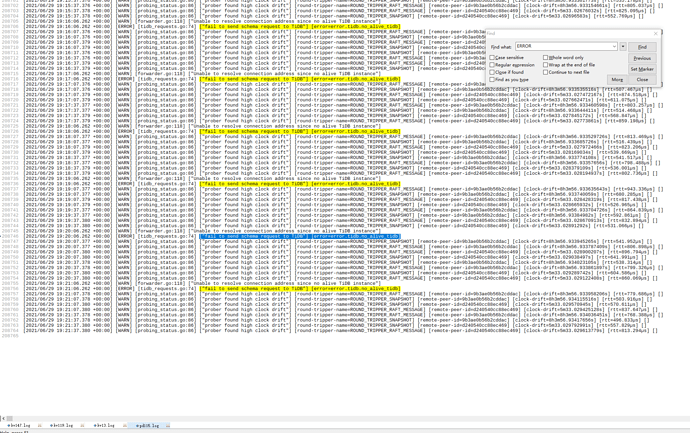

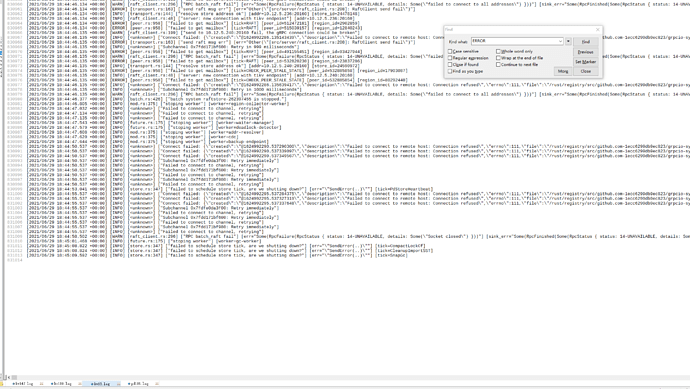

- 对应模块日志(包含问题前后1小时日志)
