cdc cli changefeed query reports the changefeed state as normal, but no data is ever replicated:

{
  "info": {
    "sink-uri": "mysql://root:aaaaaa@172.31.0.246:4000/",
    "opts": {
      "_changefeed_id": "rpt-cdc-task"
    },
    "create-time": "2021-06-17T21:01:54.814229576+08:00",
    "start-ts": 425697915851505672,
    "target-ts": 0,
    "admin-job-type": 2,
    "sort-engine": "unified",
    "sort-dir": ".",
    "config": {
      "case-sensitive": true,
      "enable-old-value": false,
      "force-replicate": false,
      "filter": {
        "rules": [
          "yxt.ote_userexam",
          "yxt.ote_examarrange",
          "yxt.sta_org_user"
        ],
        "ignore-txn-start-ts": null,
        "ddl-allow-list": null
      },
      "mounter": {
        "worker-num": 6
      },
      "sink": {
        "dispatchers": [
          {
            "matcher": [
              "yxt.ote_userexam",
              "yxt.ote_examarrange",
              "yxt.sta_org_user"
            ],
            "dispatcher": "ts"
          }
        ],
        "protocol": "default"
      },
      "cyclic-replication": {
        "enable": false,
        "replica-id": 1,
        "filter-replica-ids": null,
        "id-buckets": 0,
        "sync-ddl": true
      },
      "scheduler": {
        "type": "table-number",
        "polling-time": -1
      }
    },
    "state": "normal",
    "history": null,
    "error": null,
    "sync-point-enabled": false,
    "sync-point-interval": 600000000000
  },
  "status": {
    "resolved-ts": 0,
    "checkpoint-ts": 425697915851505672,
    "admin-job-type": 0
  },
  "count": 0,
  "task-status": [
    {
      "capture-id": "e2236b7f-5c60-4c3e-a910-6d56ddbb9d80",
      "status": {
        "tables": {
          "2943": {
            "start-ts": 425697915851505672,
            "mark-table-id": 0
          },
          "952": {
            "start-ts": 425697915851505672,
            "mark-table-id": 0
          },
          "954": {
            "start-ts": 425697915851505672,
            "mark-table-id": 0
          }
        },
        "operation": {
          "2943": {
            "delete": false,
            "boundary_ts": 425697915851505672,
            "done": false
          },
          "952": {
            "delete": false,
            "boundary_ts": 425697915851505672,
            "done": false
          },
          "954": {
            "delete": false,
            "boundary_ts": 425697915851505672,
            "done": false
          }
        },
        "admin-job-type": 1
      }
    }
  ]
}
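
For anyone hitting the same symptom, here is a rough Python sketch of how the query output above could be scanned for signs that a "normal" changefeed is not actually progressing. The field names are taken from the output in this post; the function name `stall_hints` and the three heuristics are my own assumptions, not an official TiCDC health check.

```python
import json

def stall_hints(query_output):
    """Return hints that a changefeed reported as 'normal' may be stalled.

    Heuristics only (assumed, not an official TiCDC check):
    resolved-ts still 0, checkpoint-ts equal to start-ts,
    and table operations that never reached done=true.
    """
    hints = []
    info = query_output.get("info", {})
    status = query_output.get("status", {})
    if info.get("state") == "normal" and status.get("resolved-ts") == 0:
        hints.append("state is normal but resolved-ts is still 0")
    if status.get("checkpoint-ts") == info.get("start-ts"):
        hints.append("checkpoint-ts has not moved past start-ts")
    for task in query_output.get("task-status", []):
        ops = task.get("status", {}).get("operation") or {}
        pending = sorted(table for table, op in ops.items() if not op.get("done"))
        if pending:
            hints.append(
                "capture %s: operations not done for tables %s"
                % (task.get("capture-id"), ", ".join(pending))
            )
    return hints

# Trimmed copy of the query output from this post (only the fields used above).
sample = json.loads("""
{
  "info": {"start-ts": 425697915851505672, "state": "normal"},
  "status": {"resolved-ts": 0, "checkpoint-ts": 425697915851505672},
  "task-status": [
    {
      "capture-id": "e2236b7f-5c60-4c3e-a910-6d56ddbb9d80",
      "status": {
        "operation": {
          "2943": {"delete": false, "boundary_ts": 425697915851505672, "done": false},
          "952": {"delete": false, "boundary_ts": 425697915851505672, "done": false},
          "954": {"delete": false, "boundary_ts": 425697915851505672, "done": false}
        }
      }
    }
  ]
}
""")

for hint in stall_hints(sample):
    print(hint)
```

With the output from this post, all three heuristics fire, which suggests the tables were assigned to the capture but never actually started.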

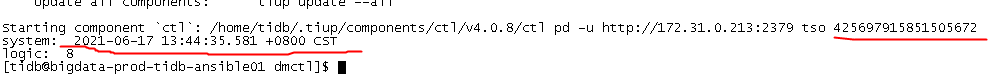

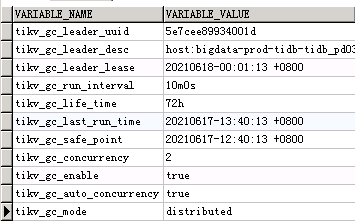

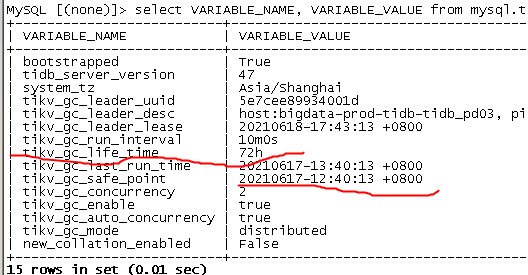

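- The TSO corresponds to 2021-06-17 13:44:35.581 +0800 CST? How long is the GC life time configured on the upstream TiDB? Did you set this TSO explicitly? Would using the default (the current time) work?

- Check whether the TiCDC log contains any other errors.

The TiCDC log shows no errors. This task needs to start from 2021-06-17 13:44:35.581, and GC is set to 48 hours.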
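
To double-check the time behind a start-ts, a TSO can be decoded by hand: the high bits are a physical timestamp in milliseconds and the low 18 bits are a logical counter. Below is a minimal Python sketch. The helper names are hypothetical, and `within_gc_life_time` is only a rough approximation (it compares against a fixed 48-hour window, while the real GC safe point is maintained by the cluster and should be checked there).

```python
from datetime import datetime, timedelta, timezone

LOGICAL_BITS = 18                      # low 18 bits of a TSO are the logical counter
CST = timezone(timedelta(hours=8))     # +08:00, the time zone used in this thread
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def tso_to_datetime(tso, tz=CST):
    """Convert the physical part of a TSO to a timezone-aware datetime."""
    physical_ms = tso >> LOGICAL_BITS
    return (EPOCH + timedelta(milliseconds=physical_ms)).astimezone(tz)

def within_gc_life_time(tso, now, gc_life_time):
    """Rough check (assumption): is the TSO younger than the GC life time?"""
    return now - tso_to_datetime(tso) < gc_life_time

start_ts = 425697915851505672
print(tso_to_datetime(start_ts).isoformat(timespec="milliseconds"))
# 2021-06-17T13:44:35.581+08:00

# "now" is taken from a log timestamp later in this thread.
now = datetime(2021, 6, 17, 23, 48, 54, tzinfo=CST)
print(within_gc_life_time(start_ts, now, timedelta(hours=48)))
# True
```

So the start-ts really does map to 13:44:35.581 +08:00, about ten hours before the logs, well inside a 48-hour GC life time.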

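The downstream is TiDB 4.0.8. Table filtering is configured, so only three tables are replicated.

1. Upstream TiDB GC settings:

The TiCDC task was started with --start-ts=425697915851505672, which corresponds to "checkpoint": "2021-06-17 13:44:35.581",

so it should be later than the GC safe point.

2. TiCDC intermittently prints the following logs: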

[2021/06/17 23:48:54.993 +08:00] [INFO] [client.go:850] ["EventFeed disconnected"] [regionID=7] [requestID=93] [span="[6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa, 6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa)"] [checkpoint=425707421009444865] [error="[CDC:ErrEventFeedEventError]not_leader:<region_id:7 > "]

[2021/06/17 23:48:54.993 +08:00] [INFO] [region_range_lock.go:365] ["unlocked range"] [lockID=11] [regionID=7] [startKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa] [endKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa] [checkpointTs=425707421009444865]

[2021/06/17 23:48:54.993 +08:00] [INFO] [region_cache.go:816] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=0] [regionID=7]

[2021/06/17 23:48:54.993 +08:00] [INFO] [region_range_lock.go:217] ["range locked"] [lockID=11] [regionID=7] [startKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa] [endKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa] [checkpointTs=425707421009444865]

After setting the log level to debug, it keeps printing:

[2021/06/17 23:57:50.080 +08:00] [DEBUG] [changefeed.go:768] ["skip update resolved ts"] [taskPositions=0] [taskStatus=1]

[2021/06/17 23:57:50.623 +08:00] [DEBUG] [changefeed.go:768] ["skip update resolved ts"] [taskPositions=0] [taskStatus=1]

[2021/06/17 23:57:51.217 +08:00] [DEBUG] [changefeed.go:768] ["skip update resolved ts"] [taskPositions=0] [taskStatus=1]

[2021/06/17 23:57:51.900 +08:00] [DEBUG] [changefeed.go:768] ["skip update resolved ts"] [taskPositions=0] [taskStatus=1]

Is this normal?

3. Even when the task is created without --start-ts, the checkpoint does not advance either:

#cdc cli changefeed create --pd=http://172.31.0.213:2379 --sink-uri="mysql://root@aaaaa@172.31.0.246:4000/" --changefeed-id="rpt-cdc-task" --config=rpt-cdc.toml

#cdc cli changefeed list --pd=http://172.31.0.213:2379

[
  {
    "id": "rpt-cdc-task",
    "summary": {
      "state": "normal",
      "tso": 425707684005412873,
      "checkpoint": "2021-06-18 00:05:38.131", -- never changes
      "error": null
    }
  }
]
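
One way to confirm that the checkpoint really is frozen, rather than just slow, is to save the list output twice a few minutes apart and compare the tso values. A small Python sketch, assuming the two outputs have already been parsed as JSON (the "-- never changes" annotation above would have to be removed first). The function name `stuck_changefeeds` and the second tso value in the last example are made up for illustration.

```python
def stuck_changefeeds(before, after):
    """Return IDs whose summary tso did not increase between two snapshots."""
    earlier = {cf["id"]: cf["summary"]["tso"] for cf in before}
    return [
        cf["id"]
        for cf in after
        if cf["id"] in earlier and cf["summary"]["tso"] <= earlier[cf["id"]]
    ]

# Both snapshots carry the tso reported in this post, i.e. no progress.
snapshot_1 = [{"id": "rpt-cdc-task", "summary": {"state": "normal", "tso": 425707684005412873}}]
snapshot_2 = [{"id": "rpt-cdc-task", "summary": {"state": "normal", "tso": 425707684005412873}}]
print(stuck_changefeeds(snapshot_1, snapshot_2))   # ['rpt-cdc-task']

# Hypothetical later snapshot in which the checkpoint has moved forward.
snapshot_3 = [{"id": "rpt-cdc-task", "summary": {"state": "normal", "tso": 425707684005412874}}]
print(stuck_changefeeds(snapshot_1, snapshot_3))   # []
```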

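These three tables were fully imported with Lightning in local mode, and now they need incremental replication.

Changing it to tidb does not work either.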

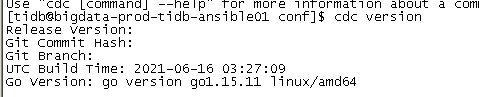

Both upstream and downstream are version 4.0.8.

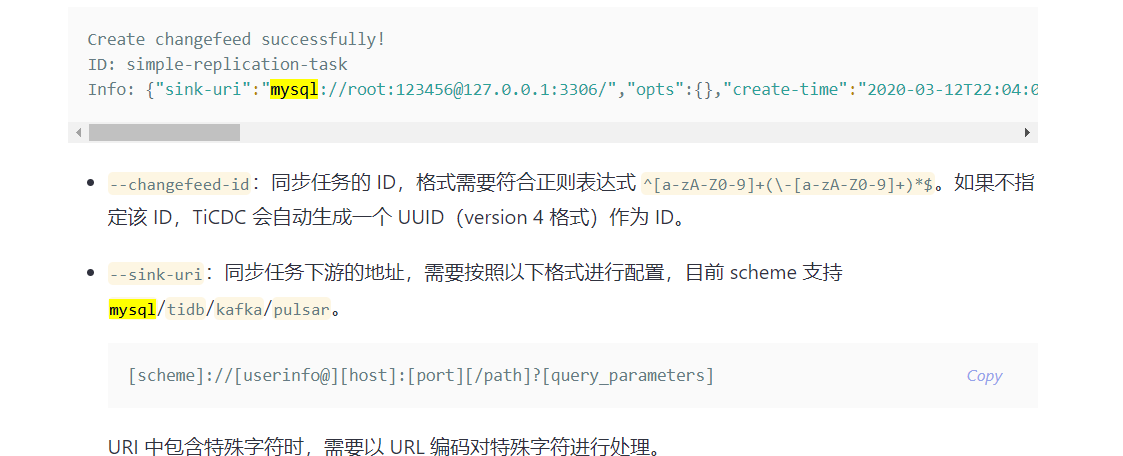

Please confirm whether the TS was obtained in the way the document below describes. Pick a start-ts that way and try the replication again.

https://docs.pingcap.com/zh/tidb/stable/troubleshoot-ticdc#ticdc-创建任务时如何选择-start-ts

I did not specify a start-ts, and it still does not work.

cdc cli changefeed create --pd=http://172.31.0.213:2379 --sink-uri="tidb://root:aaaaa@172.31.0.246:4000" --config rpt-cdc.toml --start-ts=0 --changefeed-id rpt-cdc-task-bright1

I specified start-ts=0, and it still does not work.

The checkpoint never advances.

case-sensitive = true

enable-old-value = false

[filter]

rules = ['yxt.ote_userexam', 'yxt.ote_examarrange', 'yxt.sta_org_user']

[sink]

protocol = "default"

[mounter]

worker-num = 6

[cyclic-replication]

enable = false

replica-id = 1

sync-ddl = true

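This is the configuration file.

- Do not set start-ts to 0; leave this parameter out entirely:

cdc cli changefeed create --pd=http://172.31.0.213:2379 --sink-uri="tidb://root:aaaaa@172.31.0.246:4000" --config rpt-cdc.toml --changefeed-id rpt-cdc-task-bright1

- Do you need cyclic replication? Try leaving this section out of the configuration first: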

[cyclic-replication]

enable = false

replica-id = 1

sync-ddl = true

- Delete this task and create it again, or modify the configuration and apply it with update.

startTs less than gcSafePoint: [tikv:9006]GC life time is shorter than transaction duration, transaction starts at 425697915851505672, GC safe point is 18446744073709551615

This error is reported when creating the task.
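
A side note on the numbers in that error: the reported GC safe point, 18446744073709551615, is exactly 2^64 - 1, the largest unsigned 64-bit value, so any start-ts would compare as "less than" it. The Python sketch below pulls both numbers out of the message and checks this. The regular expression and the function name `parse_gc_error` are assumptions based on this one message, and reading the maximum value as a placeholder rather than a real safe point is my guess, not something confirmed in this thread.

```python
import re

MAX_UINT64 = 2**64 - 1

def parse_gc_error(message):
    """Extract (start_ts, gc_safe_point) from the error text, or None if absent."""
    match = re.search(r"transaction starts at (\d+), GC safe point is (\d+)", message)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

message = (
    "startTs less than gcSafePoint: [tikv:9006]GC life time is shorter than "
    "transaction duration, transaction starts at 425697915851505672, "
    "GC safe point is 18446744073709551615"
)

start_ts, safe_point = parse_gc_error(message)
print(start_ts < safe_point)        # True: the check fails for this start-ts
print(safe_point == MAX_UINT64)     # True: the safe point is the uint64 maximum
```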

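1. The resolved-ts in the query output at the top stays at 0, so it looks like the TiCDC cluster itself is stuck. Please delete the task, restart the cdc process, and then create the task again;

2. Please check whether the three replicated tables meet the following restrictions:

[2021/06/18 17:22:52.272 +08:00] [WARN] [client_changefeed.go:208] ["this changefeed has been deleted, the residual meta data will be completely deleted within 24 hours."]

This is what is shown when deleting the task.

I have already restarted CDC.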