```
[root@server ~]# tiup ctl:v5.0.1 pd -u http://192.168.100.94:2379 store
Starting component ctl: /root/.tiup/components/ctl/v5.0.1/ctl pd -u http://192.168.100.94:2379 store
{
  "count": 7,
  "stores": [
    {
      "store": {
        "id": 3651246,
        "address": "192.168.100.187:3930",
        "state": 1,
        "labels": [
          {
            "key": "engine",
            "value": "tiflash"
          }
        ],
        "version": "v5.0.1",
        "peer_address": "192.168.100.187:20170",
        "status_address": "192.168.100.187:20292",
        "git_hash": "1821cf655bc90e1fab6e6154cfe994c19c75d377",
        "start_timestamp": 1623142668,
        "deploy_path": "/home/tidb-deploy/tiflash-9000/bin/tiflash",
        "last_heartbeat": 1623751836900362022,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "0B",
        "available": "0B",
        "used_size": "0B",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 0,
        "region_weight": 1,
        "region_score": 0,
        "region_size": 0,
        "start_ts": "2021-06-08T16:57:48+08:00",
        "last_heartbeat_ts": "2021-06-15T18:10:36.900362022+08:00",
        "uptime": "169h12m48.900362022s"
      }
    },
    {
      "store": {
        "id": 3691328,
        "address": "192.168.100.186:3930",
        "labels": [
          {
            "key": "engine",
            "value": "tiflash"
          }
        ],
        "version": "v5.0.1",
        "peer_address": "192.168.100.186:20170",
        "status_address": "192.168.100.186:20292",
        "git_hash": "1821cf655bc90e1fab6e6154cfe994c19c75d377",
        "start_timestamp": 1624323601,
        "deploy_path": "/home/tidb-deploy/tiflash-9000/bin/tiflash",
        "last_heartbeat": 1624334734049585406,
        "state_name": "Up"
      },
      "status": {
        "capacity": "44.16GiB",
        "available": "41.24GiB",
        "used_size": "2.921GiB",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 131,
        "region_weight": 1,
        "region_score": 7752,
        "region_size": 7752,
        "start_ts": "2021-06-22T09:00:01+08:00",
        "last_heartbeat_ts": "2021-06-22T12:05:34.049585406+08:00",
        "uptime": "3h5m33.049585406s"
      }
    },
    {
      "store": {
        "id": 7332002,
        "address": "192.168.100.126:20160",
        "version": "5.0.1",
        "status_address": "192.168.100.126:20180",
        "git_hash": "e26389a278116b2f61addfa9f15ca25ecf38bc80",
        "start_timestamp": 1624323577,
        "deploy_path": "/home/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1624334730925288853,
        "state_name": "Up"
      },
      "status": {
        "capacity": "440.9GiB",
        "available": "405GiB",
        "used_size": "12.71GiB",
        "leader_count": 3625,
        "leader_weight": 1,
        "leader_score": 3625,
        "leader_size": 23609,
        "region_count": 10883,
        "region_weight": 1,
        "region_score": 78120,
        "region_size": 78120,
        "start_ts": "2021-06-22T08:59:37+08:00",
        "last_heartbeat_ts": "2021-06-22T12:05:30.925288853+08:00",
        "uptime": "3h5m53.925288853s"
      }
    },
    {
      "store": {
        "id": 7982622,
        "address": "192.168.100.127:20160",
        "version": "5.0.1",
        "status_address": "192.168.100.127:20180",
        "git_hash": "e26389a278116b2f61addfa9f15ca25ecf38bc80",
        "start_timestamp": 1624323577,
        "deploy_path": "/home/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1624334730334522569,
        "state_name": "Up"
      },
      "status": {
        "capacity": "440.9GiB",
        "available": "405.1GiB",
        "used_size": "12.63GiB",
        "leader_count": 3625,
        "leader_weight": 1,
        "leader_score": 3625,
        "leader_size": 26096,
        "region_count": 10883,
        "region_weight": 1,
        "region_score": 78120,
        "region_size": 78120,
        "start_ts": "2021-06-22T08:59:37+08:00",
        "last_heartbeat_ts": "2021-06-22T12:05:30.334522569+08:00",
        "uptime": "3h5m53.334522569s"
      }
    },
    {
      "store": {
        "id": 9235861,
        "address": "192.168.100.94:20160",
        "version": "5.0.1",
        "status_address": "192.168.100.94:20180",
        "git_hash": "e26389a278116b2f61addfa9f15ca25ecf38bc80",
        "start_timestamp": 1624323576,
        "deploy_path": "/home/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1624334728907333613,
        "state_name": "Up"
      },
      "status": {
        "capacity": "494.7GiB",
        "available": "385.1GiB",
        "used_size": "12.64GiB",
        "leader_count": 3633,
        "leader_weight": 1,
        "leader_score": 3633,
        "leader_size": 28415,
        "region_count": 10883,
        "region_weight": 1,
        "region_score": 78120,
        "region_size": 78120,
        "start_ts": "2021-06-22T08:59:36+08:00",
        "last_heartbeat_ts": "2021-06-22T12:05:28.907333613+08:00",
        "uptime": "3h5m52.907333613s"
      }
    },
    {
      "store": {
        "id": 70002,
        "address": "192.168.100.186:20160",
        "state": 1,
        "version": "5.0.1",
        "status_address": "192.168.100.186:20180",
        "git_hash": "e26389a278116b2f61addfa9f15ca25ecf38bc80",
        "start_timestamp": 1624323576,
        "deploy_path": "/home/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1624334728528215947,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "44.16GiB",
        "available": "19.91GiB",
        "used_size": "8.49GiB",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 0,
        "region_weight": 1,
        "region_score": 0,
        "region_size": 0,
        "start_ts": "2021-06-22T08:59:36+08:00",
        "last_heartbeat_ts": "2021-06-22T12:05:28.528215947+08:00",
        "uptime": "3h5m52.528215947s"
      }
    },
    {
      "store": {
        "id": 70059,
        "address": "192.168.100.187:20160",
        "version": "5.0.1",
        "status_address": "192.168.100.187:20180",
        "git_hash": "e26389a278116b2f61addfa9f15ca25ecf38bc80",
        "start_timestamp": 1623136089,
        "deploy_path": "/home/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1623751882105124348,
        "state_name": "Down"
      },
      "status": {
        "capacity": "0B",
        "available": "0B",
        "used_size": "0B",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 0,
        "region_weight": 1,
        "region_score": 0,
        "region_size": 0,
        "start_ts": "2021-06-08T15:08:09+08:00",
        "last_heartbeat_ts": "2021-06-15T18:11:22.105124348+08:00",
        "uptime": "171h3m13.105124348s"
      }
    }
  ]
}
```
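The seven entries above are long. A small script can list just the stores that are not Up, together with their region counts. This is a minimal sketch. It assumes the `pd-ctl store` output was saved to a text file; if the `Starting component` banner ends up in that file, the parser skips it. The file names `pd_stores.py` and `stores.txt` are hypothetical. The script also treats the numeric `state` field as kvproto's `metapb.StoreState` (0 Up, 1 Offline, 2 Tombstone). In the output above, Offline stores show `"state": 1` and Up stores have no `state` field, so the sketch assumes a missing field means 0.

```python
import json
import sys

# Assumed decoding of the numeric "state" field (kvproto metapb.StoreState).
# Up stores in the output above carry no "state" field, so missing is read as 0.
META_STATE = {0: "Up", 1: "Offline", 2: "Tombstone"}


def parse_pd_ctl_output(text):
    """Parse saved `pd-ctl store` output, skipping any banner before the JSON."""
    return json.loads(text[text.index("{"):])


def unhealthy_stores(payload):
    """Return one summary row for every store whose state_name is not "Up"."""
    rows = []
    for entry in payload.get("stores", []):
        store = entry["store"]
        if store.get("state_name") == "Up":
            continue
        rows.append({
            "id": store["id"],
            "address": store["address"],
            "state_name": store.get("state_name", ""),
            "meta_state": META_STATE.get(store.get("state", 0), "unknown"),
            "region_count": entry.get("status", {}).get("region_count", 0),
        })
    return rows


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 pd_stores.py stores.txt
    with open(sys.argv[1], encoding="utf-8") as f:
        for row in unhealthy_stores(parse_pd_ctl_output(f.read())):
            print("{id:>8} {address:<22} state_name={state_name:<8} "
                  "meta_state={meta_state:<9} region_count={region_count}".format(**row))
```

After saving the output with `tiup ctl:v5.0.1 pd -u http://192.168.100.94:2379 store > stores.txt`, the script prints rows for 3651246, 70002 and 70059. Store 70059 has no `state` field, so under this decoding its recorded state reads as Up even though `state_name` is Down. That is only an inference from the JSON, and `store 70059` in pd-ctl would confirm what PD currently holds.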

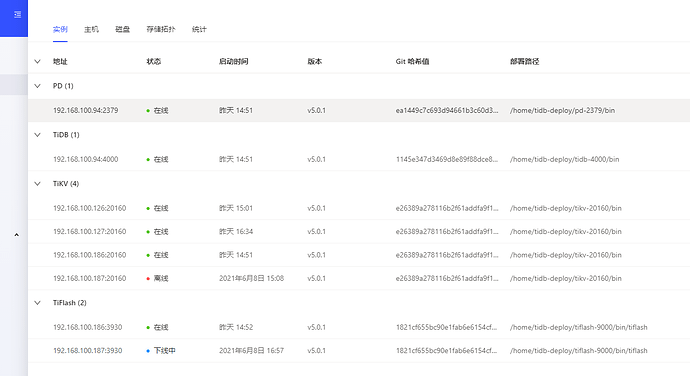

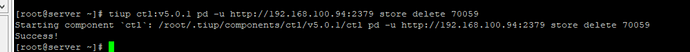

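The problematic store is storeid = 70059.

I had actually posted this at the top as well.

Before: after running delete, the store stayed in the Offline state, and "region_count": 949 stayed at that value and never dropped to 0.

Now region_count is 0, but the store is still there and cannot be deleted.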
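As PD Control documents it, removing a store goes roughly like this. `store delete <id>` moves the store from Up to Offline. PD then turns an Offline store into Tombstone once no regions remain on it. Finally, `store remove-tombstone` cleans up Tombstone stores. The sketch below maps one store entry to the step that sequence would suggest next. `next_step` is a hypothetical helper, not part of pd-ctl. It reuses the assumed `state` decoding (missing means Up) and does not reproduce PD's actual scheduling logic.

```python
# Hypothetical helper, not part of pd-ctl: map one entry of `pd-ctl store`
# output to the next step of the store removal sequence
# (Up -> Offline -> Tombstone -> cleaned up).

# Assumed decoding of the numeric "state" field (missing means 0, Up).
META_STATE = {0: "Up", 1: "Offline", 2: "Tombstone"}


def next_step(entry):
    """Suggest a pd-ctl command (or action) for a single store entry."""
    store = entry["store"]
    store_id = store["id"]
    region_count = entry.get("status", {}).get("region_count", 0)
    meta_state = META_STATE.get(store.get("state", 0), "unknown")

    if meta_state == "Up":
        # Still recorded as Up (even if state_name says Down): start the removal.
        return f"store delete {store_id}"
    if meta_state == "Offline" and region_count > 0:
        # Regions remain: list them to see which ones are not being moved away.
        return f"region store {store_id}"
    if meta_state == "Offline":
        # Empty Offline store: PD is expected to mark it Tombstone on its own.
        return "wait for PD to mark the store Tombstone"
    if meta_state == "Tombstone":
        # Tombstone stores remain in PD's metadata until explicitly cleaned up.
        return "store remove-tombstone"
    return f"unknown state; inspect with store {store_id}"
```

Given the 70059 entry from the output above (no `state` field, region_count 0), the sketch returns `store delete 70059` because the entry as printed does not look Offline. Whether that matches what PD actually recorded is worth checking before acting on it.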