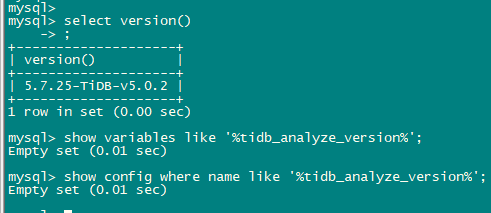

[TiDB version] 5.0.2

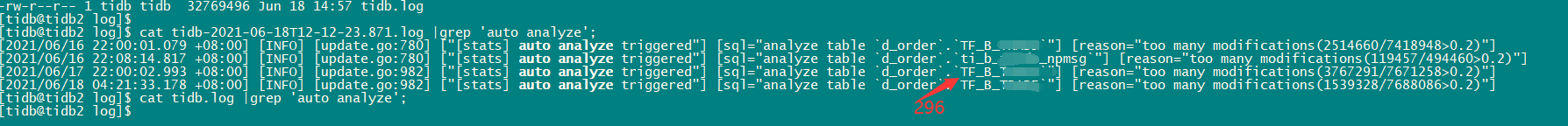

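An internal SQL query against mysql.stats_histograms is showing up as a slow query. The slow log is below; almost all of the time is spent in prewrite. Does a SELECT on this table trigger an internal data change?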

select table_id, is_index, hist_id, distinct_count, version, null_count, tot_col_size, stats_ver, flag, correlation, last_analyze_pos from mysql.stats_histograms where table_id = 296;

Time: 2021-06-17T14:51:38.307281364+08:00

Txn_start_ts: 425698968233836568

# Query_time: 8.192957657000001

Parse_time: 0

Compile_time: 0.000549031

Rewrite_time: 0.000148509

Optimize_time: 0.000193462

Wait_TS: 0.000007247

# Prewrite_time: 8.17349929 Commit_time: 0.006987921 Get_commit_ts_time: 0.000822984 Commit_backoff_time: 172.092 Backoff_types: [txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock txnLock] Resolve_lock_time: 2.271464247 Write_keys: 84 Write_size: 1919366 Prewrite_region: 6

Is_internal: true

Digest: 4b1bd9a81f4d47a43392b6e996af9f45d6b5b545e2799ad16623bd38493b2e41

Num_cop_tasks: 0

Mem_max: 1997990

Prepared: false

Plan_from_cache: false

Plan_from_binding: false

Has_more_results: false

KV_total: 3.866736011

PD_total: 0.000826715

Backoff_total: 172.092

Write_sql_response_total: 0

Succ: true

Plan: tidb_decode_plan('qgTwTDAJMjdfM…

id task estRows operator info actRows execution info memory disk

Projection_4 root 10 mysql.stats_histograms.table_id, mysql.stats_histograms.is_index, mysql.stats_histograms.hist_id, mysql.stats_histograms.distinct_count, mysql.stats_histograms.version, mysql.stats_histograms.null_count, mysql.stats_histograms.tot_col_size, mysql.stats_histograms.stats_ver, mysql.stats_histograms.flag, mysql.stats_histograms.correlation, mysql.stats_histograms.last_analyze_pos 88 time:5.09s, loops:2, Concurrency:OFF 13.5 KB N/A

└─IndexLookUp_10 root 10 88 time:5.09s, loops:2, index_task: {total_time: 459.8µs, fetch_handle: 456.1µs, build: 640ns, wait: 3.13µs}, table_task: {total_time: 5.09s, num: 1, concurrency: 5} 22.9 KB N/A

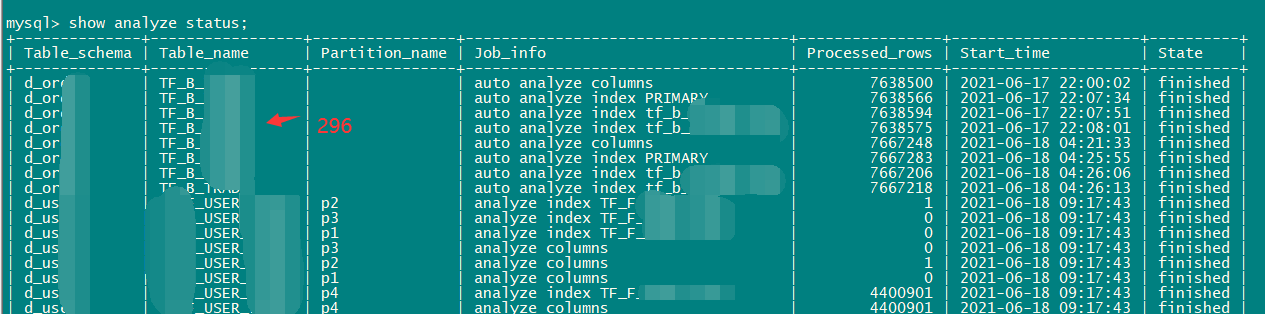

├─IndexRangeScan_8 cop[tikv] 10 table:stats_histograms, index:tbl(table_id, is_index, hist_id), range:[296,296], keep order:false, stats:pseudo 88 time:447µs, loops:3, cop_task: {num: 1, max: 465.1µs, proc_keys: 88, rpc_num: 1, rpc_time: 453.5µs, copr_cache_hit_ratio: 0.00}, tikv_task:{time:0s, loops:2}, scan_detail: {total_process_keys: 88, total_keys: 89, rocksdb: {delete_skipped_count: 0, key_skipped_count: 88, block: {cache_hit_count: 15, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

└─TableRowIDScan_9 cop[tikv] 10 table:stats_histograms, keep order:false, stats:pseudo 88 time:5.09s, loops:2, cop_task: {num: 6, max: 2.27ms, min: 871.2µs, avg: 1.41ms, p95: 2.27ms, max_proc_keys: 25, p95_proc_keys: 25, tot_proc: 4ms, tot_wait: 1ms, rpc_num: 15, rpc_time: 13.8ms, copr_cache_hit_ratio: 0.00}, ResolveLock:{num_rpc:9, total_time:20.3s}, backoff{txnNotFound: 20s}, tikv_task:{proc max:1ms, min:0s, p80:1ms, p95:1ms, iters:6, tasks:6}, scan_detail: {total_process_keys: 88, total_keys: 787, rocksdb: {delete_skipped_count: 117, key_skipped_count: 1551, block: {cache_hit_count: 483, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

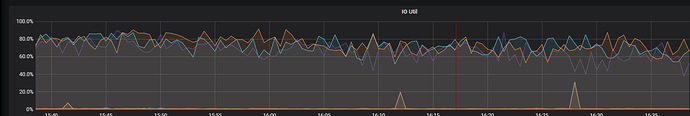

The disks had performance problems while this was happening.
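
For anyone who wants to check the time breakdown in a slow-log entry like the one above without reading it by eye, here is a rough Python sketch. It only pulls out a few of the fields that appear in this post and counts the entries in `Backoff_types`. The function name `summarize_slow_log` and the `SAMPLE` string are made up for illustration; `SAMPLE` is a shortened copy of the entry above, with the `txnLock` list cut down to three items. It is not an official TiDB tool and does no parsing beyond simple regular expressions.

```python
import re
from collections import Counter

# Shortened copy of the slow-log entry from this post (illustrative only;
# the real Backoff_types list is much longer).
SAMPLE = (
    "# Query_time: 8.192957657000001\n"
    "# Prewrite_time: 8.17349929 Commit_time: 0.006987921 "
    "Get_commit_ts_time: 0.000822984 Commit_backoff_time: 172.092 "
    "Backoff_types: [txnLock txnLock txnLock] "
    "Resolve_lock_time: 2.271464247 Write_keys: 84\n"
    "Backoff_total: 172.092\n"
)

# Field names exactly as they appear in the log above.
TIMING_FIELDS = (
    "Query_time",
    "Prewrite_time",
    "Commit_time",
    "Resolve_lock_time",
    "Backoff_total",
)


def summarize_slow_log(text: str) -> dict:
    """Extract selected timing fields and count backoff types (hypothetical helper)."""
    fields = {}
    for name in TIMING_FIELDS:
        # \b keeps "Commit_time" from matching inside a longer field name.
        match = re.search(rf"\b{name}: ([0-9.]+)", text)
        if match:
            fields[name] = float(match.group(1))

    # Backoff_types is a space-separated list inside square brackets.
    match = re.search(r"Backoff_types: \[([^\]]*)\]", text)
    backoff_types = Counter(match.group(1).split()) if match else Counter()

    # Fraction of the total query time that was spent in prewrite, if both are known.
    share = None
    if fields.get("Query_time") and "Prewrite_time" in fields:
        share = fields["Prewrite_time"] / fields["Query_time"]

    return {
        "fields": fields,
        "backoff_types": dict(backoff_types),
        "prewrite_share": share,
    }


if __name__ == "__main__":
    summary = summarize_slow_log(SAMPLE)
    print(summary["fields"])
    print(summary["backoff_types"])
    print(summary["prewrite_share"])
```

Running it against the full entry instead of `SAMPLE` should give the same timing fields and the real `txnLock` count, which makes it easier to compare several slow-log entries from the same period.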