【Summary】After scaling out TiKV nodes with TiUP, the scale-out succeeds but no data is written to the new nodes

【Background】I ran the following TiKV scale-out command:

```shell
tiup cluster scale-out tidb-cluster-name scale-out.yaml --user tidb -i /home/tidb/.ssh/id_rsa
```

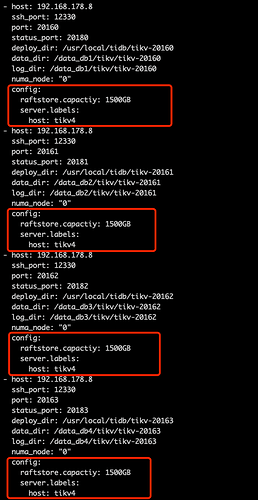

Contents of scale-out.yaml:

```yaml
tikv_servers:
  - host: 192.168.178.8
    ssh_port: 12330
    port: 20160
    status_port: 20180
    deploy_dir: /usr/local/tidb/tikv-20160
    data_dir: /data_db1/tikv/tikv-20160
    log_dir: /data_db1/tikv/tikv-20160
  - host: 192.168.178.8
    ssh_port: 12330
    port: 20161
    status_port: 20181
    deploy_dir: /usr/local/tidb/tikv-20161
    data_dir: /data_db2/tikv/tikv-20161
    log_dir: /data_db2/tikv/tikv-20161
  - host: 192.168.178.8
    ssh_port: 12330
    port: 20162
    status_port: 20182
    deploy_dir: /usr/local/tidb/tikv-20162
    data_dir: /data_db3/tikv/tikv-20162
    log_dir: /data_db3/tikv/tikv-20162
  - host: 192.168.178.8
    ssh_port: 12330
    port: 20163
    status_port: 20183
    deploy_dir: /usr/local/tidb/tikv-20163
    data_dir: /data_db4/tikv/tikv-20163
    log_dir: /data_db4/tikv/tikv-20163
```
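When several TiKV instances share one host, each one needs its own `port`, `status_port`, `deploy_dir` and `data_dir`. A minimal sketch of that check, assuming the four entries above are transcribed by hand into Python dicts (so no YAML library is needed); `conflicts` is a hypothetical helper, not a TiUP feature:

```python
from collections import Counter

# The four tikv_servers entries from scale-out.yaml, transcribed by hand.
TIKV_SERVERS = [
    {"host": "192.168.178.8", "port": 20160, "status_port": 20180,
     "deploy_dir": "/usr/local/tidb/tikv-20160", "data_dir": "/data_db1/tikv/tikv-20160"},
    {"host": "192.168.178.8", "port": 20161, "status_port": 20181,
     "deploy_dir": "/usr/local/tidb/tikv-20161", "data_dir": "/data_db2/tikv/tikv-20161"},
    {"host": "192.168.178.8", "port": 20162, "status_port": 20182,
     "deploy_dir": "/usr/local/tidb/tikv-20162", "data_dir": "/data_db3/tikv/tikv-20162"},
    {"host": "192.168.178.8", "port": 20163, "status_port": 20183,
     "deploy_dir": "/usr/local/tidb/tikv-20163", "data_dir": "/data_db4/tikv/tikv-20163"},
]


def conflicts(servers, keys=("port", "status_port", "deploy_dir", "data_dir")):
    """Return (host, key, value) triples shared by more than one instance."""
    found = []
    for key in keys:
        # Count how many instances use each (host, value) pair for this key.
        counts = Counter((s["host"], s[key]) for s in servers)
        found.extend((host, key, value) for (host, value), n in counts.items() if n > 1)
    return found


print(conflicts(TIKV_SERVERS))  # → []
```

An empty list means the four instances do not collide on any of the checked fields.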

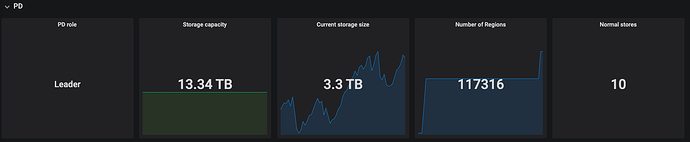

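【Symptom】TiUP reports that the scale-out completed normally, and `pd-ctl store` shows all four new stores, but their leader_score and region_count are both 0. Output for one of the new stores: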

```json
{
  "store": {
    "id": 5278951,
    "address": "192.168.178.8:20162",
    "version": "4.0.0-rc",
    "status_address": "192.168.178.8:20182",
    "git_hash": "f45d0c963df3ee4b1011caf5eb146cacd1fbbad8",
    "start_timestamp": 1623912961,
    "binary_path": "/usr/local/tidb/tikv-20162/bin/tikv-server",
    "last_heartbeat": 1623917401462523740,
    "state_name": "Up"
  },
  "status": {
    "capacity": "1.745TiB",
    "available": "1.743TiB",
    "used_size": "31.5MiB",
    "leader_count": 0,
    "leader_weight": 1,
    "leader_score": 0,
    "leader_size": 0,
    "region_count": 0,
    "region_weight": 1,
    "region_score": 0,
    "region_size": 0,
    "start_ts": "2021-06-17T14:56:01+08:00",
    "last_heartbeat_ts": "2021-06-17T16:10:01.46252374+08:00",
    "uptime": "1h14m0.46252374s"
  }
}
```
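To list which stores are still empty without reading each JSON object by eye, the output can be filtered with a short script. This is a minimal sketch that assumes the JSON shape shown above (a `store` object plus a `status` object) and, for the all-stores listing, a `stores` array wrapping such objects; `empty_stores` is a hypothetical helper name:

```python
import json
from typing import List, Tuple


def empty_stores(pd_ctl_output: str) -> List[Tuple[int, str]]:
    """Return (id, address) of every Up store whose region_count is 0.

    Assumption: the input is either one {"store": ..., "status": ...}
    object or a {"stores": [...]} wrapper around several of them.
    """
    data = json.loads(pd_ctl_output)
    entries = data.get("stores", [data])
    result = []
    for entry in entries:
        store = entry["store"]
        status = entry.get("status", {})
        # A missing region_count is treated as 0.
        if store.get("state_name") == "Up" and status.get("region_count", 0) == 0:
            result.append((store["id"], store["address"]))
    return result


# Trimmed copy of the store shown above.
sample = """{
  "store": {"id": 5278951, "address": "192.168.178.8:20162", "state_name": "Up"},
  "status": {"leader_count": 0, "region_count": 0, "used_size": "31.5MiB"}
}"""
print(empty_stores(sample))  # → [(5278951, '192.168.178.8:20162')]
```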

Log of the new TiKV node (the instance on port 20160):

```
[2021/06/17 14:56:00.794 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=addr-resolver]
[2021/06/17 14:56:00.796 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=region-collector-worker]
[2021/06/17 14:56:00.895 +08:00] [INFO] [future.rs:136] ["starting working thread"] [worker=gc-worker]
[2021/06/17 14:56:00.896 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=lock-collector]
[2021/06/17 14:56:00.959 +08:00] [INFO] [mod.rs:181] ["Storage started."]
[2021/06/17 14:56:00.971 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=split-check]
[2021/06/17 14:56:00.973 +08:00] [INFO] [node.rs:348] ["start raft store thread"] [store_id=5278949]
[2021/06/17 14:56:00.973 +08:00] [INFO] [store.rs:862] ["start store"] [takes=15.599µs] [merge_count=0] [applying_count=0] [tombstone_count=0] [region_count=0] [store_id=5278949]
[2021/06/17 14:56:00.973 +08:00] [INFO] [store.rs:913] ["cleans up garbage data"] [takes=24.983µs] [garbage_range_count=1] [store_id=5278949]
[2021/06/17 14:56:00.992 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=snapshot-worker]
[2021/06/17 14:56:00.994 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=raft-gc-worker]
[2021/06/17 14:56:00.995 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=cleanup-worker]
[2021/06/17 14:56:00.999 +08:00] [INFO] [future.rs:136] ["starting working thread"] [worker=pd-worker]
[2021/06/17 14:56:01.000 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=consistency-check]
[2021/06/17 14:56:01.003 +08:00] [WARN] [store.rs:1180] ["set thread priority for raftstore failed"] [error="Os { code: 13, kind: PermissionDenied, message: "Permission denied" }"]
[2021/06/17 14:56:01.003 +08:00] [INFO] [node.rs:167] ["put store to PD"] [store="id: 5278949 address: "192.168.178.8:20160" version: "4.0.0-rc" status_address: "192.168.178.8:20180" git_hash: "f45d0c963df3ee4b1011caf5eb146cacd1fbbad8" start_timestamp: 1623912960 binary_path: "/usr/local/tidb/tikv-20160/bin/tikv-server""]
[2021/06/17 14:56:01.011 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=cdc]
[2021/06/17 14:56:01.033 +08:00] [INFO] [future.rs:136] ["starting working thread"] [worker=waiter-manager]
[2021/06/17 14:56:01.040 +08:00] [INFO] [future.rs:136] ["starting working thread"] [worker=deadlock-detector]
[2021/06/17 14:56:01.042 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=backup-endpoint]
[2021/06/17 14:56:01.052 +08:00] [INFO] [mod.rs:335] ["starting working thread"] [worker=snap-handler]
[2021/06/17 14:56:01.054 +08:00] [INFO] [server.rs:225] ["listening on addr"] [addr=0.0.0.0:20160]
[2021/06/17 14:56:01.065 +08:00] [INFO] [server.rs:253] ["TiKV is ready to serve"]
```
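Each new TiKV logs a `put store to PD` line with the store ID it registered under, which can be matched against the IDs in the `pd-ctl store` output (here 5278949 for port 20160, while the store shown earlier for port 20162 is 5278951). A minimal sketch of collecting those IDs from the log lines, assuming the line format shown above; `registered_stores` is a hypothetical helper, not part of TiUP or TiKV:

```python
import re

# Matches the "put store to PD" line and captures the store id and address.
# The backslash before each quote is optional: TiKV normally escapes the
# inner quotes, but the log pasted above shows them unescaped.
PUT_STORE = re.compile(r'"put store to PD".*?id: (\d+) address: \\?"([^"\\]+)')


def registered_stores(log_lines):
    """Map store id -> address for every 'put store to PD' line."""
    stores = {}
    for line in log_lines:
        match = PUT_STORE.search(line)
        if match:
            stores[int(match.group(1))] = match.group(2)
    return stores


sample_log = [
    '[2021/06/17 14:56:00.959 +08:00] [INFO] [mod.rs:181] ["Storage started."]',
    '[2021/06/17 14:56:01.003 +08:00] [INFO] [node.rs:167] ["put store to PD"] '
    '[store="id: 5278949 address: "192.168.178.8:20160" version: "4.0.0-rc""]',
]
print(registered_stores(sample_log))  # → {5278949: '192.168.178.8:20160'}
```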

【TiDB Version】v4.0.0-rc

Why isn't the cluster automatically rebalancing data onto the new stores? Do I need to adjust the leader_weight and region_weight parameters?