agoo

2021 年6 月 16 日 10:56

1

【TiDB 版本】

【概述】

CREATE TABLE `t` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`bid` int(10) unsigned NOT NULL,

`cid` bigint(20) DEFAULT NULL,

`flag` tinyint(3) NOT NULL DEFAULT '0',

`last_update` timestamp DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`) /*T![clustered_index] CLUSTERED */,

KEY `x_flag` (`bid`,`cid`,`flag`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

使用如下的方式循环操作(更新操作后立刻进行读取)

while(1){

$result = dbdo("select id, bid, flag from t where flag=0 limit 5000");

if (count($result) == 0) break;

//do something with $result

dbdo("update t set flag=1 where bid in(xxx)");

}

[2021/06/12 03:40:07.707 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 74800000000003BA8C5F698000000000000004040000000000002730040000000000000000038000000000000004 lock_version: 425574218510368769 key: 74800000000003BA8C5F72800000000000010E lock_ttl: 3002 txn_size: 459 min_commit_ts: 425574218510368770"]

[2021/06/12 03:40:07.759 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 74800000000003BA8C5F698000000000000001040000000000002730040000000000167F39 lock_version: 425574218523475969 key: 74800000000003BA8C5F698000000000000004040000000000002730040000000000000001038000000000000004 lock_ttl: 3002 txn_size: 765 lock_type: Del min_commit_ts: 425574218523475970"]

[2021/06/12 03:40:07.811 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 74800000000003BA885F698000000000000004040000000000002730040000000000000000038000000000000030 lock_version: 425574218536583173 key: 74800000000003BA885F698000000000000004040000000000002730040000000000000001038000000000000030 lock_ttl: 3007 txn_size: 2157 min_commit_ts: 425574218536583174"]

这个例子中,确实可以修改程序用逻辑来避免下一次的select去扫描前一次update过的记录,

不修改程序对业务本身没有影响,但是会触发大量meet_lock报警。

所以想问下,

谢谢。

1 个赞

qizheng

2021 年6 月 16 日 11:42

2

可以看下 TiDB 监控 KV Errors 面板下面的 kv backoff ops 有哪些,以及 slow query 日志有没有记录相关 Backoff_time 和 Backoff_type

这种表现可能是因为 5.0 的新 feature async commit 引入的(或者说概率增加了)。在开启 async commit 之后(5.0 默认开启),两阶段事务第一阶段写锁完成之后就会返回成功,而 commit 阶段则会异步完成。所以此时立马去读可能就会遇到锁,之后 tidb 会重试。https://docs.pingcap.com/zh/tidb/stable/system-variables#tidb_enable_async_commit-从-v50-版本开始引入

1 个赞

agoo

2021 年6 月 17 日 04:59

4

感谢回复。

1 这次测试是在单机测试环境下做的,没有配监控面板。

2 看了乐观锁和悲观锁的文档, TiDB 锁冲突问题处理 | PingCAP Docs 流程图上面

3 刚刚测试了一下,程序一开始就加上 set tidb_enable_async_commit=0 也还是一样会出现lock。

[2021/06/17 11:33:14.261 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000A785F698000000000000001040000000000000001038000000000000001038000000000000001038000000000000001 lock_version: 425694906076364811 key: 748000000000000A785F728000000000000001 lock_ttl: 3007 txn_size: 500 min_commit_ts: 425694906076364812"]

[2021/06/17 11:33:14.327 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000A785F6980000000000000010400000000000000010380000000000189C403800000000000000103800000000000033A lock_version: 425694906089472003 key: 748000000000000A785F7280000000000001F6 lock_ttl: 3007 txn_size: 500 min_commit_ts: 425694906089472004"]

[2021/06/17 11:33:14.396 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000A785F698000000000000001040000000000000001038000000000018A4A038000000000000001038000000000000405 lock_version: 425694906115686401 key: 748000000000000A785F7280000000000003EA lock_ttl: 3007 txn_size: 500 min_commit_ts: 425694906115686402"]

解析出lock key的内容之后,看到是primary lock其实是x_flag这个index的内容,

4 explain select id, bid, flag from t where flag=0 limit 5000 执行计划如下:

+-------------------------------+----------+-----------+---------------+----------------------------------+

| id | estRows | task | access object | operator info |

+-------------------------------+----------+-----------+---------------+----------------------------------+

| Limit_8 | 0.00 | root | | offset:0, count:5000 |

| └─TableReader_14 | 0.00 | root | | data:Limit_13 |

| └─Limit_13 | 0.00 | cop[tikv] | | offset:0, count:5000 |

| └─Selection_12 | 0.00 | cop[tikv] | | eq(test.t.flag, 0) |

| └─TableRangeScan_11 | 12000.00 | cop[tikv] | table:t | range:[0,+inf], keep order:false |

+-------------------------------+----------+-----------+---------------+----------------------------------+

qizheng

2021 年6 月 18 日 07:32

5

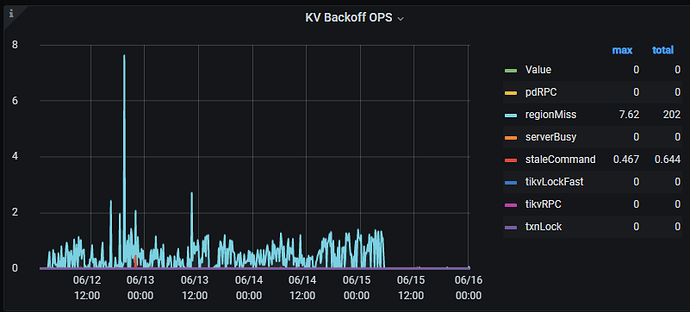

看监控主要是 regionMiss 这类由于 region balance 调度产生的 backoff,并没有看到非预期的读写冲突导致的重试,slow query 有没有记录 backoff type 类型以及比较高的 backoff time

agoo

2021 年6 月 22 日 09:14

6

找了一段生产环境报警的日志。

下面日志感觉也看不出什么内容,

tikv.log

[2021/06/22 03:00:19.851 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Region error (will back off and retry) message: \"peer is not leader for region 518529, leader ma

y Some(id: 518532 store_id: 7)\" not_leader { region_id: 518529 leader { id: 518532 store_id: 7 } }"]

[2021/06/22 03:00:23.171 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000712D85F698000000000000004040000000000002

9B1040000000000000000038000000000000003 lock_version: 425800085830893570 key: 7480000000000712D85F6980000000000000040400000000000029B104000000000000000103800000000000018C lock_tt

l: 3002 txn_size: 579 min_commit_ts: 425800085830893571"]

[2021/06/22 03:00:24.213 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000712D85F698000000000000001040000000000002

9B104000000000010BE25 lock_version: 425800086106144769 key: 7480000000000712D85F6980000000000000040400000000000029B1040000000000000001038000000000000003 lock_ttl: 3002 txn_size:965 lock_type: Del min_commit_ts: 425800086106144770"]

server1 tidb.log

[2021/06/22 03:00:03.247 +09:00] [INFO] [schema_validator.go:289] ["the schema validator enqueue, queue is too long"] ["delta max count"=1024] ["remove schema version"=231486]

[2021/06/22 03:00:16.813 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=474433] [currIdx=1] [leaderStoreID=1]

[2021/06/22 03:00:16.815 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=442837] [currIdx=2] [leaderStoreID=4]

[2021/06/22 03:00:37.840 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=48653] [currIdx=0] [leaderStoreID=7]

[2021/06/22 03:00:41.264 +09:00] [INFO] [domain.go:137] ["diff load InfoSchema success"] [usedSchemaVersion=232538] [neededSchemaVersion=232539] ["start time"=2.060643ms] [phyTblIDs="[463572,463578]"] [actionTypes="[2048,2048]"]

server2 tidb.log

[2021/06/22 03:00:14.961 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=442837] [currIdx=2] [leaderStoreID=4]

[2021/06/22 03:00:17.215 +09:00] [WARN] [2pc.go:1406] ["schemaLeaseChecker is not set for this transaction"] [conn=4025759] [sessionID=4025759] [startTS=425800084271136770] [commitTS=425800084271136771]

[2021/06/22 03:00:17.943 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=474433] [currIdx=1] [leaderStoreID=1]

[2021/06/22 03:00:19.851 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=518529] [currIdx=1] [leaderStoreID=7]

[2021/06/22 03:00:21.419 +09:00] [WARN] [2pc.go:1406] ["schemaLeaseChecker is not set for this transaction"] [conn=4025759] [sessionID=4025759] [startTS=425800085372141570] [commitTS=425800085372141571]

[2021/06/22 03:00:38.989 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=48653] [currIdx=0] [leaderStoreID=7]

server3 tidb.log

[2021/06/22 03:00:03.298 +09:00] [INFO] [ddl_worker.go:402] ["[ddl] finish DDL job"] [worker="worker 1, tp general"] [job="ID:463577, Type:truncate table, State:synced, SchemaState:public, SchemaID:21959, TableID:463508, RowCount:0, ArgLen:2, start time: 2021-06-22 03:00:03.172 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0"]

[2021/06/22 03:00:15.732 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=442837] [currIdx=2] [leaderStoreID=4]

[2021/06/22 03:00:18.730 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=474433] [currIdx=1] [leaderStoreID=1]

[2021/06/22 03:00:34.848 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=518529] [currIdx=1] [leaderStoreID=7]

[2021/06/22 03:00:35.126 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=7585] [currIdx=0] [leaderStoreID=7]

[2021/06/22 03:00:35.127 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=7585] [currIdx=0] [leaderStoreID=7]

[2021/06/22 03:00:39.779 +09:00] [INFO] [region_cache.go:971] ["switch region leader to specific leader due to kv return NotLeader"] [regionID=48653] [currIdx=0] [leaderStoreID=7]

agoo

2021 年6 月 22 日 10:00

7

刚刚又在测服测试了一下,相关日志如下:

tikv

[2021/06/22 18:27:11.841 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000ACD5F728000000000000001 lock_version: 425814663285964801 key: 748000000000000ACD5F728000000000000361 lock_ttl: 3054 txn_size: 5000 min_commit_ts: 425814663285964802"]

[2021/06/22 18:27:12.110 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000ACD5F7280000000000019ED lock_version: 425814663338393601 key: 748000000000000ACD5F728000000000001D4C lock_ttl: 3054 txn_size: 5000 min_commit_ts: 425814663338393602"]

[2021/06/22 18:27:12.557 +09:00] [WARN] [endpoint.rs:639] [error-response] [err="Key is locked (will clean up) primary_lock: 748000000000000ACD5F72800000000000900D lock_version: 425814663482572801 key: 748000000000000ACD5F7280000000000337A5 lock_ttl: 3022 txn_size: 2000 min_commit_ts: 425814663482572802"]

tidb

[2021/06/22 18:26:54.139 +09:00] [INFO] [2pc.go:397] ["[BIG_TXN]"] [session=5597] ["table ID"=2765] [size=612974] [keys=12000] [puts=12000] [dels=0] [locks=0] [checks=0] [txnStartTS=425814658646016001]

[2021/06/22 18:26:59.993 +09:00] [INFO] [gc_worker.go:674] ["[gc worker] start delete ranges"] [uuid=5e6aea97af00004] [ranges=0]

[2021/06/22 18:26:59.993 +09:00] [INFO] [gc_worker.go:714] ["[gc worker] finish delete ranges"] [uuid=5e6aea97af00004] ["num of ranges"=0] ["cost time"=349ns]

[2021/06/22 18:26:59.994 +09:00] [INFO] [gc_worker.go:737] ["[gc worker] start redo-delete ranges"] [uuid=5e6aea97af00004] ["num of ranges"=0]

[2021/06/22 18:26:59.994 +09:00] [INFO] [gc_worker.go:766] ["[gc worker] finish redo-delete ranges"] [uuid=5e6aea97af00004] ["num of ranges"=0] ["cost time"=356ns]

[2021/06/22 18:26:59.997 +09:00] [INFO] [gc_worker.go:1531] ["[gc worker] sent safe point to PD"] [uuid=5e6aea97af00004] ["safe point"=425814476691865600]

[2021/06/22 18:27:22.919 +09:00] [INFO] [update.go:982] ["[stats] auto analyze triggered"] [sql="analyze table `sorter_test`.`t`"] [reason="too many modifications(24000/12000>0.5)"]

slow-log (多次测试,只有一次有下面的慢日志)

# Time: 2021-06-22T18:27:12.449961852+09:00

# Txn_start_ts: 425814663377715202

# User@Host: nint_test[nint_test] @ 172.30.3.47 [172.30.3.47]

# Conn_ID: 5607

# Query_time: 0.368131065

# Parse_time: 0.000081951

# Compile_time: 0.000315818

# Rewrite_time: 0.000080063

# Optimize_time: 0.000129905

# Wait_TS: 0.000297785

# Cop_time: 0.365860235 Process_time: 0.04 Request_count: 1 Process_keys: 12000 Total_keys: 96002 Rocksdb_delete_skipped_count: 12000 Rocksdb_key_skipped_count: 116000 Rocksdb_block_cache_hit_count: 74

# DB: sorter_test

# Is_internal: false

# Digest: 2821c29624f9b3410b806268742454ffe9dcf64327421098e48a82574e9c2d63

# Stats: t:425814650624409608

# Num_cop_tasks: 1

# Cop_proc_avg: 0.04 Cop_proc_addr: 172.30.3.47:20162

# Cop_wait_avg: 0 Cop_wait_addr: 172.30.3.47:20162

# Mem_max: 48318

# Prepared: false

# Plan_from_cache: false

# Plan_from_binding: false

# Has_more_results: false

# KV_total: 0.067794732

# PD_total: 0.000274108

# Backoff_total: 0

# Write_sql_response_total: 0.001314748

# Succ: true

# Plan: tidb_decode_plan('2wiAMAkxNl84CTAJMAlvZmZzZXQ6MCwgY291bnQ6NTAwMAkyAQVgdGltZTozNjUuOW1zLCBsb29wczo0CU4vQQEEHAoxCTMxXzE0BUQwZGF0YTpMaW1pdF8xM2o9AHQsIGNvcF90YXNrOiB7bnVtOiAxLCBtYXg6IDM5LjIBZixwcm9jX2tleXM6IDEBhRQsIHRvdF8BFgw6IDQwASIMcnBjXwU7BDIsBQwFphAgNjcuNgEeoGNvcHJfY2FjaGVfaGl0X3JhdGlvOiAwLjAwfSwgUmVzb2x2ZUxvY2s6AX8UX3JwYzoxBWMIYWxfBUkIMjk4AYk4fQk0Ny4yIEtCCU4vQQoyIS4UMTMJMV8wejEBBGt2Cd4AewVNADMFkyk5UDE2fSwgc2Nhbl9kZXRhaWw6IHt0bwF3AdwIZXNzQvUABGFsDRMMOTYwMAHsXG9ja3NkYjoge2RlbGV0ZV9za2lwcGVkXym3MS8Ia2V5QhoAIDE2MDAwLCBibAH5BCB7OR8NOxg3NCwgcmVhFUsAMA0PPGJ5dGU6IDAgQnl0ZXN9fX0hDQEEAAohCwQxMi0QYGVxKHNvcnRlcl90ZXN0LnQuZmxhZywgMSlRCe4VASUVSQY1EQGfAG92DQEEMCxOCQFuBAEBVa4DARg0CTQ0XzExJQQAMUGqXAl0YWJsZTp0LCByYW5nZTpbMCwraW5mXQGKNGVwIG9yZGVyOmZhbHNlETD+GQH+GQH+GQFWGQE=')

# Plan_digest: 999f379b339ca2d65eb9518af9def07306a180a7591490697545c742e67d92dd

use sorter_test;

SELECT id, flag, last_update FROM t WHERE t.flag=1 LIMIT 5000;

更新 @202106221943

tidb

[2021/06/22 20:08:26.391 +09:00] [DEBUG] [server.go:450] ["new connection"] [conn=19][remoteAddr=10.21.200.54:58540]

[2021/06/22 20:08:26.399 +09:00] [DEBUG] [set.go:213] ["set session var"] [conn=19] [name=tidb_enable_async_commit] [val=0]

[2021/06/22 20:08:26.400 +09:00] [DEBUG] [set.go:213] ["set session var"] [conn=19] [name=tidb_enable_1pc] [val=0]

[2021/06/22 20:08:26.650 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 1, tp general"] [interval=1s]

[2021/06/22 20:08:26.650 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 2, tp add index"] [interval=1s]

[2021/06/22 20:08:26.651 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:26.652 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:26.864 +09:00] [INFO] [2pc.go:397] ["[BIG_TXN]"] [session=19] ["table ID"=285] [size=803714] [keys=15000] [puts=10000] [dels=5000] [locks=0] [checks=0] [txnStartTS=425816255753093121]

[2021/06/22 20:08:26.930 +09:00] [WARN] [memory_usage_alarm.go:141] ["tidb-server has the risk of OOM. Running SQLs and heap profile will be recorded in record path"] ["is server-memory-quota set"=false] ["system memory total"=8201785344] ["system memory usage"=6563168256] ["tidb-server memory usage"=29684832] [memory-usage-alarm-ratio=0.8] ["record path"="/tmp/1013_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage/record"]

[2021/06/22 20:08:27.650 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 1, tp general"] [interval=1s]

[2021/06/22 20:08:27.650 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 2, tp add index"] [interval=1s]

[2021/06/22 20:08:27.651 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:27.652 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:27.662 +09:00] [DEBUG] [txn.go:281] ["[kv] txnLatches disabled, 2pc directly"] [conn=19] []

[2021/06/22 20:08:27.727 +09:00] [DEBUG] [coprocessor.go:867] ["coprocessor encounters"] [lock="primary_lock:\"t\\200\\000\\000\\000\\000\\000\\001\\035_i\\200\\000\\000\\000\\000\\000\\000\\001\\004\\000\\000\\000\\000\\000\\000\\000\\000\\003\\200\\000\\000\\000\\000\\000\\000\\000\\003\\200\\000\\000\\000\\000\\000\\000\\001\\003\\200\\000\\000\\000\\000\\000\\000\\003\" lock_version:425816255753093121 key:\"t\\200\\000\\000\\000\\000\\000\\001\\035_r\\200\\000\\000\\000\\000\\000\\000\\001\" lock_ttl:5609 txn_size:15000 min_commit_ts:425816255753093122 "]

[2021/06/22 20:08:28.276 +09:00] [INFO] [2pc.go:397] ["[BIG_TXN]"] [session=19] ["table ID"=285] [size=803765] [keys=15000] [puts=10000] [dels=5000] [locks=0] [checks=0] [txnStartTS=425816256159416321]

[2021/06/22 20:08:28.650 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 1, tp general"] [interval=1s]

[2021/06/22 20:08:28.651 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 2, tp add index"] [interval=1s]

[2021/06/22 20:08:28.659 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:28.660 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:29.043 +09:00] [DEBUG] [txn.go:281] ["[kv] txnLatches disabled, 2pc directly"] [conn=19] []

[2021/06/22 20:08:29.123 +09:00] [DEBUG] [coprocessor.go:867] ["coprocessor encounters"] [lock="primary_lock:\"t\\200\\000\\000\\000\\000\\000\\001\\035_i\\200\\000\\000\\000\\000\\000\\000\\001\\004\\000\\000\\000\\000\\000\\000\\000\\000\\003\\200\\000\\000\\000\\000\\000\\000\\256\\003\\200\\000\\000\\000\\000\\000\\000\\001\\003\\200\\000\\000\\000\\000\\000\\033\\332\" lock_version:425816256159416321 key:\"t\\200\\000\\000\\000\\000\\000\\001\\035_r\\200\\000\\000\\000\\000\\000\\023\\211\" lock_ttl:5465 txn_size:15000 min_commit_ts:425816256159416322 "]

[2021/06/22 20:08:29.621 +09:00] [INFO] [2pc.go:397] ["[BIG_TXN]"] [session=19] ["table ID"=285] [size=803733] [keys=15000] [puts=10000] [dels=5000] [locks=0] [checks=0] [txnStartTS=425816256526417921]

[2021/06/22 20:08:29.651 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 2, tp add index"] [interval=1s]

[2021/06/22 20:08:29.651 +09:00] [DEBUG] [ddl_worker.go:159] ["[ddl] wait to check DDL status again"] [worker="worker 1, tp general"] [interval=1s]

[2021/06/22 20:08:29.652 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:29.652 +09:00] [DEBUG] [ddl.go:218] ["[ddl] check whether is the DDL owner"] [isOwner=true] [selfID=b09a4da1-5370-4b6c-9e05-d12c14cf1aa5]

[2021/06/22 20:08:30.186 +09:00] [DEBUG] [txn.go:281] ["[kv] txnLatches disabled, 2pc directly"] [conn=19] []

...

spc_monkey

2021 年7 月 18 日 08:33

10

解决办法,应该很多吧,感觉如果找原因的话,还是建议 tidb 的 general log 打开,自信分析一下 日志比较好

xfworld

2021 年7 月 18 日 08:34

11

我刚看了下你说的环境,单机配置的是 tiup playground模式么?

然后在看语句:

bid 不是主键? 你切换成主键 id 试试

另外,如果单主键模式不能满足你,你可以切换成 多主键

5.X 以后的版本,第二阶段提交是异步的,如果你有同步需求,可以关闭掉这个设置;

spc_monkey

2021 年7 月 19 日 04:08

13

1、异步提交,数据库有参数控制

agoo

2021 年7 月 19 日 04:57

14

请问下,这个控制是上面说的 tidb_enable_async_commit 吗?(设置了还是会出现lock)

spc_monkey:

1、异步提交,数据库有参数控制

agoo

2021 年7 月 19 日 06:00

16

感谢回复。

spc_monkey

2021 年7 月 19 日 06:26

18

spc_monkey

2021 年7 月 19 日 06:39

20

好的,另外,asktug 好像有类似可以提需求的地方(非产品功能/bug 之类的需求),就是咱们个人的需求,可以在里面提一下相关的需求