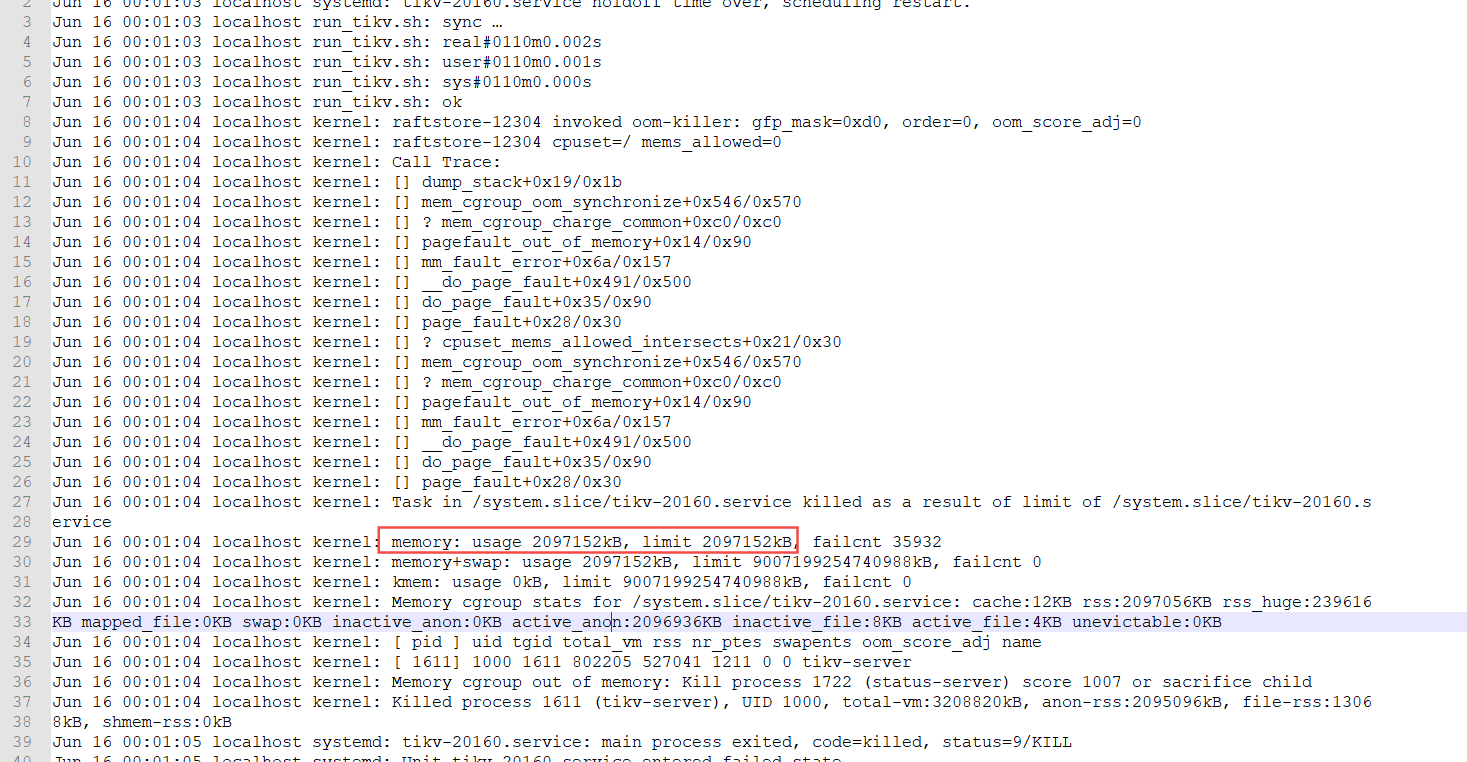

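One of the original three TiKV nodes suddenly went down and would not start again; the error in its log is the one in the title. After a round of scale-out and scale-in operations, I added a new node and took the old one offline. Now the new node has also suddenly gone down and reports the same error as above. What exactly has gone wrong?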

[2021/06/15 16:39:21.933 +08:00] [INFO] [server.rs:270] ["using config"] [config="{"log-level":"info","log-file":"/data/tidb-deploy/tikv-20160/log/tikv.log","log-format":"text","slow-log-file":"","slow-log-threshold":"1s","log-rotation-timespan":"1d","log-rotation-size":"300MiB","panic-when-unexpected-key-or-data":false,"enable-io-snoop":true,"abort-on-panic":false,"readpool":{"unified":{"min-thread-count":1,"max-thread-count":4,"stack-size":"10MiB","max-tasks-per-worker":2000},"storage":{"use-unified-pool":true,"high-concurrency":4,"normal-concurrency":4,"low-concurrency":4,"max-tasks-per-worker-high":2000,"max-tasks-per-worker-normal":2000,"max-tasks-per-worker-low":2000,"stack-size":"10MiB"},"coprocessor":{"use-unified-pool":true,"high-concurrency":3,"normal-concurrency":3,"low-concurrency":3,"max-tasks-per-worker-high":2000,"max-tasks-per-worker-normal":2000,"max-tasks-per-worker-low":2000,"stack-size":"10MiB"}},"server":{"addr":"0.0.0.0:20160","advertise-addr":"192.168.5.49:20160","status-addr":"0.0.0.0:20180","advertise-status-addr":"192.168.5.49:20180","status-thread-pool-size":1,"max-grpc-send-msg-len":10485760,"grpc-compression-type":"none","grpc-concurrency":5,"grpc-concurrent-stream":1024,"grpc-raft-conn-num":1,"grpc-memory-pool-quota":9223372036854775807,"grpc-stream-initial-window-size":"2MiB","grpc-keepalive-time":"10s","grpc-keepalive-timeout":"3s","concurrent-send-snap-limit":32,"concurrent-recv-snap-limit":32,"end-point-recursion-limit":1000,"end-point-stream-channel-size":8,"end-point-batch-row-limit":64,"end-point-stream-batch-row-limit":128,"end-point-enable-batch-if-possible":true,"end-point-request-max-handle-duration":"1m","end-point-max-concurrency":4,"snap-max-write-bytes-per-sec":"100MiB","snap-max-total-size":"0KiB","stats-concurrency":1,"heavy-load-threshold":300,"heavy-load-wait-duration":"1ms","enable-request-batch":true,"background-thread-count":2,"end-point-slow-log-threshold":"1s","forward-max-connections-per-address":4,"labels":{}},"storage":{"data-dir":"/data/tidb-data/tikv-20160","gc-ratio-threshold":1.1,"max-key-size":4096,"scheduler-concurrency":524288,"scheduler-worker-pool-size":4,"scheduler-pending-write-threshold":"100MiB","reserve-space":"0KiB","enable-async-apply-prewrite":false,"enable-ttl":false,"ttl-check-poll-interval":"12h","block-cache":{"shared":true,"capacity":"1331MiB","num-shard-bits":6,"strict-capacity-limit":false,"high-pri-pool-ratio":0.8,"memory-allocator":"nodump"}},"pd":{"endpoints":["192.168.5.43:2379","192.168.5.44:2379"],"retry-interval":"300ms","retry-max-count":9223372036854775807,"retry-log-every":10,"update-interval":"10m","enable-forwarding":false},"metric":{"job":"tikv"},"raftstore":{"prevote":true,"raftdb-path":"/data/tidb-data/tikv-20160/raft","capacity":"0KiB","raft-base-tick-interval":"1s","raft-heartbeat-ticks":2,"raft-election-timeout-ticks":10,"raft-min-election-timeout-ticks":10,"raft-max-election-timeout-ticks":20,"raft-max-size-per-msg":"1MiB","raft-max-inflight-msgs":256,"raft-entry-max-size":"8MiB","raft-log-gc-tick-interval":"10s","raft-log-gc-threshold":50,"raft-log-gc-count-limit":73728,"raft-log-gc-size-limit":"72MiB","raft-log-reserve-max-ticks":6,"raft-engine-purge-interval":"10s","raft-entry-cache-life-time":"30s","raft-reject-transfer-leader-duration":"3s","split-region-check-tick-interval":"10s","region-split-check-diff":"6MiB","region-compact-check-interval":"5m","region-compact-check-step":100,"region-compact-min-tombstones":10000,"region-compact-tombstones-percent":30,"pd-heartbeat-tick-interval":"1m","pd-store-heartbeat-tick-interval":"10s","snap-mgr-gc-tick-interval":"1m","snap-gc-timeout":"4h","lock-cf-compact-interval":"10m","lock-cf-compact-bytes-threshold":"256MiB","notify-capacity":40960,"messages-per-tick":4096,"max-peer-down-duration":"5m","max-leader-missing-duration":"2h","abnormal-leader-missing-duration":"10m","peer-stale-state-check-interval":"5m","leader-transfer-max-log-lag":128,"snap-apply-batch-size":"10MiB","consistency-check-interval":"0s","report-region-flow-interval":"1m","raft-store-max-leader-lease":"9s","right-derive-when-split":true,"allow-remove-leader":false,"merge-max-log-gap":10,"merge-check-tick-interval":"2s","use-delete-range":false,"cleanup-import-sst-interval":"10m","local-read-batch-size":1024,"apply-max-batch-size":256,"apply

[2021/06/15 16:39:21.937 +08:00] [ERROR] [server.rs:866] ["failed to init io snooper"] [err_code=KV:Unknown] [err=""IO snooper is not started due to not compiling with BCC""]

[2021/06/15 16:39:21.937 +08:00] [INFO] [mod.rs:116] ["encryption: none of key dictionary and file dictionary are found."]

[2021/06/15 16:39:21.937 +08:00] [INFO] [mod.rs:477] ["encryption is disabled."]

[2021/06/15 16:39:22.007 +08:00] [INFO] [future.rs:146] ["starting working thread"] [worker=gc-worker]

[2021/06/15 16:39:22.071 +08:00] [INFO] [mod.rs:214] ["Storage started."]

[2021/06/15 16:39:22.080 +08:00] [INFO] [node.rs:176] ["put store to PD"] [store="id: 123045 address: "192.168.5.49:20160" version: "5.0.2" status_address: "192.168.5.49:20180" git_hash: "6e6ea0e02c2caac556f95a821f92b28fc88dba85" start_timestamp: 1623746362 deploy_path: "/data/tidb-deploy/tikv-20160/bin""]

[2021/06/15 16:39:22.083 +08:00] [INFO] [node.rs:243] ["initializing replication mode"] [store_id=123045] [status=Some()]

[2021/06/15 16:39:22.083 +08:00] [INFO] [replication_mode.rs:51] ["associated store labels"] [labels="[]"] [store_id=4]

[2021/06/15 16:39:22.083 +08:00] [INFO] [replication_mode.rs:51] ["associated store labels"] [labels="[key: "host" value: "tikv44"]"] [store_id=5]

[2021/06/15 16:39:22.083 +08:00] [INFO] [replication_mode.rs:51] ["associated store labels"] [labels="[]"] [store_id=123045]

[2021/06/15 16:39:22.083 +08:00] [INFO] [replication_mode.rs:51] ["associated store labels"] [labels="[key: "host" value: "tikv45"]"] [store_id=1]

[2021/06/15 16:39:22.083 +08:00] [INFO] [node.rs:387] ["start raft store thread"] [store_id=123045]

[2021/06/15 16:39:22.084 +08:00] [INFO] [snap.rs:1137] ["Initializing SnapManager, encryption is enabled: false"]

[2021/06/15 16:39:22.185 +08:00] [INFO] [peer.rs:191] ["create peer"] [peer_id=411767325] [region_id=411767323]

[2021/06/15 16:39:22.190 +08:00] [INFO] [raft.rs:2443] ["switched to configuration"] [config="Configuration { voters: Configuration { incoming: Configuration { voters: {411767324, 411767325, 411767326} }, outgoing: Configuration { voters: {} } }, learners: {}, learners_next: {}, auto_leave: false }"] [raft_id=411767325] [region_id=411767323]

[2021/06/15 16:39:22.190 +08:00] [INFO] [raft.rs:1064] ["became follower at term 166"] [term=166] [raft_id=411767325] [region_id=411767323]

[2021/06/15 16:39:22.190 +08:00] [INFO] [raft.rs:375] [newRaft] [peers="Configuration { incoming: Configuration { voters: {411767324, 411767325, 411767326} }, outgoing: Configuration { voters: {} } }"] ["last term"=143] ["last index"=170] [applied=157] [commit=170] [term=166] [raft_id=411767325] [region_id=411767323]

[2021/06/15 16:39:22.190 +08:00] [INFO] [raw_node.rs:285] ["RawNode created with id 411767325."] [id=411767325] [raft_id=411767325] [region_id=411767323]

[2021/06/15 16:39:22.190 +08:00] [INFO] [peer.rs:191] ["create peer"] [peer_id=414801547] [region_id=414801545]

[2021/06/15 16:39:22.191 +08:00] [INFO] [raft.rs:2443] ["switched to configuration"] [config="Configuration { voters: Configuration { incoming: Configuration { voters: {414801548, 414801546, 414801547} }, outgoing: Configuration { voters: {} } }, learners: {}, learners_next: {}, auto_leave: false }"] [raft_id=414801547] [region_id=414801545]

[2021/06/15 16:39:22.191 +08:00] [INFO] [raft.rs:1064] ["became follower at term 94"] [term=94] [raft_id=414801547] [region_id=414801545]