【TiDB environment】Production

【Attachments】

Cluster type: tidb

http://10.0.40.149:2379/dashboard

10.0.40.65:9093 alertmanager 10.0.40.65 9093/9094 linux/x86_64 Up /data/deploy/alertmanager/data /data/deploy/alertmanager

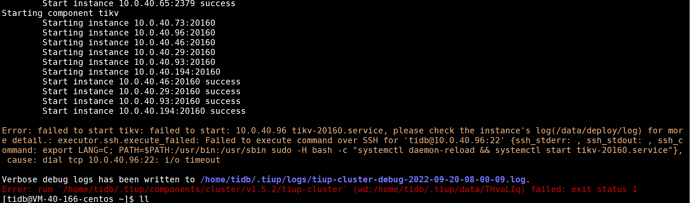

tiup cluster start txidc_saas_tidb

cat /home/tidb/.tiup/storage/cluster/clusters/txidc_saas_tidb/meta.yaml

host: 10.0.40.166

host: 10.0.40.85

host: 10.0.40.25

host: 10.0.40.46

host: 10.0.40.93

host: 10.0.40.29

host: 10.0.40.194

host: 10.0.40.96

host: 10.0.40.73

host: 10.0.40.65

host: 10.0.40.149

host: 10.0.40.20

host: 10.0.40.65

host: 10.0.40.65

host: 10.0.40.65
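The `host:` lines above only make sense next to the component each one belongs to. A small awk filter over meta.yaml can show which machines tiup still counts as part of the cluster. This is a minimal sketch. It assumes tiup's usual layout, where a key such as `tikv_servers:` is followed by `- host: <ip>` items, and the function name `list_hosts_by_role` is hypothetical. Any `tikv_servers` host whose machine has already been physically removed is a stale entry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<section> <host>" for every host entry in a tiup
# meta.yaml, grouped under the *_servers section it appears in.
# Assumption: sections look like "  tikv_servers:" and items like "  - host: 10.0.40.93".
list_hosts_by_role() {
  awk '
    # A line such as "  tikv_servers:" opens a new component section.
    /^[[:space:]]*[a-z_]+_servers:[[:space:]]*$/ {
      role = $1
      sub(/:$/, "", role)   # drop the trailing colon
      next
    }
    # A line such as "  - host: 10.0.40.93" belongs to the current section.
    /^[[:space:]]*-?[[:space:]]*host:/ && role != "" {
      print role, $NF
    }
  ' "$1"
}
```

For example: `list_hosts_by_role /home/tidb/.tiup/storage/cluster/clusters/txidc_saas_tidb/meta.yaml | grep '^tikv_servers'`.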

北京大爷

September 20, 2022, 00:39


北京大爷

September 20, 2022, 00:42


After the TiKV nodes were taken offline, did you check whether their data had finished migrating?

Yes, I checked. I just never removed those servers from the cluster list, and then the machines were physically decommissioned.
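One way to check the migration question from the cluster's own point of view is to filter the Status column of `tiup cluster display` for TiKV rows. A TiKV instance whose data has fully moved away is normally reported as `Tombstone`, while one that is still migrating shows an offline state (`Offline` or `Pending Offline`, depending on the tiup version). The sketch below assumes the column order seen in the alertmanager row of this thread (ID, Role, Host, Ports, OS/Arch, Status, Data Dir, Deploy Dir); `tikv_status` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `tiup cluster display` output on stdin and print
# "<ID> <Status>" for every tikv row.
# Assumption: columns are ID Role Host Ports OS/Arch Status DataDir DeployDir.
# Status may contain a space (e.g. "Pending Offline"), so it is rebuilt from
# field 6 up to the field just before the two directory columns.
tikv_status() {
  awk '
    $2 == "tikv" {
      status = $6
      for (i = 7; i <= NF - 2; i++) status = status " " $i
      print $1, status
    }
  '
}
```

For example: `tiup cluster display txidc_saas_tidb | tikv_status`. Filtering on the Role column rather than on IP addresses means the check still works when several components share one host, as 10.0.40.65 does here.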