[Environment] Production (3 TiDB / 3 PD / 3 TiKV)

[TiDB version] 5.3.0

[Problem] I don't fully understand how some of TiKV's memory-limit parameters work

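- The TiKV instances are deployed on dedicated hosts. The memory configuration is as follows (the host has 128G of physical memory; free -g shows 125G):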

tikv:

raftdb.defaultcf.block-cache-size: 4GiB

readpool.unified.max-thread-count: 38

rocksdb.defaultcf.block-cache-size: 50GiB

rocksdb.lockcf.block-cache-size: 4GiB

rocksdb.writecf.block-cache-size: 25GiB

server.grpc-concurrency: 14

server.grpc-raft-conn-num: 5

split.qps-threshold: 2000

storage.block-cache.capacity: 90GiB

mysql> show config where name like 'storage.block-cache.capacity';

+------+---------------------+------------------------------+-------+

| Type | Instance | Name | Value |

+------+---------------------+------------------------------+-------+

| tikv | 192.168.3.225:20160 | storage.block-cache.capacity | 75GiB |

| tikv | 192.168.3.224:20160 | storage.block-cache.capacity | 75GiB |

| tikv | 192.168.3.226:20160 | storage.block-cache.capacity | 75GiB |

+------+---------------------+------------------------------+-------+

3 rows in set (0.01 sec)

-

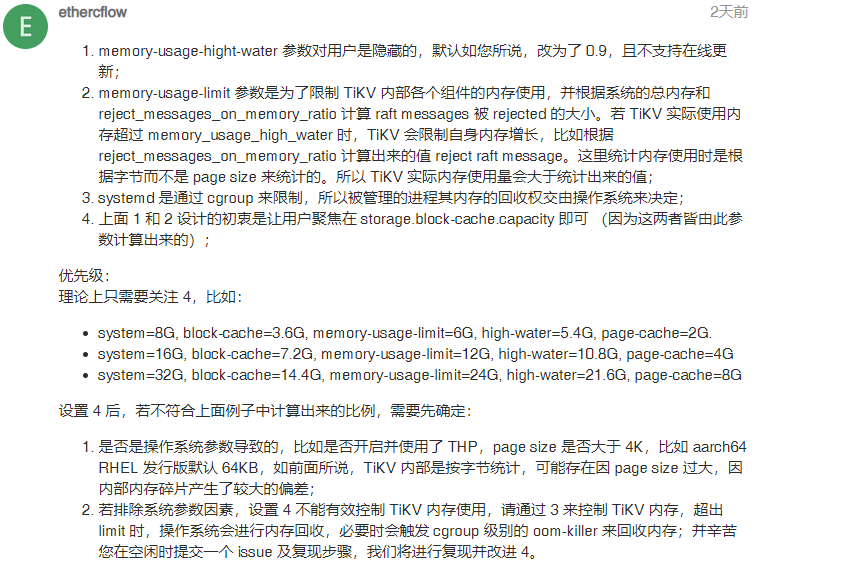

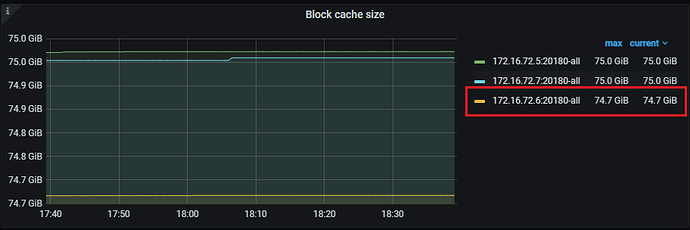

Grafana->TiKV-Detail->RocksDB-KV->Block Cache Size 面板

- THP (Transparent HugePage) has not been disabled:

[tidb@idc1-offline-tikv2 scripts]$ cat /proc/meminfo|grep Huge

AnonHugePages: 4096 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

[tidb@idc1-offline-tikv2 scripts]$ cat /sys/kernel/mm/transparent_hugepage/defrag

[always] madvise never

- The TiKV log before the OOM is as follows:

[2022/09/15 21:02:37.837 +08:00] [WARN] [config.rs:2650] ["memory_usage_limit:ReadableSize(134217728000) > recommanded:ReadableSize(103432923648), maybe page cache isn't enough"]

[2022/09/15 21:02:37.880 +08:00] [WARN] [config.rs:2650] ["memory_usage_limit:ReadableSize(137910564864) > recommanded:ReadableSize(103432923648), maybe page cache isn't enough"]

[2022/09/15 21:02:37.882 +08:00] [INFO] [server.rs:1408] ["beginning system configuration check"]

[2022/09/15 21:02:37.882 +08:00] [INFO] [config.rs:919] ["data dir"] [mount_fs="FsInfo { tp: \"ext4\", opts: \"rw,noatime,nodelalloc,stripe=64,data=ordered\", mnt_dir: \"/tidb-data\", fsname: \"/dev/sdb1\" }"] [data_path=/tidb-data/tikv-20160]

[2022/09/15 21:02:37.883 +08:00] [INFO] [config.rs:919] ["data dir"] [mount_fs="FsInfo { tp: \"ext4\", opts: \"rw,noatime,nodelalloc,stripe=64,data=ordered\", mnt_dir: \"/tidb-data\", fsname: \"/dev/sdb1\" }"] [data_path=/tidb-data/tikv-20160/raft]

[2022/09/15 21:02:37.898 +08:00] [INFO] [server.rs:316] ["using config"] [config="{\"log-level\":\"info\",\"log-file\":\"/tidb-deploy/tikv-20160/log/tikv.log\",\"log-format\":\"text\",\"slow-log-file\":\"\",\"slow-log-threshold\":\"1s\",\"log-rotation-timespan\":\"1d\",\"log-rotation-size\":\"300MiB\",\"panic-when-unexpected-key-or-data\":false,\"enable-io-snoop\":true,\"abort-on-panic\":false,\"memory-usage-limit\":\"125GiB\",\"memory-usage-high-water\":0.9,....
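The byte counts in the two warnings above are easier to compare in GiB. The following is a minimal sketch that only converts the numbers copied from the log; the helper name `to_gib` is made up for illustration. Reading the second limit as the total memory TiKV detected is an assumption, not something the log states.

```python
GIB = 1024 ** 3  # bytes per GiB


def to_gib(num_bytes: int) -> float:
    """Convert a byte count to GiB."""
    return num_bytes / GIB


# Values copied verbatim from the two WARN lines in the log.
log_values = {
    "memory_usage_limit (first warning)": 134217728000,
    "memory_usage_limit (second warning)": 137910564864,
    "recommanded (spelling as in the log)": 103432923648,
}

for name, value in log_values.items():
    print(f"{name}: {to_gib(value):.2f} GiB")

# Ratio between the recommended value and the second limit.
ratio = log_values["recommanded (spelling as in the log)"] / log_values[
    "memory_usage_limit (second warning)"
]
print(f"recommended / second limit = {ratio:.2f}")
```

The first limit comes out at exactly 125 GiB, which matches the "memory-usage-limit":"125GiB" entry in the "using config" line. The recommended value is exactly 75% of the second limit, which would fit a 25% reservation for page cache if that second value is indeed the detected total memory.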

To cache more data in memory, a colleague raised Block Cache Size to 90G. I personally think this value is too high and is the main cause of the OOM.

Following the explanation given in https://asktug.com/t/topic/933132/2, when Block Cache Size is set to 90G:

memory-usage-limit = Block Cache * 10/6 = 150G; because physical memory is only 128G, memory-usage-limit = 128G. memory-usage-high-water is 0.9, so memory-usage-limit * 0.9 = 128G * 0.9 ≈ 115G. Page cache = physical memory * 0.25 = 128G * 0.25 = 32G.
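The arithmetic above can be sketched as a small function. This only reproduces the rules as described in the linked post (the 10/6 factor, the cap at physical memory, the 0.9 high-water ratio, the 0.25 page-cache ratio); it is not taken from TiKV's source, and the function and key names are hypothetical. The `remaining_gib` figure is a deliberately simplistic illustration of what is left once block cache and page cache are subtracted from physical memory.

```python
def estimate_limits(block_cache_gib, physical_gib,
                    high_water=0.9, page_cache_ratio=0.25):
    """Reproduce the post's arithmetic; all rules are assumptions from the linked thread."""
    derived = block_cache_gib * 10 / 6          # limit derived from block cache
    limit = min(derived, physical_gib)          # capped at physical memory
    page_cache = physical_gib * page_cache_ratio
    return {
        "derived_limit_gib": derived,
        "memory_usage_limit_gib": limit,
        "high_water_gib": limit * high_water,
        "page_cache_gib": page_cache,
        # Simplistic: ignores every other consumer of memory inside TiKV.
        "remaining_gib": physical_gib - block_cache_gib - page_cache,
    }


for cache in (90, 75):
    result = estimate_limits(block_cache_gib=cache, physical_gib=128)
    print(cache, {k: round(v, 1) for k, v in result.items()})
```

Under these assumptions, a 90G block cache plus a 32G page-cache reservation leaves about 6G of the 128G for everything else, while 75G leaves about 21G.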

Questions

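- What role does memory-usage-high-water play here? It does not seem to have had any effect. Can the page cache size be adjusted? Does it default to 1/4 of physical memory?

- In the future, could TiKV work like Oracle's SGA, where you only set one total memory limit parameter, such as tikv_memory_target, and TiKV then adjusts the memory limits of its internal components automatically according to ratios or runtime state, while still allowing the limit of each internal component to be set explicitly?

- After one TiKV instance hit OOM and restarted, its memory usage dropped. The memory usage of the other two instances dropped as well. What is the reason for this?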

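- storage.block-cache.capacity was set to 75G with the set command, but the TiKV configuration file sets it to 90G. Grafana monitoring shows storage.block-cache.capacity as 75G. Is this because a value set with set takes precedence over the setting in the TiKV cluster configuration file? Is the value set with set not persisted to the cluster configuration file?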