版本:5.0.4

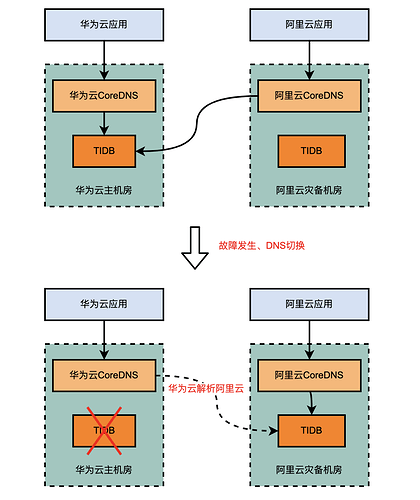

架构:双云灾备

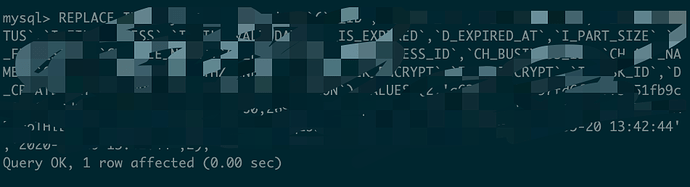

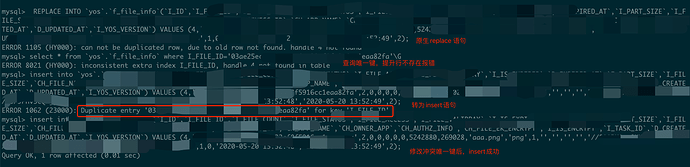

问题:使用 sync-diff-inspector 工具校验数据后,执行replace语句失败:

【概述】 使用 sync-diff-inspector 校验两个tidb集群数据是否一致

结果数据不一致,执行replace语句后写入失败。

唯一键:

PRIMARY KEY (`I_ID`) /*T![clustered_index] CLUSTERED */,

UNIQUE KEY `FSI` (`I_FILE_ID`),

ERROR 1105 (HY000) at line 40950: can not be duplicated row, due to old row not found. handle 2396698 not found

ERROR 1105 (HY000) at line 40951: can not be duplicated row, due to old row not found. handle 2396699 not found

ERROR 1105 (HY000) at line 40952: can not be duplicated row, due to old row not found. handle 2396700 not found

ERROR 1105 (HY000) at line 40953: can not be duplicated row, due to old row not found. handle 2396701 not found

ERROR 1105 (HY000) at line 40956: can not be duplicated row, due to old row not found. handle 2396704 not found

ERROR 1105 (HY000) at line 40957: can not be duplicated row, due to old row not found. handle 2396705 not found

ERROR 1105 (HY000) at line 40964: can not be duplicated row, due to old row not found. handle 2396712 not found

- 成功replace:

- 失败replace

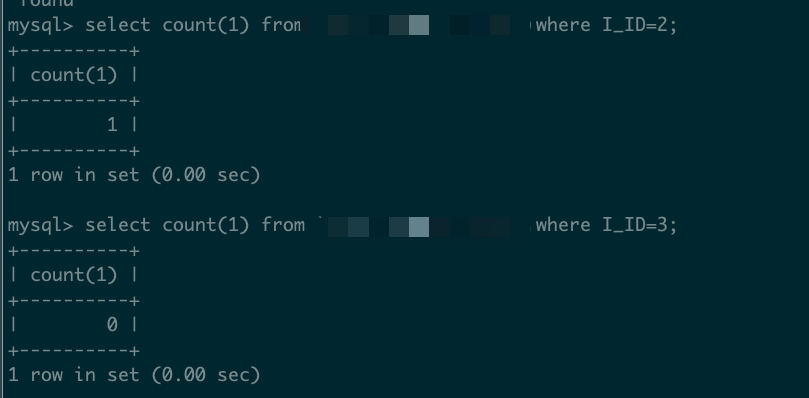

成功ID=2,失败ID=3

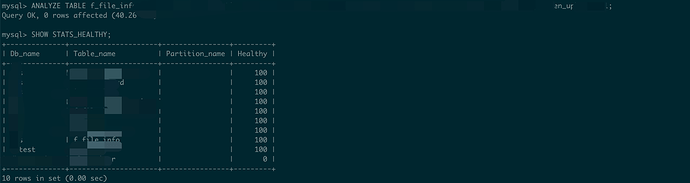

- 重新收集统计信息后,失败:

执行admin check table失败

mysql> admin check table f_file_info;

ERROR 8003 (HY000): f_file_info err:[admin:8223]index:<nil> != record:&admin.RecordData{Handle:54323, Values:[]types.Datum{types.Datum{k:0x5, decimal:0x0, length:0x0, i:0, collation:"utf8mb4_bin", b:[]uint8{0x65, 0x37, 0x34, 0x39, 0x35, 0x62, 0x33, 0x30, 0x34, 0x32, 0x66, 0x64, 0x39, 0x65, 0x38, 0x35, 0x65, 0x32, 0x61, 0x65, 0x37, 0x63, 0x36, 0x66, 0x66, 0x65, 0x31, 0x63, 0x31, 0x32, 0x34, 0x62, 0x66, 0x34, 0x65, 0x61, 0x36, 0x34, 0x35, 0x39}, x:interface {}(nil)}}}

详细步骤如下:

- 检查表region在哪个TiKV节点

mysql> SPLIT TABLE sbtest1 BETWEEN (0) AND (100000) REGIONS 8;

+--------------------+----------------------+

| TOTAL_SPLIT_REGION | SCATTER_FINISH_RATIO |

+--------------------+----------------------+

| 8 | 1 |

+--------------------+----------------------+

1 row in set (3.54 sec)

mysql> show table sbtest1 regions;

+-----------+--------------+--------------+-----------+-----------------+------------------+------------+---------------+------------+----------------------+------------------+

| REGION_ID | START_KEY | END_KEY | LEADER_ID | LEADER_STORE_ID | PEERS | SCATTERING | WRITTEN_BYTES | READ_BYTES | APPROXIMATE_SIZE(MB) | APPROXIMATE_KEYS |

+-----------+--------------+--------------+-----------+-----------------+------------------+------------+---------------+------------+----------------------+------------------+

| 1596 | t_85_r | t_85_r_12500 | 1624 | 3 | 1626, 1624, 1625 | 0 | 1740 | 0 | 1 | 0 |

| 1600 | t_85_r_12500 | t_85_r_25000 | 1602 | 14 | 1601, 1602, 1603 | 0 | 1503 | 0 | 1 | 0 |

| 1604 | t_85_r_25000 | t_85_r_37500 | 1627 | 13 | 1628, 1629, 1627 | 0 | 4178 | 0 | 1 | 0 |

| 1608 | t_85_r_37500 | t_85_r_50000 | 1611 | 2 | 1609, 1610, 1611 | 0 | 1659 | 0 | 1 | 0 |

| 1612 | t_85_r_50000 | t_85_r_62500 | 1632 | 1 | 1632, 1630, 1631 | 0 | 1725 | 0 | 1 | 0 |

| 1616 | t_85_r_62500 | t_85_r_75000 | 1618 | 14 | 1617, 1618, 1619 | 0 | 27 | 0 | 1 | 0 |

| 1620 | t_85_r_75000 | t_85_r_87500 | 1635 | 1 | 1635, 1633, 1634 | 0 | 1725 | 0 | 1 | 0 |

| 5 | t_85_r_87500 | | 792 | 4 | 792, 1296, 1326 | 0 | 4258 | 2470034 | 1 | 0 |

| 1592 | t_85_ | t_85_r | 1593 | 4 | 1593, 1594, 1595 | 0 | 1491 | 0 | 3 | 11363 |

+-----------+--------------+--------------+-----------+-----------------+------------------+------------+---------------+------------+----------------------+------------------+

9 rows in set (0.01 sec)

- pd节点执行

tiup ctl:v5.0.4 pd --pd=host1:2379 -i

» store --jq=".stores[].store | { id, address, state_name}"

{"id":8,"address":"host1:20161","state_name":"Up"}

{"id":9,"address":"host2:20161","state_name":"Up"}

{"id":7,"address":"host1:20160","state_name":"Up"}

{"id":2,"address":"host3:20160","state_name":"Up"}

{"id":10,"address":"host2:20160","state_name":"Up"}

{"id":1,"address":"host3:20161","state_name":"Up"}

» region 1512

"store_id": 10,

"store_id": 1,

"store_id": 8,

» region --jq=".regions[] | {id: .id, peer_stores: [.peers[].store_id] | select(length as $total | map(if .==(8,1,10) then . else empty end) | length>=$total-length) }"

{"id":1135,"peer_stores":[9,8,1]}

{"id":1270,"peer_stores":[8,10,1]}

{"id":805,"peer_stores":[10,7,1]}

{"id":1127,"peer_stores":[8,10,2]}

{"id":890,"peer_stores":[10,7,1]}

{"id":175,"peer_stores":[10,7,1]}

{"id":707,"peer_stores":[8,10,1]}

{"id":1536,"peer_stores":[1,8,10]}

{"id":712,"peer_stores":[8,10,1]}

{"id":1023,"peer_stores":[7,1,10]}

{"id":1223,"peer_stores":[9,8,1]}

{"id":1512,"peer_stores":[1,8,10]}

{"id":941,"peer_stores":[10,1,8]}

{"id":874,"peer_stores":[8,10,1]}

{"id":1520,"peer_stores":[1,8,10]}

{"id":1528,"peer_stores":[1,8,10]}

{"id":1008,"peer_stores":[10,8,2]}

{"id":917,"peer_stores":[2,8,10]}

{"id":669,"peer_stores":[2,10,8]}

{"id":133,"peer_stores":[1,10,8]}

{"id":184,"peer_stores":[8,1,10]}

{"id":1131,"peer_stores":[1,10,8]}

{"id":129,"peer_stores":[8,1,9]}

{"id":988,"peer_stores":[2,10,8]}

{"id":1087,"peer_stores":[10,7,1]}

{"id":977,"peer_stores":[2,10,8]}

{"id":971,"peer_stores":[1,9,8]}

{"id":1278,"peer_stores":[7,1,10]}

{"id":796,"peer_stores":[2,8,10]}

{"id":1141,"peer_stores":[9,8,1]}

{"id":1097,"peer_stores":[10,7,1]}

{"id":900,"peer_stores":[10,1,8]}

{"id":1019,"peer_stores":[10,1,8]}

{"id":1169,"peer_stores":[8,10,2]}

{"id":1145,"peer_stores":[10,1,7]}

{"id":859,"peer_stores":[1,8,10]}

{"id":896,"peer_stores":[8,10,2]}

{"id":866,"peer_stores":[10,7,1]}

{"id":153,"peer_stores":[8,1,9]}

- 模拟故障

# 清理掉tikv部署文件

mv /data/tidb-deploy/tikv-20160 /data/tidb-deploy/tikv-20160_bak

# kill 任务

ps -ef|grep 20160

kill 12374

# mv 掉数据目录;如果磁盘空间不足直接rm -rf 掉文件目录。

mv /data/tidb-data/tikv_data_20160 /data/tidb-data/tikv_data_20160_bak

tiup ctl:v5.0.4 pd --pd=host1:2379 -i

store --jq=".stores[].store | { id, address, state_name}"

config show grep schedule-limit

config set leader-schedule-limit 0

config set region-schedule-limit 0

config set replica-schedule-limit 0

config set merge-schedule-limit 0

config set hot-region-schedule-limit 0

tiup cluster list tiup cluster display tidb_expertise_5.0

tiup cluster stop tidb_xxx_5.0 -R=tidb -y

- 停掉TiKV节点

tiup cluster stop tidb_xxx_5.0 -R=tikv -y

在所有已停掉的正常TiKV执行unsafe-recover 恢复TiKV,此时可能数据丢失

# bj3-all-tidb-xxx-01:

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db unsafe-recover remove-fail-stores -s 8,1,10 --all-regions

# bj3-all-tidb-xxx-02:

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db unsafe-recover remove-fail-stores -s 8,1,10 --all-regions

# bj3-all-tidb-xxx-03

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20161/db unsafe-recover remove-fail-stores -s 8,1,10 --all-regions

- 缩容故障TiKV,重启TiKV,PD

tiup cluster scale-in tidb_expertise_5.0 -N=host1:20160,host2:20161,host3:20160 -y

tiup cluster stop tidb_expertise_5.0 -R=pd

tiup cluster start tidb_expertise_5.0 -R=pd,tikv

tiup ctl:v5.0.4 pd --pd=host1:2379 -i

config set leader-schedule-limit 4

config set region-schedule-limit 2048

config set replica-schedule-limit 64

config set merge-schedule-limit 8

config set hot-region-schedule-limit 4

[tidb@bj3-all-tidb-expertise-01 ~]$ tiup cluster display tidb_expertise_5.0

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.10.2/tiup-cluster display tidb_expertise_5.0

Cluster type: tidb

Cluster name: tidb_expertise_5.0

Cluster version: v5.0.4

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://bj3-all-tidb-xxx-01:2379/dashboard

Grafana URL: http://bj3-all-tidb-xxx-01:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

bj3-all-tidb-xxx-02:8300 cdc bj3-all-tidb-xxx-02 8300 linux/x86_64 Up /data/tidb-data/cdc-8300 /data/tidb-deploy/cdc-8300

bj3-all-tidb-xxx-01:3000 grafana bj3-all-tidb-xxx-01 3000 linux/x86_64 Up - /data/tidb-deploy/grafana-3000

bj3-all-tidb-xxx-01:2379 pd bj3-all-tidb-xxx-01 2379/2380 linux/x86_64 Up|L|UI /data/tidb-data/pd_data /data/tidb-deploy/pd-2379

bj3-all-tidb-xxx-01:9090 prometheus bj3-all-tidb-xxx-01 9090 linux/x86_64 Up /data/tidb-data/prometheus-9090 /data/tidb-deploy/prometheus-9090

bj3-all-tidb-xxx-02:4000 tidb bj3-all-tidb-xxx-02 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

bj3-all-tidb-xxx-03:4000 tidb bj3-all-tidb-xxx-03 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

bj3-all-tidb-xxx-02:20161 tikv bj3-all-tidb-xxx-02 20161/20181 linux/x86_64 Disconnected /data/tidb-data/tikv_data_20161 /data/tidb-deploy/tikv-20161

bj3-all-tidb-xxx-01:20161 tikv bj3-all-tidb-xxx-01 20161/20181 linux/x86_64 Disconnected /data/tidb-data/tikv_data_20161 /data/tidb-deploy/tikv-20161

bj3-all-tidb-xxx-03:20160 tikv bj3-all-tidb-xxx-03 20160/20180 linux/x86_64 Disconnected /data/tidb-data/tikv_data_20160 /data/tidb-deploy/tikv-20160

Total nodes: 9

检查故障tikv实例上的region

- 多数派副本在故障的tikv节点:

tiup ctl:v5.0.4 pd --pd=bj3-all-tidb-xxx-01:2379 -i

» region --jq=".regions[] | {id: .id, peer_stores: [.peers[].store_id] | select(length as $total | map(if .==(8,1,10) then . else empty end) | length>=$total-length) }"

{"id":1512,"peer_stores":[1,8,10]}

{"id":1528,"peer_stores":[1,8,10]}

{"id":1131,"peer_stores":[1,10,8]}

{"id":133,"peer_stores":[1,10,8]}

{"id":707,"peer_stores":[8,10,1]}

{"id":1536,"peer_stores":[1,8,10]}

{"id":1270,"peer_stores":[8,10,1]}

{"id":874,"peer_stores":[8,10,1]}

{"id":184,"peer_stores":[8,1,10]}

{"id":712,"peer_stores":[8,10,1]}

{"id":1019,"peer_stores":[10,1,8]}

{"id":859,"peer_stores":[1,8,10]}

{"id":941,"peer_stores":[10,1,8]}

{"id":1520,"peer_stores":[1,8,10]}

{"id":900,"peer_stores":[10,1,8]}

- 没有leader的 region: 正常情况此步骤没有结果。

» region --jq '.regions[]|select(has("leader")|not)|{id: .id,peer_stores: [.peers[].store_id]}'

- 检查region是哪些表

结果:所有表都是业务数据,非系统表。

curl http://bj3-all-tidb-xxx-02:10080/regions/1590 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1211 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1630 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/731 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1316 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1598 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/905 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1574 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/706 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/715 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/1582 |grep -E "table_name|db_name"

curl http://bj3-all-tidb-xxx-02:10080/regions/979 |grep -E "table_name|db_name"

- 重建故障region:

此操作需要关闭其中一个健康的TiKV,执行以下命令:

停掉 bj3-all-tidb-xxx-03:20161 Tikv节点

tiup cluster stop tidb_expertise_5.0 -N=bj3-all-tidb-xxx-03:20160

# 在已停掉的bj3-all-tidb-xxx-03:20161 Tikv节点 执行recreate-region

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1590

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1211

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1630

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 731

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1316

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1598

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 905

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1574

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 706

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 715

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 1582

tiup ctl:v5.0.4 tikv --db /data/tidb-data/tikv_data_20160/db recreate-region -p 'bj3-all-tidb-xxx-01:2379' -r 979

清理已删除tikv,并restart集群

tiup cluster scale-in tidb_expertise_5.0 -N=bj3-all-tidb-xxx-01:20160,bj3-all-tidb-xxx-02:20161,bj3-all-tidb-xxx-03:20160 -y --force

tiup cluster restart tidb_expertise_5.0 -y

Starting component blackbox_exporter

Starting instance host1

Starting instance bj3-all-tidb-xxx-01

Starting instance bj3-all-tidb-xxx-02

Start bj3-all-tidb-xxx-02 success

Start bj3-all-tidb-xxx-03 success

Start bj3-all-tidb-xxx-01 success

Restarted cluster `tidb_expertise_5.0` successfully

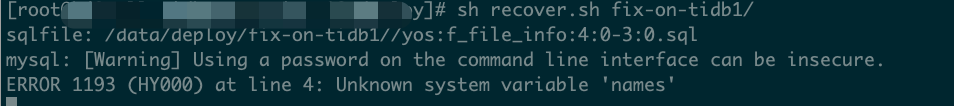

清理故障tikv后使用sync-diff-inspector 数据校验,并补数据

补数据发现:

set session sql_mode='';

mysql> REPLACE INTO `sbtest`.`sbtest1`(`I_ID`,xxxxxxx,`I_TASK_ID`,`D_CREATED_AT`,`D_UPDATED_AT`,`I_YOS_VERSION`) VALUES (3,2);

ERROR 1105 (HY000): can not be duplicated row, due to old row not found. handle 3 not found

tidb.log

[2022/09/08 16:26:12.608 +08:00] [ERROR] [replace.go:68] ["get old row failed when replace"] [handle=3] [toBeInsertedRow="(3, , 2, 0,

0, 0, 0, 0, , , , , , 1, 0, 2020-05-20 13:46:57, , 2)"]

[2022/09/08 16:26:12.608 +08:00] [INFO] [tidb.go:242] ["rollbackTxn for ddl/autocommit failed"]

[2022/09/08 16:26:12.608 +08:00] [WARN] [session.go:1458] ["run statement failed"] [conn=4825] [schemaVersion=48] [error="can not be duplicated row, due to old row not found

. handle 3 not found"] [session="{\

\"currDBName\": \"\",\

\"id\": 4825,\

\"status\": 2,\

\"strictMode\": true,\

\"user\": {\

\"Username\": \"root\",\

\"Ho

stname\": \"127.0.0.1\",\

\"CurrentUser\": false,\

\"AuthUsername\": \"root\",\

\"AuthHostname\": \"%\"\

}\

}"]

, user:root"] [command=Query] [status="inTxn:0, autocommit:1"] [sql="REPLACE INTO `sbtest`.`sbtest1`(`I_ID`,xxxx,xxxx,xxx) VALUES (3,xxx,xxx)"] [txn_mode=PESSIMISTIC] [err="can not be duplicated row, due to old row not found. handle 3 not found\

github.com/pingcap/errors.NotFoundf\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/juju_adaptor.go:117\

github.com/pingcap/tidb/executor.(*ReplaceExec).removeRow\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/replace.go:72\

github.com/pingcap/tidb/executor.(*ReplaceExec).removeIndexRow\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/replace.go:185\

github.com/pingcap/tidb/executor.(*ReplaceExec).replaceRow\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/replace.go:145\

github.com/pingcap/tidb/executor.(*ReplaceExec).exec\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/replace.go:238\

github.com/pingcap/tidb/executor.insertRows\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/insert_common.go:277\

github.com/pingcap/tidb/executor.(*ReplaceExec).Next\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/replace.go:253\

github.com/pingcap/tidb/executor.Next\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/executor.go:278\

github.com/pingcap/tidb/executor.(*ExecStmt).handleNoDelayExecutor\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/adapter.go:537\

github.com/pingcap/tidb/executor.(*ExecStmt).handleNoDelay\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/adapter.go:418\

github.com/pingcap/tidb/executor.(*ExecStmt).Exec\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/executor/adapter.go:368\

github.com/pingcap/tidb/session.runStmt\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/session/session.go:1560\

github.com/pingcap/tidb/session.(*session).ExecuteStmt\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/session/session.go:1455\

github.com/pingcap/tidb/server.(*TiDBContext).ExecuteStmt\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/driver_tidb.go:218\

github.com/pingcap/tidb/server.(*clientConn).handleStmt\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/conn.go:1694\

github.com/pingcap/tidb/server.(*clientConn).handleQuery\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/conn.go:1567\

github.com/pingcap/tidb/server.(*clientConn).dispatch\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/conn.go:1101\

github.com/pingcap/tidb/server.(*clientConn).Run\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/conn.go:859\

github.com/pingcap/tidb/server.(*Server).onConn\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/server/server.go:478\

runtime.goexit\

\t/usr/local/go/src/runtime/asm_amd64.s:1357"]