Details are as follows:

```
scala> spark.sqlContext.setConf("spark.sql.tidb.addr", "192.168.1.160")
scala> spark.sqlContext.setConf("spark.sql.tidb.port", "4000")
scala> spark.sqlContext.setConf("spark.sql.tidb.user", "test_spark")
scala> spark.sqlContext.setConf("spark.sql.tidb.password", "11111")
scala> spark.sql("select * from test1.test1").show
```

```
java.lang.IllegalArgumentException: Failed to get pd addresses from TiDB, please make sure user has PROCESS privilege on INFORMATION_SCHEMA.CLUSTER_INFO
	at com.pingcap.tispark.auth.TiAuthorization.getPDAddress(TiAuthorization.scala:118)
	at org.apache.spark.sql.TiContext.<init>(TiContext.scala:47)
	at org.apache.spark.sql.TiExtensions.getOrCreateTiContext(TiExtensions.scala:49)
	at org.apache.spark.sql.TiExtensions.$anonfun$apply$2(TiExtensions.scala:36)
	at org.apache.spark.sql.extensions.TiAuthorizationRule.<init>(TiAuthorizationRule.scala:34)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
	at com.pingcap.tispark.utils.ReflectionUtil$.newTiAuthRule(ReflectionUtil.scala:140)
	at org.apache.spark.sql.extensions.TiAuthRuleFactory.apply(rules.scala:36)
	at org.apache.spark.sql.extensions.TiAuthRuleFactory.apply(rules.scala:25)
	at org.apache.spark.sql.SparkSessionExtensions.$anonfun$buildResolutionRules$1(SparkSessionExtensions.scala:141)
	at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)
	at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)
	at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)
	at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)
	at scala.collection.TraversableLike.map(TraversableLike.scala:238)
	at scala.collection.TraversableLike.map$(TraversableLike.scala:231)
	at scala.collection.AbstractTraversable.map(Traversable.scala:108)
	at org.apache.spark.sql.SparkSessionExtensions.buildResolutionRules(SparkSessionExtensions.scala:141)
	at org.apache.spark.sql.internal.BaseSessionStateBuilder.customResolutionRules(BaseSessionStateBuilder.scala:200)
	at org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1.<init>(HiveSessionStateBuilder.scala:82)
	at org.apache.spark.sql.hive.HiveSessionStateBuilder.analyzer(HiveSessionStateBuilder.scala:73)
	at org.apache.spark.sql.internal.BaseSessionStateBuilder.$anonfun$build$2(BaseSessionStateBuilder.scala:342)
	at org.apache.spark.sql.internal.SessionState.analyzer$lzycompute(SessionState.scala:84)
	at org.apache.spark.sql.internal.SessionState.analyzer(SessionState.scala:84)
	at org.apache.spark.sql.execution.QueryExecution.$anonfun$analyzed$1(QueryExecution.scala:73)
	at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)
	at org.apache.spark.sql.execution.QueryExecution.$anonfun$executePhase$1(QueryExecution.scala:143)
	at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)
	at org.apache.spark.sql.execution.QueryExecution.executePhase(QueryExecution.scala:143)
	at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:73)
	at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:71)
	at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:63)
	at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:98)
	at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)
	at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:96)
	at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:618)
	at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)
	at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:613)
	... 47 elided
Caused by: java.lang.IllegalArgumentException: queryForObject() result size: expected 1, acctually 3
	at com.pingcap.tikv.jdbc.JDBCClient.queryForObject(JDBCClient.java:111)
	at com.pingcap.tikv.jdbc.JDBCClient.getPDAddress(JDBCClient.java:64)
	at com.pingcap.tispark.auth.TiAuthorization.getPDAddress(TiAuthorization.scala:113)
	... 87 more
```

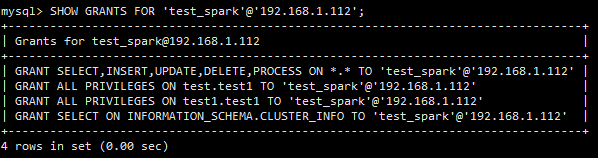

Checking the database user's grants shows that it does have the PROCESS privilege:

```
mysql> show grants for 'test_spark'@'192.168.1.112';
+----------------------------------------------------------------------------------+
| Grants for test_spark@192.168.1.112                                              |
+----------------------------------------------------------------------------------+
| GRANT SELECT,INSERT,UPDATE,DELETE,PROCESS ON *.* TO 'test_spark'@'192.168.1.112' |
| GRANT ALL PRIVILEGES ON test.test1 TO 'test_spark'@'192.168.1.112'               |
| GRANT ALL PRIVILEGES ON test1.test1 TO 'test_spark'@'192.168.1.112'              |
+----------------------------------------------------------------------------------+
3 rows in set (0.00 sec)
```

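There is also the following error. Our TiDB cluster uses a mixed (co-located) deployment with 3 PD nodes, and when the query runs, the error says 1 result was expected but 3 were returned. How can I limit the number of rows returned?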

```
Caused by: java.lang.IllegalArgumentException: queryForObject() result size: expected 1, acctually 3
	at com.pingcap.tikv.jdbc.JDBCClient.queryForObject(JDBCClient.java:111)
	at com.pingcap.tikv.jdbc.JDBCClient.getPDAddress(JDBCClient.java:64)
	at com.pingcap.tispark.auth.TiAuthorization.getPDAddress(TiAuthorization.scala:113)
	... 87 more
```
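The "expected 1, actually 3" failure can be illustrated with a small, self-contained Scala sketch. It assumes (the trace does not show the actual SQL) that the PD lookup behind `JDBCClient.getPDAddress` returns one row per PD instance, so a cluster with 3 PD nodes yields 3 rows, and that a helper which, like `queryForObject`, insists on exactly one row then rejects the result. `PdLookupSketch`, `queryForObject`, and `pdAddresses` below are hypothetical stand-ins, not TiSpark's code, and the PD hostnames are made up.

```scala
// Hypothetical sketch of the failure mode only; this is NOT TiSpark's JDBCClient code.
object PdLookupSketch {

  // Single-row helper in the spirit of queryForObject:
  // throws unless the result contains exactly one row.
  def queryForObject[T](rows: Seq[T]): T = {
    if (rows.size != 1)
      throw new IllegalArgumentException(
        s"queryForObject() result size: expected 1, actually ${rows.size}")
    rows.head
  }

  // List-based alternative (hypothetical): accept every PD row and
  // join them into one comma-separated address string.
  def pdAddresses(rows: Seq[String]): String = {
    require(rows.nonEmpty, "no PD instances returned")
    rows.mkString(",")
  }

  def main(args: Array[String]): Unit = {
    // Made-up PD instances standing in for the 3 rows a 3-PD cluster would return.
    val pdRows = Seq("pd-1:2379", "pd-2:2379", "pd-3:2379")

    try {
      queryForObject(pdRows)
      println("single row returned")
    } catch {
      case e: IllegalArgumentException => println(e.getMessage)
    }

    println(pdAddresses(pdRows))
  }
}
```

The list-based variant only shows the direction a fix could take (using every PD address rather than requiring one); how TiSpark itself handles multiple PD rows depends on the TiSpark version in use.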