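【TiDB Environment】Test environment

【TiDB Version】v6.1.0

【Problem】After creating the cluster, no Pods are created. How should I troubleshoot this?

【Reproduction Steps】I ran kubectl describe tidbclusters -n tidb tidb-cluster as described in the official docs, but the output didn't show anything useful.

【Symptoms and Impact】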

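Is this for production or for testing?

A test deployment.

We're currently in the evaluation stage.

Which page of the official docs exactly? Do you have a link?

For a test/evaluation environment, I'll assume you're using local disks. Following the local-disk part of https://docs.pingcap.com/zh/tidb-in-kubernetes/stable/configure-storage-class, first confirm that the cluster has available PVs.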
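A minimal sketch of that check, assuming you save the output of `kubectl get pv -o json` and want the PVs that are still `Available` in a given StorageClass. The StorageClass name `local-storage` and the PV names in the sample are hypothetical. Note that the YAML posted below uses `storageClassName: longhorn`; with a dynamically provisioning class like Longhorn, PVs are normally created on demand, so an empty result for that class is not a problem by itself. With the replica counts in that YAML, PD and TiKV together would need six 20Gi volumes.

```python
from __future__ import annotations

import json


def available_pvs(pv_list_json: str, storage_class: str) -> list[tuple[str, str]]:
    """Return (name, capacity) for each PV that is Available in `storage_class`.

    `pv_list_json` is assumed to be the output of `kubectl get pv -o json`.
    """
    result = []
    for pv in json.loads(pv_list_json).get("items", []):
        spec = pv.get("spec", {})
        phase = pv.get("status", {}).get("phase")
        # Only Available (unbound) PVs of the requested class can bind to new PVCs.
        if spec.get("storageClassName") == storage_class and phase == "Available":
            capacity = spec.get("capacity", {}).get("storage", "unknown")
            result.append((pv["metadata"]["name"], capacity))
    return result


# Hypothetical sample: one free local PV and one that is already bound.
SAMPLE_PV_LIST = json.dumps({
    "items": [
        {"metadata": {"name": "local-pv-1"},
         "spec": {"storageClassName": "local-storage", "capacity": {"storage": "20Gi"}},
         "status": {"phase": "Available"}},
        {"metadata": {"name": "local-pv-2"},
         "spec": {"storageClassName": "local-storage", "capacity": {"storage": "20Gi"}},
         "status": {"phase": "Bound"}},
    ]
})

print(available_pvs(SAMPLE_PV_LIST, "local-storage"))  # [('local-pv-1', '20Gi')]
```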

Here is the content of my YAML file:

# IT IS NOT SUITABLE FOR PRODUCTION USE.
# This YAML describes a basic TiDB cluster with minimum resource requirements,
# which should be able to run in any Kubernetes cluster with storage support.
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: tidb-cluster
  namespace: tidb
spec:
  version: "v6.1.0"
  timezone: Asia/Shanghai
  pvReclaimPolicy: Retain
  enableDynamicConfiguration: true
  configUpdateStrategy: RollingUpdate
  discovery: {}
  helper:
    image: alpine:3.16.0
  pd:
    #affinity: {}
    #enableDashboardInternalProxy: true
    baseImage: pingcap/pd
    config: |
      [dashboard]
      internal-proxy = true
    #config:
    #  log:
    #    level:info
    maxFailoverCount: 0
    podSecurityContext: {}
    replicas: 3
    # if storageClassName is not set, the default Storage Class of the Kubernetes cluster will be used
    requests:
      cpu: "1"
      memory: 2000Mi
      storage: 20Gi
    storageClassName: longhorn
    schedulerName: tidb-scheduler
  tidb:
    #affinity: {}
    #annotations:
    #  tidb.pingcap.com/sysctl-init: "true"
    baseImage: pingcap/tidb
    config: |
      [performance]
      tcp-keep-alive = true
    #config:
    #  log:
    #    level: info
    #  performance:
    #    max-procs: 0
    #    tcp-keep-alive: true
    # enableTLSClient: false
    #maxFailoverCount: 3
    #podSecurityContext:
    #  sysctls:
    #  - name: net.ipv4.tcp_keepalive_time
    #    value: "300"
    #  - name: net.ipv4.tcp_keepalive_intvl
    #    value: "75"
    #  - name: net.core.somaxconn
    #    value: "32768"
    maxFailoverCount: 0
    service:
      type: NodePort
      externalTrafficPolicy: Local
    replicas: 3
    requests:
      cpu: "1"
      memory: 2000Mi
    separateSlowLog: true
    slowLogTailer:
      limits:
        cpu: 100m
        memory: 150Mi
      requests:
        cpu: 20m
        memory: 50Mi
  tikv:
    #affinity: {}
    #annotations:
    #  tidb.pingcap.com/sysctl-init: "true"
    #config:
    #  log-level: info
    baseImage: pingcap/tikv
    config: |
      log-level = "info"
    hostNetwork: false
    maxFailoverCount: 0
    # If only 1 TiKV is deployed, the TiKV region leader
    # cannot be transferred during upgrade, so we have
    # to configure a short timeout
    podSecurityContext:
      sysctls:
      - name: net.core.somaxconn
        value: "32768"
    # evictLeaderTimeout: 1m
    privileged: false
    replicas: 3
    # if storageClassName is not set, the default Storage Class of the Kubernetes cluster will be used
    # storageClassName: local-storage
    requests:
      cpu: "1"
      memory: 4Gi
      storage: 20Gi
    storageClassName: longhorn

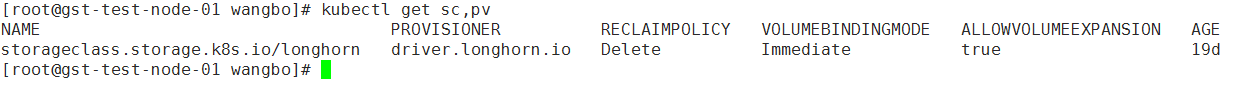

Could you check the output of the following commands?

kubectl get sc,pv

kubectl -n tidb describe tc tidb-cluster

kubectl -n {operator-namespace} logs tidb-controller-manager-{xxxxxx}

reflector.go:127] k8s.io/client-go@v0.19.16/tools/cache/reflector.go:156: Failed to watch *v1alpha1.TidbCluster: failed to list *v1alpha1.TidbCluster: v1alpha1.TidbClusterList.Items: []v1alpha1.TidbCluster: v1alpha1.TidbCluster.Spec: v1alpha1.TidbClusterSpec.PodSecurityContext: PD: v1alpha1.PDSpec.EnableDashboardInternalProxy: Config: unmarshalerDecoder: json: cannot unmarshal string into Go struct field PDConfig.log of type v1alpha1.PDLogConfig, error found in #10 byte of ...|vel:info"},"enableDa|..., bigger context ...|eImage":"pingcap/pd","config":{"log":"level:info"},"enableDashboardInternalProxy":true,"podSecurityC|...
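The log line above is dense; splitting it into its parts makes the failure clearer. A minimal sketch, assuming the message keeps the `cannot unmarshal <json type> into Go struct field <field> of type <type>` shape plus the `bigger context ...|<raw JSON>|...` tail seen here; the regexes are tailored to this one message and are not a general parser for operator logs.

```python
from __future__ import annotations

import re

# Matches the "cannot unmarshal ... into Go struct field ... of type ..." part.
UNMARSHAL_RE = re.compile(
    r"cannot unmarshal (?P<got>\w+) into Go struct field "
    r"(?P<field>[\w.]+) of type (?P<want>[\w.*\[\]]+)"
)
# Matches the raw JSON excerpt in "bigger context ...|<excerpt>|...".
CONTEXT_RE = re.compile(r"bigger context \.\.\.\|(?P<ctx>.*?)\|\.\.\.")


def explain_unmarshal_error(log_line: str) -> dict[str, str | None] | None:
    """Split a 'cannot unmarshal' log line into its parts, or return None."""
    m = UNMARSHAL_RE.search(log_line)
    if m is None:
        return None
    ctx = CONTEXT_RE.search(log_line)
    return {
        "json_type": m.group("got"),          # what the stored JSON actually held
        "go_field": m.group("field"),         # struct.field that failed to decode
        "expected_go_type": m.group("want"),  # the Go type the decoder expected
        "context": ctx.group("ctx") if ctx else None,
    }


# The reflector error from this thread, verbatim.
LOG_LINE = (
    "reflector.go:127] k8s.io/client-go@v0.19.16/tools/cache/reflector.go:156: "
    "Failed to watch *v1alpha1.TidbCluster: failed to list *v1alpha1.TidbCluster: "
    "v1alpha1.TidbClusterList.Items: []v1alpha1.TidbCluster: v1alpha1.TidbCluster.Spec: "
    "v1alpha1.TidbClusterSpec.PodSecurityContext: PD: v1alpha1.PDSpec.EnableDashboardInternalProxy: "
    "Config: unmarshalerDecoder: json: cannot unmarshal string into Go struct field "
    "PDConfig.log of type v1alpha1.PDLogConfig, error found in #10 byte of "
    '...|vel:info"},"enableDa|..., bigger context '
    '...|eImage":"pingcap/pd","config":{"log":"level:info"},'
    '"enableDashboardInternalProxy":true,"podSecurityC|...'
)

info = explain_unmarshal_error(LOG_LINE)
for key, value in info.items():
    print(f"{key}: {value}")
```

Read this way, the message says that `log` under the PD config was stored as a JSON string where the object type `v1alpha1.PDLogConfig` was expected, and the context shows the offending value: `"config":{"log":"level:info"}`.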

Name:         tidb-cluster
Namespace:    tidb
Labels:       <none>
Annotations:  <none>
API Version:  pingcap.com/v1alpha1
Kind:         TidbCluster
Metadata:
  Creation Timestamp:  2022-09-02T10:22:27Z
  Generation:          1
  Managed Fields:
    API Version:  pingcap.com/v1alpha1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:configUpdateStrategy:
        f:discovery:
        f:enableDynamicConfiguration:
        f:helper:
          .:
          f:image:
          f:imagePullPolicy:
        f:pd:
          .:
          f:baseImage:
          f:config:
          f:maxFailoverCount:
          f:podSecurityContext:
          f:replicas:
          f:requests:
            .:
            f:cpu:
            f:memory:
            f:storage:
          f:schedulerName:
          f:storageClassName:
        f:pvReclaimPolicy:
        f:tidb:
          .:
          f:baseImage:
          f:config:
          f:maxFailoverCount:
          f:replicas:
          f:requests:
            .:
            f:cpu:
            f:memory:
          f:separateSlowLog:
          f:service:
            .:
            f:externalTrafficPolicy:
            f:type:
          f:slowLogTailer:
            .:
            f:limits:
              .:
              f:cpu:
              f:memory:
            f:requests:
              .:
              f:cpu:
              f:memory:
        f:tikv:
          .:
          f:baseImage:
          f:config:
          f:hostNetwork:
          f:maxFailoverCount:
          f:podSecurityContext:
            .:
            f:sysctls:
          f:privileged:
          f:replicas:
          f:requests:
            .:
            f:cpu:
            f:memory:
            f:storage:
          f:storageClassName:
        f:timezone:
        f:version:
    Manager:      kubectl
    Operation:    Update
    Time:         2022-09-02T10:22:27Z
  Resource Version:  34851580
  Self Link:         /apis/pingcap.com/v1alpha1/namespaces/tidb/tidbclusters/tidb-cluster
  UID:               3cb96e92-0953-4912-8a5d-7551d904d177
Spec:
  Config Update Strategy:        RollingUpdate
  Discovery:
  Enable Dynamic Configuration:  true
  Helper:
    Image:              alpine:3.16.0
    Image Pull Policy:  IfNotPresent
  Pd:
    Base Image:  pingcap/pd
    Config:      [dashboard]
                 internal-proxy = true
    Max Failover Count:  0
    Pod Security Context:
    Replicas:  1
    Requests:
      Cpu:      1
      Memory:   2000Mi
      Storage:  20Gi
    Scheduler Name:      tidb-scheduler
    Storage Class Name:  longhorn
  Pv Reclaim Policy:  Retain
  Tidb:
    Base Image:  pingcap/tidb
    Config:      [performance]
                 tcp-keep-alive = true
    Max Failover Count:  0
    Replicas:            1
    Requests:
      Cpu:     1
      Memory:  2000Mi
    Separate Slow Log:  true
    Service:
      External Traffic Policy:  Local
      Type:                     NodePort
    Slow Log Tailer:
      Limits:
        Cpu:     100m
        Memory:  150Mi
      Requests:
        Cpu:     20m
        Memory:  50Mi
  Tikv:
    Base Image:  pingcap/tikv
    Config:      log-level = "info"
    Host Network:        false
    Max Failover Count:  0
    Pod Security Context:
      Sysctls:
        Name:   net.core.somaxconn
        Value:  32768
    Privileged:  false
    Replicas:    1
    Requests:
      Cpu:      1
      Memory:   4Gi
      Storage:  20Gi
    Storage Class Name:  longhorn
  Timezone:  Asia/Shanghai
  Version:   v6.1.0
Events:      <none>
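The describe output above has no Status section and no events, which usually means the operator has never reconciled the object; that fits the controller-manager failing to list TidbClusters. A minimal sketch to spot such objects, assuming you save `kubectl get tc -A -o json` to a string. Treating a missing or empty `status` as "never reconciled" is a heuristic, not an operator API guarantee, and the second object in the sample (namespace, name, status contents) is hypothetical.

```python
from __future__ import annotations

import json


def unreconciled_clusters(tc_list_json: str) -> list[str]:
    """Return namespace/name of TidbClusters that carry no status block.

    Heuristic: once tidb-operator processes a TidbCluster it normally writes
    a status, so a missing or empty one hints the operator never got to it.
    """
    names = []
    for tc in json.loads(tc_list_json).get("items", []):
        if not tc.get("status"):
            meta = tc.get("metadata", {})
            names.append(f"{meta.get('namespace')}/{meta.get('name')}")
    return names


# Sample: the cluster from this thread (no status), plus a hypothetical
# cluster whose status the operator has already written.
SAMPLE_TC_LIST = json.dumps({
    "items": [
        {"metadata": {"namespace": "tidb", "name": "tidb-cluster"},
         "spec": {"version": "v6.1.0"}},
        {"metadata": {"namespace": "demo", "name": "healthy-cluster"},
         "spec": {"version": "v6.1.0"},
         "status": {"pd": {"phase": "Normal"}}},
    ]
})

print(unreconciled_clusters(SAMPLE_TC_LIST))  # ['tidb/tidb-cluster']
```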

The error shown is Failed to watch *v1alpha1.TidbCluster, json: cannot unmarshal.

My guess is that pd.EnableDashboardInternalProxy may be a required field in the v1alpha1 CRD.

I suggest following the official docs and using the latest operator v1.3.7 with the v1 CRDs:

https://docs.pingcap.com/zh/tidb-in-kubernetes/stable/get-started#安装-tidb-operator-crds

Thanks for your help. I've already gone through all of these settings. My Kubernetes version is v1.18.18.

My tidb-operator is v1.3.7 and I'm also using the latest CRDs. Here is the output of kubectl get crd:

NAME                                    CREATED AT
alertmanagers.monitoring.coreos.com     2022-04-20T07:20:58Z
backingimagedatasources.longhorn.io     2022-08-13T12:32:48Z
backingimagemanagers.longhorn.io        2022-08-13T12:32:48Z
backingimages.longhorn.io               2022-08-13T12:32:48Z
backups.longhorn.io                     2022-08-13T12:32:48Z
backups.pingcap.com                     2022-08-14T03:55:22Z
backupschedules.pingcap.com             2022-08-14T03:55:22Z
backuptargets.longhorn.io               2022-08-13T12:32:48Z
backupvolumes.longhorn.io               2022-08-13T12:32:48Z
dmclusters.pingcap.com                  2022-08-14T03:55:23Z
engineimages.longhorn.io                2022-08-13T12:32:48Z
engines.longhorn.io                     2022-08-13T12:32:48Z
instancemanagers.longhorn.io            2022-08-13T12:32:48Z
nodes.longhorn.io                       2022-08-13T12:32:48Z
prometheuses.monitoring.coreos.com      2022-04-20T07:20:58Z
prometheusrules.monitoring.coreos.com   2022-04-20T07:20:58Z
recurringjobs.longhorn.io               2022-08-13T12:32:48Z
replicas.longhorn.io                    2022-08-13T12:32:48Z
restores.pingcap.com                    2022-08-14T03:55:23Z
servicemonitors.monitoring.coreos.com   2022-04-20T07:20:58Z
settings.longhorn.io                    2022-08-13T12:32:48Z
sharemanagers.longhorn.io               2022-08-13T12:32:48Z
tidbclusterautoscalers.pingcap.com      2022-08-14T03:55:23Z
tidbclusters.pingcap.com                2022-08-14T03:55:23Z
tidbinitializers.pingcap.com            2022-08-14T03:55:24Z
tidbmonitors.pingcap.com                2022-08-14T03:55:24Z
tidbngmonitorings.pingcap.com           2022-08-14T03:55:24Z
volumes.longhorn.io                     2022-08-13T12:32:48Z

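I misread it. The error is a syntax error in the TC (v1alpha1) object, and the message points to spec.pd.config. Try setting spec.pd.config to an empty {} first.

Thanks for your help. Setting it to {} still gives the same error, so that doesn't seem to be the root cause.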
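One thing worth checking, offered as an assumption rather than a diagnosis: the reflector error comes from *listing* TidbCluster objects (in its default cluster-scoped mode the operator watches all namespaces), and the raw JSON in its context, `"config":{"log":"level:info"}` together with `"enableDashboardInternalProxy":true`, matches neither the YAML posted above (where both lines are commented out) nor the describe output (where the PD config is a TOML string and Replicas is 1, not 3). The failing object may therefore be a different TidbCluster elsewhere in the cluster, which would be consistent with editing this one to `{}` changing nothing. In YAML, `level:info` without a space after the colon is a plain string rather than a key/value pair, which is how `log` ends up as a string. A minimal sketch that scans `kubectl get tc -A -o json` for such objects (kubectl reads them as generic JSON, so it should not trip over the same decoding error); the `other-ns/old-cluster` object in the sample is hypothetical.

```python
from __future__ import annotations

import json


def find_bad_pd_log_config(tc_list_json: str) -> list[str]:
    """Return namespace/name of TidbClusters whose spec.pd.config.log is not a mapping.

    `tc_list_json` is assumed to be the output of `kubectl get tc -A -o json`.
    A TOML string config (`config: |`) is skipped; only the map form is checked.
    """
    bad = []
    for tc in json.loads(tc_list_json).get("items", []):
        config = tc.get("spec", {}).get("pd", {}).get("config")
        if isinstance(config, dict) and "log" in config and not isinstance(config["log"], dict):
            meta = tc.get("metadata", {})
            bad.append(f"{meta.get('namespace')}/{meta.get('name')}")
    return bad


# Sample: the TC from this thread (TOML string config) plus a hypothetical
# leftover TC whose YAML had "log:\n  level:info" (no space after the colon),
# which YAML parses as the string "level:info".
SAMPLE_TCS = json.dumps({
    "items": [
        {"metadata": {"namespace": "tidb", "name": "tidb-cluster"},
         "spec": {"pd": {"config": "[dashboard]\ninternal-proxy = true\n"}}},
        {"metadata": {"namespace": "other-ns", "name": "old-cluster"},
         "spec": {"pd": {"enableDashboardInternalProxy": True,
                         "config": {"log": "level:info"}}}},
    ]
})

print(find_bad_pd_log_config(SAMPLE_TCS))  # ['other-ns/old-cluster']
```

If such an object turns up, writing the key as `level: info` (with a space) or deleting the stale object should let the operator list TidbClusters again; this is an expectation, not something confirmed in this thread.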

The indentation in the tc YAML content above is all off. Could you upload the file directly as an attachment?