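To help us resolve this quickly, please provide the following information. A clear problem description gets a faster answer:

【TiDB version】

v4.0.11

【Problem description】

TiFlash suddenly crashed and cannot be restarted. The log keeps reporting the errors in the attachment below.

For performance tuning or troubleshooting questions, please download and run the script, then select all of the terminal output and paste it here.

The logs are as follows: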

tiflash_log.zip (2.2 MB)

Please upload the TiFlash error log and the TiFlash log as files.

The files are as follows:

tiflash_error.zip (41.1 KB) tiflash_log.zip (2.2 MB)

[tidb@ip-10-18-253-5 ~]$ tiup ctl:v4.0.11 pd -u http://10.18.253.83:2379 config show

Starting component ctl: /home/tidb/.tiup/components/ctl/v4.0.11/ctl pd -u http://10.18.253.83:2379 config show

{

“replication”: {

“enable-placement-rules”: “true”,

“location-labels”: “”,

“max-replicas”: 3,

“strictly-match-label”: “false”

},

“schedule”: {

“enable-cross-table-merge”: “false”,

“enable-debug-metrics”: “false”,

“enable-location-replacement”: “true”,

“enable-make-up-replica”: “true”,

“enable-one-way-merge”: “false”,

“enable-remove-down-replica”: “true”,

“enable-remove-extra-replica”: “true”,

“enable-replace-offline-replica”: “true”,

“high-space-ratio”: 0.7,

“hot-region-cache-hits-threshold”: 3,

“hot-region-schedule-limit”: 4,

“leader-schedule-limit”: 4,

“leader-schedule-policy”: “count”,

“low-space-ratio”: 0.8,

“max-merge-region-keys”: 200000,

“max-merge-region-size”: 20,

“max-pending-peer-count”: 16,

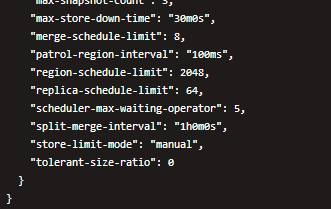

“max-snapshot-count”: 3,

“max-store-down-time”: “30m0s”,

“merge-schedule-limit”: 8,

“patrol-region-interval”: “100ms”,

“region-schedule-limit”: 2048,

“replica-schedule-limit”: 64,

“scheduler-max-waiting-operator”: 5,

“split-merge-interval”: “1h0m0s”,

“store-limit-mode”: “manual”,

“tolerant-size-ratio”: 0

}

}

Please provide the log of the PD at 10.18.253.83:2379.

pd_log.zip (4.9 MB)

Can you roughly tell at this point what caused TiFlash to go down and fail to start?

The PD log contains: [2021/05/23 05:23:22.432 +00:00] [WARN] [grpclog.go:60] ["transport: http2Server.HandleStreams failed to read frame: read tcp 10.18.253.83:2379->10.18.253.69:38752: read: connection reset by peer"]

Please check whether there is a network problem.
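To see whether the resets come from one host or many, the PD log can be tallied by remote address. The following is only a rough sketch: the regular expression is inferred from the single warning line quoted above, not from any PD log specification, and `count_resets_by_peer` is a hypothetical helper name.

```python
import re
from collections import Counter

# Pattern inferred from the one warning line quoted in this thread
# (IPv4 "local:port->remote:port"); other log shapes are not handled.
RESET_RE = re.compile(
    r"read tcp (?P<local>\S+)->(?P<remote>[^\s:]+):(?P<port>\d+): "
    r"read: connection reset by peer"
)

def count_resets_by_peer(lines):
    """Return a Counter mapping remote IP -> number of connection resets."""
    counts = Counter()
    for line in lines:
        match = RESET_RE.search(line)
        if match:
            counts[match.group("remote")] += 1
    return counts

# The warning quoted above, used as sample input.
sample = [
    '[2021/05/23 05:23:22.432 +00:00] [WARN] [grpclog.go:60] '
    '["transport: http2Server.HandleStreams failed to read frame: '
    'read tcp 10.18.253.83:2379->10.18.253.69:38752: '
    'read: connection reset by peer"]',
]
print(count_resets_by_peer(sample))
```

On a real log you would pass the open file object instead of `sample`. If nearly all resets point at the hosts where TiFlash is already down, the warnings are more likely a symptom than the cause.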

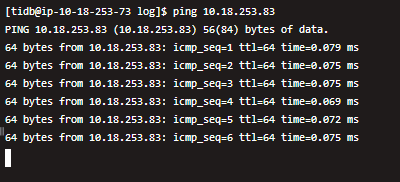

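ping works fine, but telnet does not connect, because the TiFlash instances on .69 and .73 are down. Also, port 38752 on .69 is not in use.

My guess is that after TiKV finishes a write it replicates to TiFlash and then reports this connection error. Since the TiFlash services on .69 and .73 are already down, the connection cannot succeed.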

From the TiFlash node, telnet to 10.18.253.83:2379.

Have there been any changes to the network?
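If telnet is not installed on the TiFlash node, the same reachability check can be approximated with a few lines of Python. This is a minimal sketch using only the standard library; `can_connect` is a hypothetical helper, and a successful TCP connect only shows that the port is reachable, not that PD is healthy.

```python
import socket

def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False

# Example call, run from the TiFlash node (address taken from this thread):
#   can_connect("10.18.253.83", 2379)
```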

Both ping and telnet work fine.

Please also provide the TiFlash manager log.

That is tiflash_cluster_manager.log. Unless the default has been changed, it is in the TiFlash log directory.

tiflash_manager.zip (1.6 KB)

On the TiFlash node, run curl http://10.18.253.12:2379/pd/api/v1/members

    [tidb@ip-10-18-253-73 log]$ curl http://10.18.253.12:2379/pd/api/v1/members
    {
      "header": {
        "cluster_id": 6938337866423754123
      },
      "members": [
        {
          "name": "pd-10.18.253.83-2379",
          "member_id": 98094734768936214,
          "peer_urls": [
            "http://10.18.253.83:2380"
          ],
          "client_urls": [
            "http://10.18.253.83:2379"
          ],
          "deploy_path": "/data/tidb-deploy/pd-2379/bin",
          "binary_version": "v4.0.11",
          "git_hash": "96efd6f402361f2f84e39ea5b3a7a6f6b10acfb1"
        },
        {
          "name": "pd-10.18.253.106-2379",
          "member_id": 10368929817371469603,
          "peer_urls": [
            "http://10.18.253.106:2380"
          ],
          "client_urls": [
            "http://10.18.253.106:2379"
          ],
          "deploy_path": "/data/tidb-deploy/pd-2379/bin",
          "binary_version": "v4.0.11",
          "git_hash": "96efd6f402361f2f84e39ea5b3a7a6f6b10acfb1"
        },
        {
          "name": "pd-10.18.253.12-2379",
          "member_id": 11416212041977913084,
          "peer_urls": [
            "http://10.18.253.12:2380"
          ],
          "client_urls": [
            "http://10.18.253.12:2379"
          ],
          "deploy_path": "/data/tidb-deploy/pd-2379/bin",
          "binary_version": "v4.0.11",
          "git_hash": "96efd6f402361f2f84e39ea5b3a7a6f6b10acfb1"
        }
      ],
      "leader": {
        "name": "pd-10.18.253.83-2379",
        "member_id": 98094734768936214,
        "peer_urls": [
          "http://10.18.253.83:2380"
        ],
        "client_urls": [
          "http://10.18.253.83:2379"
        ]
      },
      "etcd_leader": {
        "name": "pd-10.18.253.83-2379",
        "member_id": 98094734768936214,
        "peer_urls": [
          "http://10.18.253.83:2380"
        ],
        "client_urls": [
          "http://10.18.253.83:2379"
        ],
        "deploy_path": "/data/tidb-deploy/pd-2379/bin",
        "binary_version": "v4.0.11",
        "git_hash": "96efd6f402361f2f84e39ea5b3a7a6f6b10acfb1"
      }
    }
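The members output above is long, so a short script can condense it into the few facts that are easy to misread by eye. This is a sketch only: the field names come from the response posted above, while `summarize_members` and the choice of checks (leader is a listed member, leader and etcd_leader agree, one binary version) are assumptions for illustration, not an official health check.

```python
import json

def summarize_members(payload):
    """Summarize a PD /pd/api/v1/members response given as a JSON string."""
    data = json.loads(payload)
    members = data.get("members", [])
    names = [m["name"] for m in members]
    # Distinct binary versions across members; more than one suggests a mixed cluster.
    versions = sorted({m.get("binary_version", "unknown") for m in members})
    leader = data.get("leader", {}).get("name")
    etcd_leader = data.get("etcd_leader", {}).get("name")
    return {
        "member_count": len(members),
        "leader": leader,
        "leader_is_member": leader in names,
        "leaders_agree": leader == etcd_leader,
        "versions": versions,
    }

# Trimmed sample based on the output posted in this thread.
sample = json.dumps({
    "members": [
        {"name": "pd-10.18.253.83-2379", "binary_version": "v4.0.11"},
        {"name": "pd-10.18.253.106-2379", "binary_version": "v4.0.11"},
        {"name": "pd-10.18.253.12-2379", "binary_version": "v4.0.11"},
    ],
    "leader": {"name": "pd-10.18.253.83-2379"},
    "etcd_leader": {"name": "pd-10.18.253.83-2379"},
})
print(summarize_members(sample))
```

In practice the string passed in would be the body returned by the curl command above rather than the trimmed sample.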

We are already investigating this internally.

Please check the tiflash section shown by tiup cluster edit-config. What do the parameter settings look like?