【 TiDB 使用环境】生产环境 or 测试环境 or POC

测试环境

【 TiDB 版本】

v6.2

【遇到的问题】

通过jdbc查询6亿数据量的大表,返回全部数据,执行到25分59秒时,报错:TiFlash server timeout

执行的sql:

SELECT

`arch_safe_demo_building_plan_10y_1000partition_7index`.`id`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`data_id`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`duplicate_id`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`order_number`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`flag`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`amount`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`scenario`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`version`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`account`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`years`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`period`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`entity`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`industrial`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`building`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`section`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`contract_num`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`contract_first`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`contract_second`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`contract_content`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`misc_project`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`misc1`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`misc2`,

`arch_safe_demo_building_plan_10y_1000partition_7index`.`misc3`

FROM

`test`.`arch_safe_demo_building_plan_10y_1000partition_7index`

检查过超时参数,wait_timeout是28800,其他也都是默认值,没问题。

同样有一张1亿的表,可以正常查询,大概耗时70分钟。

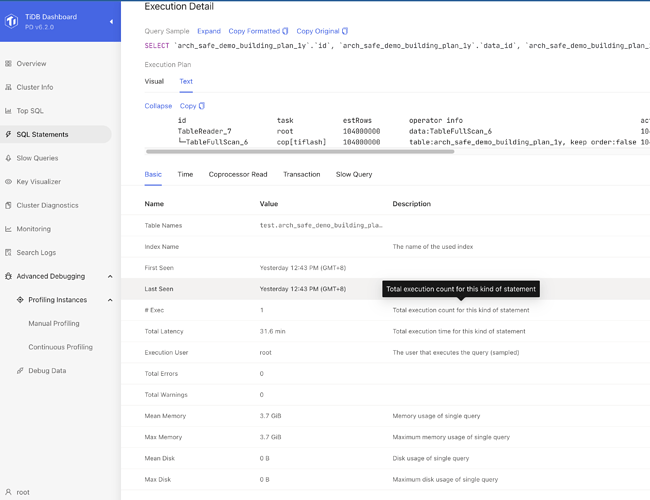

一亿的查询截图

【复现路径】

做过哪些操作出现的问题【问题现象及影响】

【附件】 相关日志及监控(https://metricstool.pingcap.com/)

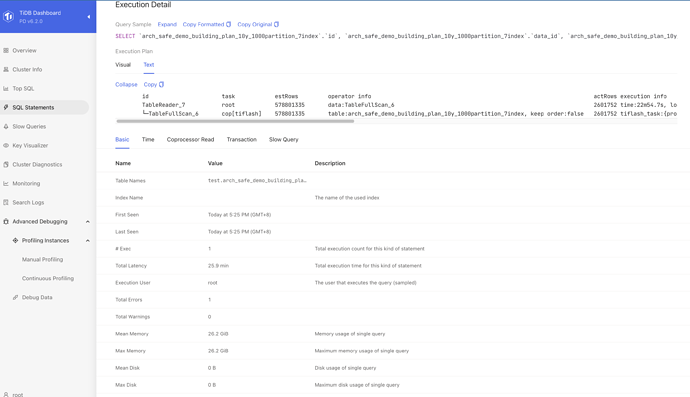

sql执行情况

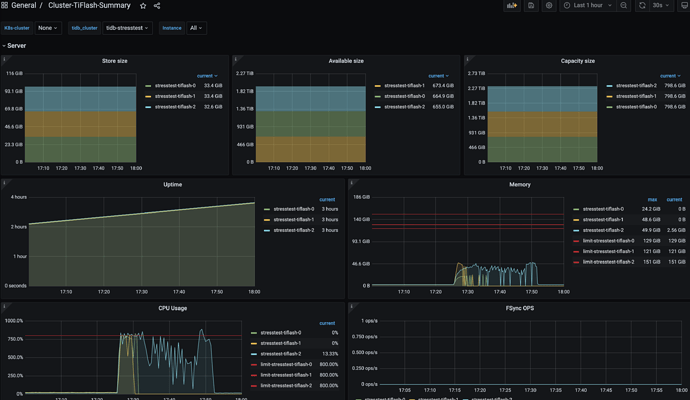

tiflash监控

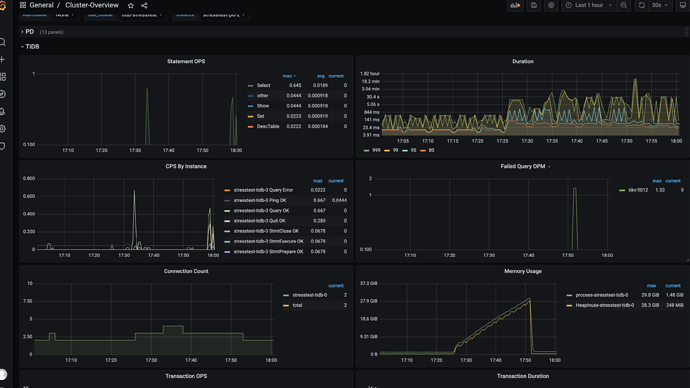

tidb监控

tidb错误日志,tiflash未发现错误日志

2022-08-25T17:51:25.661604677+08:00 [2022/08/25 17:51:25.660 +08:00] [INFO] [coprocessor.go:865] [“[TIME_COP_PROCESS] resp_time:1m1.796102256s txnStartTS:435531161164316675 region_id:5264227 store_addr:stresstest-tiflash-0.stresstest-tiflash-peer.tidb.svc:3930 backoff_ms:41764 backoff_types:[tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC,regionMiss,tiflashRPC]”] [conn=608964515043741975]

2022-08-25T17:51:25.661662334+08:00 [2022/08/25 17:51:25.660 +08:00] [WARN] [backoff.go:158] [“regionMiss backoffer.maxSleep 40000ms is exceeded, errors:

send tiflash request error: rpc error: code = DeadlineExceeded desc = context deadline exceeded, ctx: region ID: 5264227, meta: id:5264227 start_key:"t\200\000\000\000\000\000\005\221_r" end_key:"t\200\000\000\000\000\000\005\222" region_epoch:<conf_ver:11 version:2240 > peers:<id:5264228 store_id:1 > peers:<id:5264229 store_id:4 > peers:<id:5264230 store_id:5 > peers:<id:29609002 store_id:2751002 role:Learner > peers:<id:41795700 store_id:2752001 role:Learner > , peer: id:29609002 store_id:2751002 role:Learner , addr: stresstest-tiflash-0.stresstest-tiflash-peer.tidb.svc:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2022-08-25T17:50:22.061018854+08:00

region_id:5264227, region_ver:2240, store_type:tiflash, peer_addr:stresstest-tiflash-0.stresstest-tiflash-peer.tidb.svc:3930, error:epoch_not_match:<> at 2022-08-25T17:50:23.354344981+08:00

send tiflash request error: rpc error: code = DeadlineExceeded desc = context deadline exceeded, ctx: region ID: 5264227, meta: id:5264227 start_key:"t\200\000\000\000\000\000\005\221_r" end_key:"t\200\000\000\000\000\000\005\222" region_epoch:<conf_ver:11 version:2240 > peers:<id:5264228 store_id:1 > peers:<id:5264229 store_id:4 > peers:<id:5264230 store_id:5 > peers:<id:29609002 store_id:2751002 role:Learner > peers:<id:41795700 store_id:2752001 role:Learner > , peer: id:29609002 store_id:2751002 role:Learner , addr: stresstest-tiflash-0.stresstest-tiflash-peer.tidb.svc:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2022-08-25T17:51:23.865736172+08:00

longest sleep type: tiflashRPC, time: 33254ms”]

2022-08-25T17:51:25.670991107+08:00 [2022/08/25 17:51:25.670 +08:00] [INFO] [conn.go:1165] [“command dispatched failed”] [conn=608964515043741975] [connInfo=“id:608964515043741975, addr:10.233.103.0:19688 status:10, collation:utf8mb4_bin, user:root”] [command=Query] [status=“inTxn:0, autocommit:1”] [sql=“SELECT arch_safe_demo_building_plan_10y_1000partition_7index.id, arch_safe_demo_building_plan_10y_1000partition_7index.data_id, arch_safe_demo_building_plan_10y_1000partition_7index.duplicate_id, arch_safe_demo_building_plan_10y_1000partition_7index.order_number, arch_safe_demo_building_plan_10y_1000partition_7index.flag, arch_safe_demo_building_plan_10y_1000partition_7index.amount, arch_safe_demo_building_plan_10y_1000partition_7index.scenario, arch_safe_demo_building_plan_10y_1000partition_7index.version, arch_safe_demo_building_plan_10y_1000partition_7index.account, arch_safe_demo_building_plan_10y_1000partition_7index.years, arch_safe_demo_building_plan_10y_1000partition_7index.period, arch_safe_demo_building_plan_10y_1000partition_7index.entity, arch_safe_demo_building_plan_10y_1000partition_7index.industrial, arch_safe_demo_building_plan_10y_1000partition_7index.building, arch_safe_demo_building_plan_10y_1000partition_7index.section, arch_safe_demo_building_plan_10y_1000partition_7index.contract_num, arch_safe_demo_building_plan_10y_1000partition_7index.contract_first, arch_safe_demo_building_plan_10y_1000partition_7index.contract_second, arch_safe_demo_building_plan_10y_1000partition_7index.contract_content, arch_safe_demo_building_plan_10y_1000partition_7index.misc_project, arch_safe_demo_building_plan_10y_1000partition_7index.misc1, arch_safe_demo_building_plan_10y_1000partition_7index.misc2, arch_safe_demo_building_plan_10y_1000partition_7index.misc3

FROM test.arch_safe_demo_building_plan_10y_1000partition_7index”] [txn_mode=PESSIMISTIC] [timestamp=435531161164316675] [err=“[tikv:9012]TiFlash server timeout

github.com/pingcap/errors.AddStack

\t/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20211224045212-9687c2b0f87c/errors.go:174

github.com/pingcap/errors.Trace

\t/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20211224045212-9687c2b0f87c/juju_adaptor.go:15

github.com/pingcap/tidb/store/copr.(*copIteratorWorker).handleCopResponse

\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/copr/coprocessor.go:919

github.com/pingcap/tidb/store/copr.(*copIteratorWorker).handleCopPagingResult

\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/copr/coprocessor.go:877

github.com/pingcap/tidb/store/copr.(*copIteratorWorker).handleTaskOnce

\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/copr/coprocessor.go:816

github.com/pingcap/tidb/store/copr.(*copIteratorWorker).handleTask

\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/copr/coprocessor.go:702

github.com/pingcap/tidb/store/copr.(*copIteratorWorker).run

\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/copr/coprocessor.go:444

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1571”]

2022-08-25T17:51:37.539916689+08:00 [2022/08/25 17:51:37.538 +08:00] [WARN] [pd.go:152] [“get timestamp too slow”] [“cost time”=74.163858ms]

2022-08-25T17:51:48.997102917+08:00 [2022/08/25 17:51:48.996 +08:00] [ERROR] [grpclogger.go:116] [“[transport]transport: Got too many pings from the client, closing the connection.”] [system=grpc] [grpc_log=true]

2022-08-25T17:51:48.998239038+08:00 [2022/08/25 17:51:48.997 +08:00] [ERROR] [grpclogger.go:116] [“[transport]transport: loopyWriter.run returning. Err: transport: Connection closing”] [system=grpc] [grpc_log=true]

2022-08-25T17:52:35.628564557+08:00 [2022/08/25 17:52:35.627 +08:00] [INFO] [gc_worker.go:601] [“[gc worker] last safe point is later than current one.No need to gc.This might be caused by manually enlarging gc lifetime”] [“leaderTick on”=60b4c9fd3dc0001] [“last safe point”=2022/08/25 10:06:29.000 +08:00] [“current safe point”=2022/08/25 09:52:35.540 +08:00]

2022-08-25T17:52:38.899956904+08:00 [2022/08/25 17:52:38.899 +08:00] [ERROR] [grpclogger.go:116] [“[transport]transport: Got too many pings from the client, closing the connection.”] [system=grpc] [grpc_log=true]

2022-08-25T17:52:38.900414472+08:00 [2022/08/25 17:52:38.900 +08:00] [ERROR] [grpclogger.go:116] [“[transport]transport: loopyWriter.run returning. Err: transport: Connection closing”] [system=grpc] [grpc_log=true]

2022-08-25T17:53:07.652825023+08:00 [2022/08/25 17:53:07.644 +08:00] [WARN] [pd.go:152] [“get timestamp too slow”] [“cost time”=98.383067ms]

若提问为性能优化、故障排查类问题,请下载脚本运行。终端输出的打印结果,请务必全选并复制粘贴上传。