Background

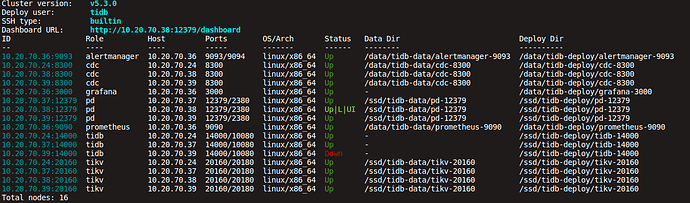

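A TiDB cluster has three tidb nodes, and one of them went down. I am not sure whether a particular DELETE statement caused it: I did not check beforehand how much data it would delete, and only found out afterwards that a single DELETE removed far too much data, which may be what brought that tidb node down.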

After noticing that the tidb node was down, I tried to start it again with tiup cluster restart --node ip:port, but the command printed the following:

Error: failed to start tidb: failed to start: 10.20.70.39 tidb-14000.service, please check the instance's log(/ssd/tidb-deploy/tidb-14000/log) for more detail.: timed out waiting for port 14000 to be started after 2m0s

Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2022-08-23-22-06-23.log.

Error: run `/home/tidb/.tiup/components/cluster/v1.7.0/tiup-cluster` (wd:/home/tidb/.tiup/data/TFLI7w1) failed: exit status 1

Key information from /home/tidb/.tiup/logs/tiup-cluster-debug-2022-08-23-22-06-23.log:

2022-08-23T22:06:23.264+0800 DEBUG retry error: operation timed out after 2m0s

2022-08-23T22:06:23.264+0800 DEBUG TaskFinish {"task": "StartCluster", "error": "failed to start tidb: failed to start: 10.20.70.39 tidb-14000.service, please check the instance's log(/ssd/tidb-deploy/tidb-14000/log) for more detail.: timed out waiting for port 14000 to be started after 2m0s", "errorVerbose": "timed out waiting for port 14000 to be started after 2m0s\

github.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\

\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\

github.com/pingcap/tiup/pkg/cluster/spec.PortStarted\

\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:115\

github.com/pingcap/tiup/pkg/cluster/spec.(*BaseInstance).Ready\

\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:147\

github.com/pingcap/tiup/pkg/cluster/operation.startInstance\

\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:359\

github.com/pingcap/tiup/pkg/cluster/operation.StartComponent.func1\

\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:485\

golang.org/x/sync/errgroup.(*Group).Go.func1\

\tgolang.org/x/sync@v0.0.0-20210220032951-036812b2e83c/errgroup/errgroup.go:57\

runtime.goexit\

\truntime/asm_amd64.s:1581\

failed to start: 10.20.70.39 tidb-14000.service, please check the instance's log(/ssd/tidb-deploy/tidb-14000/log) for more detail.\

failed to start tidb"}

2022-08-23T22:06:23.265+0800 INFO Execute command finished {"code": 1, "error": "failed to start tidb: failed to start: 10.20.70.39 tidb-14000.service, please check the instance's log(/ssd/tidb-deploy/tidb-14000/log) for more detail.: timed out waiting for port 14000 to be started after 2m0s", "errorVerbose": "timed out waiting for port 14000 to be started after 2m0s\

github.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\

\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\

github.com/pingcap/tiup/pkg/cluster/spec.PortStarted\

\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:115\

github.com/pingcap/tiup/pkg/cluster/spec.(*BaseInstance).Ready\

\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:147\

github.com/pingcap/tiup/pkg/cluster/operation.startInstance\

\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:359\

github.com/pingcap/tiup/pkg/cluster/operation.StartComponent.func1\

\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:485\

golang.org/x/sync/errgroup.(*Group).Go.func1\

\tgolang.org/x/sync@v0.0.0-20210220032951-036812b2e83c/errgroup/errgroup.go:57\

runtime.goexit\

\truntime/asm_amd64.s:1581\

failed to start: 10.20.70.39 tidb-14000.service, please check the instance's log(/ssd/tidb-deploy/tidb-14000/log) for more detail.\

failed to start tidb"}
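The "timed out waiting for port 14000 to be started after 2m0s" message, together with the WaitFor and PortStarted frames in the stack, suggests that tiup started the service and then polled the port until a deadline passed. The Python sketch below illustrates that kind of check so it can be repeated by hand while watching the tidb log. It is only an illustration, not tiup's actual implementation; the function name `wait_for_port` and its parameters are hypothetical.

```python
import socket
import time


def wait_for_port(host, port, timeout_s=120.0, interval_s=1.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout.

    Hypothetical helper: it only mimics a "wait until the port is open" check.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # A successful connect means some process is listening on the port.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Connection refused or timed out: the port is not open yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For the node in this post, the call would be `wait_for_port("10.20.70.39", 14000)`. A `False` result only says that nothing is listening on the port; the reason still has to come from the tidb log under /ssd/tidb-deploy/tidb-14000/log.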

The tidb log shows the following:

:32.719 +08:00] [INFO] [domain.go:506] ["globalConfigSyncerKeeper exited."]

[2022/08/23 19:27:32.719 +08:00] [WARN] [manager.go:291] ["is not the owner"] ["owner info"="[bindinfo] /tidb/bindinfo/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:32.719 +08:00] [INFO] [domain.go:1187] ["PlanReplayerLoop exited."]

[2022/08/23 19:27:32.719 +08:00] [INFO] [manager.go:258] ["break campaign loop, context is done"] ["owner info"="[bindinfo] /tidb/bindinfo/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:32.719 +08:00] [INFO] [domain.go:933] ["loadPrivilegeInLoop exited."]

[2022/08/23 19:27:32.719 +08:00] [WARN] [manager.go:291] ["is not the owner"] ["owner info"="[telemetry] /tidb/telemetry/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:32.719 +08:00] [INFO] [domain.go:1165] ["TelemetryRotateSubWindowLoop exited."]

[2022/08/23 19:27:32.719 +08:00] [INFO] [manager.go:345] ["watcher is closed, no owner"] ["owner info"="[stats] ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53 watch owner key /tidb/stats/owner/161581faba041325"]

[2022/08/23 19:27:32.719 +08:00] [INFO] [domain.go:560] ["loadSchemaInLoop exited."]

[2022/08/23 19:27:32.719 +08:00] [INFO] [domain.go:1061] ["globalBindHandleWorkerLoop exited."]

[2022/08/23 19:27:32.719 +08:00] [INFO] [manager.go:249] ["etcd session is done, creates a new one"] ["owner info"="[telemetry] /tidb/telemetry/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:32.720 +08:00] [INFO] [manager.go:253] ["break campaign loop, NewSession failed"] ["owner info"="[telemetry] /tidb/telemetry/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"] [error="context canceled"]

[2022/08/23 19:27:32.720 +08:00] [WARN] [manager.go:291] ["is not the owner"] ["owner info"="[stats] /tidb/stats/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:32.720 +08:00] [INFO] [domain.go:452] ["topNSlowQueryLoop exited."]

[2022/08/23 19:27:33.826 +08:00] [INFO] [manager.go:277] ["failed to campaign"] ["owner info"="[stats] /tidb/stats/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"] [error="context canceled"]

[2022/08/23 19:27:33.827 +08:00] [INFO] [manager.go:249] ["etcd session is done, creates a new one"] ["owner info"="[stats] /tidb/stats/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"]

[2022/08/23 19:27:33.827 +08:00] [INFO] [manager.go:253] ["break campaign loop, NewSession failed"] ["owner info"="[stats] /tidb/stats/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"] [error="context canceled"]

[2022/08/23 19:27:33.953 +08:00] [INFO] [manager.go:302] ["revoke session"] ["owner info"="[telemetry] /tidb/telemetry/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"] [error="rpc error: code = Canceled desc = grpc: the client connection is closing"]

[2022/08/23 19:27:33.953 +08:00] [INFO] [domain.go:1135] ["TelemetryReportLoop exited."]

[2022/08/23 19:27:33.971 +08:00] [INFO] [manager.go:302] ["revoke session"] ["owner info"="[bindinfo] /tidb/bindinfo/owner ownerManager 4c6db34a-8534-43bb-aa8c-45d8a40f0a53"] [error="rpc error: code = Canceled desc = grpc: the client connection is closing"]

[2022/08/23 19:27:33.971 +08:00

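Since I could not get the restart to work, I decided to take a more forceful approach: scale in the tidb node whose status was Down, then scale out a new tidb node. The scale-out failed as well, with the following error: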

Error: executor.ssh.execute_failed: Failed to execute command over SSH for 'tidb@10.20.70.38:22' {ssh_stderr: , ssh_stdout: [2022/08/23 21:56:26.080 +08:00] [FATAL] [terror.go:292]["unexpected error"] [error="toml: cannot load TOML value of type string into a Go integer"] [stack="github.com/pingcap/tidb/parser/terror.MustNil\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/parser/terror/terror.go:292\

github.com/pingcap/tidb/config.InitializeConfig\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/config/config.go:796\

main.main\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/tidb-server/main.go:177\

runtime.main\

\t/usr/local/go/src/runtime/proc.go:225"] [stack="github.com/pingcap/tidb/parser/terror.MustNil\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/parser/terror/terror.go:292\

github.com/pingcap/tidb/config.InitializeConfig\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/config/config.go:796\

main.main\

\t/home/jenkins/agent/workspace/optimization-build-tidb-linux-amd/go/src/github.com/pingcap/tidb/tidb-server/main.go:177\

runtime.main\

\t/usr/local/go/src/runtime/proc.go:225"]

, ssh_command: export LANG=C; PATH=$PATH:/bin:/sbin:/usr/bin:/usr/sbin /ssd/tidb-deploy/tidb-14000/bin/tidb-server --config-check --config=/ssd/tidb-deploy/tidb-14000/conf/tidb.toml }, cause: Process exited with status 1: check config failed

Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2022-08-23-21-56-35.log.

Error: run `/home/tidb/.tiup/components/cluster/v1.7.0/tiup-cluster` (wd:/home/tidb/.tiup/data/TFLG7Ha) failed: exit status 1
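The FATAL line above comes from `tidb-server --config-check` and reports "cannot load TOML value of type string into a Go integer", which points at a value in tidb.toml that is written as a quoted string where the config expects an integer. The Python sketch below is a rough way to list candidates in a copy of the file. It is a heuristic line scan, not a full TOML parser, and the function name `find_quoted_integers` is hypothetical. It cannot tell which keys TiDB actually expects to be integers, so every hit still needs to be checked against the configuration reference.

```python
import re

# Matches a table header such as [log] or [[items]], with an optional comment.
SECTION_RE = re.compile(r"^\s*\[\[?([^\]]+)\]\]?\s*(?:#.*)?$")
# Matches `key = "123"` or `key = '123'`: an integer wrapped in quotes.
QUOTED_INT_RE = re.compile(
    r"""^\s*([A-Za-z0-9_.-]+)\s*=\s*["'](-?\d+)["']\s*(?:#.*)?$"""
)


def find_quoted_integers(toml_text):
    """Return (line_number, dotted_key, value) for each quoted integer found."""
    hits = []
    section = ""
    for lineno, line in enumerate(toml_text.splitlines(), start=1):
        m = SECTION_RE.match(line)
        if m:
            # Remember the current table so keys can be reported with a prefix.
            section = m.group(1).strip()
            continue
        m = QUOTED_INT_RE.match(line)
        if m:
            key = f"{section}.{m.group(1)}" if section else m.group(1)
            hits.append((lineno, key, m.group(2)))
    return hits


if __name__ == "__main__":
    # Made-up keys for illustration only; they are not real TiDB settings.
    sample = 'alpha = 10\nbeta = "20"\n[example]\ngamma = "-5"\n'
    for lineno, key, value in find_quoted_integers(sample):
        print(f"line {lineno}: {key} = \"{value}\" is a string, not an integer")
```

To use it on the real file, read the contents of /ssd/tidb-deploy/tidb-14000/conf/tidb.toml (the path shown in the ssh_command above) and pass the text to `find_quoted_integers`.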

So the current problem is that the tidb node that went down cannot be restarted, and a new tidb node cannot be scaled out into the cluster either.