【Version】v6.1.0 ARM

raft-engine.dir: /raft/raftdb

【Symptom】After adding labels to the TiKV stores with pd-ctl store label, running reload -R tikv reported the following on one TiKV node:

Restart instance xxx130.96:20160 success

Error: failed to evict store leader xxx130.97: metric tikv_raftstore_region_count{type="leader"} not found

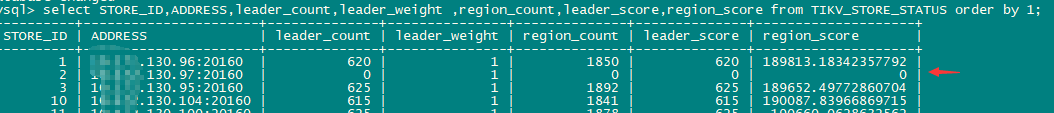
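The error says the leader-eviction step could not find the store's leader count in its metrics. A minimal sketch of that kind of lookup is shown below, assuming the metrics are in Prometheus text exposition format, as served on a TiKV status address's /metrics path (port 20180 by default in TiUP deployments). The helper name leader_count is hypothetical, and this is not TiUP's actual implementation.

```python
import re
from typing import Optional

# Matches one sample line of tikv_raftstore_region_count in Prometheus text
# format, capturing the label set and the value; an optional trailing
# timestamp is ignored.
SAMPLE_RE = re.compile(
    r'^tikv_raftstore_region_count\{([^}]*)\}\s+(\S+)(?:\s+\d+)?$'
)


def leader_count(metrics_text: str) -> Optional[float]:
    """Hypothetical helper: return the leader region count, or None if absent.

    None models the "metric ... not found" case reported in the error above.
    """
    for raw in metrics_text.splitlines():
        line = raw.strip()
        if line.startswith("#"):
            continue  # skip HELP/TYPE comment lines
        m = SAMPLE_RE.match(line)
        if m and 'type="leader"' in m.group(1):
            return float(m.group(2))
    return None


if __name__ == "__main__":
    healthy = (
        '# TYPE tikv_raftstore_region_count gauge\n'
        'tikv_raftstore_region_count{type="region"} 12\n'
        'tikv_raftstore_region_count{type="leader"} 4\n'
    )
    print(leader_count(healthy))  # 4.0
    print(leader_count(""))       # None
```

This only illustrates why such a lookup can come back empty; it says nothing about how TiUP decides whether to skip the eviction for an instance that is already down.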

【检查】

1、 检查tikv上region信息都是0

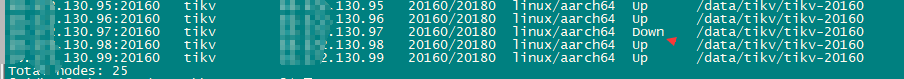

2、检查 tikv状态

3、检查tikv.log发现有报错

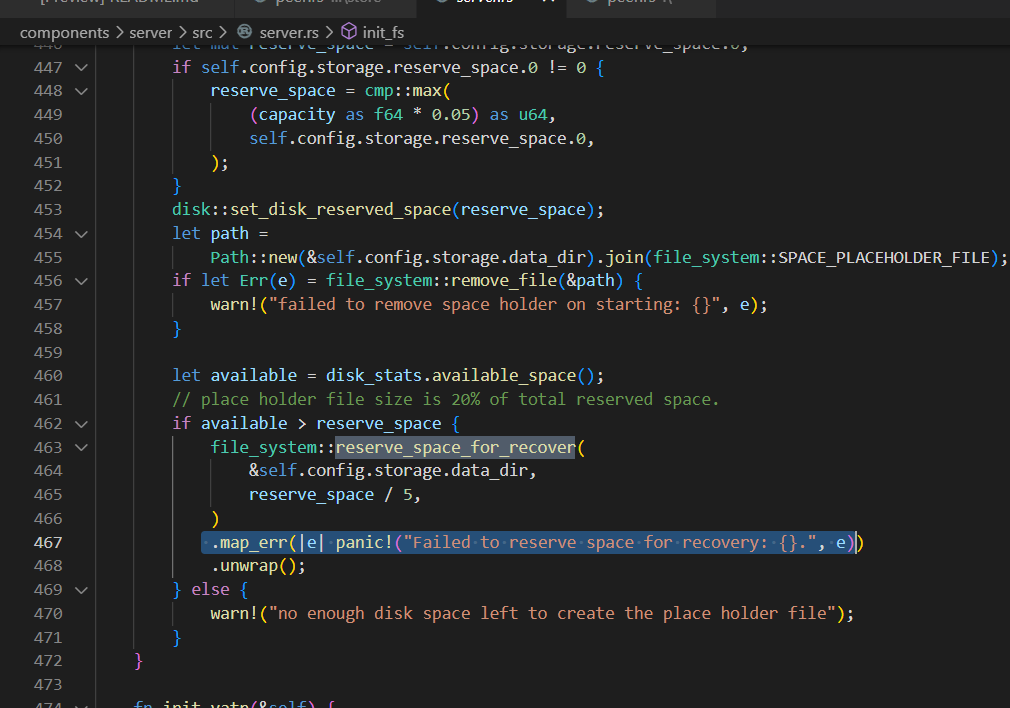

[FATAL] [lib.rs:491] [“Failed to reserve space for recovery: Structure needs cleaning (os error 117).”]

4、检查文件系统、参数、placeholder文件


5. Tried restarting TiKV; it still reports the error above:

[2022/08/17 15:39:27.324 +08:00] [INFO] [config.rs:891] ["data dir"] [mount_fs="FsInfo { tp: "ext4", opts: "rw,noatime,nodelalloc,stripe=64", mnt_dir: "/data", fsname: "/dev/mapper/datavg-lv_data" }"] [data_path=/data/tikv/tikv-20160/raft]

[2022/08/17 15:33:40.836 +08:00] [WARN] [server.rs:457] ["failed to remove space holder on starting: No such file or directory (os error 2)"]

[2022/08/17 15:33:44.895 +08:00] [FATAL] [lib.rs:491] ["Failed to reserve space for recovery: Structure needs cleaning (os error 117)."] [backtrace=" 0: tikv_util::set_panic_hook::{{closure}}

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/components/tikv_util/src/lib.rs:490:18

1: std::panicking::rust_panic_with_hook

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:702:17

2: std::panicking::begin_panic_handler::{{closure}}

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:588:13

3: std::sys_common::backtrace::__rust_end_short_backtrace

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys_common/backtrace.rs:138:18

4: rust_begin_unwind

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:584:5

5: core::panicking::panic_fmt

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/panicking.rs:143:14

6: server::server::TiKvServer::init_fs::{{closure}}

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/components/server/src/server.rs:467:26

core::result::Result<T,E>::map_err

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/result.rs:842:27

server::server::TiKvServer::init_fs

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/components/server/src/server.rs:463:13

7: server::server::run_impl

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/components/server/src/server.rs:124:5

server::server::run_tikv

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/components/server/src/server.rs:163:5

8: tikv_server::main

at /var/lib/docker/jenkins/workspace/build-common@3/go/src/github.com/pingcap/tikv/cmd/tikv-server/src/main.rs:189:5

9: core::ops::function::FnOnce::call_once

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/ops/function.rs:227:5

std::sys_common::backtrace::__rust_begin_short_backtrace

at /root/.rustup/toolchains/nightly-2022-02-14-aarch64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys_common/backtrace.rs:122:18

10: main

11: __libc_start_main

12:

"] [location=components/server/src/server.rs:467] [thread_name=main]
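In the log above, the WARN about removing the space holder (the file was not there) is followed about four seconds later by the FATAL raised from TiKvServer::init_fs while reserving space again. A read-only inspection of the data directory, like the check in step 4, can be sketched as follows. Assumptions: the placeholder file is named space_placeholder_file and sits directly under the directory configured as storage.data-dir; inspect_data_dir is a hypothetical helper.

```python
import os
from typing import Optional

# Assumption: name of TiKV's reserved-space file inside the data directory.
# Verify it against the TiKV version you run before relying on it.
PLACEHOLDER_NAME = "space_placeholder_file"


def inspect_data_dir(data_dir: str) -> dict:
    """Hypothetical read-only check: free space and placeholder file state.

    It never creates, writes, or deletes anything, so it adds no writes to a
    file system that is already reporting errors.
    """
    st = os.statvfs(data_dir)  # POSIX only
    path = os.path.join(data_dir, PLACEHOLDER_NAME)
    size: Optional[int] = os.path.getsize(path) if os.path.isfile(path) else None
    return {
        "free_bytes": st.f_bavail * st.f_frsize,
        "placeholder_path": path,
        "placeholder_bytes": size,  # None means the file is missing
    }
```

Call it with the value of storage.data-dir; a placeholder_bytes of None matches the "No such file or directory" warning. It only looks at sizes and free space, so it cannot tell whether the ext4 metadata itself is damaged.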

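【Questions】

1. The TiKV node was already down, so why does reload still check its leader count through the metric tikv_raftstore_region_count{type="leader"}? Can this state be skipped or handled some other way? (This TiKV may have had a problem ever since deployment; I did not pay much attention at the time.)

2. The space holder file can be deleted in an emergency to free disk space. Here, however, the restart fails with ["Failed to reserve space for recovery: Structure needs cleaning (os error 117)."]. OS error code 117 means "Structure needs cleaning": which structures need to be cleaned? I have never deleted the placeholder file manually.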
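The number in "(os error 117)" is the raw errno the kernel returned to TiKV, and the text before it is the standard message for that code. The mapping can be checked on the host with Python's standard errno and os modules; describe_os_error is a hypothetical helper name. On Linux, including arm64, 117 is EUCLEAN, and ext4 uses this code (internally named EFSCORRUPTED) when it detects inconsistent on-disk metadata, which suggests the "structure" refers to file system metadata rather than TiKV data.

```python
import errno
import os


def describe_os_error(code: int) -> str:
    """Hypothetical helper: turn a raw 'os error N' number into 'NAME: message'."""
    name = errno.errorcode.get(code, "UNKNOWN")
    return f"{name}: {os.strerror(code)}"


if __name__ == "__main__":
    # On glibc-based Linux this prints "EUCLEAN: Structure needs cleaning",
    # the same text TiKV logged.
    print(describe_os_error(117))
```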