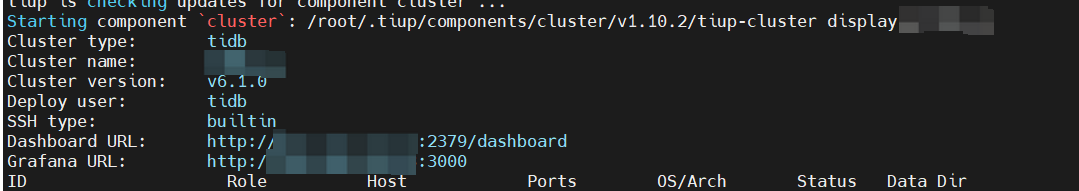

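Upgrading TiDB from 5.3 to 6.1 fails with an error that appears to come from the monitoring component. Could anyone help take a look?

The log is as follows: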

2022-08-08T21:28:49.952+0800 INFO SSHCommand {"host": "192.168.1.3", "port": "22", "cmd": "export LANG=C; PATH=$PATH:/bin:/sbin:/usr/bin:/usr/sbin ss -ltn", "stdout": "State Recv-Q Send-Q Local Address:Port Peer Address:Port \
LISTEN 0 128 :22 : \
LISTEN 0 100 127.0.0.1:25 : \
LISTEN 0 100 :18686 : \
LISTEN 0 40000 192.168.1.3:9093 : \
LISTEN 0 40000 192.168.1.3:9094 : \
LISTEN 0 128 127.0.0.1:1234 : \
LISTEN 0 40000 [::]:12020 [::]: \
LISTEN 0 128 [::]:22 [::]: \
LISTEN 0 40000 [::]:3000 [::]:* \
LISTEN 0 100 [::1]:25 [::]:* \
LISTEN 0 40000 [::]:9090 [::]:* \
LISTEN 0 128 [::]:40324 [::]:* \
LISTEN 0 128 [::]:6123 [::]:* \
LISTEN 0 128 [::]:36429 [::]:* \
LISTEN 0 128 [::]:8081 [::]:* \
", "stderr": ""}

2022-08-08T21:28:49.952+0800 INFO CheckPoint {"host": "192.168.1.3", "port": 22, "user": "tidb", "sudo": false, "cmd": "ss -ltn", "stdout": "State Recv-Q Send-Q Local Address:Port Peer Address:Port \
LISTEN 0 128 :22 : \
LISTEN 0 100 127.0.0.1:25 : \
LISTEN 0 100 :18686 : \
LISTEN 0 40000 192.168.1.3:9093 : \
LISTEN 0 40000 192.168.1.3:9094 : \
LISTEN 0 128 127.0.0.1:1234 : \
LISTEN 0 40000 [::]:12020 [::]: \
LISTEN 0 128 [::]:22 [::]: \
LISTEN 0 40000 [::]:3000 [::]:* \
LISTEN 0 100 [::1]:25 [::]:* \
LISTEN 0 40000 [::]:9090 [::]:* \
LISTEN 0 128 [::]:40324 [::]:* \
LISTEN 0 128 [::]:6123 [::]:* \
LISTEN 0 128 [::]:36429 [::]:* \
LISTEN 0 128 [::]:8081 [::]:* \
", "stderr": "", "hash": "2de5b500c9fae6d418fa200ca150b8d5264d6b19", "func": "github.com/pingcap/tiup/pkg/cluster/executor.(*CheckPointExecutor).Execute", "hit": false}

2022-08-08T21:28:49.952+0800 DEBUG retry error {"error": "operation timed out after 2m0s"}

2022-08-08T21:28:49.952+0800 DEBUG setting replication config: leader-schedule-limit=4

2022-08-08T21:28:49.958+0800 DEBUG setting replication config: region-schedule-limit=2048

2022-08-08T21:28:49.963+0800 DEBUG TaskFinish {"task": "UpgradeCluster", "error": "failed to start: 192.168.1.3 node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be started after 2m0s", "errorVerbose": "timed out waiting for port 9100 to be started after 2m0s\ngithub.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\
\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\
github.com/pingcap/tiup/pkg/cluster/spec.PortStarted\
\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:116\
github.com/pingcap/tiup/pkg/cluster/operation.systemctlMonitor.func1\
\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:335\
golang.org/x/sync/errgroup.(*Group).Go.func1\
\tgolang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\
runtime.goexit\
\truntime/asm_amd64.s:1571\
failed to start: 192.168.1.3 node_exporter-9100.service, please check the instance's log() for more detail."}

2022-08-08T21:28:49.963+0800 INFO Execute command finished {"code": 1, "error": "failed to start: 192.168.1.3 node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be started after 2m0s", "errorVerbose": "timed out waiting for port 9100 to be started after 2m0s\ngithub.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\
\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\
github.com/pingcap/tiup/pkg/cluster/spec.PortStarted\
\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:116\
github.com/pingcap/tiup/pkg/cluster/operation.systemctlMonitor.func1\
\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:335\
golang.org/x/sync/errgroup.(*Group).Go.func1\
\tgolang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\
runtime.goexit\
\truntime/asm_amd64.s:1571\
failed to start: 192.168.1.3 node_exporter-9100.service, please check the instance's log() for more detail."}

Check whether port 9100 is already in use, check the firewall rules, and look at the logs on the 192.168.1.3 node.
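
To make the port check concrete, here is a minimal Python sketch that extracts the listening ports from `ss -ltn` text and tests whether 9100 is among them. The helper name `listening_ports` and the `SAMPLE` constant are made up for illustration; the three data rows are copied from the log in the question, and the parsing assumes the usual five-column `ss -ltn` layout.

```python
def listening_ports(ss_output):
    """Return the set of local ports in LISTEN state found in `ss -ltn` text."""
    ports = set()
    for line in ss_output.splitlines():
        fields = line.split()
        # Data rows: State Recv-Q Send-Q Local-Address:Port Peer-Address:Port
        if len(fields) < 5 or fields[0] != "LISTEN":
            continue  # skips the header row and any blank lines
        # The port is whatever follows the last ':' of the local address.
        port = fields[3].rsplit(":", 1)[-1]
        if port.isdigit():
            ports.add(int(port))
    return ports


# Abbreviated sample: header plus three rows taken from the log above.
SAMPLE = """\
State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      40000  [::]:3000          [::]:*
LISTEN 0      40000  [::]:9090          [::]:*
LISTEN 0      128    [::]:8081          [::]:*
"""

print(9100 in listening_ports(SAMPLE))  # False: nothing listens on 9100
```

Run against the full output in the log, this kind of check would also report that 9100 is absent, which suggests the port is free and the service simply never came up.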

The firewall is turned off.

Copy the missing 9100-related files from another, healthy node to the problem node. This looks like a bug in the tiup upgrade.

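1. Checked the system log with cd /var/log && tail -f -n 1000 messages and found that the error came from node_exporter-9100.service. The service never started on the problem node; it was not a port conflict.

2. Copied monitor-9100 from the deployment directory of a healthy node to the problem node.

3. Re-ran the upgrade, which succeeded.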
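
Before copying, it can help to see exactly which files are absent on the problem node. The sketch below compares two directory trees and lists the files present in the healthy copy but not in the other. The function names are hypothetical, and it assumes both monitor-9100 directories are reachable as local paths (for example after copying them to one machine); it does not assume any particular layout inside monitor-9100.

```python
import os


def relative_files(root):
    """Collect every file under root as a path relative to root."""
    found = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.add(os.path.relpath(full, root))
    return found


def missing_files(healthy_root, problem_root):
    """Files that exist under healthy_root but not under problem_root."""
    return sorted(relative_files(healthy_root) - relative_files(problem_root))
```

If `problem_root` does not exist at all, `os.walk` yields nothing, so every file from the healthy copy is reported as missing. Runtime files such as logs will also show up in the diff and can be ignored.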

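"timed out waiting for port 9100 to be started" indicates that the command to start the systemd service was issued, but the wait for the port then timed out. The specific reason for the timeout is unknown. That said, the problem appears to be solved, and the workaround is correct.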
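
The wait-then-time-out behaviour described here can be illustrated with a small Python sketch. This is not tiup's implementation: judging by the log, tiup polls `ss -ltn` over SSH, whereas this sketch simply retries a TCP connection until a deadline. The function name `wait_for_port` and its parameters are assumptions made for the example.

```python
import socket
import time


def wait_for_port(host, port, timeout=120.0, interval=1.0):
    """Retry a TCP connect until it succeeds or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            pass  # refused or unreachable: nothing is listening yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Calling `wait_for_port("192.168.1.3", 9100)` from a machine that can reach the node would return `False` after about two minutes if node_exporter never starts, which corresponds to the 2m0s timeout in the error message.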

Was the root cause that the binary and its directories were never created, or were they created but the process failed to start?

Marking this as resolved for now. If you run into other problems, please leave a reply.
