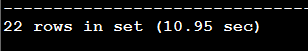

线上环境V5.4.1版本,3个PD,TIDB,TIKV 节点,1个Tiflash节点 ,数据1T,一个sql 有索引情况下走tikv 查询只要0.05秒(explain 看扫描了24多行),走tiflash 要10.91秒(explain 看走了全表扫描),问题如下:

1、为什么走tiflash 会比走tikv 慢?是不是有索引情况下tidb 走tikv 会比tiflash 快?

2、1个tiflash节点有查询时,cpu 和内存占用就升高,能否可以通过增加tiflash 节点数,设置 提高tiflash 副本数来提高查询速度,降低tiflash 压力?

sql 语句:

SELECT * FROM t_order_2017 WHERE 1=1 and m_id =472425658 and g_id in (1074010202,1074020202,1074190202,1074190222,1074010303,1074020303,1074190303,1074190323,1051029902,1051089902,1051039902,1051019902,1051029903,1051089903,1051039903,1051019903,1051019905,1051079905,1051029905,1051089911,1051010707,1051020707,1051190707,10741998223,1074190802,1074190902,1074010802,1074010902,1074020802,1074020902,1074050202,1074050802,1074050902,1074060202,1074060802,1074060902,1074090202,1074090802,1074090902,1074130202,1074070802,1074070902,1074080202,1074220802,1074220902,1074220202,1074080802,1074080902,1074070202,1074130802,1074130902,1074120802,1074120902,10740102022,1074160802,1074160902,10741902022,1074110802,1074110902,1074120202,1074160202,1074110202,1074050303,1074060303,1074090303,1074130303,1074080303,1074220303,1074070303,10741903233,1074120303,1074160303,1074110303,1051179902,1051209902,1051049902,1051069902,1051059902,1051159902,1051119902,1051109902,1051099902,1051199902,1051129902,1051219902,1051079902,1051149902,1051139902,1051189902,1051169902,1051260202,1051380202,1051390202,1051179903,1051209903,1051049903,1051069903,1051059903,1051159903,1051119903,1051109903,1051099903,1051199903,1051129903,1051219903,1051079903,1051149903,1051139903,1051189903,1051169903,1051260303,1051190323,1051380303,1051390303,1051189905,1051049905,1051059905,1051039905,1051149905,1051109905,1051099905,1051089905,1051179905,1051260502,1051119905,1051069905,1051139905,1051129905,1051169905,1051159905,1051380502,1051010607,1051020607,1051190607,1051050707,1051050607,1051060707,1051060607,1051090707,1051090607,1051070707,1051070607,1051110707,1051110607,1051150707,1051150607,1051120707,1051120607,1051100707,1051100607,1051160707,1051160607,1051220707,1051220607,1051080707,1051080607,1051130707,1051130607,1051180707,1051180607,1051240707,1051240607,1051140707,1051140607,1051260707,1051260607,1051380707,1051380607) ORDER BY o_create_time LIMIT 5000 OFFSET 0;

表总数:

57853069

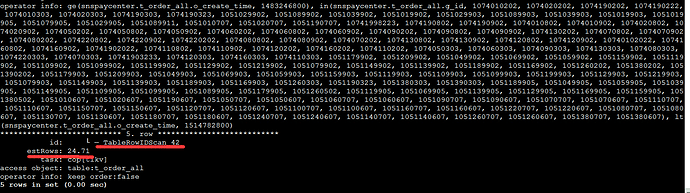

tikv:

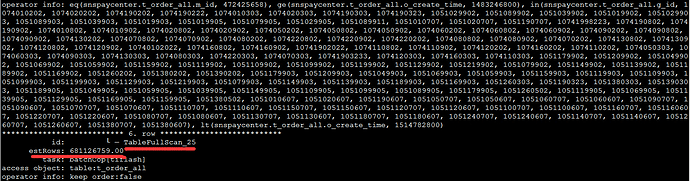

tiflash:

【附件】

请提供各个组件的 version 信息,如 cdc/tikv,可通过执行 cdc version/tikv-server --version 获取。