seiang

2022 年6 月 28 日 02:00

1

【 TiDB 使用环境`】生产

CDC节点日志如下:cdc.log.tar.gz (5.8 MB)

【复现路径】

【问题现象及影响】

【附件】

相关日志、配置文件、Grafana 监控(https://metricstool.pingcap.com/ )

TiUP Cluster Display 信息

TiUP CLuster Edit config 信息

TiDB-Overview 监控

对应模块的 Grafana 监控(如有 BR、TiDB-binlog、TiCDC 等)

对应模块日志(包含问题前后 1 小时日志)

若提问为性能优化、故障排查 类问题,请下载脚本 运行。终端输出的打印结果,请务必全选 并复制粘贴上传。

polars

2022 年6 月 28 日 03:43

2

我们5.3版本,也碰到了类似的问题,突然延迟大增,然后就不同步了,内存占用还特别高,最大到50G

seiang

2022 年6 月 28 日 03:55

3

也是通过CDC同步到MySQL吗?那你们定位到具体原因了吗?最后怎么解决的呢?

dba-kit

2022 年6 月 28 日 03:57

4

我之前遇到过在TiDB上执行一个大事务(insert into select的方式备份表),结果TiCDC就一直延时。

dba-kit

2022 年6 月 28 日 04:00

5

我当时是先找到是哪个表延时的,然后修改changfeed,暂时不同步那个表,优先恢复其他表的同步问题。然后用dumpling来导出那个表,另起一个changefeed来同步,来跳过那个大事务。等业务数据追上了,再找个时间把两个changefedd来合并一下

seiang

2022 年6 月 28 日 04:03

6

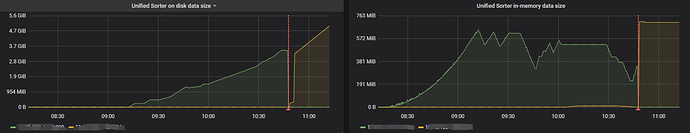

应该不是不事务,我只同步了一张表,这张表就只有insert操作,Unified Sort on disk一直在增长,而且在不断增加,这个定位不到具体的原因

代晓磊_Mars

2022 年6 月 28 日 04:32

7

看看上游tidb的同步表有没有大的添加索引的DDL操作?或者刚进行了DDL操作

代晓磊_Mars

2022 年6 月 28 日 04:34

8

执行下cdc cli changefeed query -s --pd=http://10.0.10.25:2379 --changefeed-id=simple-replication-task看下当前同步任务是否有报错

应该是有大事务 cdc是事务堵住了 tidb的改表结构是online ddl 很快就完成 而mysql不是 这样的同步 数据量大了 mysql就支撑不住。正好证明tidb的优秀

从 6:34 开始,出现大量的这种日志,这个时间 tikv 发生了什么么?

[2022/06/28 06:34:00.826 +08:00] [WARN] [client.go:1543] ["region not receiving resolved event from tikv or resolved ts is not pushing for too long time, try to resolve lock"] [regionID=4149003] [span="[7480000000000004ff415f72800000000

9ff1900410000000000fa, 7480000000000004ff415f728000000009ff1c9a5f0000000000fa)"] [duration=21.35s] [lastEvent=132.861559ms] [resolvedTs=434207252666122299]

[2022/06/28 06:34:00.830 +08:00] [WARN] [client.go:1543] ["region not receiving resolved event from tikv or resolved ts is not pushing for too long time, try to resolve lock"] [regionID=4107597] [span="[7480000000000004ffbf5f72800000000

1ff5cdfe10000000000fa, 7480000000000004ffbf5f728000000001ff607c870000000000fa)"] [duration=21.35s] [lastEvent=136.812613ms] [resolvedTs=434207252666122299]

[2022/06/28 06:34:00.836 +08:00] [WARN] [client.go:1543] ["region not receiving resolved event from tikv or resolved ts is not pushing for too long time, try to resolve lock"] [regionID=4044439] [span="[7480000000000004ffbb5f72800000000

3ff3564880000000000fa, 7480000000000004ffbb5f728000000003ff3902770000000000fa)"] [duration=21.35s] [lastEvent=141.137059ms] [resolvedTs=434207252666122299]

seiang

2022 年6 月 28 日 05:21

11

同步的表今天没有做DDL的操作,同步任务状态也是正常的:ctl: /home/tidb/.tiup/components/ctl/v5.0.3/ctl cdc changefeed query -s --pd=http://10.30.xx.xx:2379 --changefeed-id=xxxs-sync-task

seiang

2022 年6 月 28 日 05:23

12

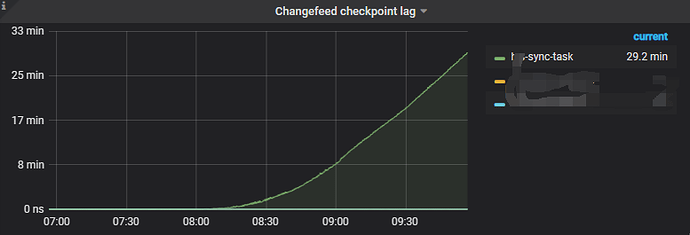

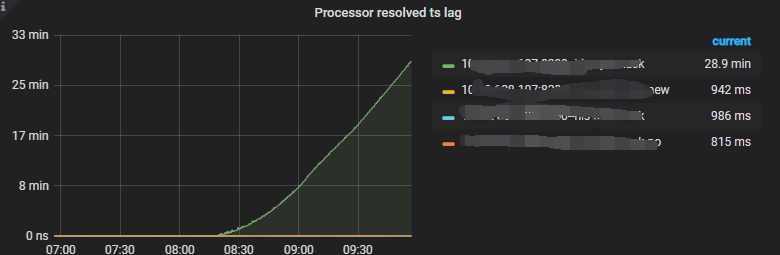

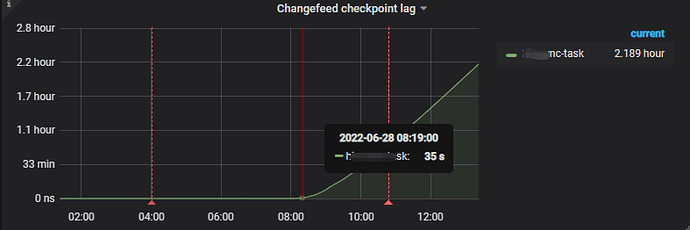

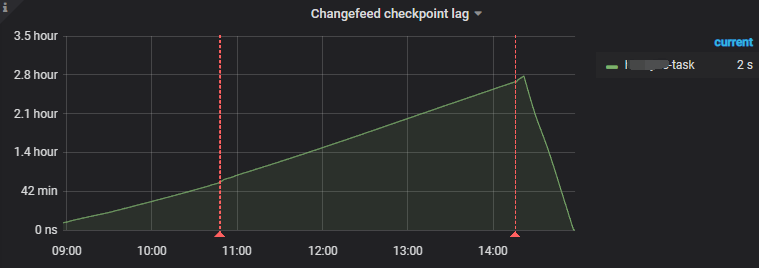

6:34 这个时间,tikv没有任何的异常情况,TiCDC同步延迟从监控看是从早上08:20左右开始逐渐增大的;

而且现在延迟越来越大了

dba-kit

2022 年6 月 28 日 05:35

13

MySQL监控有么?是不是下游MySQL性能不足了?

1 个赞

db_user

2022 年6 月 28 日 05:46

14

建议查看mysql的通用日志,二进制日志,或者对3306端口做一个tcpdump,我之前遇到过是因为开发在进行大量delete ,堵塞了其他操作,通过tcpdump监听到了

seiang:

TiCDC任务同步MySQL已经运行一个多月了

但是从你的log看,昨天前天的qps都是0,从今天凌晨才开始进数据

[2022/06/27 23:31:07.247 +08:00] ... [changefeed=xxx-task] [count=0] [qps=0]

[2022/06/27 23:41:07.247 +08:00] ... [changefeed=xxx-task] [count=0] [qps=0]

[2022/06/27 23:51:07.248 +08:00] ... [changefeed=xxx-task] [count=0] [qps=0]

[2022/06/28 00:01:07.248 +08:00] ... [changefeed=xxx-task] [count=119204] [qps=198]

[2022/06/28 00:11:07.249 +08:00] ... [changefeed=xxx-task] [count=1008319] [qps=1680]

[2022/06/28 00:21:07.272 +08:00] ... [changefeed=xxx-task] [count=932367] [qps=1553]

seiang

2022 年6 月 28 日 06:00

16

数据一直是有同步的,因为是两个CDC,在另一个cdc节点日志上是可以看到之前数据同步的,qps不为0

[2022/06/27 02:21:00.785 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=429212] [qps=715]

[2022/06/27 02:31:00.789 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=404536] [qps=674]

[2022/06/27 02:41:00.791 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=384137] [qps=640]

[2022/06/27 02:51:00.797 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=365038] [qps=608]

[2022/06/27 03:01:00.797 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=350421] [qps=584]

[2022/06/27 03:11:00.837 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=334392] [qps=557]

[2022/06/27 03:21:00.848 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=325291] [qps=542]

[2022/06/27 03:31:00.904 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=312800] [qps=521]

[2022/06/27 03:41:00.904 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=302907] [qps=504]

[2022/06/27 03:51:00.909 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=293315] [qps=488]

[2022/06/27 04:01:00.909 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=288179] [qps=480]

[2022/06/27 04:11:00.910 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=279882] [qps=466]

[2022/06/27 04:21:00.910 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=278376] [qps=463]

[2022/06/27 04:31:00.921 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=275506] [qps=459]

[2022/06/27 04:41:00.921 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=277555] [qps=462]

[2022/06/27 04:51:00.922 +08:00] [INFO] [statistics.go:126] ["sink replication status"] [name=mysql] [changefeed=xxxx-task] [capture=xx.xx.xx.108:8300] [count=279637] [qps=466

下面是另外一个cdc节点的日志:cdc.log.tar.gz (15.8 MB)

seiang

2022 年6 月 28 日 06:59

17

问题已经解决了,下游mysql性能的问题导致的

感谢@ dba-kit @ 代晓磊_Mars @ ShawnYan @ 蒋明tidb狂热爱好者 各位提供的排查思路

dba-kit

2022 年6 月 28 日 07:01

20

哈哈哈,果然。