【Cluster Info】

- Cluster version: v5.1.1

- TiKV nodes: 5

【Problem】

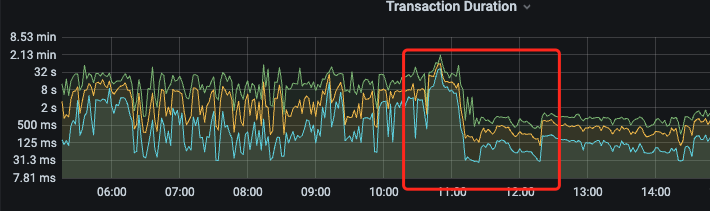

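1. Over the past two days the TiDB slow log has kept growing, and some SQL statements that used to run quickly now take several hundred seconds.

2. One of the TiKV nodes is under very high load; even logging in to the server is extremely sluggish.

3. That node's log is being flooded with entries like the following: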

[2022/06/14 16:22:15.516 +08:00] [INFO] [tracker.rs:255] [slow-query] [perf_stats.internal_delete_skipped_count=0] [perf_stats.internal_key_skipped_count=0] [perf_stats.block_read_byte=48736] [perf_stats.block_read_count=2] [perf_stats.block_cache_hit_count=21] [scan.range.first="Some(start: 7480000000000002485F72800000008FEC1E34 end: 7480000000000002485F72800000008FEC1E35)"] [scan.ranges=2] [scan.total=2] [scan.processed=2] [scan.is_desc=false] [tag=select] [table_id=584] [txn_start_ts=433899413354053884] [total_suspend_time=0ns] [total_process_time=77ms] [handler_build_time=0ns] [wait_time.snapshot=0ns] [wait_time.schedule=2.101s] [wait_time=2.101s] [total_lifetime=2.178s] [remote_host=ipv4:192.168.1.20:54728] [region_id=1335237651]

[2022/06/14 16:22:15.516 +08:00] [INFO] [tracker.rs:255] [slow-query] [perf_stats.internal_delete_skipped_count=0] [perf_stats.internal_key_skipped_count=0] [perf_stats.block_read_byte=0] [perf_stats.block_read_count=0] [perf_stats.block_cache_hit_count=12] [scan.range.first="Some(start: 7480000000000002485F728000000091AA7A6F end: 7480000000000002485F728000000091AA7A70)"] [scan.ranges=1] [scan.total=1] [scan.processed=1] [scan.is_desc=false] [tag=select] [table_id=584] [txn_start_ts=433899409027366962] [total_suspend_time=0ns] [total_process_time=0ns] [handler_build_time=0ns] [wait_time.snapshot=0ns] [wait_time.schedule=2.178s] [wait_time=2.178s] [total_lifetime=2.178s] [remote_host=ipv4:192.168.1.21:60418] [region_id=1336868458]

[2022/06/14 16:22:15.517 +08:00] [INFO] [tracker.rs:255] [slow-query] [perf_stats.internal_delete_skipped_count=0] [perf_stats.internal_key_skipped_count=0] [perf_stats.block_read_byte=0] [perf_stats.block_read_count=0] [perf_stats.block_cache_hit_count=23] [scan.range.first="Some(start: 7480000000000002485F728000000091E59469 end: 7480000000000002485F728000000091E5946A)"] [scan.ranges=2] [scan.total=2] [scan.processed=2] [scan.is_desc=false] [tag=select] [table_id=584] [txn_start_ts=433899404584026210] [total_suspend_time=0ns] [total_process_time=1ms] [handler_build_time=0ns] [wait_time.snapshot=2.178s] [wait_time.schedule=2.691s] [wait_time=4.869s] [total_lifetime=4.87s] [remote_host=ipv4:192.168.1.21:60434] [region_id=1337111165]

[2022/06/14 16:22:15.517 +08:00] [INFO] [tracker.rs:255] [slow-query] [perf_stats.internal_delete_skipped_count=0] [perf_stats.internal_key_skipped_count=0] [perf_stats.block_read_byte=0] [perf_stats.block_read_count=0] [perf_stats.block_cache_hit_count=12] [scan.range.first="Some(start: 7480000000000002485F72800000009460CDF0 end: 7480000000000002485F72800000009460CDF1)"] [scan.ranges=1] [scan.total=1] [scan.processed=1] [scan.is_desc=false] [tag=select] [table_id=584] [txn_start_ts=433899414361997446] [total_suspend_time=0ns] [total_process_time=0ns] [handler_build_time=0ns] [wait_time.snapshot=0ns] [wait_time.schedule=2.175s] [wait_time=2.175s] [total_lifetime=2.175s] [remote_host=ipv4:192.168.1.21:60422] [region_id=1339316288]

[2022/06/14 16:22:15.520 +08:00] [INFO] [tracker.rs:255] [slow-query] [perf_stats.internal_delete_skipped_count=0] [perf_stats.internal_key_skipped_count=2] [perf_stats.block_read_byte=48436] [perf_stats.block_read_count=2] [perf_stats.block_cache_hit_count=22] [scan.range.first="Some(start: 7480000000000002485F7280000000932F25FB end: 7480000000000002485F7280000000932F25FD)"] [scan.ranges=2] [scan.total=4] [scan.processed=3] [scan.is_desc=false] [tag=select] [table_id=584] [txn_start_ts=433899407166144574] [total_suspend_time=0ns] [total_process_time=1ms] [handler_build_time=0ns] [wait_time.snapshot=0ns] [wait_time.schedule=2.163s] [wait_time=2.163s] [total_lifetime=2.164s] [remote_host=ipv4:192.168.1.20:54730] [region_id=1338184470]

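4. Restarting that TiKV node did not help; it was still very slow afterwards.

5. After shutting the node down completely, the TiDB cluster recovered immediately, and the slow-query entries stopped appearing.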
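To make the pattern in the log entries from item 3 easier to see, here is a minimal Python sketch that pulls the `[key=value]` fields out of a slow-query line and computes how much of `total_lifetime` was spent in `wait_time`. The helper names (`parse_fields`, `to_seconds`, `wait_share`) are hypothetical, and the duration parser only assumes the unit suffixes that could plausibly appear in these lines (`ns`, `us`, `µs`, `ms`, `s`). The sample line is the first entry above, abridged to the timing fields.

```python
import re

# Matches [key=value] fields; values may be quoted and contain spaces.
FIELD_RE = re.compile(r'\[([\w.]+)=("[^"]*"|[^\]]*)\]')
DURATION_RE = re.compile(r'^([\d.]+)(ns|us|µs|ms|s)$')
UNIT_SECONDS = {"ns": 1e-9, "us": 1e-6, "µs": 1e-6, "ms": 1e-3, "s": 1.0}


def parse_fields(line):
    """Return the key=value fields of one log line as a dict of strings."""
    return {key: value.strip('"') for key, value in FIELD_RE.findall(line)}


def to_seconds(text):
    """Convert a duration such as '77ms' or '2.101s' to seconds."""
    match = DURATION_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    return float(match.group(1)) * UNIT_SECONDS[match.group(2)]


def wait_share(line):
    """Fraction of total_lifetime that the request spent waiting."""
    fields = parse_fields(line)
    total = to_seconds(fields["total_lifetime"])
    wait = to_seconds(fields["wait_time"])
    return wait / total if total else 0.0


# First entry from the log above, abridged to the timing fields.
sample = (
    "[2022/06/14 16:22:15.516 +08:00] [INFO] [tracker.rs:255] [slow-query] "
    "[total_process_time=77ms] [wait_time.schedule=2.101s] "
    "[wait_time=2.101s] [total_lifetime=2.178s] [region_id=1335237651]"
)

print(f"wait share: {wait_share(sample):.1%}")
```

In all five entries shown, `total_process_time` is at most 77ms while `wait_time` accounts for nearly all of `total_lifetime`, which would be consistent with requests queueing on this node rather than being expensive in themselves.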

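【Questions】

1. What do these log entries mean, and why is only this node flooding its log while the other nodes are not?

2. After the node is stopped (down), its regions should in principle be migrated to the other nodes (the timeout is configured as 1 hour), but after some time the region counts on the other nodes show no upward trend.

3. An SSD is now mounted on this node, and I plan to move the TiKV data directory onto it. What is the most convenient way to do this?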