找到cc

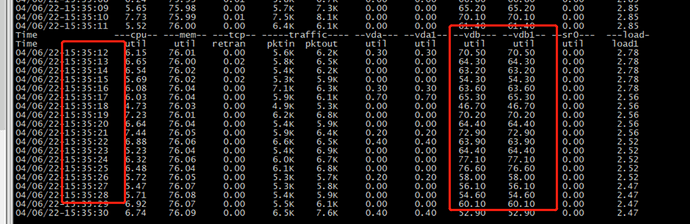

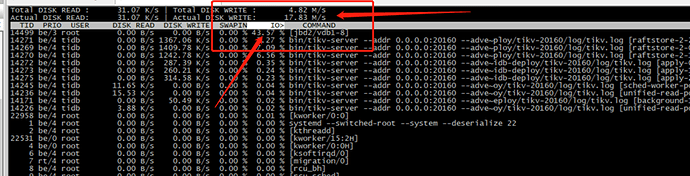

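【TiDB environment】Production

【TiDB version】v5.4.0

【Problem】Disk util on the data disks (ESSD) of all TiKV nodes is abnormally high, while disk read/write throughput is very low.

【Steps to reproduce】

MySQL is replicated to TiDB with DM. The initial sync finished a few days ago, and real-time replication has been running ever since.

【Symptoms and impact】

Disk util on the data disks (ESSD) of all TiKV nodes is abnormally high, yet write throughput is very low and there is no read traffic.

I suspect either a problem with the disks or a misconfigured parameter in the TiDB cluster.

The business has not formally switched over to the TiDB cluster yet, and I would like to find the root cause before it does.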
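For context on the symptom above, here is a minimal Python sketch of how an iostat-style `%util` is commonly derived from two `/proc/diskstats` samples. It assumes the standard Linux field layout; the device name `vdb` and the sample numbers are hypothetical, not taken from this cluster. `%util` only measures the share of wall-clock time during which the device had at least one request in flight, so a stream of small synchronous writes may push it high while throughput stays low, particularly on devices that serve requests in parallel.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

SECTOR_BYTES = 512  # /proc/diskstats always counts 512-byte sectors


@dataclass
class DiskSample:
    reads: int            # reads completed
    writes: int           # writes completed
    sectors_read: int
    sectors_written: int
    io_ticks_ms: int      # ms during which the device had I/O in flight


def parse_diskstats_line(line: str) -> Tuple[str, DiskSample]:
    """Parse one /proc/diskstats line into (device name, counters)."""
    f = line.split()
    sample = DiskSample(
        reads=int(f[3]),
        writes=int(f[7]),
        sectors_read=int(f[5]),
        sectors_written=int(f[9]),
        io_ticks_ms=int(f[12]),
    )
    return f[2], sample


def summarize(prev: DiskSample, cur: DiskSample, interval_s: float) -> Dict[str, float]:
    """Turn two counter snapshots taken interval_s apart into rates."""
    ios = (cur.reads - prev.reads) + (cur.writes - prev.writes)
    sectors = (cur.sectors_read - prev.sectors_read) + (
        cur.sectors_written - prev.sectors_written
    )
    nbytes = sectors * SECTOR_BYTES
    util = (cur.io_ticks_ms - prev.io_ticks_ms) / (interval_s * 1000.0) * 100.0
    return {
        "util_pct": min(util, 100.0),
        "iops": ios / interval_s,
        "mb_per_s": nbytes / interval_s / 1e6,
        "avg_io_kb": (nbytes / ios / 1024.0) if ios else 0.0,
    }


# Hypothetical samples one second apart: many 4 KiB writes, no reads.
_, before = parse_diskstats_line("253 16 vdb 0 0 0 0 1000 0 8000 500 0 100 500")
_, after = parse_diskstats_line("253 16 vdb 0 0 0 0 3000 0 24000 1500 0 1050 1500")
stats = summarize(before, after, interval_s=1.0)
```

Comparing `util_pct` with `avg_io_kb` and `iops` may help tell a disk that is truly saturated from one that is merely kept busy by small writes.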

【Attachments】

Please provide version information for each component, such as cdc/tikv. You can get it by running cdc version/tikv-server --version.

找到cc

Starting component cluster: /home/tidb/.tiup/components/cluster/v1.9.5/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.5/tiup-cluster display big-tidb

Cluster type: tidb

Cluster name: big-tidb

Cluster version: v5.4.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://xxx.104:2379/dashboard

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
|---|---|---|---|---|---|---|---|
| xxx.119:9093 | alertmanager | xxx.119 | 9093/9094 | linux/x86_64 | Up | /data/tidb-data/alertmanager-9093 | /usr/local/tidb-deploy/alertmanager-9093 |
| xxx.90:8300 | cdc | xxx.90 | 8300 | linux/x86_64 | Up | /data/tidb-data/cdc-8300 | /usr/local/tidb-deploy/cdc-8300 |
| xxx.95:8300 | cdc | xxx.95 | 8300 | linux/x86_64 | Up | /data/tidb-data/cdc-8300 | /usr/local/tidb-deploy/cdc-8300 |
| xxx.119:3000 | grafana | xxx.119 | 3000 | linux/x86_64 | Up | - | /usr/local/tidb-deploy/grafana-3000 |
| xxx.104:2379 | pd | xxx.104 | 2379/2380 | linux/x86_64 | Up\|UI | /data/tidb-data/pd-2379 | /usr/local/tidb-deploy/pd-2379 |
| xxx.108:2379 | pd | xxx.108 | 2379/2380 | linux/x86_64 | Up | /data/tidb-data/pd-2379 | /usr/local/tidb-deploy/pd-2379 |
| xxx.119:9090 | prometheus | xxx.119 | 9090/12020 | linux/x86_64 | Up | /data/tidb-data/prometheus-8249 | /usr/local/tidb-deploy/prometheus-8249 |
| xxx.41:4000 | tidb | xxx.41 | 4000/10080 | linux/x86_64 | Up | - | /usr/local/tidb-deploy/tidb-4000 |
| xxx.62:4000 | tidb | xxx.62 | 4000/10080 | linux/x86_64 | Up | - | /usr/local/tidb-deploy/tidb-4000 |
| xxx.129:20160 | tikv | xxx.129 | 20160/20180 | linux/x86_64 | Up | /data/tidb-data/tikv-20160 | /usr/local/tidb-deploy/tikv-20160 |
| xxx.135:20160 | tikv | xxx.135 | 20160/20180 | linux/x86_64 | Up | /data/tidb-data/tikv-20160 | /usr/local/tidb-deploy/tikv-20160 |
| xxx.37:20160 | tikv | xxx.37 | 20160/20180 | linux/x86_64 | Up | /data/tidb-data/tikv-20160 | /usr/local/tidb-deploy/tikv-20160 |

Total nodes: 12

找到cc

- TiUP Cluster Edit Config output

user: tidb
tidb_version: v5.4.0
topology:
  global:
    user: tidb
    ssh_port: 22
    ssh_type: builtin
    deploy_dir: /usr/local/tidb-deploy
    data_dir: /data/tidb-data
    os: linux
  monitored:
    node_exporter_port: 9100
    blackbox_exporter_port: 9115
    deploy_dir: /usr/local/tidb-deploy/monitored-9100
    data_dir: /data/tidb-data/monitored-9100
    log_dir: /data/tidb-data/monitored-9100/log
  server_configs:
    tidb:
      binlog.enable: false
      binlog.ignore-error: false
      log.level: error
      log.slow-threshold: 300
      performance.txn-entry-size-limit: 10485760
    tikv:
      raftstore.apply-max-batch-size: 2048
      raftstore.apply-pool-size: 3
      raftstore.raft-entry-max-size: 10485760
      raftstore.store-max-batch-size: 2048
      raftstore.store-pool-size: 3
      server.grpc-concurrency: 4
    pd:
      schedule.leader-schedule-limit: 4
      schedule.region-schedule-limit: 2048
      schedule.replica-schedule-limit: 64
    tiflash: {}
    tiflash-learner: {}
    pump: {}
    drainer: {}
    cdc: {}
    grafana: {}
  tidb_servers:
  - host: xxx.41
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: /usr/local/tidb-deploy/tidb-4000
    log_dir: /usr/local/tidb-deploy/tidb-4000/log
    config:
      log.slow-query-file: tidb-slow-overwrited.log
    arch: amd64
    os: linux
  - host: xxx.62
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: /usr/local/tidb-deploy/tidb-4000
    log_dir: /usr/local/tidb-deploy/tidb-4000/log
    config:
      log.slow-query-file: tidb-slow-overwrited.log
    arch: amd64
    os: linux
  tikv_servers:
  - host: xxx.37
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /usr/local/tidb-deploy/tikv-20160
    data_dir: /data/tidb-data/tikv-20160
    log_dir: /usr/local/tidb-deploy/tikv-20160/log
    config:
      server.grpc-concurrency: 4
    arch: amd64
    os: linux
  - host: xxx.135
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /usr/local/tidb-deploy/tikv-20160
    data_dir: /data/tidb-data/tikv-20160
    log_dir: /usr/local/tidb-deploy/tikv-20160/log
    config:
      server.grpc-concurrency: 4
    arch: amd64
    os: linux
  - host: xxx.129
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /usr/local/tidb-deploy/tikv-20160
    data_dir: /data/tidb-data/tikv-20160
    log_dir: /usr/local/tidb-deploy/tikv-20160/log
    config:
      server.grpc-concurrency: 4
    arch: amd64
    os: linux
  tiflash_servers: []
  pd_servers:
  - host: xxx.104
    ssh_port: 22
    name: HUOBAN-APPLICATION-PD01
    client_port: 2379
    peer_port: 2380
    deploy_dir: /usr/local/tidb-deploy/pd-2379
    data_dir: /data/tidb-data/pd-2379
    log_dir: /usr/local/tidb-deploy/pd-2379/log
    config:
      schedule.max-merge-region-keys: 200000
      schedule.max-merge-region-size: 20
    arch: amd64
    os: linux
  - host: xxx.40
    ssh_port: 22
    name: HUOBAN-APPLICATION-PD02
    client_port: 2379
    peer_port: 2380
    deploy_dir: /usr/local/tidb-deploy/pd-2379
    data_dir: /data/tidb-data/pd-2379
    log_dir: /usr/local/tidb-deploy/pd-2379/log
    config:
      schedule.max-merge-region-keys: 200000
      schedule.max-merge-region-size: 20
    arch: amd64
    os: linux
  - host: xxx.108
    ssh_port: 22
    name: HUOBAN-APPLICATION-PD03
    client_port: 2379
    peer_port: 2380
    deploy_dir: /usr/local/tidb-deploy/pd-2379
    data_dir: /data/tidb-data/pd-2379
    log_dir: /usr/local/tidb-deploy/pd-2379/log
    config:
      schedule.max-merge-region-keys: 200000
      schedule.max-merge-region-size: 20
    arch: amd64
    os: linux
  cdc_servers:
  - host: xxx.95
    ssh_port: 22
    port: 8300
    deploy_dir: /usr/local/tidb-deploy/cdc-8300
    data_dir: /data/tidb-data/cdc-8300
    log_dir: /usr/local/tidb-deploy/cdc-8300/log
    gc-ttl: 86400
    arch: amd64
    os: linux
  - host: xxx.90
    ssh_port: 22
    port: 8300
    deploy_dir: /usr/local/tidb-deploy/cdc-8300
    data_dir: /data/tidb-data/cdc-8300
    log_dir: /usr/local/tidb-deploy/cdc-8300/log
    gc-ttl: 86400
    arch: amd64
    os: linux
  monitoring_servers:
  - host: xxx.119
    ssh_port: 22
    port: 9090
    ng_port: 12020
    deploy_dir: /usr/local/tidb-deploy/prometheus-8249
    data_dir: /data/tidb-data/prometheus-8249
    log_dir: /usr/local/tidb-deploy/prometheus-8249/log
    external_alertmanagers: []
    arch: amd64
    os: linux
  grafana_servers:
  - host: xxx.119
    ssh_port: 22
    port: 3000
    deploy_dir: /usr/local/tidb-deploy/grafana-3000
    arch: amd64
    os: linux
    username: admin
    password: admin
    anonymous_enable: false
    root_url: ""
    domain: ""
  alertmanager_servers:
  - host: xxx.119
    ssh_port: 22
    web_port: 9093
    cluster_port: 9094
    deploy_dir: /usr/local/tidb-deploy/alertmanager-9093
    data_dir: /data/tidb-data/alertmanager-9093
    log_dir: /usr/local/tidb-deploy/alertmanager-9093/log
    arch: amd64
    os: linux
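
As an aid to reading the `server_configs` entries in the config above: to my understanding, TiUP treats a dotted key such as `raftstore.store-pool-size` as a path into the component's config file (section `raftstore`, item `store-pool-size`). The sketch below is a hypothetical helper, not TiUP's own code, that expands the TiKV overrides from this post into that nested form so the I/O-related `raftstore` settings can be reviewed as one group.

```python
from typing import Any, Dict


def nest_dotted(flat: Dict[str, Any]) -> Dict[str, Any]:
    """Expand keys like 'a.b' into nested dicts: {'a': {'b': value}}."""
    nested: Dict[str, Any] = {}
    for dotted_key, value in flat.items():
        *parents, leaf = dotted_key.split(".")
        node = nested
        for part in parents:
            # Create the intermediate section on first use.
            node = node.setdefault(part, {})
        node[leaf] = value
    return nested


# TiKV overrides copied from server_configs.tikv in this post.
tikv_overrides = {
    "raftstore.apply-max-batch-size": 2048,
    "raftstore.apply-pool-size": 3,
    "raftstore.raft-entry-max-size": 10485760,
    "raftstore.store-max-batch-size": 2048,
    "raftstore.store-pool-size": 3,
    "server.grpc-concurrency": 4,
}

tikv_nested = nest_dotted(tikv_overrides)
```

Here `tikv_nested["raftstore"]` collects the five raftstore overrides in one place, which may be a convenient starting point when comparing them against the documented defaults for v5.4.0.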

1. Check the status of a PD node and whether its logs show anything abnormal.

2. Please also post the TiDB monitoring panels.
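
One way to carry out the first check, sketched in Python under some assumptions: it uses PD's HTTP endpoint `/pd/api/v1/health` on the client port (2379 in this cluster) and assumes the response is a JSON list whose items carry `name` and `health` fields. The helper names are hypothetical, and the masked address `xxx.104:2379` has to be replaced with a real PD address.

```python
import json
from typing import Any, Dict, List
from urllib.request import urlopen


def fetch_pd_health(pd_addr: str, timeout: float = 5.0) -> List[Dict[str, Any]]:
    """Fetch the member health list from one PD node (host:client_port)."""
    url = f"http://{pd_addr}/pd/api/v1/health"
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def unhealthy_members(health: List[Dict[str, Any]]) -> List[str]:
    """Return the names of PD members not reported as healthy."""
    return [m.get("name", "?") for m in health if not m.get("health", False)]
```

For example, `unhealthy_members(fetch_pd_health("xxx.104:2379"))` would return an empty list when every PD member reports healthy. The PD logs sit under the `log_dir` shown in the config (`/usr/local/tidb-deploy/pd-2379/log`) and still need to be read separately.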
