Backup script:

```shell
br backup full --pd "ip:2379" --storage "local://${BACKUP_DIR}" --ratelimit 10 --concurrency 4 --log-level debug --log-file ${BR_LOG} 1>
```

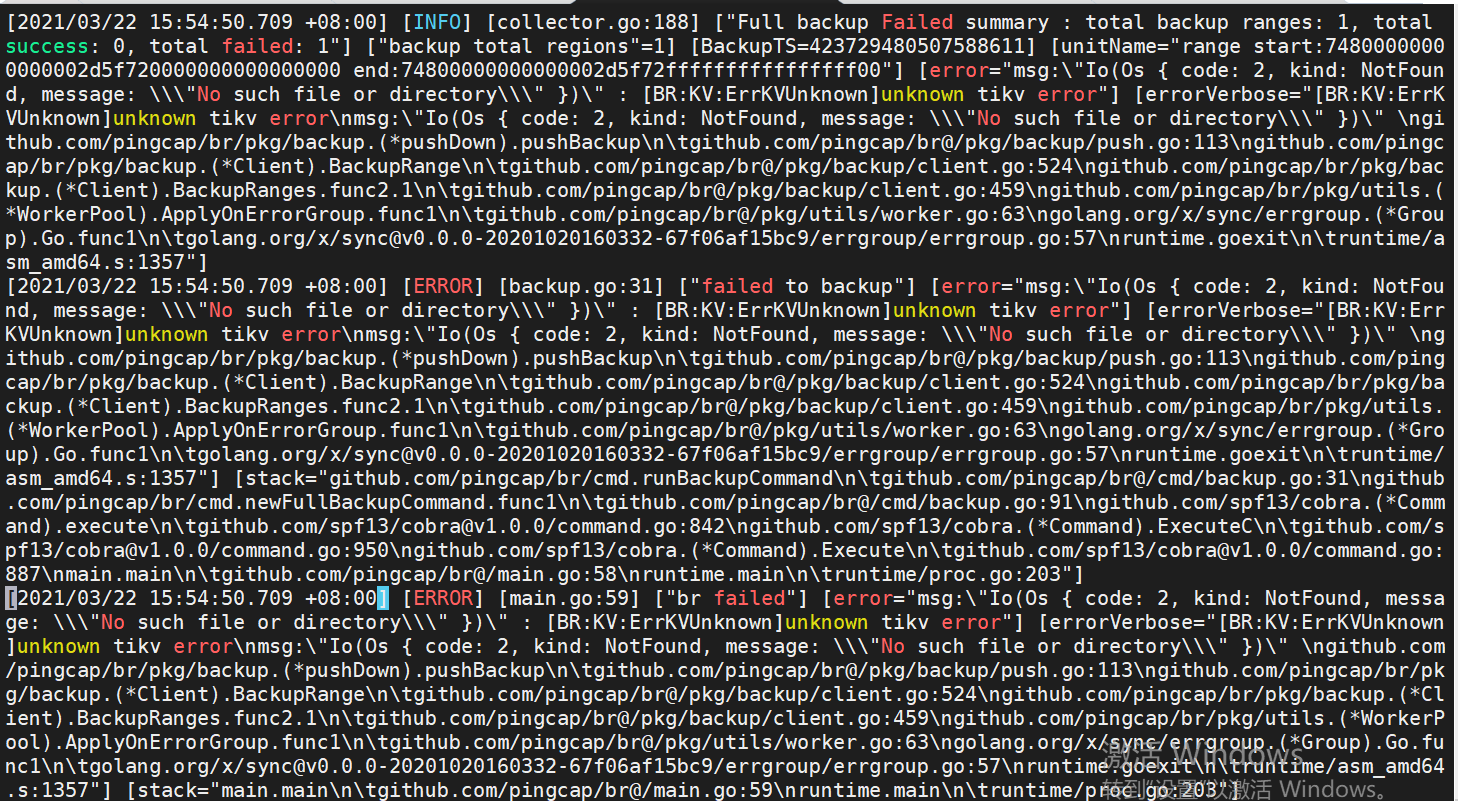

Error:

```
[2021/03/22 11:31:27.740 +08:00] [ERROR] [backup.go:25] ["failed to backup"] [error="msg:"Io(Os { code: 2, kind: NotFound, message: \"No such file or directory\" })" "] [errorVerbose="msg:"Io(Os { code: 2, kind: NotFound, message: \"No such file or directory\" })" 
github.com/pingcap/br/pkg/backup.(*pushDown).pushBackup
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/pkg/backup/push.go:113
github.com/pingcap/br/pkg/backup.(*Client).BackupRange
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/pkg/backup/client.go:501
github.com/pingcap/br/pkg/backup.(*Client).BackupRanges.func2.1
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/pkg/backup/client.go:430
github.com/pingcap/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/pkg/utils/worker.go:62
golang.org/x/sync/errgroup.(*Group).Go.func1
	/go/pkg/mod/golang.org/x/sync@v0.0.0-20200625203802-6e8e738ad208/errgroup/errgroup.go:57
runtime.goexit
	/usr/local/go/src/runtime/asm_amd64.s:1357"] [stack="github.com/pingcap/log.Error
	/go/pkg/mod/github.com/pingcap/log@v0.0.0-20200511115504-543df19646ad/global.go:42
github.com/pingcap/br/cmd.runBackupCommand
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/cmd/backup.go:25
github.com/pingcap/br/cmd.newFullBackupCommand.func1
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/cmd/backup.go:84
github.com/spf13/cobra.(*Command).execute
	/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:842
github.com/spf13/cobra.(*Command).ExecuteC
	/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:950
github.com/spf13/cobra.(*Command).Execute
	/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:887
main.main
	/home/jenkins/agent/workspace/build_br_multi_branch_v4.0.5/go/src/github.com/pingcap/br/main.go:57
runtime.main
	/usr/local/go/src/runtime/proc.go:203"]
```

The error message is `No such file or directory`. Please check whether the directory path and its permissions are correct.
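The `Io(Os { code: 2, kind: NotFound, ... })` text is a Rust I/O error, which suggests it was raised on a TiKV node rather than by br itself (the stack passes through `pushDown.pushBackup`, where br collects the TiKV responses). With `local://` storage, each TiKV node writes its backup files to that path on its own disk, so the directory has to exist on every TiKV node, not only on the machine running br. A minimal sketch of a local check to run on each node as the user that runs tikv-server; `check_backup_dir` is a hypothetical helper name:

```shell
# check_backup_dir DIR (hypothetical helper)
# Prints a one-line diagnosis and returns 0 only if DIR exists, is a
# directory, and is writable and searchable by the current user.
check_backup_dir() {
  local dir=$1
  if [ ! -e "$dir" ]; then
    echo "missing: $dir"
    return 1
  fi
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir"
    return 1
  fi
  # Creating files inside a directory needs both write and execute bits.
  if [ ! -w "$dir" ] || [ ! -x "$dir" ]; then
    echo "not writable: $dir"
    return 1
  fi
  echo "ok: $dir"
}
```

For example, `check_backup_dir "${BACKUP_DIR}"` on each TiKV host. Run it as the service user, not as root: for root the writability test almost always passes, so it would hide a permission problem.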

The directories used in the script all have the required permissions.

Is the path above mounted over NFS, or is it on a local disk? Can every TiKV node access it normally?
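Because a `local://` path is resolved separately on every TiKV host, one way to answer this is to run the same test on all hosts in one go. A sketch, assuming password-less ssh to each host as the tikv-server user; `check_dir_on_hosts` and `SSH_CMD` are hypothetical names, and the directory path must not contain single quotes:

```shell
# Hypothetical helper: runs a directory check on every TiKV host over ssh.
# SSH_CMD defaults to plain `ssh`; log in as the user that runs tikv-server.
SSH_CMD=${SSH_CMD:-ssh}

# check_dir_on_hosts DIR HOST...
# Prints one line per host and returns non-zero if any host fails.
check_dir_on_hosts() {
  local dir=$1 host rc=0
  shift
  for host in "$@"; do
    # The remote shell checks that DIR is a directory and is writable.
    if $SSH_CMD "$host" "test -d '$dir' && test -w '$dir'"; then
      echo "$host: ok"
    else
      echo "$host: missing or not writable"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage, with placeholder host names: `check_dir_on_hosts "${BACKUP_DIR}" tidb@tikv-host-1 tidb@tikv-host-2 tidb@tikv-host-3`.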

Local disk.

One TiKV node is in the Pending Offline state. It has stayed in that state ever since I scaled it in:

xxxx:20161 tikv xxxx 20161/20181 linux/x86_64 Pending Offline /data/tidb-data/tikv-20161 /data/tidb-deploy/tikv-20161

How many TiKV nodes are currently in a normal state?

Three of them are normal.

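During a backup, BR generates each Region's backup file only on that Region's leader. One TiKV node above is still in the Offline state, and while a node is going offline, Region leaders are transferred away from it and Regions are rebalanced. I suggest waiting until this TiKV node becomes Tombstone and then retrying the backup. If the problem persists after that, we can check whether something else is causing it.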
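To confirm whether any store is still Offline before retrying, you can list the stores through pd-ctl, for example `tiup ctl:v4.0.11 pd -u http://<pd-ip>:2379 store` (the PD address is a placeholder). A small sketch that summarizes the `state_name` fields of that JSON without needing jq; `store_state_summary` is a hypothetical helper name:

```shell
# store_state_summary (hypothetical helper)
# Reads `pd-ctl store` JSON on stdin and prints one "STATE=COUNT" line per
# store state, e.g. "Offline=1" and "Up=3". Uses only grep/sed/sort/uniq/awk.
store_state_summary() {
  grep -o '"state_name": *"[^"]*"' \
    | sed 's/.*: *"\([^"]*\)"$/\1/' \
    | sort \
    | uniq -c \
    | awk '{print $2 "=" $1}'
}
```

Usage: `tiup ctl:v4.0.11 pd -u http://<pd-ip>:2379 store | store_state_summary`. Once no store reports `Offline`, the scale-in has finished from PD's point of view.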

This TiKV has been stuck in Pending Offline since last Thursday, when I started the scale-in, and it is still in that state today.

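OK, that is a separate topic. I suggest opening a new thread about the slow offline, so that others who hit a similar problem can find the information more easily.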

1. Please share the current store status by running the `store` command in pd-ctl.

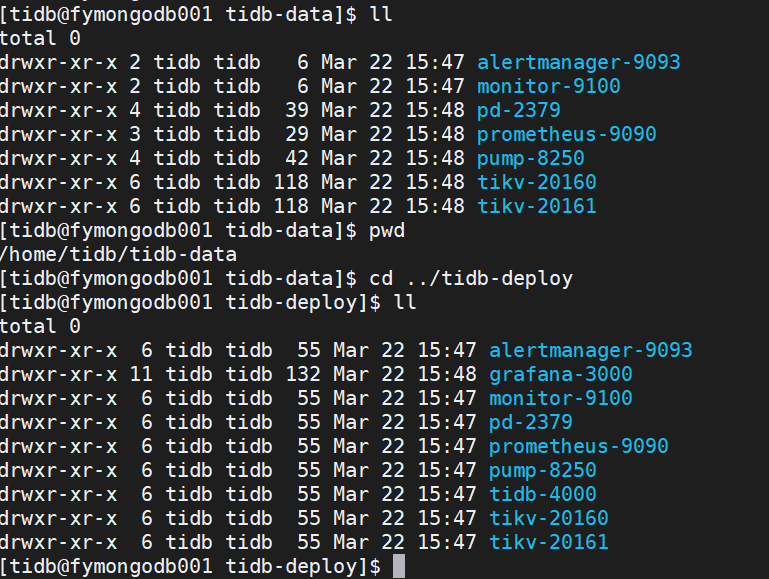

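2. Verify that the directory path is the same on every TiKV node and that the directory permissions and owners are exactly the same. Screenshots would help.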
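Instead of screenshots, a one-line summary per node is easier to compare side by side. A sketch using GNU coreutils `stat`; `describe_dir` is a hypothetical helper name:

```shell
# describe_dir DIR (hypothetical helper)
# Prints "OWNER:GROUP UID:GID MODE PATH" for DIR using GNU stat, so the
# output collected from each TiKV host can be compared line by line.
describe_dir() {
  stat -c '%U:%G %u:%g %a %n' "$1"
}
```

Running `describe_dir "${BACKUP_DIR}"` on each TiKV host prints something like `tidb:tidb 1000:1000 755 <path>`. Both the names and the numeric IDs are shown, because two hosts can have the same user name with different uids.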

```
» store
{
  "count": 4,
  "stores": [
    {
      "store": {
        "id": 5,
        "address": "192.168.8.207:20160",
        "labels": [
          {
            "key": "host",
            "value": "tikv2"
          }
        ],
        "version": "4.0.11",
        "status_address": "0.0.0.0:20180",
        "git_hash": "4ac5e7ea1839d63163e911e2e1164d663f49592b",
        "start_timestamp": 1616399290,
        "deploy_path": "/home/tidb/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1616405110801978018,
        "state_name": "Up"
      },
      "status": {
        "capacity": "1015GiB",
        "available": "990.1GiB",
        "used_size": "32.59MiB",
        "leader_count": 13,
        "leader_weight": 1,
        "leader_score": 13,
        "leader_size": 13,
        "region_count": 17,
        "region_weight": 1,
        "region_score": 17,
        "region_size": 17,
        "start_ts": "2021-03-22T15:48:10+08:00",
        "last_heartbeat_ts": "2021-03-22T17:25:10.801978018+08:00",
        "uptime": "1h37m0.801978018s"
      }
    },
    {
      "store": {
        "id": 1,
        "address": "192.168.8.223:20160",
        "labels": [
          {
            "key": "host",
            "value": "tikv1"
          }
        ],
        "version": "4.0.11",
        "status_address": "0.0.0.0:20180",
        "git_hash": "4ac5e7ea1839d63163e911e2e1164d663f49592b",
        "start_timestamp": 1616399290,
        "deploy_path": "/home/tidb/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1616405110829712078,
        "state_name": "Up"
      },
      "status": {
        "capacity": "1015GiB",
        "available": "498.2GiB",
        "used_size": "31.79MiB",
        "leader_count": 3,
        "leader_weight": 1,
        "leader_score": 3,
        "leader_size": 3,
        "region_count": 17,
        "region_weight": 1,
        "region_score": 17,
        "region_size": 17,
        "start_ts": "2021-03-22T15:48:10+08:00",
        "last_heartbeat_ts": "2021-03-22T17:25:10.829712078+08:00",
        "uptime": "1h37m0.829712078s"
      }
    },
    {
      "store": {
        "id": 4,
        "address": "192.168.8.223:20161",
        "labels": [
          {
            "key": "host",
            "value": "tikv1"
          }
        ],
        "version": "4.0.11",
        "status_address": "0.0.0.0:20181",
        "git_hash": "4ac5e7ea1839d63163e911e2e1164d663f49592b",
        "start_timestamp": 1616399290,
        "deploy_path": "/home/tidb/tidb-deploy/tikv-20161/bin",
        "last_heartbeat": 1616405110833141225,
        "state_name": "Up"
      },
      "status": {
        "capacity": "1015GiB",
        "available": "498.2GiB",
        "used_size": "31.7MiB",
        "leader_count": 3,
        "leader_weight": 1,
        "leader_score": 3,
        "leader_size": 3,
        "region_count": 16,
        "region_weight": 1,
        "region_score": 16,
        "region_size": 16,
        "start_ts": "2021-03-22T15:48:10+08:00",
        "last_heartbeat_ts": "2021-03-22T17:25:10.833141225+08:00",
        "uptime": "1h37m0.833141225s"
      }
    },
    {
      "store": {
        "id": 10,
        "address": "192.168.8.207:20161",
        "labels": [
          {
            "key": "host",
            "value": "tikv2"
          }
        ],
        "version": "4.0.11",
        "status_address": "0.0.0.0:20181",
        "git_hash": "4ac5e7ea1839d63163e911e2e1164d663f49592b",
        "start_timestamp": 1616399290,
        "deploy_path": "/home/tidb/tidb-deploy/tikv-20161/bin",
        "last_heartbeat": 1616405110832021541,
        "state_name": "Up"
      },
      "status": {
        "capacity": "1015GiB",
        "available": "990.1GiB",
        "used_size": "31.74MiB",
        "leader_count": 3,
        "leader_weight": 1,
        "leader_score": 3,
        "leader_size": 3,
        "region_count": 16,
        "region_weight": 1,
        "region_score": 16,
        "region_size": 16,
        "start_ts": "2021-03-22T15:48:10+08:00",
        "last_heartbeat_ts": "2021-03-22T17:25:10.832021541+08:00",
        "uptime": "1h37m0.832021541s"
      }
    }
  ]
}
```

Is your backup path /home/tidb/tidb-data? I recommend backing up to a clean, empty directory.
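A quick way to confirm that a target directory really is empty before starting a new backup, counting hidden files too. `is_empty_dir` is a hypothetical helper name; with `local://` storage the check is worth repeating on every TiKV node, since each node writes into its own copy of the path:

```shell
# is_empty_dir DIR (hypothetical helper)
# Returns 0 only if DIR exists and contains no entries, dotfiles included.
is_empty_dir() {
  [ -d "$1" ] && [ -z "$(ls -A -- "$1")" ]
}
```

For example: `is_empty_dir "${BACKUP_DIR}" || echo "backup dir is not empty"`.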

The backup path is not /home/tidb/tidb-data; it is /home/tidb/backup/br.

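OK, the screenshot above does not show the permissions of the backup path. Please share the permissions of the /home/tidb/backup/br directory on every TiKV node, and check whether the tidb user has the same uid and gid on every TiKV node.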

```
[tidb@localhost backup]$ id tidb
uid=1000(tidb) gid=1000(tidb) groups=1000(tidb)

[tidb@fymongodb001 br]$ id tidb
uid=1002(tidb) gid=1002(tidb) groups=1002(tidb)
```
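The two `id` outputs above differ (1000:1000 versus 1002:1002). When more nodes are involved, the comparison can be scripted by collecting one `id tidb` line per host and checking that all uid:gid pairs are identical. A sketch with hypothetical helper names `uid_gid_from_id` and `all_same_uid_gid`:

```shell
# uid_gid_from_id (hypothetical helper)
# Turns `id` output such as
#   uid=1000(tidb) gid=1000(tidb) groups=1000(tidb)
# into "1000:1000".
uid_gid_from_id() {
  sed -n 's/^uid=\([0-9]*\)([^)]*) gid=\([0-9]*\).*/\1:\2/p'
}

# all_same_uid_gid (hypothetical helper)
# Reads one `id` line per host on stdin and returns 0 only if every line
# carries the same uid:gid pair.
all_same_uid_gid() {
  local n
  n=$(uid_gid_from_id | sort -u | wc -l)
  [ "$n" -eq 1 ]
}
```

Fed the two lines shown above, `all_same_uid_gid` returns non-zero, matching the mismatch visible by eye.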

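I changed the uid and gid so they are the same on both nodes, but the same error is still reported. Which file or directory is it saying doesn't exist?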

Which user runs the BR backup? Also, what versions are the BR tool and the TiDB cluster?

BR runs the backup as the tidb user.

The TiDB version is 4.0.11.

The BR tool was extracted from tidb-community-server-v4.0.11-linux-amd64.
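BR is generally expected to match the cluster version. The Jenkins paths in the stack trace mention `build_br_multi_branch_v4.0.5`; that may only be a build-job name, but comparing the versions explicitly rules it out. A sketch that extracts the first `X.Y.Z` from two version strings and compares them. The helper names are hypothetical, and the idea of feeding it the output of `br -V` is an assumption about that command's output format:

```shell
# normalize_version STRING (hypothetical helper)
# Prints the first X.Y.Z found in STRING, so "v4.0.11", "4.0.11" and
# "Release Version: v4.0.11" all become "4.0.11".
normalize_version() {
  printf '%s\n' "$1" | grep -o '[0-9][0-9]*\.[0-9][0-9]*\.[0-9][0-9]*' | head -n 1
}

# versions_match A B (hypothetical helper)
# Returns 0 if A and B contain the same X.Y.Z version.
versions_match() {
  local a b
  a=$(normalize_version "$1")
  b=$(normalize_version "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, `versions_match "$(br -V 2>&1)" "v4.0.11" || echo "BR and cluster versions differ"`.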