
【TiDB Version】

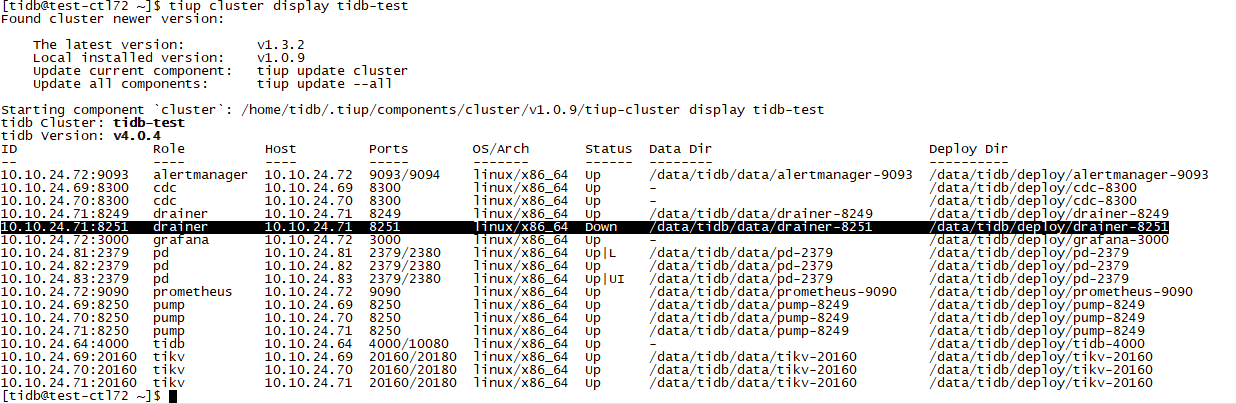

4.0

【Problem Description】

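The cluster originally had one drainer node. I later tried to add a second drainer on the same host with a different port, but the scale-out did not succeed. I then tried to remove the new node with:

tiup cluster scale-in tidb-test --force -N 10.10.24.71:8251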

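The command failed partway through with an error. The node's directories have been deleted, but the node is still listed in TiUP: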

10.10.24.71:8249 drainer 10.10.24.71 8249 linux/x86_64 Up /data/tidb/data/drainer-8249 /data/tidb/deploy/drainer-8249

10.10.24.71:8251 drainer 10.10.24.71 8251 linux/x86_64 Down /data/tidb/data/drainer-8251 /data/tidb/deploy/drainer-8251

Error log:

[tidb@test-ctl72 ~]$ tiup cluster scale-in tidb-test --force -N 10.10.24.71:8251

Found cluster newer version:

The latest version: v1.3.2

Local installed version: v1.0.9

Update current component: tiup update cluster

Update all components: tiup update --all

Starting component cluster: /home/tidb/.tiup/components/cluster/v1.0.9/tiup-cluster scale-in tidb-test --force -N 10.10.24.71:8251

This operation will delete the 10.10.24.71:8251 nodes in tidb-test and all their data.

Do you want to continue? [y/N]: y

Forcing scale in is unsafe and may result in data lost for stateful components.

Do you want to continue? [y/N]: y

Scale-in nodes…

[ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

[Parallel] - UserSSH: user=tidb, host=10.10.24.72

[Parallel] - UserSSH: user=tidb, host=10.10.24.70

[Parallel] - UserSSH: user=tidb, host=10.10.24.64

[Parallel] - UserSSH: user=tidb, host=10.10.24.69

[Parallel] - UserSSH: user=tidb, host=10.10.24.71

[Parallel] - UserSSH: user=tidb, host=10.10.24.71

[Parallel] - UserSSH: user=tidb, host=10.10.24.71

[Parallel] - UserSSH: user=tidb, host=10.10.24.69

[Parallel] - UserSSH: user=tidb, host=10.10.24.71

[Parallel] - UserSSH: user=tidb, host=10.10.24.70

[Parallel] - UserSSH: user=tidb, host=10.10.24.81

[Parallel] - UserSSH: user=tidb, host=10.10.24.82

[Parallel] - UserSSH: user=tidb, host=10.10.24.69

[Parallel] - UserSSH: user=tidb, host=10.10.24.72

[Parallel] - UserSSH: user=tidb, host=10.10.24.72

[Parallel] - UserSSH: user=tidb, host=10.10.24.83

[Parallel] - UserSSH: user=tidb, host=10.10.24.70

[ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[10.10.24.71:8251] Force:true SSHTimeout:5 OptTimeout:120 APITimeout:300 IgnoreConfigCheck:false NativeSSH:false RetainDataRoles:[] RetainDataNodes:[]}

Stopping component drainer

Stopping instance 10.10.24.71

Failed to stop drainer-8251.service: Unit drainer-8251.service not loaded.

Stop drainer 10.10.24.71:8251 success

Destroying component drainer

Destroying instance 10.10.24.71

Deleting paths on 10.10.24.71: /etc/systemd/system/drainer-8251.service /data/tidb/data/drainer-8251 /data/tidb/deploy/drainer-8251/log /data/tidb/deploy/drainer-8251

Destroy 10.10.24.71 success

- Destroy drainer paths: [/data/tidb/data/drainer-8251 /data/tidb/deploy/drainer-8251/log /data/tidb/deploy/drainer-8251 /etc/systemd/system/drainer-8251.service]

panic: runtime error: index out of range [0] with length 0

goroutine 1 [running]:

github.com/pingcap/tiup/pkg/cluster/api.(*BinlogClient).updateStatus(0xc00026f040, 0x145098e, 0x8, 0xc0003a2a10, 0x10, 0x144f905, 0x7, 0x0, 0x0)

github.com/pingcap/tiup@/pkg/cluster/api/binlog.go:146 +0x498

github.com/pingcap/tiup/pkg/cluster/api.(*BinlogClient).UpdateDrainerState(…)

github.com/pingcap/tiup@/pkg/cluster/api/binlog.go:127

github.com/pingcap/tiup/pkg/cluster/operation.ScaleInCluster(0x16758c0, 0xc0004468c0, 0xc0000c2000, 0x0, 0x0, 0x0, 0xc00039c8c0, 0x1, 0x1, 0x1, …)

github.com/pingcap/tiup@/pkg/cluster/operation/scale_in.go:155 +0xc8f

github.com/pingcap/tiup/pkg/cluster/operation.ScaleIn(...)

github.com/pingcap/tiup@/pkg/cluster/operation/scale_in.go:76

github.com/pingcap/tiup/pkg/cluster/task.(*ClusterOperate).Execute(0xc000373900, 0xc0004468c0, 0xc000373900, 0x1)

github.com/pingcap/tiup@/pkg/cluster/task/action.go:70 +0x84e

github.com/pingcap/tiup/pkg/cluster/task.(*Serial).Execute(0xc00023d7e0, 0xc0004468c0, 0xc0003a0f80, 0xc000252198)

github.com/pingcap/tiup@/pkg/cluster/task/task.go:189 +0xc1

github.com/pingcap/tiup/pkg/cluster.(*Manager).ScaleIn(0xc00041ed40, 0x7fff122d7696, 0x9, 0x0, 0x5, 0xc000080100, 0xc00039c8c0, 0x1, 0x1, 0xc000221830, …)

github.com/pingcap/tiup@/pkg/cluster/manager.go:1140 +0x893

github.com/pingcap/tiup/components/cluster/command.newScaleInCmd.func1(0xc000485080, 0xc0002c2f40, 0x1, 0x4, 0x0, 0x0)

github.com/pingcap/tiup@/components/cluster/command/scale_in.go:48 +0x1ab

github.com/spf13/cobra.(*Command).execute(0xc000485080, 0xc0002c2f00, 0x4, 0x4, 0xc000485080, 0xc0002c2f00)

github.com/spf13/cobra@v1.0.0/command.go:842 +0x460

github.com/spf13/cobra.(*Command).ExecuteC(0xc000484000, 0x180d96b, 0x2045000, 0x24)

github.com/spf13/cobra@v1.0.0/command.go:950 +0x349

github.com/spf13/cobra.(*Command).Execute(…)

github.com/spf13/cobra@v1.0.0/command.go:887

github.com/pingcap/tiup/components/cluster/command.Execute()

github.com/pingcap/tiup@/components/cluster/command/root.go:242 +0x468

main.main()

github.com/pingcap/tiup@/components/cluster/main.go:19 +0x20

Error: run /home/tidb/.tiup/components/cluster/v1.0.9/tiup-cluster (wd:/home/tidb/.tiup/data/SPuZ6wU) failed: exit status 2
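The panic message itself can be reproduced in isolation. The sketch below assumes, without having checked the tiup v1.0.9 source, that `updateStatus` looks up the drainer's registration record (kept in PD under the TiDB Binlog key prefix) and reads the first result without checking that anything came back. Because the scale-out failed, 10.10.24.71:8251 probably never registered, so the lookup returns an empty slice and indexing `[0]` panics. The argument lengths in the trace (0x8, 0x10, 0x7) are consistent with the strings "drainers", "10.10.24.71:8251" and a 7-character state such as "offline", but that reading is also an inference. `registry`, `lookup`, `updateStatusUnchecked`, `updateStatusChecked` and `catch` are hypothetical names.

```go
package main

import "fmt"

// registry is a hypothetical stand-in for the binlog node records kept in PD.
// Only the original drainer on port 8249 ever registered successfully.
var registry = map[string][]string{
	"drainers/10.10.24.71:8249": {`{"nodeId":"10.10.24.71:8249","state":"online"}`},
}

// lookup returns every record stored under key; an unknown key yields an empty slice.
func lookup(key string) []string {
	return registry[key]
}

// updateStatusUnchecked mimics the assumed bug: it indexes the first record blindly.
func updateStatusUnchecked(ty, nodeID, state string) string {
	records := lookup(ty + "/" + nodeID)
	first := records[0] // panics when no record was found
	return first + " -> " + state
}

// updateStatusChecked guards the index and reports a missing node as an error.
func updateStatusChecked(ty, nodeID, state string) (string, error) {
	records := lookup(ty + "/" + nodeID)
	if len(records) == 0 {
		return "", fmt.Errorf("no %s registered with node id %s", ty, nodeID)
	}
	return records[0] + " -> " + state, nil
}

// catch runs f and returns the text of any panic it raises ("" if none).
func catch(f func()) (msg string) {
	defer func() {
		if r := recover(); r != nil {
			msg = fmt.Sprint(r)
		}
	}()
	f()
	return ""
}

func main() {
	fmt.Println(catch(func() { updateStatusUnchecked("drainers", "10.10.24.71:8251", "offline") }))

	_, err := updateStatusChecked("drainers", "10.10.24.71:8251", "offline")
	fmt.Println(err)
}
```

The unchecked version prints the same runtime error text as the trace, while the guarded version turns the missing registration into an ordinary error that a forced scale-in could choose to ignore.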
