To help us work efficiently, please provide the following information. A clear problem description gets resolved faster:

【TiDB Version】

TiDB v4.0.10 + DM v2.0.1

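【Problem Description】

We replicate data from MySQL to TiDB with 5 DM tasks. None of them is a shard-merge task, the databases and tables they replicate do not overlap, safe-mode is not explicitly enabled on any of them, and the syncer worker count is 16. Apart from the DM tasks, we are certain that nothing else is writing to the cluster.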

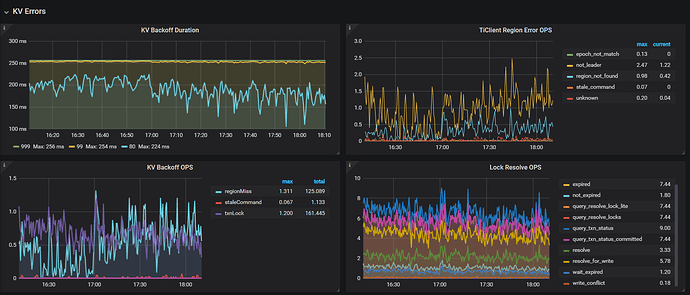

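After replication started, we observed continuous write-write conflicts in the TiDB / KV Errors panel in monitoring, as shown in the screenshot.

Entries from the TiDB log: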

[2021/02/06 18:23:12.073 +08:00] [INFO] [2pc.go:822] ["prewrite encounters lock"] [conn=0] [lock="key: {metaKey=true, key=DB:820, field=TID:862}, primary: {metaKey=true, key=DB:820, field=TID:862}, txnStartTS: 422735247324217368, lockForUpdateTS:0, ttl: 3001, type: Put"]

[2021/02/06 18:23:12.074 +08:00] [WARN] [txn.go:66] [RunInNewTxn] ["retry txn"=422735247324217370] ["original txn"=422735247324217370] [error="[kv:9007]Write conflict, txnStartTS=422735247324217370, conflictStartTS=422735247324217368, conflictCommitTS=422735247324217371, key={metaKey=true, key=DB:820, field=TID:862} primary={metaKey=true, key=DB:820, field=TID:862} [try again later]"]

[2021/02/06 18:23:12.076 +08:00] [INFO] [2pc.go:1336] ["2PC clean up done"] [txnStartTS=422735247324217370]

[2021/02/06 18:23:13.752 +08:00] [INFO] [2pc.go:822] ["prewrite encounters lock"] [conn=0] [lock="key: {metaKey=true, key=DB:820, field=TID:862}, primary: {metaKey=true, key=DB:820, field=TID:862}, txnStartTS: 422735247756755003, lockForUpdateTS:0, ttl: 3001, type: Put"]

[2021/02/06 18:23:13.754 +08:00] [WARN] [txn.go:66] [RunInNewTxn] ["retry txn"=422735247756755004] ["original txn"=422735247756755004] [error="[kv:9007]Write conflict, txnStartTS=422735247756755004, conflictStartTS=422735247756755003, conflictCommitTS=422735247756755010, key={metaKey=true, key=DB:820, field=TID:862} primary={metaKey=true, key=DB:820, field=TID:862} [try again later]"]

[2021/02/06 18:23:13.755 +08:00] [INFO] [2pc.go:1336] ["2PC clean up done"] [txnStartTS=422735247756755004]

[2021/02/06 18:23:13.797 +08:00] [INFO] [2pc.go:822] ["prewrite encounters lock"] [conn=0] [lock="key: {metaKey=true, key=DB:820, field=TID:862}, primary: {metaKey=true, key=DB:820, field=TID:862}, txnStartTS: 422735247769862173, lockForUpdateTS:0, ttl: 3001, type: Put"]

[2021/02/06 18:23:13.799 +08:00] [WARN] [txn.go:66] [RunInNewTxn] ["retry txn"=422735247769862172] ["original txn"=422735247769862172] [error="[kv:9007]Write conflict, txnStartTS=422735247769862172, conflictStartTS=422735247769862173, conflictCommitTS=422735247769862176, key={metaKey=true, key=DB:820, field=TID:862} primary={metaKey=true, key=DB:820, field=TID:862} [try again later]"]

[2021/02/06 18:23:13.800 +08:00] [INFO] [2pc.go:1336] ["2PC clean up done"] [txnStartTS=422735247769862172]

[2021/02/06 18:23:14.209 +08:00] [INFO] [2pc.go:822] ["prewrite encounters lock"] [conn=0] [lock="key: {metaKey=true, key=DB:820, field=TID:862}, primary: {metaKey=true, key=DB:820, field=TID:862}, txnStartTS: 422735247887826949, lockForUpdateTS:0, ttl: 3001, type: Put"]

[2021/02/06 18:23:14.211 +08:00] [WARN] [txn.go:66] [RunInNewTxn] ["retry txn"=422735247887826950] ["original txn"=422735247887826950] [error="[kv:9007]Write conflict, txnStartTS=422735247887826950, conflictStartTS=422735247887826949, conflictCommitTS=422735247887826952, key={metaKey=true, key=DB:820, field=TID:862} primary={metaKey=true, key=DB:820, field=TID:862} [try again later]"]

[2021/02/06 18:23:14.212 +08:00] [INFO] [2pc.go:1336] ["2PC clean up done"] [txnStartTS=422735247887826950]

[2021/02/06 18:23:14.765 +08:00] [INFO] [2pc.go:822] ["prewrite encounters lock"] [conn=0] [lock="key: {metaKey=true, key=DB:820, field=TID:862}, primary: {metaKey=true, key=DB:820, field=TID:862}, txnStartTS: 422735248032006156, lockForUpdateTS:0, ttl: 3001, type: Put"]

[2021/02/06 18:23:14.767 +08:00] [WARN] [txn.go:66] [RunInNewTxn] ["retry txn"=422735248032006158] ["original txn"=422735248032006158] [error="[kv:9007]Write conflict, txnStartTS=422735248032006158, conflictStartTS=422735248032006156, conflictCommitTS=422735248032006162, key={metaKey=true, key=DB:820, field=TID:862} primary={metaKey=true, key=DB:820, field=TID:862} [try again later]"]

[2021/02/06 18:23:14.769 +08:00] [INFO] [2pc.go:1336] ["2PC clean up done"] [txnStartTS=422735248032006158]
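To make the timestamps in these entries easier to compare, here is a minimal Python sketch that extracts the fields of a `Write conflict` error and splits each timestamp into its physical and logical parts. It assumes the usual PD TSO layout (Unix milliseconds in the high bits, an 18-bit logical counter in the low bits). The helper names `decode_tso`, `format_tso`, and `parse_conflict` are made up for illustration and are not part of any TiDB tooling.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumption: low 18 bits = logical counter, remaining high bits = Unix ms.
LOGICAL_BITS = 18

def decode_tso(ts: int) -> tuple[int, int]:
    """Split a TSO value into (physical_ms, logical)."""
    return ts >> LOGICAL_BITS, ts & ((1 << LOGICAL_BITS) - 1)

def format_tso(ts: int, tz: timezone = timezone(timedelta(hours=8))) -> str:
    """Render the physical part of a TSO as wall-clock time (default +08:00)."""
    physical_ms, logical = decode_tso(ts)
    wall = datetime.fromtimestamp(physical_ms // 1000, tz)
    wall += timedelta(milliseconds=physical_ms % 1000)
    return f"{wall.isoformat(timespec='milliseconds')} (logical={logical})"

# Hypothetical pattern for the "[kv:9007]Write conflict" message shown above.
CONFLICT_RE = re.compile(
    r"txnStartTS=(?P<start>\d+), "
    r"conflictStartTS=(?P<conflict_start>\d+), "
    r"conflictCommitTS=(?P<conflict_commit>\d+), "
    r"key=(?P<key>\{[^}]*\})"
)

def parse_conflict(line: str):
    """Return the conflict key and decoded timestamps, or None if no match."""
    m = CONFLICT_RE.search(line)
    if m is None:
        return None
    fields = {"key": m.group("key")}
    for name in ("start", "conflict_start", "conflict_commit"):
        fields[name] = decode_tso(int(m.group(name)))
    return fields

# Error text copied from the first WARN entry in the log above.
SAMPLE = (
    "[kv:9007]Write conflict, txnStartTS=422735247324217370, "
    "conflictStartTS=422735247324217368, "
    "conflictCommitTS=422735247324217371, "
    "key={metaKey=true, key=DB:820, field=TID:862}"
)

if __name__ == "__main__":
    info = parse_conflict(SAMPLE)
    print(info["key"])
    for name in ("start", "conflict_start", "conflict_commit"):
        physical_ms, logical = info[name]
        print(f"{name}: physical_ms={physical_ms} logical={logical}")
    print(format_tso(422735247324217370))
```

Under that assumption, the three timestamps in the first WARN entry share the same physical millisecond and differ only in the logical counter, so the two transactions started almost simultaneously on the same meta key.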

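However, after checking the logs of all TiKV nodes, we found nothing related to write conflicts (almost all entries are about `not leader`), and given the setup no write conflict should occur in the first place. Also, if we stop the busiest of the 5 DM tasks (30 tables, replicating at about 1K QPS), the write conflict errors disappear.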

Questions:

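- The conflict reported in the logs is always the same: key={metaKey=true, key=DB:820, field=TID:862}. Which key does this refer to? (Presumably it has nothing to do with business data.)

- Why do DM tasks use optimistic transactions? Can this be changed?

- What are the possible causes of this problem?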

Many thanks~~~