【 TiDB 使用环境】生产\测试环境\ POC

【 TiDB 版本】V6.0.0

【遇到的问题】

【复现路径】做过哪些操作出现的问题

【问题现象及影响】

节点1登陆

/opt/mysql5730/bin/mysql -h 192.168.1.118 -P4000 -uroot -p

mysql> SELECT @@autocommit;

±-------------+

| @@autocommit |

±-------------+

| 0 |

±-------------+

1 row in set (0.00 sec)

mysql> insert into tb_test values(4,‘name4’);

Query OK, 1 row affected (0.00 sec)

mysql> select * from tb_test;

±-----±------+

| id | name |

±-----±------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

| 4 | name4 |

±-----±------+

4 rows in set (0.00 sec)

mysql> commit;

Query OK, 0 rows affected (0.02 sec)

节点2登陆查看

/opt/mysql5730/bin/mysql -h 192.168.1.134 -P4000 -uroot -p

mysql> select * from tb_test;

±-----±------+

| id | name |

±-----±------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

±-----±------+

3 rows in set (0.00 sec)

为什么节点2登陆进去查不到id=4的记录

关闭事务自动自动提交后,其他会话的事务开始时间就是打开会话的时间,也就是说你只能查到那个时间版本的数据,新插入的自然就看不到了。

有两种方式可以查到最新版本数据:

- 用select for update

- 显式开启一个事务,比如先begin,再select

是一直查不到吗?还是过一会才有

如果不一致,那么看看集群还是好的吗?是不是已经部分断开了?

节点2退出重新登录就有了

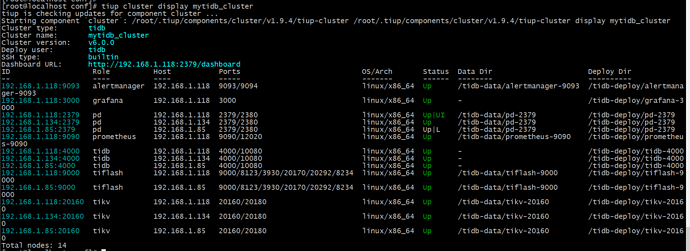

集群状态如下,好像都是正常的

[root@localhost conf]# tiup cluster display mytidb_cluster

tiup is checking updates for component cluster …

Starting component cluster: /root/.tiup/components/cluster/v1.9.4/tiup-cluster /root/.tiup/components/cluster/v1.9.4/tiup-cluster display mytidb_cluster

Cluster type: tidb

Cluster name: mytidb_cluster

Cluster version: v6.0.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.1.118:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

192.168.1.118:9093 alertmanager 192.168.1.118 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.1.118:3000 grafana 192.168.1.118 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.1.118:2379 pd 192.168.1.118 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.1.134:2379 pd 192.168.1.134 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.1.85:2379 pd 192.168.1.85 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.1.118:9090 prometheus 192.168.1.118 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.1.118:4000 tidb 192.168.1.118 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.1.134:4000 tidb 192.168.1.134 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.1.85:4000 tidb 192.168.1.85 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.1.118:9000 tiflash 192.168.1.118 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.1.85:9000 tiflash 192.168.1.85 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.1.118:20160 tikv 192.168.1.118 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.1.134:20160 tikv 192.168.1.134 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.1.85:20160 tikv 192.168.1.85 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

Total nodes: 14

除了autocommit外其他参数调整过什么,比如像事务隔离级别类的

没有的,奇怪的,现在我验证了下 节点1写入,节点2可以查得到数据了

你用那种方式设置的? https://docs.pingcap.com/zh/tidb/stable/transaction-overview#自动提交

再结合 session /global 设置生效范围,看是否能导致原因

SET GLOBAL autocommit = 0;

我就执行了这个命令,然后往表里写数据,当时节点1写入,commit后 ,节点2查询(未开启新会话,原有会话里查询),是查不到节点1写入的数据,但是节点2退出重新登录 就可以查得到了。

看下监控页面 tikv-detail中raft-io ,raft-propose等监控里有啥异常没,还有那个时段的tidb 日志。另外第一次查询是登录第2个tidb server没有执行其他的吧

mysql> select @@autocommit;

+--------------+

| @@autocommit |

+--------------+

| 0 |

+--------------+

1 row in set (0.00 sec)

mysql> SELECT TIDB_PARSE_TSO(@@tidb_current_ts);

+-----------------------------------+

| TIDB_PARSE_TSO(@@tidb_current_ts) |

+-----------------------------------+

| 2022-05-19 10:16:46.981000 |

+-----------------------------------+

1 row in set (0.00 sec)

mysql> begin;

Query OK, 0 rows affected (0.00 sec)

mysql> SELECT TIDB_PARSE_TSO(@@tidb_current_ts);

+-----------------------------------+

| TIDB_PARSE_TSO(@@tidb_current_ts) |

+-----------------------------------+

| 2022-05-19 10:21:15.232000 |

+-----------------------------------+

1 row in set (0.00 sec)

第二个tidb_server没有做任何事情

我看了tidb的日志,节点1有这些报错,不知道是不是跟这些报错有关系

[2022/05/19 10:22:36.392 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/19 10:22:36.392 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

节点2有这些报错

[2022/05/20 10:12:50.551 +08:00] [INFO] [gc_worker.go:1565] ["[gc worker] sent safe point to PD"] [uuid=6036e32a4380002] [“safe point”=433327196165111808]

[2022/05/20 10:13:50.426 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:13:50.427 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

[2022/05/20 10:16:00.428 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:16:00.428 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

[2022/05/20 10:16:50.429 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:16:50.429 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

[2022/05/20 10:18:50.420 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:18:50.421 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

[2022/05/20 10:20:40.425 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:20:40.426 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

[2022/05/20 10:21:10.485 +08:00] [INFO] [gc_worker.go:329] ["[gc worker] starts the whole job"] [uuid=6036e32a4380002] [safePoint=433327353464619008] [concur

rency=3]

[2022/05/20 10:21:10.487 +08:00] [INFO] [gc_worker.go:1039] ["[gc worker] start resolve locks"] [uuid=6036e32a4380002] [safePoint=433327353464619008] [concur

rency=3]

[2022/05/20 10:21:10.487 +08:00] [INFO] [range_task.go:137] [“range task started”] [name=resolve-locks-runner] [startKey=] [endKey=] [concurrency=3]

[2022/05/20 10:21:10.488 +08:00] [INFO] [range_task.go:243] [“range task finished”] [name=resolve-locks-runner] [startKey=] [endKey=] [“cost time”=950.663µs]

[“completed regions”=1]

[2022/05/20 10:21:10.488 +08:00] [INFO] [gc_worker.go:1060] ["[gc worker] finish resolve locks"] [uuid=6036e32a4380002] [safePoint=433327353464619008] [regio

ns=1]

[2022/05/20 10:22:00.429 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: Got too many pings from the client, closing the connection."] [system=gr

pc] [grpc_log=true]

[2022/05/20 10:22:00.429 +08:00] [ERROR] [grpclogger.go:116] ["[transport]transport: loopyWriter.run returning. Err: transport: Connection closing"] [system=

grpc] [grpc_log=true]

tidb好像跟oracle,mysql不一样,在oracle,mysql里面当前会话写入的数据,另外的终端会话不需要重新开启新会话也可以查得到其他会话写入的数据。

问题再现

节点1写入数据

mysql> set global autocommit=0;

Query OK, 0 rows affected (0.03 sec)

mysql> select * from tb_test;

±-----±-------+

| id | name |

±-----±-------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

| 4 | name4 |

| 5 | name5 |

| 6 | name6 |

| 7 | name7 |

| 8 | name8 |

| 9 | name9 |

| 10 | name10 |

| 11 | name11 |

| 12 | name12 |

±-----±-------+

12 rows in set (0.01 sec)

mysql> insert into tb_test values(13,‘name13’);

Query OK, 1 row affected (0.00 sec)

mysql> select * from tb_test;

±-----±-------+

| id | name |

±-----±-------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

| 4 | name4 |

| 5 | name5 |

| 6 | name6 |

| 7 | name7 |

| 8 | name8 |

| 9 | name9 |

| 10 | name10 |

| 11 | name11 |

| 12 | name12 |

| 13 | name13 |

±-----±-------+

13 rows in set (0.01 sec)

mysql> commit;

Query OK, 0 rows affected (0.01 sec)

节点2当前会话查询

mysql> select * from tb_test;

±-----±-------+

| id | name |

±-----±-------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

| 4 | name4 |

| 5 | name5 |

| 6 | name6 |

| 7 | name7 |

| 8 | name8 |

| 9 | name9 |

| 10 | name10 |

| 11 | name11 |

| 12 | name12 |

±-----±-------+

12 rows in set (0.00 sec)

查不到id=13的记录

节点2重新登录就可以查得到

[root@localhost tidb-deploy]# /opt/mysql5730/bin/mysql -h 192.168.1.85 -P4000 -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 425

Server version: 5.7.25-TiDB-v6.0.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright © 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type ‘help;’ or ‘\h’ for help. Type ‘\c’ to clear the current input statement.

mysql> use db_test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from tb_test;

±-----±-------+

| id | name |

±-----±-------+

| 1 | name1 |

| 2 | name2 |

| 3 | name3 |

| 4 | name4 |

| 5 | name5 |

| 6 | name6 |

| 7 | name7 |

| 8 | name8 |

| 9 | name9 |

| 10 | name10 |

| 11 | name11 |

| 12 | name12 |

| 13 | name13 |

±-----±-------+

13 rows in set (0.00 sec)

对oracle不太熟不好判断,mysql行为和tidb是一致的,可以测试下

我也复现了,因为改成global autocommit=0,第二个会话执行SQL后要commit一下,否则还是之前SQL开始的事务时间。改成session autocommit=0就没问题了。 默认是snapshot isolation隔离级别。

那这种情况的话,要是应用程序连接的情况下怎么弄呢,让应用程序在另外的节点执行commit好像有点不现实吧