【TiDB Environment】Production

【TiDB Version】v4.0.15

【Problem Encountered】PD suddenly went down

【Symptoms and Impact】All three PD nodes went down, which in turn took down the entire TiKV cluster.

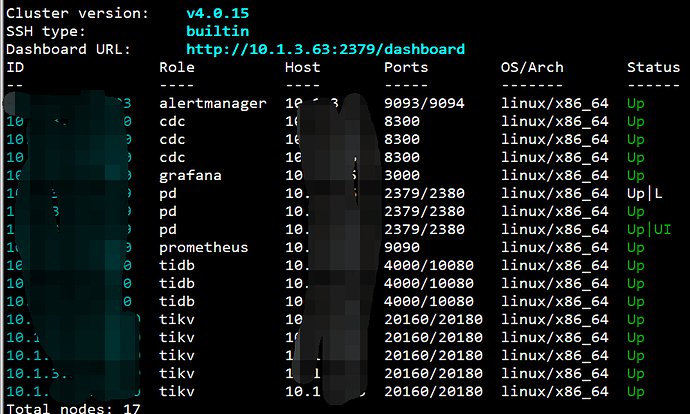

Cluster topology:

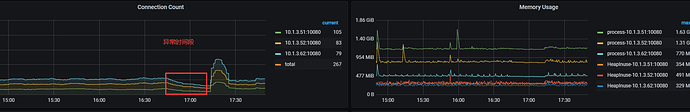

Connections before and after the incident:

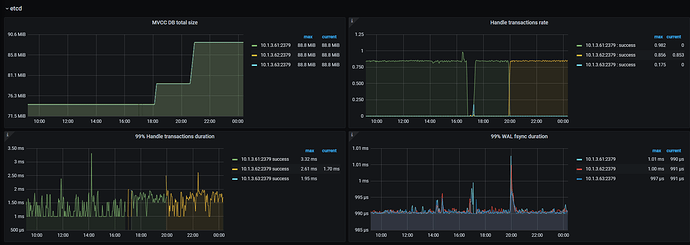

PD log:

```
[2022/04/13 16:44:43.023 +08:00] [INFO] [grpc_service.go:815] ["update service GC safe point"] [service-id=ticdc] [expire-at=1649925883] [safepoint=432495521068220442]
[2022/04/13 16:44:43.464 +08:00] [ERROR] [server.go:1203] ["failed to update timestamp"] [error="[PD:etcd:ErrEtcdTxn]etcd Txn failed"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [server.go:108] ["region syncer has been stopped"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [cluster.go:310] ["metrics are reset"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:103] ["patrol regions has been stopped"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [cluster.go:312] ["background jobs has been stopped"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:652] ["scheduler has been stopped"] [scheduler-name=balance-region-scheduler] [error="context canceled"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:205] ["drive push operator has been stopped"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:652] ["scheduler has been stopped"] [scheduler-name=balance-hot-region-scheduler] [error="context canceled"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [cluster.go:331] ["coordinator is stopping"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:652] ["scheduler has been stopped"] [scheduler-name=balance-leader-scheduler] [error="context canceled"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [coordinator.go:652] ["scheduler has been stopped"] [scheduler-name=label-scheduler] [error="context canceled"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [cluster.go:327] ["coordinator has been stopped"]
[2022/04/13 16:44:43.465 +08:00] [INFO] [cluster.go:360] ["raftcluster is stopped"]
[2022/04/13 16:44:43.466 +08:00] [ERROR] [leader.go:142] ["getting pd leader meets error"] [error="[PD:proto:ErrProtoUnmarshal]proto: Member: wiretype end group for non-group"]
[2022/04/13 16:44:43.468 +08:00] [ERROR] [tso.go:302] ["invalid timestamp"] [timestamp={}]
[2022/04/13 16:44:43.469 +08:00] [ERROR] [tso.go:302] ["invalid timestamp"] [timestamp={}]
[2022/04/13 16:44:43.470 +08:00] [ERROR] [tso.go:302] ["invalid timestamp"] [timestamp={}]
[2022/04/13 16:44:43.470 +08:00] [ERROR] [tso.go:302] ["invalid timestamp"] [timestamp={}]
[2022/04/13 16:44:43.471 +08:00] [ERROR] [tso.go:302] ["invalid timestamp"] [timestamp={}]
```
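For anyone reading the first log line, the `safepoint` and `expire-at` fields can be turned into wall-clock times. The snippet below is only a rough sketch, not part of the original report. It assumes the usual TiDB TSO layout (physical milliseconds in the high bits, an 18-bit logical counter in the low bits) and that `expire-at` is a Unix timestamp in seconds. The helper names `split_tso` and `tso_to_datetime` are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumption: TiDB TSO = (physical milliseconds << 18) | logical counter.
LOGICAL_BITS = 18


def split_tso(tso):
    """Split a TSO into (physical_ms, logical). Hypothetical helper."""
    return tso >> LOGICAL_BITS, tso & ((1 << LOGICAL_BITS) - 1)


def tso_to_datetime(tso, tz):
    """Convert the physical part of a TSO to a timezone-aware datetime."""
    physical_ms, _ = split_tso(tso)
    return datetime.fromtimestamp(physical_ms / 1000, tz)


# The PD log above is written in +08:00.
CST = timezone(timedelta(hours=8))

# Values copied from the "update service GC safe point" log line.
safepoint = 432495521068220442
expire_at = 1649925883  # assumed to be Unix seconds

print("safepoint :", tso_to_datetime(safepoint, CST).isoformat())
print("expire-at :", datetime.fromtimestamp(expire_at, CST).isoformat())
```

Comparing the two printed times with the log timestamp shows how far the TiCDC service safe point lags and when it would expire, which may help when correlating the crash with TiCDC activity.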

Several restart attempts failed. We then shut down TiCDC, and after a short wait another restart succeeded.