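[TiDB environment] Test environment: a single-machine cluster deployed by following the quick start guide in the official documentation.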

[root@node1:0 ~]# tiup cluster deploy liking v6.0.0 ./topo.yaml --user root -p

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.3/tiup-cluster /root/.tiup/components/cluster/v1.9.3/tiup-cluster deploy liking v6.0.0 ./topo.yaml --user root -p

Input SSH password:

+ Detect CPU Arch

- Detecting node 192.168.222.11 ... Done

Please confirm your topology:

Cluster type: tidb

Cluster name: liking

Cluster version: v6.0.0

Role Host Ports OS/Arch Directories

---- ---- ----- ------- -----------

pd 192.168.222.11 2379/2380 linux/x86_64 /u01/tidb/deploy/pd-2379,/u01/tidb/data/pd-2379

tikv 192.168.222.11 20160/20180 linux/x86_64 /u01/tidb/deploy/tikv-20160,/u01/tidb/data/tikv-20160

tikv 192.168.222.11 20161/20181 linux/x86_64 /u01/tidb/deploy/tikv-20161,/u01/tidb/data/tikv-20161

tikv 192.168.222.11 20162/20182 linux/x86_64 /u01/tidb/deploy/tikv-20162,/u01/tidb/data/tikv-20162

tidb 192.168.222.11 4000/10080 linux/x86_64 /u01/tidb/deploy/tidb-4000

tiflash 192.168.222.11 9000/8123/3930/20170/20292/8234 linux/x86_64 /u01/tidb/deploy/tiflash-9000,/u01/tidb/data/tiflash-9000

prometheus 192.168.222.11 9090/12020 linux/x86_64 /u01/tidb/deploy/prometheus-9090,/u01/tidb/data/prometheus-9090

grafana 192.168.222.11 3000 linux/x86_64 /u01/tidb/deploy/grafana-3000

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

+ Generate SSH keys ... Done

+ Download TiDB components

- Download pd:v6.0.0 (linux/amd64) ... Done

- Download tikv:v6.0.0 (linux/amd64) ... Done

- Download tidb:v6.0.0 (linux/amd64) ... Done

- Download tiflash:v6.0.0 (linux/amd64) ... Done

- Download prometheus:v6.0.0 (linux/amd64) ... Done

- Download grafana:v6.0.0 (linux/amd64) ... Done

- Download node_exporter: (linux/amd64) ... Done

- Download blackbox_exporter: (linux/amd64) ... Done

+ Initialize target host environments

- Prepare 192.168.222.11:22 ... Done

+ Deploy TiDB instance

- Copy pd -> 192.168.222.11 ... Done

- Copy tikv -> 192.168.222.11 ... Done

- Copy tikv -> 192.168.222.11 ... Done

- Copy tikv -> 192.168.222.11 ... Done

- Copy tidb -> 192.168.222.11 ... Done

- Copy tiflash -> 192.168.222.11 ... Done

- Copy prometheus -> 192.168.222.11 ... Done

- Copy grafana -> 192.168.222.11 ... Done

- Deploy node_exporter -> 192.168.222.11 ... Done

- Deploy blackbox_exporter -> 192.168.222.11 ... Done

+ Copy certificate to remote host

+ Init instance configs

- Generate config pd -> 192.168.222.11:2379 ... Done

- Generate config tikv -> 192.168.222.11:20160 ... Done

- Generate config tikv -> 192.168.222.11:20161 ... Done

- Generate config tikv -> 192.168.222.11:20162 ... Done

- Generate config tidb -> 192.168.222.11:4000 ... Done

- Generate config tiflash -> 192.168.222.11:9000 ... Done

- Generate config prometheus -> 192.168.222.11:9090 ... Done

- Generate config grafana -> 192.168.222.11:3000 ... Done

+ Init monitor configs

- Generate config node_exporter -> 192.168.222.11 ... Done

- Generate config blackbox_exporter -> 192.168.222.11 ... Done

+ Check status

Enabling component pd

Enabling instance 192.168.222.11:2379

Enable instance 192.168.222.11:2379 success

Enabling component tikv

Enabling instance 192.168.222.11:20162

Enabling instance 192.168.222.11:20160

Enabling instance 192.168.222.11:20161

Enable instance 192.168.222.11:20160 success

Enable instance 192.168.222.11:20162 success

Enable instance 192.168.222.11:20161 success

Enabling component tidb

Enabling instance 192.168.222.11:4000

Enable instance 192.168.222.11:4000 success

Enabling component tiflash

Enabling instance 192.168.222.11:9000

Enable instance 192.168.222.11:9000 success

Enabling component prometheus

Enabling instance 192.168.222.11:9090

Enable instance 192.168.222.11:9090 success

Enabling component grafana

Enabling instance 192.168.222.11:3000

Enable instance 192.168.222.11:3000 success

Enabling component node_exporter

Enabling instance 192.168.222.11

Enable 192.168.222.11 success

Enabling component blackbox_exporter

Enabling instance 192.168.222.11

Enable 192.168.222.11 success

Cluster `liking` deployed successfully, you can start it with command: `tiup cluster start liking --init`

The first start with initialization fails with the following error:

[root@node1:0 ~]# tiup cluster start liking --init

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.3/tiup-cluster /root/.tiup/components/cluster/v1.9.3/tiup-cluster start liking --init

Starting cluster liking...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/liking/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/liking/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.222.11:2379

Start instance 192.168.222.11:2379 success

Starting component tikv

Starting instance 192.168.222.11:20162

Starting instance 192.168.222.11:20160

Starting instance 192.168.222.11:20161

Start instance 192.168.222.11:20160 success

Start instance 192.168.222.11:20162 success

Start instance 192.168.222.11:20161 success

Starting component tidb

Starting instance 192.168.222.11:4000

Start instance 192.168.222.11:4000 success

Starting component tiflash

Starting instance 192.168.222.11:9000

Start instance 192.168.222.11:9000 success

Starting component prometheus

Starting instance 192.168.222.11:9090

Start instance 192.168.222.11:9090 success

Starting component grafana

Starting instance 192.168.222.11:3000

Start instance 192.168.222.11:3000 success

Starting component node_exporter

Starting instance 192.168.222.11

Start 192.168.222.11 success

Starting component blackbox_exporter

Starting instance 192.168.222.11

Start 192.168.222.11 success

+ [ Serial ] - UpdateTopology: cluster=liking

Started cluster `liking` successfully

Failed to set root password of TiDB database to 'G^174F*P!3t2sz&Wd5'

Error: dial tcp 192.168.222.11:4000: connect: connection refused

Verbose debug logs has been written to /root/.tiup/logs/tiup-cluster-debug-2022-04-08-15-01-41.log.

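I checked the log file mentioned at the end, but it contains no other useful information. If anyone knows what is going on, please advise. Thanks!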
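
A "connection refused" error like the one above usually means nothing is accepting TCP connections on port 4000 at that moment. A minimal sketch for confirming this on the tidb-server host, assuming the `ss` tool from iproute2 is available; `listening_on` is a hypothetical helper name, not part of tiup:

```shell
# Hypothetical helper: read `ss -lnt` output on stdin and succeed if some
# socket is listening on TCP port $1 (Local Address:Port is the 4th column).
listening_on() {
  awk -v port="$1" '
    $1 == "LISTEN" {
      n = split($4, parts, ":")          # handles 0.0.0.0:22, *:4000, [::]:22
      if (parts[n] == port) found = 1
    }
    END { exit found ? 0 : 1 }'
}

# Usage on the tidb-server host:
#   ss -lnt | listening_on 4000 && echo "port 4000 is listening" \
#                               || echo "nothing is listening on port 4000"
```

If nothing is listening, the tidb-server process either never started or exited right after starting, which points to the component's own log rather than the tiup log.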

边城元元

April 8, 2022, 07:44

2

If MaxSessions has already been changed to 20 as the documentation says, raise it a bit further; try 50.

So it seems I have run into a rather unusual case? Could anyone familiar with TiDB offer some guidance?

Hi70KG

April 10, 2022, 14:53

8

Could you post that log so we can see whether it contains any errors?

The log file is attached. The final error is the same as the one shown above. Any advice is appreciated, thanks. tiup-cluster-debug-2022-04-08-15-01-41.log (285.3 KB)

2022-04-08T15:01:40.599+0800 INFO Start 192.168.222.11 success

2022-04-08T15:01:40.599+0800 DEBUG TaskFinish {"task": "StartCluster"}

2022-04-08T15:01:40.599+0800 INFO + [ Serial ] - UpdateTopology: cluster=liking

2022-04-08T15:01:40.599+0800 DEBUG TaskBegin {"task": "UpdateTopology: cluster=liking"}

2022-04-08T15:01:40.722+0800 DEBUG TaskFinish {"task": "UpdateTopology: cluster=liking"}

2022-04-08T15:01:40.722+0800 INFO Started cluster `liking` successfully

2022-04-08T15:01:40.746+0800 ERROR Failed to set root password of TiDB database to 'G^174F*P!3t2sz&Wd5'

2022-04-08T15:01:40.746+0800 INFO Execute command finished {"code": 1, "error": "dial tcp 192.168.222.11:4000: connect: connection refused"}

Hi70KG

April 11, 2022, 07:23

10

Friend, just directly

liking

April 11, 2022, 07:36

11

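Starting directly without initialization gives the same error. The key problem right now is that the existing error logs are not clear enough to show what actually went wrong. I would appreciate further guidance, thanks.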

Hi70KG

April 11, 2022, 07:36

12

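As for initialization: earlier I started without initializing and inserted data successfully; I have just run it with initialization and it succeeded: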

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.3/tiup-cluster /root/.tiup/components/cluster/v1.9.3/tiup-cluster start tidb-test --init

Starting cluster tidb-test...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [Parallel] - UserSSH: user=tidb, host=192.168.80.150

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.80.150:2379

Start instance 192.168.80.150:2379 success

Starting component tikv

Starting instance 192.168.80.150:20162

Starting instance 192.168.80.150:20160

Starting instance 192.168.80.150:20161

Start instance 192.168.80.150:20160 success

Start instance 192.168.80.150:20162 success

Start instance 192.168.80.150:20161 success

Starting component tidb

Starting instance 192.168.80.150:4000

Start instance 192.168.80.150:4000 success

Starting component tiflash

Starting instance 192.168.80.150:9000

Start instance 192.168.80.150:9000 success

Starting component prometheus

Starting instance 192.168.80.150:9090

Start instance 192.168.80.150:9090 success

Starting component grafana

Starting instance 192.168.80.150:3000

Start instance 192.168.80.150:3000 success

Starting component node_exporter

Starting instance 192.168.80.150

Start 192.168.80.150 success

Starting component blackbox_exporter

Starting instance 192.168.80.150

Start 192.168.80.150 success

+ [ Serial ] - UpdateTopology: cluster=tidb-test

Started cluster `tidb-test` successfully

The root password of TiDB database has been changed.

The new password is: '9x7*0CJavz%L3&6D^5'.

Copy and record it to somewhere safe, it is only displayed once, and will not be stored.

The generated password can NOT be get and shown again.

Hi70KG

April 11, 2022, 07:40

13

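Without initialization I can insert data normally, but logging in to the dashboard as root requires no password, and Prometheus monitoring also reports errors and shows no data.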

liking

April 11, 2022, 07:41

14

I started the cluster again, as follows:

[root@node1:0 ~]# tiup cluster start liking

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.3/tiup-cluster /root/.tiup/components/cluster/v1.9.3/tiup-cluster start liking

Starting cluster liking...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/liking/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/liking/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [Parallel] - UserSSH: user=tidb, host=192.168.222.11

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.222.11:2379

Start instance 192.168.222.11:2379 success

Starting component tikv

Starting instance 192.168.222.11:20162

Starting instance 192.168.222.11:20160

Starting instance 192.168.222.11:20161

Start instance 192.168.222.11:20160 success

Start instance 192.168.222.11:20162 success

Start instance 192.168.222.11:20161 success

Starting component tidb

Starting instance 192.168.222.11:4000

Start instance 192.168.222.11:4000 success

Starting component tiflash

Starting instance 192.168.222.11:9000

Start instance 192.168.222.11:9000 success

Starting component prometheus

Starting instance 192.168.222.11:9090

Start instance 192.168.222.11:9090 success

Starting component grafana

Starting instance 192.168.222.11:3000

Start instance 192.168.222.11:3000 success

Starting component node_exporter

Starting instance 192.168.222.11

Start 192.168.222.11 success

Starting component blackbox_exporter

Starting instance 192.168.222.11

Start 192.168.222.11 success

+ [ Serial ] - UpdateTopology: cluster=liking

Started cluster `liking` successfully

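This is exactly the problem: the start appears to succeed, but tidb is not actually up; it probably died right after starting. Checking the status shows: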

[root@node1:0 ~]# tiup cluster display liking

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.3/tiup-cluster /root/.tiup/components/cluster/v1.9.3/tiup-cluster display liking

Cluster type: tidb

Cluster name: liking

Cluster version: v6.0.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.222.11:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.222.11:3000 grafana 192.168.222.11 3000 linux/x86_64 Up - /u01/tidb/deploy/grafana-3000

192.168.222.11:2379 pd 192.168.222.11 2379/2380 linux/x86_64 Up|L|UI /u01/tidb/data/pd-2379 /u01/tidb/deploy/pd-2379

192.168.222.11:9090 prometheus 192.168.222.11 9090/12020 linux/x86_64 Up /u01/tidb/data/prometheus-9090 /u01/tidb/deploy/prometheus-9090

192.168.222.11:4000 tidb 192.168.222.11 4000/10080 linux/x86_64 Down - /u01/tidb/deploy/tidb-4000

192.168.222.11:9000 tiflash 192.168.222.11 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /u01/tidb/data/tiflash-9000 /u01/tidb/deploy/tiflash-9000

192.168.222.11:20160 tikv 192.168.222.11 20160/20180 linux/x86_64 Up /u01/tidb/data/tikv-20160 /u01/tidb/deploy/tikv-20160

192.168.222.11:20161 tikv 192.168.222.11 20161/20181 linux/x86_64 Up /u01/tidb/data/tikv-20161 /u01/tidb/deploy/tikv-20161

192.168.222.11:20162 tikv 192.168.222.11 20162/20182 linux/x86_64 Up /u01/tidb/data/tikv-20162 /u01/tidb/deploy/tikv-20162

Total nodes: 8

So where exactly is the problem?
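
The status listing above can be narrowed down to just the instances that are not up. A minimal sketch, assuming the whitespace-separated column order shown in this thread (ID, Role, Host, Ports, OS/Arch, Status, ...); `down_instances` is a hypothetical helper name, not a tiup command:

```shell
# Hypothetical helper: read `tiup cluster display` output on stdin and print
# "ID role" for every instance whose Status column (6th field) starts with Down.
# Header and summary lines are skipped because their first field is not host:port.
down_instances() {
  awk '$1 ~ /^[0-9.]+:[0-9]+$/ && $6 ~ /^Down/ { print $1, $2 }'
}

# Usage on the control machine:
#   tiup cluster display liking | down_instances
```

Applied to the listing above, this would single out the tidb instance on port 4000, which is the component whose own log is worth reading next.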

Hi70KG

April 11, 2022, 07:44

15

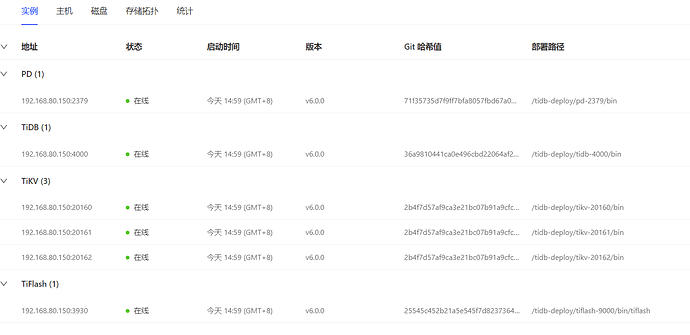

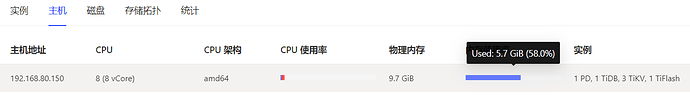

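That is my machine's configuration. Could you be short on memory? Mine is at 6 GB right after installation. With 10 GB of memory the installation runs without lag; you need at least 8 GB.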

[root@kmaster ~]# mysql -h 127.0.0.1 -P 4000 -u root -p'9x7*0CJavz%L3&6D^5'

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 707

Server version: 5.7.25-TiDB-v6.0.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> use test

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [test]> show tables;

+----------------+

| Tables_in_test |

+----------------+

| t1 |

+----------------+

1 row in set (0.00 sec)

MySQL [test]> select * from t1;

+------+

| id |

+------+

| 1 |

| 2 |

| 3 |

+------+

3 rows in set (0.01 sec)

MySQL [test]> set password='123456';

Query OK, 0 rows affected (0.03 sec)

Min_Chen

April 11, 2022, 07:47

16

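Go straight to the server where tidb-server runs and get the tidb-server log. The tiup log is basically useless here; all it can show is that the connection was refused. Read the tidb-server log to find out why it did not start or why it is not listening on the port, then fix it according to the specific error.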

Min_Chen

April 11, 2022, 07:52

17

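If it dies right after starting, the first step is still the tidb-server log: find the error and treat the actual cause. Stop reading the tiup log; it does not help, because it only sees the symptom from an observer's point of view.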
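
A minimal sketch of that check. It assumes tiup's default layout, where the component log is `log/tidb.log` under the deploy directory (`/u01/tidb/deploy/tidb-4000` in this thread); that path is an assumption and depends on the topology file. `last_fatal` is a hypothetical helper name:

```shell
# Hypothetical helper: print the most recent [FATAL] line of the given log file.
# grep -F treats the pattern as a fixed string, so the brackets are literal.
last_fatal() {
  grep -F '[FATAL]' "$1" | tail -n 1
}

# Usage on the tidb-server host (path assumed from the deploy directory above):
#   last_fatal /u01/tidb/deploy/tidb-4000/log/tidb.log
```

If no FATAL line turns up, the same file can be read from the end with `tail` to see the last thing tidb-server logged before exiting.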

liking

April 11, 2022, 07:53

18

Thanks for the tip; it simply had not occurred to me to look at this log. Here is what I found:

[2022/04/11 15:52:09.114 +08:00] [FATAL] [main.go:691] ["failed to create the server"] [error="failed to cleanup stale Unix socket file /tmp/tidb-4000.sock: dial unix /tmp/tidb-4000.sock: connect: permission denied"] [stack="main.createServer\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:691\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:205\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"] [stack="main.createServer\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:691\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:205\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"]

Min_Chen

April 11, 2022, 07:56

19

Looks like insufficient permissions. tidb was deployed as the tidb user, right?

Hi70KG

April 11, 2022, 07:58

20

I just checked on my finished deployment; this socket file is indeed owned by the tidb user:

[root@kmaster log]# ll /tmp/tidb-4000.sock

srwxr-xr-x 1 tidb tidb 0 Apr 11 14:59 /tmp/tidb-4000.sock
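
The same comparison can be scripted on the machine that fails. The FATAL line earlier in this thread says tidb-server could not clean up `/tmp/tidb-4000.sock` because of a permission error, so one plausible thing to check is whether that file belongs to the account running tidb-server (the deploy user `tidb` here). This is only a sketch under that assumption; `socket_owned_by` is a hypothetical helper name:

```shell
# Hypothetical helper: succeed if the file at path $1 is owned by user $2.
# The owner is taken from the third column of `ls -ld`; a missing file yields
# an empty owner and therefore a failure.
socket_owned_by() {
  owner=$(ls -ld -- "$1" 2>/dev/null | awk '{ print $3 }')
  [ -n "$owner" ] && [ "$owner" = "$2" ]
}

# Usage on the tidb-server host:
#   socket_owned_by /tmp/tidb-4000.sock tidb \
#     || ls -l /tmp/tidb-4000.sock   # show who actually owns it
```

If the socket turns out to belong to a different user, that would be consistent with the "permission denied" message, though the thread itself does not confirm the cause.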
