Course: [TiDB 4.0 PCTA Study Notes] Day 5, 2.3.1~2.3.2

Study time: 180 min

Takeaways:

- Deploying TiDB with TiUP

- Deploying TiDB on k8s

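2.3.1 Deploying TiDB with TiUP

What problems TiUP solves

* Obtaining the components (TiDB, TiKV, PD) conveniently

* Placing each component in the right location on each machine

* Configuring startup parameters so that the components can connect to one another

* Finally, starting them in a fixed order: PD ==> TiKV ==> TiDB

TiUP, introduced with TiDB 4.0, automates all of the steps above.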
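To make the ordering constraint concrete, here is a minimal sketch of the manual start sequence that TiUP takes over. `start_component` is a hypothetical stand-in that only prints a message; a real deployment would launch the PD, TiKV, and TiDB server processes on their own hosts.

```shell
#!/bin/sh
# Start order taken from the notes: PD first, then TiKV, then TiDB.
START_ORDER="pd tikv tidb"

# Hypothetical placeholder: a real script would launch the component here.
start_component() {
  echo "starting $1"
}

# Walk the components in order so each one finds its dependency already up.
start_cluster() {
  for component in $START_ORDER; do
    start_component "$component"
  done
}

start_cluster
```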

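Core ideas of TiUP

- Website: https://tiup.io

- It works like a package manager; every module is a component

- List all supported components:

tiup list

- Custom TiUP repositories are supported, including a local offline repository; see the documentation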

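Demo

- Start a local TiDB cluster with a specific version:

tiup playground v4.0.0

- Connect to TiDB:

mysql --host 127.0.0.1 --port 4000 -u root

- Show all databases:

show databases;

- Create a database:

create database testDb;

- Show the nodes of the playground cluster:

tiup playground display

- Another way to create a cluster; the latest version is used by default:

tiup playground --db 2 --pd 3 --kv 4

- Pressing Ctrl+C in the terminal that shows the startup success message shuts the cluster down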

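Scaling out and in with TiUP

- Scale out by adding one stateless TiDB node:

tiup playground scale-out --db 1

- Scale in by removing the TiDB node that was just created:

tiup playground scale-in --pid xxxxx

- Start TiDB from a binary you compiled yourself:

tiup playground scale-out --db 1 --db.binpath /tmp/test/tidb-server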
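The `--pid` value for scale-in comes from the playground's display output. The sketch below shows one way to pick it out with awk. It assumes each output row has the form `<pid> <role> <uptime>`; the sample text and its values are illustrative, not captured from a real run.

```shell
#!/bin/sh
# Print the PID of the first row whose second column equals the given role.
# Assumption: rows look like "<pid> <role> <uptime>".
first_pid_for_role() {
  awk -v role="$1" '$2 == role { print $1; exit }'
}

# Illustrative stand-in for the output of `tiup playground display`.
sample_display='Pid    Role  Uptime
---    ----  ------
84518  pd    35m22s
84519  tikv  35m22s
84520  tidb  35m22s
84521  tidb  1m3s'

pid=$(printf '%s\n' "$sample_display" | first_pid_for_role tidb)

# Show the scale-in command that would remove that instance.
echo "tiup playground scale-in --pid $pid"
```

Against a live playground, the same function could read from `tiup playground display | first_pid_for_role tidb`, provided the column layout matches the assumption above.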

Deploying in production

Prepare a topology file in YAML format (the appendix reproduces the full official example).
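A minimal topology file might look like the sketch below, written out with a heredoc so it can be used directly with the commands in this section. Only 172.16.5.140 appears in these notes; the other host addresses and the single-PD layout are assumptions for illustration, and a real production cluster would normally run three PD nodes.

```shell
#!/bin/sh
# Path matches the file name used by the check/deploy commands in these notes.
TOPOLOGY_FILE="${TOPOLOGY_FILE:-/tmp/youconfigfile.yaml}"

# Write a small topology: one PD, one TiDB, three TiKV, plus monitoring.
# Host addresses other than 172.16.5.140 are hypothetical.
cat > "$TOPOLOGY_FILE" <<'EOF'
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"

pd_servers:
  - host: 172.16.5.140

tidb_servers:
  - host: 172.16.5.140

tikv_servers:
  - host: 172.16.5.141
  - host: 172.16.5.142
  - host: 172.16.5.143

monitoring_servers:
  - host: 172.16.5.140

grafana_servers:
  - host: 172.16.5.140
EOF

echo "wrote $TOPOLOGY_FILE"
```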

- First check the machine environment:

tiup cluster check /tmp/youconfigfile.yaml -u root

- Deploy the TiDB cluster:

tiup cluster deploy mytest-cluster v4.0.0 /tmp/youconfigfile.yaml -u root

- Start the cluster:

tiup cluster start mytest-cluster

- View the cluster topology:

tiup cluster display mytest-cluster

- Connect with the MySQL client:

mysql -u root -h 172.16.5.140 -P 4000

- To scale out through a configuration file, first prepare a YAML file that lists the nodes to add
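A scale-out file only needs the new instances, in the same format as the deployment topology. The sketch below assumes a single TiDB node is being added on 172.16.5.134, the address that the scale-in command in these notes later removes.

```shell
#!/bin/sh
# Path matches the file name used by the scale-out command in these notes.
SCALE_FILE="${SCALE_FILE:-/tmp/myscaleconfigfile.yml}"

# Describe only the node to add: one TiDB instance on the default port 4000.
cat > "$SCALE_FILE" <<'EOF'
tidb_servers:
  - host: 172.16.5.134
EOF

echo "wrote $SCALE_FILE"
```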

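- Scale out using the configuration file:

tiup cluster scale-out mytest-cluster /tmp/myscaleconfigfile.yml

- Scale in the cluster:

tiup cluster scale-in mytest-cluster -N 172.16.5.134:4000

- Upgrade the TiDB cluster; the official position is that applications are not affected during the upgrade:

tiup cluster upgrade mytest-cluster v4.0.5

- Destroy the cluster. This is irreversible, so think it through first:

tiup cluster destroy mytest-cluster

A detailed example of the cluster configuration file: https://github.com/pingcap/tiup/blob/master/examples/topology.example.yaml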

2.3.2 Deploying TiDB on k8s

**Advantage:** simpler management

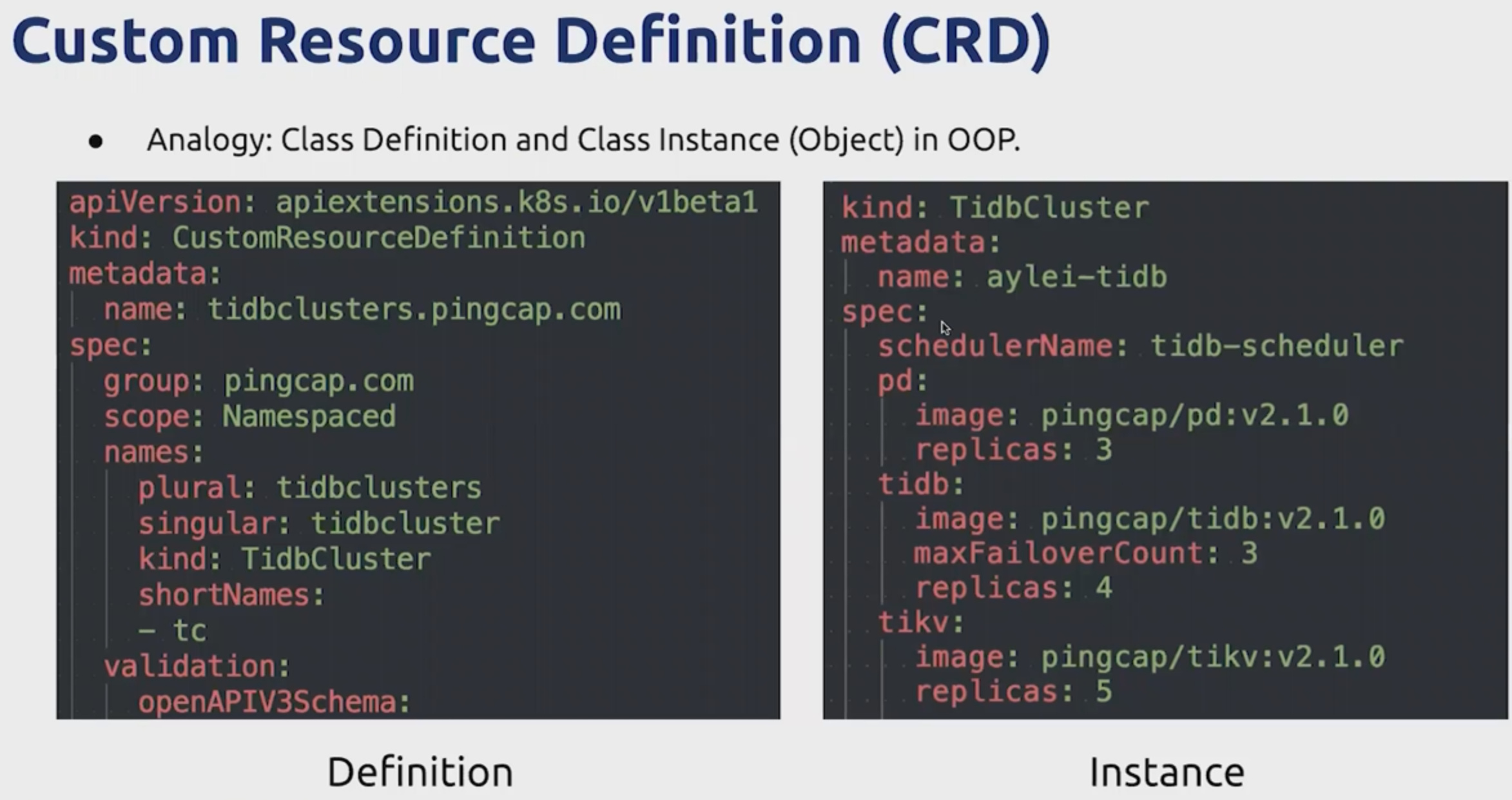

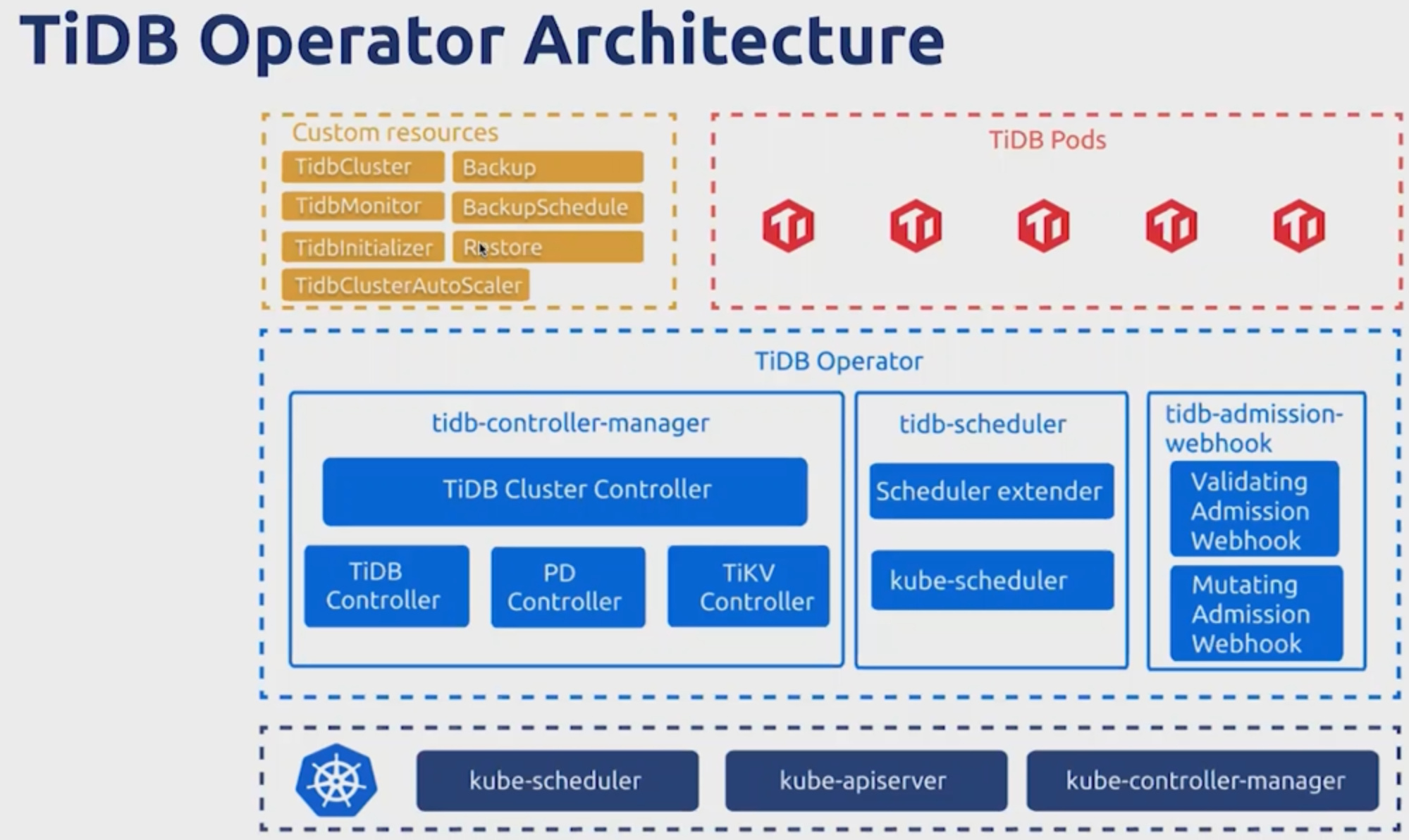

TiDB Operator

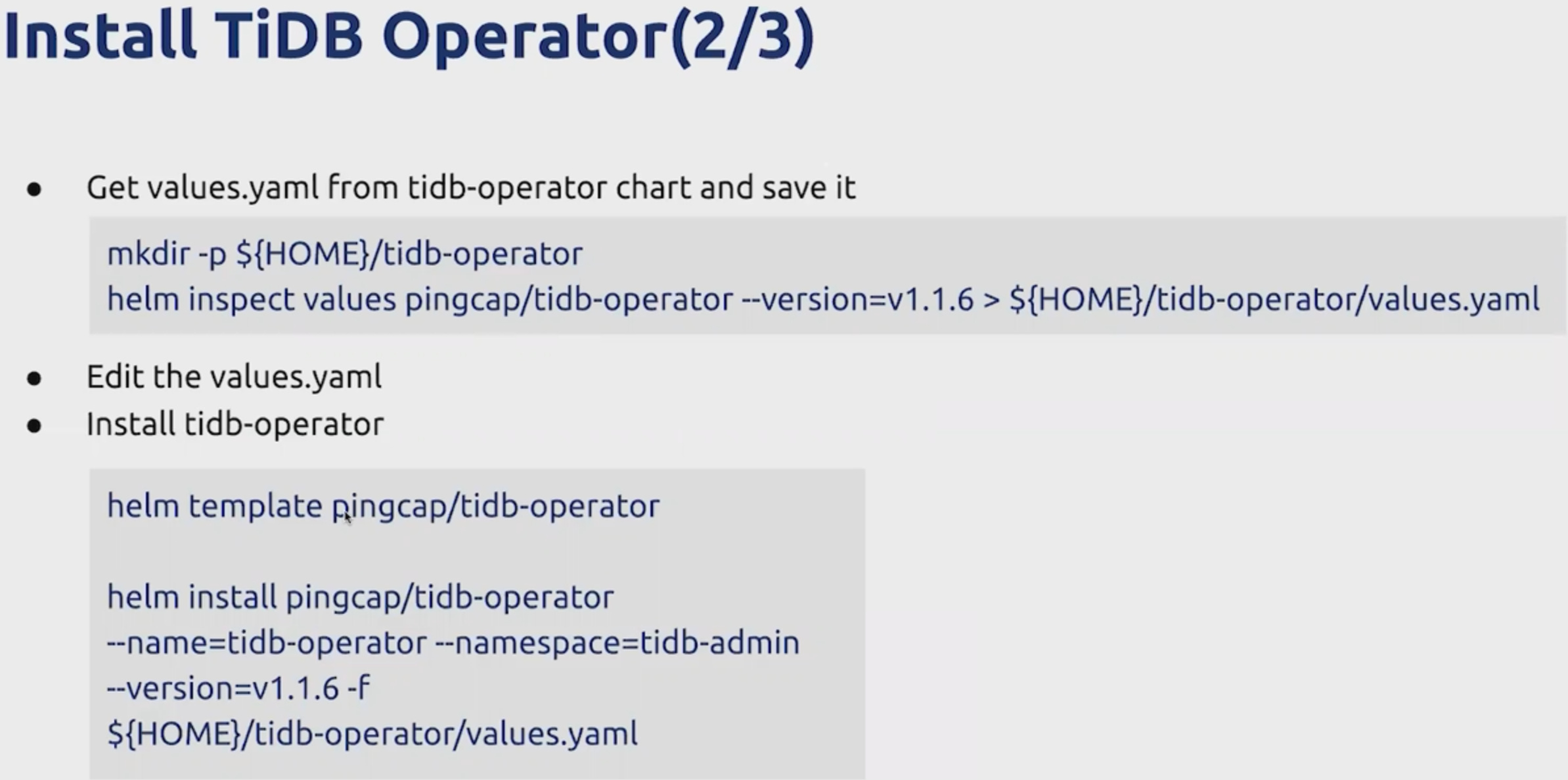

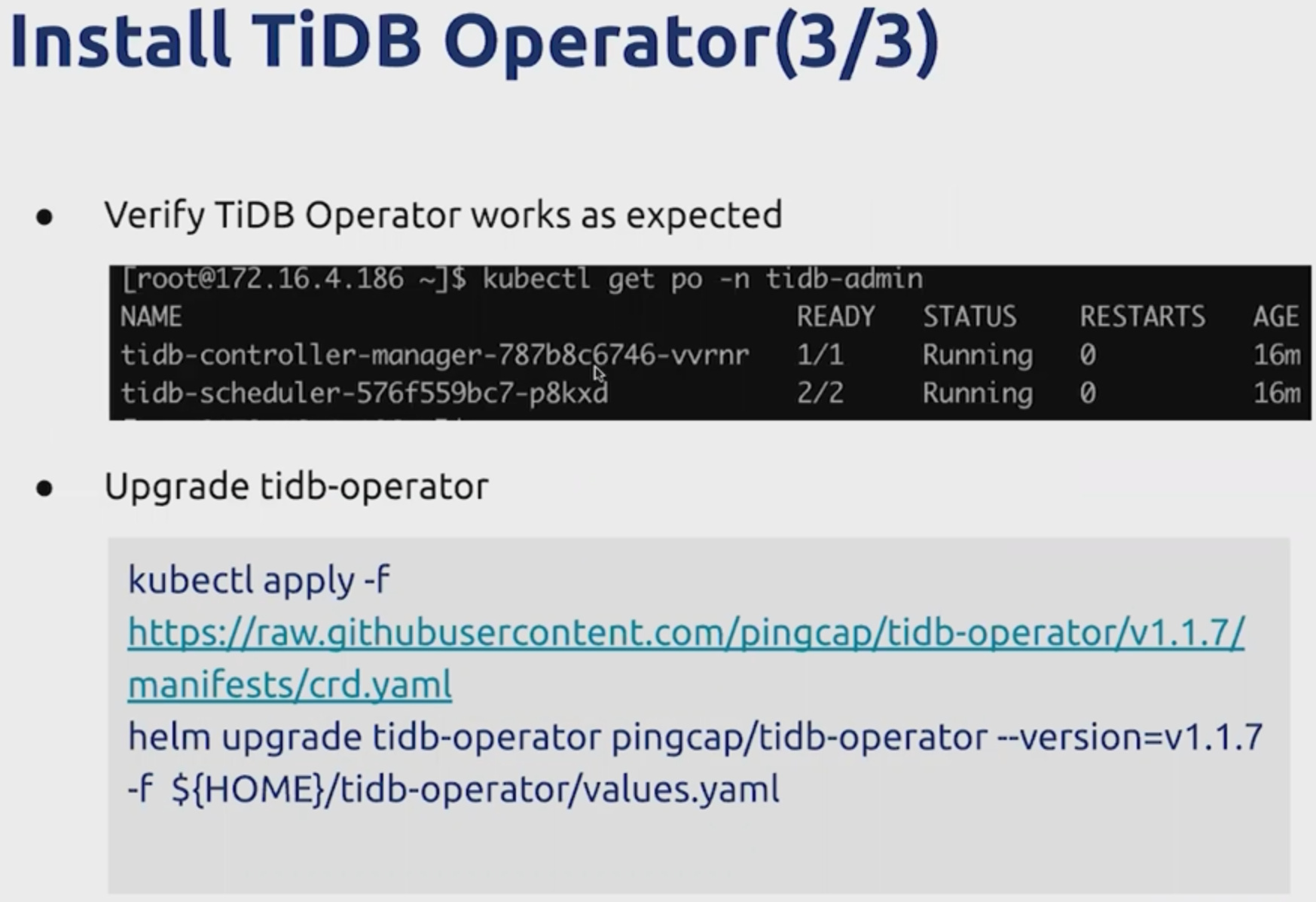

Deploying TiDB Operator

Install the CRD with kubectl apply -f <CRD manifest>, then confirm it is registered with kubectl get crd | grep tidbclusters

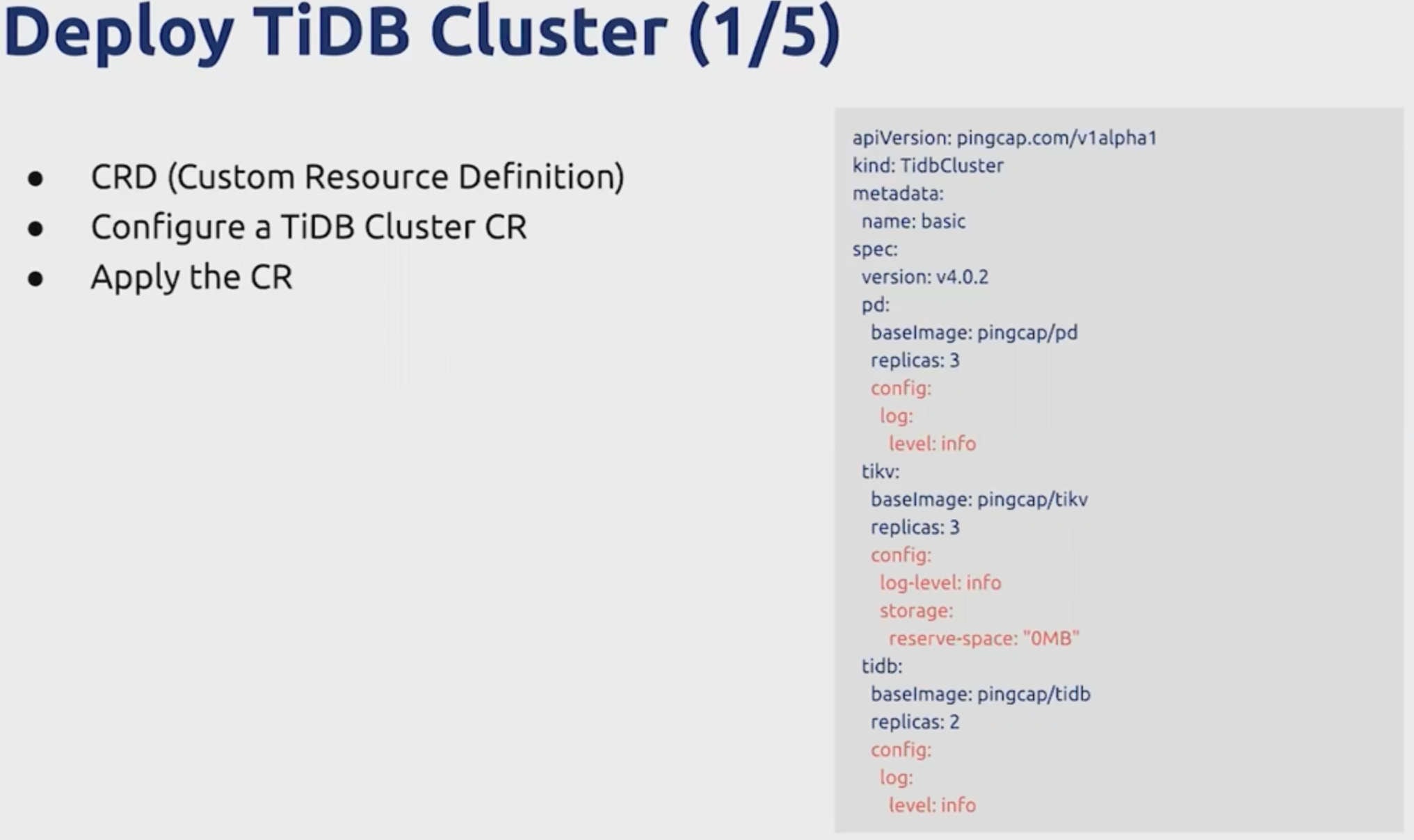

Deploying a TiDB cluster
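With TiDB Operator installed, a cluster is described by a `TidbCluster` custom resource. The sketch below writes a small manifest for a test-sized cluster. The cluster name `basic`, the namespace `tidb-cluster`, the replica counts, and the storage sizes are assumptions for illustration, and the field names should be checked against the Operator version in use.

```shell
#!/bin/sh
# Hypothetical output path for the manifest.
MANIFEST="${MANIFEST:-/tmp/tidb-cluster.yaml}"

# Minimal TidbCluster resource: one PD, one TiKV, one TiDB (test sizing only).
cat > "$MANIFEST" <<'EOF'
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basic
spec:
  version: v4.0.0
  pvReclaimPolicy: Delete
  pd:
    baseImage: pingcap/pd
    replicas: 1
    requests:
      storage: "1Gi"
    config: {}
  tikv:
    baseImage: pingcap/tikv
    replicas: 1
    requests:
      storage: "1Gi"
    config: {}
  tidb:
    baseImage: pingcap/tidb
    replicas: 1
    service:
      type: ClusterIP
    config: {}
EOF

# Print the commands to run next instead of running them here.
echo "kubectl create namespace tidb-cluster"
echo "kubectl apply -f $MANIFEST -n tidb-cluster"
```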

2.3.3 Importing data into TiDB

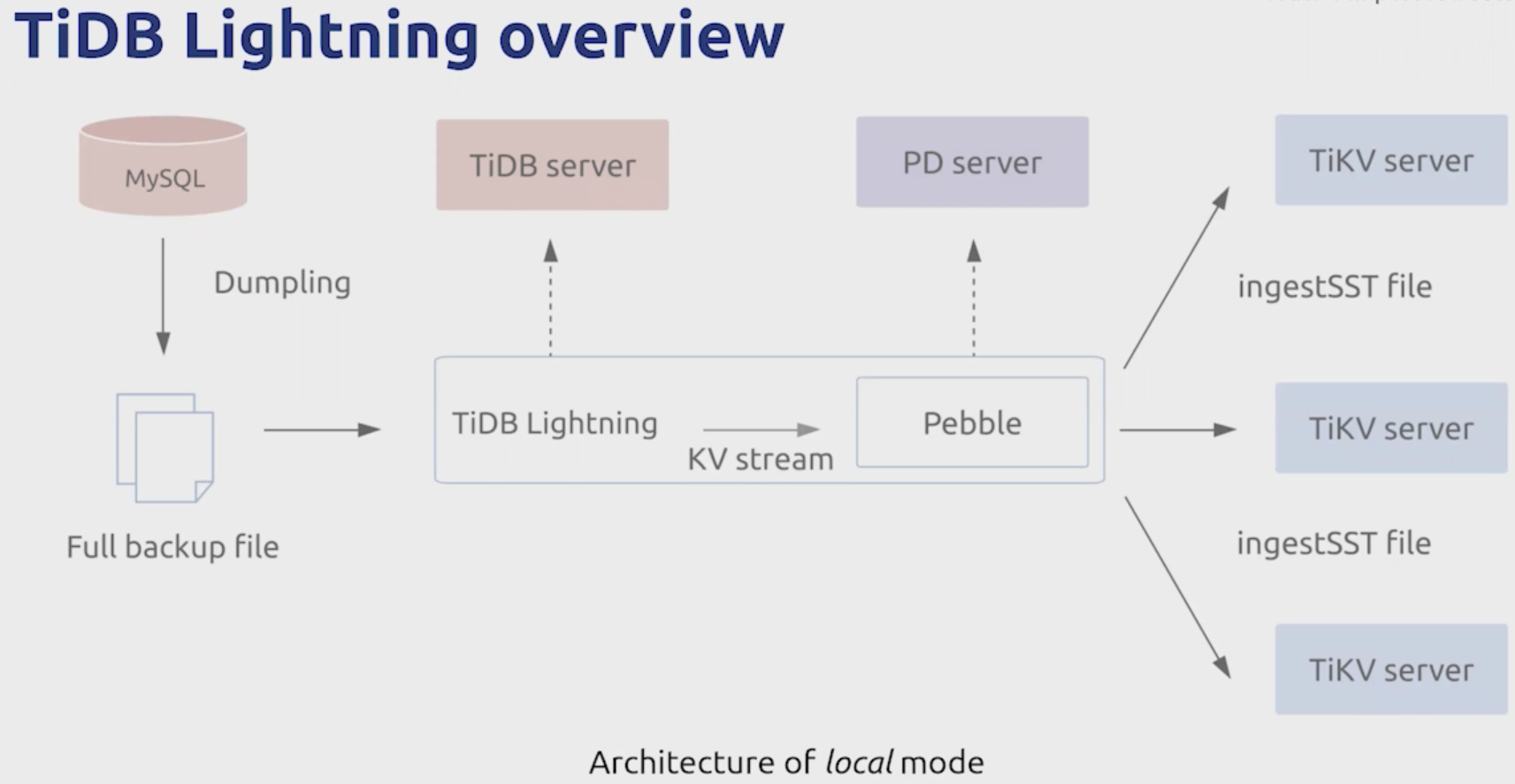

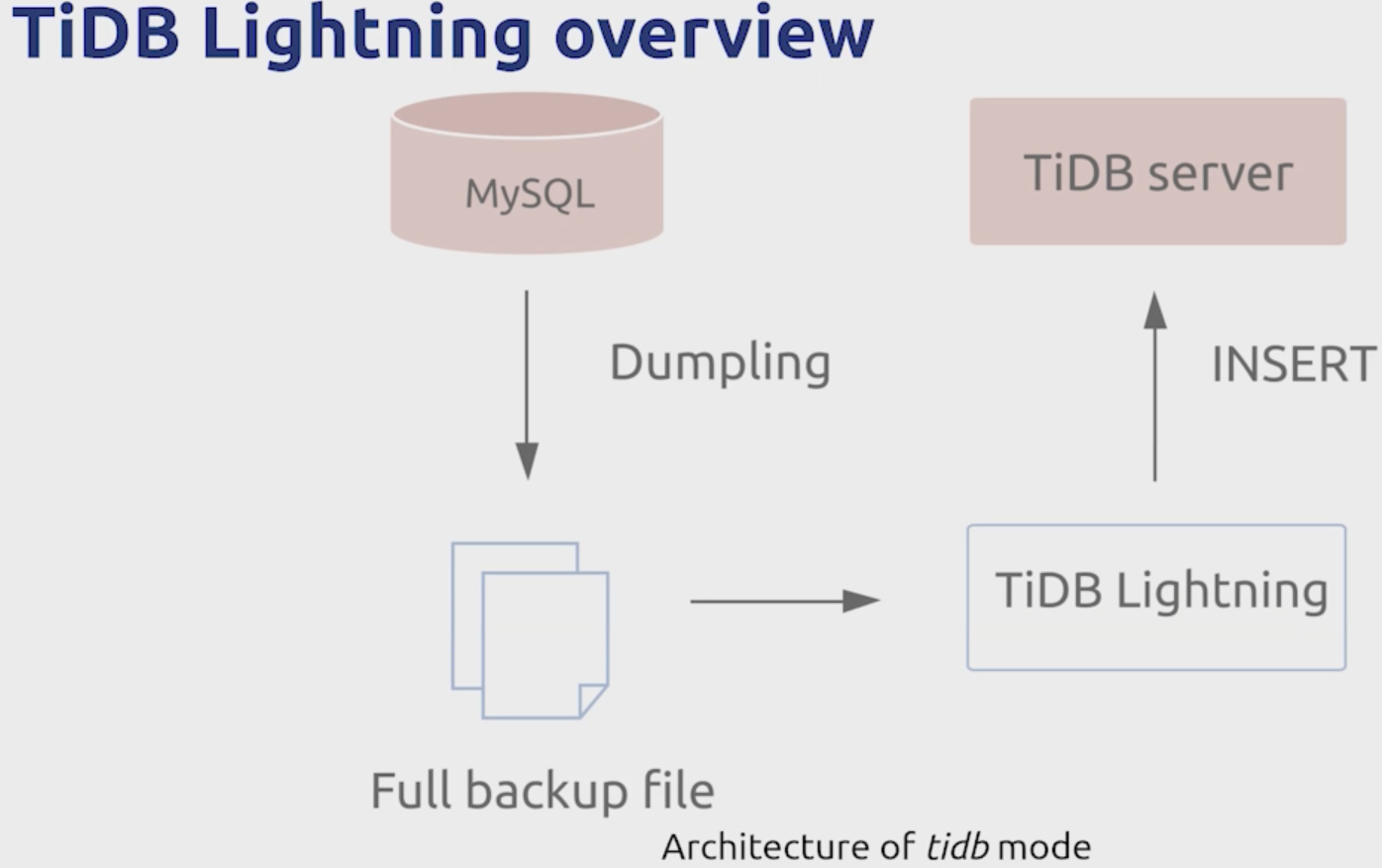

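Full data import

* Tool: TiDB Lightning

* Local mode import

* TiDB mode import

* Table filter: import only some of the databases or tables.

* Checkpoint: if an import is interrupted, it can be restarted and continue from where it stopped. Storing checkpoint data locally is generally recommended.

* Provides a web interface

* Supports CSV, SQL, and other input file formats.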
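The features listed above map onto sections of the TiDB Lightning configuration file. The sketch below writes a small config that uses Local mode, a table filter, and a file-based checkpoint. All paths, the `testDb.*` filter, and the reuse of 172.16.5.140 for both TiDB and PD are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical output path for the Lightning config.
LIGHTNING_CONF="${LIGHTNING_CONF:-/tmp/tidb-lightning.toml}"

cat > "$LIGHTNING_CONF" <<'EOF'
[lightning]
level = "info"
file = "tidb-lightning.log"
# HTTP address that serves the web interface.
status-addr = ":8289"

[checkpoint]
# Keep checkpoints in a local file so an interrupted import can resume.
enable = true
driver = "file"
dsn = "/tmp/tidb_lightning_checkpoint.pb"

[tikv-importer]
# "local" selects Local mode; "tidb" would select TiDB mode.
backend = "local"
sorted-kv-dir = "/mnt/ssd/sorted-kv-dir"

[mydumper]
# Directory holding the CSV or SQL dump files.
data-source-dir = "/data/export"
# Table filter: import only tables in testDb.
filter = ["testDb.*"]

[tidb]
host = "172.16.5.140"
port = 4000
user = "root"
password = ""
status-port = 10080
pd-addr = "172.16.5.140:2379"
EOF

# Print the command to run next instead of running it here.
echo "tidb-lightning -config $LIGHTNING_CONF"
```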

Incremental data import

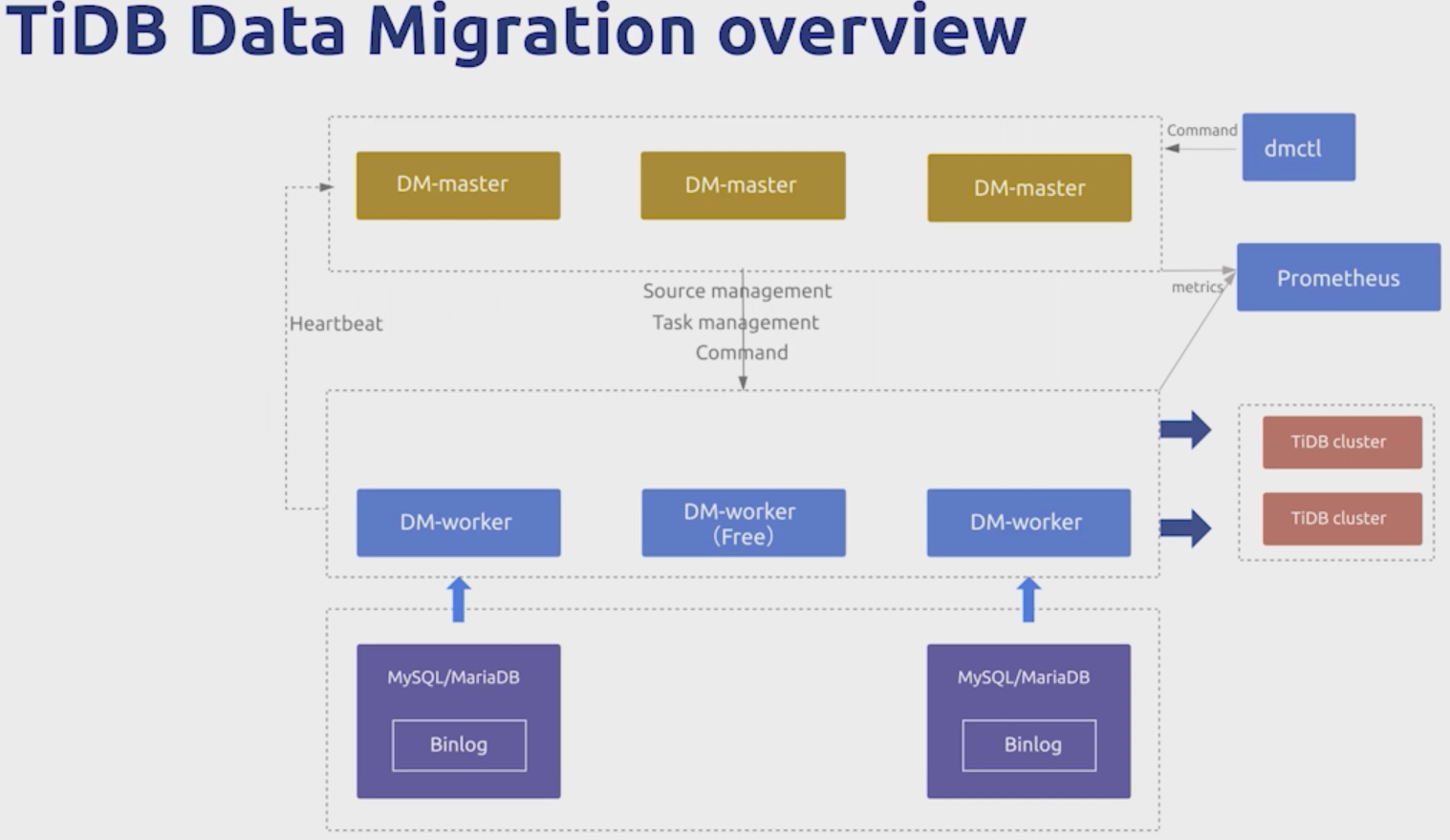

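Tool: TiDB Data Migration (DM), which supports both full and incremental import.

Architecture

* Imports data from upstream databases into a downstream database; upstream data can be routed into differently named downstream tables.

* Supports table filters.

* Binlog: incremental replication is driven by the binlog, and binlog events can be filtered.

* Use cases

  * Incremental backup

  * Merging sharded databases and tables

  * Running full and incremental import together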
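As an illustration of how routing, table filtering, and binlog event filtering fit together, the sketch below writes a DM task file that merges sharded tables into one downstream table with full plus incremental import. The key names are assumed to follow the DM 2.0 task file format (DM 1.0 uses `black-white-list` in place of `block-allow-list`). The source ID, schema and table names, and the downstream address are hypothetical.

```shell
#!/bin/sh
# Hypothetical output path for the task file.
DM_TASK="${DM_TASK:-/tmp/dm-task.yaml}"

cat > "$DM_TASK" <<'EOF'
name: "merge-shards"
# "all" runs a full import first, then continues with incremental replication.
task-mode: "all"

target-database:
  host: "172.16.5.140"
  port: 4000
  user: "root"
  password: ""

mysql-instances:
  - source-id: "mysql-replica-01"
    block-allow-list: "bw-rule-1"
    route-rules: ["route-rule-1"]
    filter-rules: ["filter-rule-1"]

# Table filter: only replicate the sharded schemas.
block-allow-list:
  bw-rule-1:
    do-dbs: ["shard_db_*"]

# Routing: merge every shard_db_*.t_* table into merged_db.t downstream.
routes:
  route-rule-1:
    schema-pattern: "shard_db_*"
    table-pattern: "t_*"
    target-schema: "merged_db"
    target-table: "t"

# Binlog event filter: do not replay destructive DDL from the shards.
filters:
  filter-rule-1:
    schema-pattern: "shard_db_*"
    table-pattern: "t_*"
    events: ["drop table", "truncate table"]
    action: Ignore
EOF

echo "wrote $DM_TASK"
```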

Appendix

Official TiUP cluster configuration file example:

# # Global variables are applied to all deployments and used as the default value of

# # the deployments if a specific deployment value is missing.

global:

user: "tidb"

# # group is used to specify the group name the user belong to.

# group: "tidb"

ssh_port: 22

deploy_dir: "/tidb-deploy"

data_dir: "/tidb-data"

arch: "amd64" # Supported values: "amd64", "arm64" (default: "amd64")

# # Resource Control is used to limit the resource of an instance.

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html

# # Supports using instance-level `resource_control` to override global `resource_control`.

# resource_control:

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#MemoryLimit=bytes

# memory_limit: "2G"

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#CPUQuota=

# # The percentage specifies how much CPU time the unit shall get at maximum, relative to the total CPU time available on one CPU. Use values > 100% for allotting CPU time on more than one CPU.

# # Example: CPUQuota=200% ensures that the executed processes will never get more than two CPU time.

# cpu_quota: "200%"

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#IOReadBandwidthMax=device%20bytes

# io_read_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# io_write_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# # Monitored variables are applied to all the machines.

monitored:

node_exporter_port: 9100

blackbox_exporter_port: 9115

# deploy_dir: "/tidb-deploy/monitored-9100"

# data_dir: "/tidb-data/monitored-9100"

# log_dir: "/tidb-deploy/monitored-9100/log"

# # Server configs are used to specify the runtime configuration of TiDB components.

# # All configuration items can be found in TiDB docs:

# #

# # - TiDB: https://pingcap.com/docs/stable/reference/configuration/tidb-server/configuration-file/

# # - TiKV: https://pingcap.com/docs/stable/reference/configuration/tikv-server/configuration-file/

# # - PD: https://pingcap.com/docs/stable/reference/configuration/pd-server/configuration-file/

# #

# # All configuration items use points to represent the hierarchy, e.g:

# # readpool.storage.use-unified-pool

# # ^ ^

# # You can overwrite this configuration via the instance-level `config` field.

server_configs:

tidb:

# run-ddl: true

# lease: "45s"

# split-table: true

# token-limit: 1000

# mem-quota-query: 1073741824

# nested-loop-join-cache-capacity: 20971520

# oom-use-tmp-storage: true

# tmp-storage-path: "/tmp/<os/user.Current().Uid>_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage"

# tmp-storage-quota: -1

# oom-action: "cancel"

# enable-streaming: false

# enable-batch-dml: false

# lower-case-table-names: 2

# compatible-kill-query: false

# check-mb4-value-in-utf8: true

# treat-old-version-utf8-as-utf8mb4: true

# max-index-length: 3072

# enable-table-lock: false

# delay-clean-table-lock: 0

# split-region-max-num: 1000

# alter-primary-key: false

# server-version: ""

# repair-mode: false

# repair-table-list: []

# max-server-connections: 0

# new_collations_enabled_on_first_bootstrap: false

# skip-register-to-dashboard: false

# enable-telemetry: true

# deprecate-integer-display-length: false

# log.level: "info"

# log.format: "text"

# log.enable-slow-log: true

# log.slow-query-file: "tidb-slow.log"

# log.slow-threshold: 300

# log.record-plan-in-slow-log: 1

# log.expensive-threshold: 10000

# log.query-log-max-len: 4096

# log.file.filename: ""

# log.file.max-size: 300

# log.file.max-days: 0

# log.file.max-backups: 0

# security.ssl-ca: ""

# security.ssl-cert: ""

# security.ssl-key: ""

# security.cluster-ssl-ca: ""

# security.cluster-ssl-cert: ""

# security.cluster-ssl-key: ""

# security.spilled-file-encryption-method: "plaintext"

# status.report-status: true

# status.metrics-addr: ""

# status.metrics-interval: 15

# status.record-db-qps: false

# performance.max-procs: 0

# performance.server-memory-quota: 0

# performance.stmt-count-limit: 5000

# performance.tcp-keep-alive: true

# performance.cross-join: true

# performance.stats-lease: "3s"

# performance.run-auto-analyze: true

# performance.feedback-probability: 0.05

# performance.query-feedback-limit: 512

# performance.pseudo-estimate-ratio: 0.8

# performance.force-priority: "NO_PRIORITY"

# performance.bind-info-lease: "3s"

# performance.distinct-agg-push-down: false

# performance.txn-total-size-limit: 104857600

# performance.txn-entry-size-limit: 6291456

# performance.committer-concurrency: 16

# performance.max-txn-ttl: 3600000

# performance.mem-profile-interval: "1m"

# proxy-protocol.networks: ""

# proxy-protocol.header-timeout: 5

# prepared-plan-cache.enabled: false

# prepared-plan-cache.capacity: 100

# prepared-plan-cache.memory-guard-ratio: 0.1

# opentracing.enable: false

# opentracing.rpc-metrics: false

# opentracing.sampler.type: "const"

# opentracing.sampler.param: 1.0

# opentracing.sampler.sampling-server-url: ""

# opentracing.sampler.max-operations: 0

# opentracing.sampler.sampling-refresh-interval: 0

# opentracing.reporter.queue-size: 0

# opentracing.reporter.buffer-flush-interval: 0

# opentracing.reporter.log-spans: false

# opentracing.reporter.local-agent-host-port: ""

# tikv-client.grpc-connection-count: 4

# tikv-client.grpc-keepalive-time: 10

# tikv-client.grpc-keepalive-timeout: 3

# tikv-client.commit-timeout: "41s"

# tikv-client.max-batch-size: 128

# tikv-client.overload-threshold: 200

# tikv-client.max-batch-wait-time: 0

# tikv-client.batch-wait-size: 8

# tikv-client.enable-chunk-rpc: true

# tikv-client.region-cache-ttl: 600

# tikv-client.store-limit: 0

# tikv-client.store-liveness-timeout: "5s"

# tikv-client.ttl-refreshed-txn-size: 33554432

# tikv-client.async-commit.enable: false

# tikv-client.async-commit.keys-limit: 256

# tikv-client.async-commit.total-key-size-limit: 4096

# tikv-client.copr-cache.enable: true

# tikv-client.copr-cache.capacity-mb: 1000.0

# tikv-client.copr-cache.admission-max-result-mb: 10.0

# tikv-client.copr-cache.admission-min-process-ms: 5

# binlog.enable: false

# binlog.write-timeout: "15s"

# binlog.ignore-error: false

# binlog.binlog-socket: ""

# binlog.strategy: "range"

# pessimistic-txn.max-retry-count: 256

# stmt-summary.enable: true

# stmt-summary.enable-internal-query: false

# stmt-summary.max-stmt-count: 200

# stmt-summary.max-sql-length: 4096

# stmt-summary.refresh-interval: 1800

# stmt-summary.history-size: 24

tikv:

# log-level: "info"

# readpool.unified.min-thread-count: 1

# readpool.unified.max-thread-count: 8

# readpool.unified.stack-size: "10MB"

# readpool.unified.max-tasks-per-worker: 2000

# readpool.storage.use-unified-pool: false

# readpool.storage.high-concurrency: 4

# readpool.storage.normal-concurrency: 4

# readpool.storage.low-concurrency: 4

# readpool.storage.max-tasks-per-worker-high: 2000

# readpool.storage.max-tasks-per-worker-normal: 2000

# readpool.storage.max-tasks-per-worker-low: 2000

# readpool.storage.stack-size: "10MB"

# readpool.coprocessor.use-unified-pool: true

# readpool.coprocessor.high-concurrency: 8

# readpool.coprocessor.normal-concurrency: 8

# readpool.coprocessor.low-concurrency: 8

# readpool.coprocessor.max-tasks-per-worker-high: 2000

# readpool.coprocessor.max-tasks-per-worker-normal: 2000

# readpool.coprocessor.max-tasks-per-worker-low: 2000

# server.status-thread-pool-size: 1

# server.grpc-compression-type: "none"

# server.grpc-concurrency: 4

# server.grpc-concurrent-stream: 1024

# server.grpc-memory-pool-quota: "32G"

# server.grpc-raft-conn-num: 1

# server.grpc-stream-initial-window-size: "2MB"

# server.grpc-keepalive-time: "10s"

# server.grpc-keepalive-timeout: "3s"

# server.concurrent-send-snap-limit: 32

# server.concurrent-recv-snap-limit: 32

# server.end-point-recursion-limit: 1000

# server.end-point-request-max-handle-duration: "60s"

# server.snap-max-write-bytes-per-sec: "100MB"

# server.enable-request-batch: true

# #server.request-batch-enable-cross-command: true

# #server.request-batch-wait-duration: "1ms"

# storage.scheduler-concurrency: 2048000

# storage.scheduler-worker-pool-size: 4

# storage.scheduler-pending-write-threshold: "100MB"

# storage.block-cache.shared: true

# storage.block-cache.capacity: "1GB"

# raftstore.prevote: true

# #raftstore.raftdb-path: ""

# raftstore.capacity: 0

# raftstore.notify-capacity: 40960

# raftstore.messages-per-tick: 4096

# raftstore.pd-heartbeat-tick-interval: "60s"

# raftstore.pd-store-heartbeat-tick-interval: "10s"

# raftstore.region-split-check-diff: "6MB"

# raftstore.split-region-check-tick-interval: "10s"

# raftstore.raft-entry-max-size: "8MB"

# raftstore.raft-log-gc-tick-interval: "10s"

# raftstore.raft-log-gc-threshold: 50

# raftstore.raft-log-gc-count-limit: 72000

# raftstore.raft-log-gc-size-limit: "72MB"

# raftstore.raft-engine-purge-interval: "10s"

# raftstore.max-peer-down-duration: "5m"

# raftstore.region-compact-check-interval: "5m"

# raftstore.region-compact-check-step: 100

# raftstore.region-compact-min-tombstones: 10000

# raftstore.region-compact-tombstones-percent: 30

# raftstore.lock-cf-compact-interval: "10m"

# raftstore.lock-cf-compact-bytes-threshold: "256MB"

# raftstore.consistency-check-interval: "0s"

# raftstore.cleanup-import-sst-interval: "10m"

# raftstore.apply-pool-size: 2

# raftstore.store-pool-size: 2

# coprocessor.split-region-on-table: false

# coprocessor.batch-split-limit: 10

# coprocessor.region-max-size: "144MB"

# coprocessor.region-split-size: "96MB"

# coprocessor.region-max-keys: 1440000

# coprocessor.region-split-keys: 960000

# coprocessor.consistency-check-method: "mvcc"

# rocksdb.max-background-jobs: 8

# rocksdb.max-sub-compactions: 3

# rocksdb.max-open-files: 40960

# rocksdb.max-manifest-file-size: "128MB"

# rocksdb.create-if-missing: true

# rocksdb.wal-recovery-mode: 2

# rocksdb.wal-dir: "/tmp/tikv/store"

# rocksdb.wal-ttl-seconds: 0

# rocksdb.wal-size-limit: 0

# rocksdb.max-total-wal-size: "4GB"

# rocksdb.enable-statistics: true

# rocksdb.stats-dump-period: "10m"

# rocksdb.compaction-readahead-size: 0

# rocksdb.writable-file-max-buffer-size: "1MB"

# rocksdb.use-direct-io-for-flush-and-compaction: false

# rocksdb.rate-bytes-per-sec: 0

# rocksdb.rate-limiter-refill-period: "100ms"

# rocksdb.rate-limiter-mode: 2

# rocksdb.auto-tuned: false

# rocksdb.enable-pipelined-write: true

# rocksdb.bytes-per-sync: "1MB"

# rocksdb.wal-bytes-per-sync: "512KB"

# rocksdb.info-log-max-size: "1GB"

# rocksdb.info-log-roll-time: "0s"

# rocksdb.info-log-keep-log-file-num: 10

# rocksdb.info-log-dir: ""

# rocksdb.info-log-level: "info"

# rocksdb.titan.enabled: false

# rocksdb.titan.max-background-gc: 4

# rocksdb.defaultcf.compression-per-level: ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]

# rocksdb.defaultcf.block-size: "64KB"

# rocksdb.defaultcf.bloom-filter-bits-per-key: 10

# rocksdb.defaultcf.block-based-bloom-filter: false

# rocksdb.defaultcf.level0-file-num-compaction-trigger: 4

# rocksdb.defaultcf.level0-slowdown-writes-trigger: 20

# rocksdb.defaultcf.level0-stop-writes-trigger: 36

# rocksdb.defaultcf.write-buffer-size: "128MB"

# rocksdb.defaultcf.max-write-buffer-number: 5

# rocksdb.defaultcf.min-write-buffer-number-to-merge: 1

# rocksdb.defaultcf.max-bytes-for-level-base: "512MB"

# rocksdb.defaultcf.target-file-size-base: "8MB"

# rocksdb.defaultcf.max-compaction-bytes: "2GB"

# rocksdb.defaultcf.compaction-pri: 3

# rocksdb.defaultcf.cache-index-and-filter-blocks: true

# rocksdb.defaultcf.pin-l0-filter-and-index-blocks: true

# rocksdb.defaultcf.read-amp-bytes-per-bit: 0

# rocksdb.defaultcf.dynamic-level-bytes: true

# rocksdb.defaultcf.optimize-filters-for-hits: true

# rocksdb.defaultcf.titan.min-blob-size: "1KB"

# rocksdb.defaultcf.titan.blob-file-compression: "lz4"

# rocksdb.defaultcf.titan.blob-cache-size: "0GB"

# rocksdb.defaultcf.titan.discardable-ratio: 0.5

# rocksdb.defaultcf.titan.blob-run-mode: "normal"

# rocksdb.defaultcf.titan.level_merge: false

# rocksdb.defaultcf.titan.gc-merge-rewrite: false

# rocksdb.writecf.block-size: "64KB"

# rocksdb.writecf.write-buffer-size: "128MB"

# rocksdb.writecf.max-write-buffer-number: 5

# rocksdb.writecf.min-write-buffer-number-to-merge: 1

# rocksdb.writecf.max-bytes-for-level-base: "512MB"

# rocksdb.writecf.target-file-size-base: "8MB"

# rocksdb.writecf.level0-file-num-compaction-trigger: 4

# rocksdb.writecf.level0-slowdown-writes-trigger: 20

# rocksdb.writecf.level0-stop-writes-trigger: 36

# rocksdb.writecf.cache-index-and-filter-blocks: true

# rocksdb.writecf.pin-l0-filter-and-index-blocks: true

# rocksdb.writecf.compaction-pri: 3

# rocksdb.writecf.read-amp-bytes-per-bit: 0

# rocksdb.writecf.dynamic-level-bytes: true

# rocksdb.writecf.optimize-filters-for-hits: false

# rocksdb.lockcf.compression-per-level: ["no", "no", "no", "no", "no", "no", "no"]

# rocksdb.lockcf.block-size: "16KB"

# rocksdb.lockcf.write-buffer-size: "32MB"

# rocksdb.lockcf.max-write-buffer-number: 5

# rocksdb.lockcf.min-write-buffer-number-to-merge: 1

# rocksdb.lockcf.max-bytes-for-level-base: "128MB"

# rocksdb.lockcf.target-file-size-base: "8MB"

# rocksdb.lockcf.level0-file-num-compaction-trigger: 1

# rocksdb.lockcf.level0-slowdown-writes-trigger: 20

# rocksdb.lockcf.level0-stop-writes-trigger: 36

# rocksdb.lockcf.cache-index-and-filter-blocks: true

# rocksdb.lockcf.pin-l0-filter-and-index-blocks: true

# rocksdb.lockcf.compaction-pri: 0

# rocksdb.lockcf.read-amp-bytes-per-bit: 0

# rocksdb.lockcf.dynamic-level-bytes: true

# rocksdb.lockcf.optimize-filters-for-hits: false

# raftdb.max-background-jobs: 4

# raftdb.max-sub-compactions: 2

# raftdb.max-open-files: 40960

# raftdb.max-manifest-file-size: "20MB"

# raftdb.create-if-missing: true

# raftdb.enable-statistics: true

# raftdb.stats-dump-period: "10m"

# raftdb.compaction-readahead-size: 0

# raftdb.writable-file-max-buffer-size: "1MB"

# raftdb.use-direct-io-for-flush-and-compaction: false

# raftdb.enable-pipelined-write: true

# raftdb.allow-concurrent-memtable-write: false

# raftdb.bytes-per-sync: "1MB"

# raftdb.wal-bytes-per-sync: "512KB"

# raftdb.info-log-max-size: "1GB"

# raftdb.info-log-roll-time: "0s"

# raftdb.info-log-keep-log-file-num: 10

# raftdb.info-log-dir: ""

# raftdb.info-log-level: "info"

# raftdb.optimize-filters-for-hits: true

# raftdb.defaultcf.compression-per-level: ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]

# raftdb.defaultcf.block-size: "64KB"

# raftdb.defaultcf.write-buffer-size: "128MB"

# raftdb.defaultcf.max-write-buffer-number: 5

# raftdb.defaultcf.min-write-buffer-number-to-merge: 1

# raftdb.defaultcf.max-bytes-for-level-base: "512MB"

# raftdb.defaultcf.target-file-size-base: "8MB"

# raftdb.defaultcf.level0-file-num-compaction-trigger: 4

# raftdb.defaultcf.level0-slowdown-writes-trigger: 20

# raftdb.defaultcf.level0-stop-writes-trigger: 36

# raftdb.defaultcf.cache-index-and-filter-blocks: true

# raftdb.defaultcf.pin-l0-filter-and-index-blocks: true

# raftdb.defaultcf.compaction-pri: 0

# raftdb.defaultcf.read-amp-bytes-per-bit: 0

# raftdb.defaultcf.dynamic-level-bytes: true

# raftdb.defaultcf.optimize-filters-for-hits: true

# raft-engine.enable: false

# raft-engine.recovery_mode: "tolerate-corrupted-tail-records"

# raft-engine.bytes-per-sync: "256KB"

# raft-engine.target-file-size: "128MB"

# raft-engine.purge-threshold: "10GB"

# raft-engine.cache-limit: "1GB"

# security.ca-path: ""

# security.cert-path: ""

# security.key-path: ""

# security.cert-allowed-cn: []

# security.redact-info-log: false

# import.num-threads: 8

# import.stream-channel-window: 128

# backup.num-threads: 24

# pessimistic-txn.enabled: true

# pessimistic-txn.wait-for-lock-timeout: "1s"

# pessimistic-txn.wake-up-delay-duration: "20ms"

# pessimistic-txn.pipelined: true

# gc.batch-keys: 512

# gc.max-write-bytes-per-sec: "0"

# gc.enable-compaction-filter: false

# gc.ratio-threshold: 1.1

pd:

# lease: 3

# tso-save-interval: "3s"

# enable-prevote: true

# security.cacert-path: ""

# security.cert-path: ""

# security.key-path: ""

# security.cert-allowed-cn: ["example.com"]

# log.level: "info"

# log.format: "text"

# log.disable-timestamp: false

# log.file.filename: ""

# log.file.max-size: 300

# log.file.max-days: 28

# log.file.max-backups: 7

# schedule.max-merge-region-size: 20

# schedule.max-merge-region-keys: 200000

# schedule.split-merge-interval: "1h"

# schedule.max-snapshot-count: 3

# schedule.max-pending-peer-count: 16

# schedule.max-store-down-time: "30m"

# schedule.leader-schedule-limit: 4

# schedule.region-schedule-limit: 2048

# schedule.replica-schedule-limit: 64

# schedule.merge-schedule-limit: 8

# schedule.hot-region-schedule-limit: 4

# schedule.enable-one-way-merge: false

# schedule.enable-cross-table-merge: false

# replication.max-replicas: 3

# replication.location-labels: ["dc","zone","rack","host"]

# label-property:

# reject-leader:

# - key: "dc"

# value: "sha"

tiflash:

logger.level: "info"

## Deprecated multi-disks storage path setting style since v4.0.9,

## check storage.* configurations in host level for new style.

# path_realtime_mode: false

tiflash-learner:

log-level: "info"

# raftstore.apply-pool-size: 4

# raftstore.store-pool-size: 4

pump:

gc: 7

pd_servers:

- host: 10.0.1.11

# ssh_port: 22

# name: "pd-1"

# client_port: 2379

# peer_port: 2380

# deploy_dir: "/tidb-deploy/pd-2379"

# data_dir: "/tidb-data/pd-2379"

# log_dir: "/tidb-deploy/pd-2379/log"

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.pd` values.

# config:

# schedule.max-merge-region-size: 20

# schedule.max-merge-region-keys: 200000

- host: 10.0.1.12

- host: 10.0.1.13

tidb_servers:

- host: 10.0.1.11

# ssh_port: 22

# port: 4000

# status_port: 10080

# deploy_dir: "/tidb-deploy/tidb-4000"

# log_dir: "/tidb-deploy/tidb-4000/log"

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.tidb` values.

# config:

# log.level: warn

# log.slow-query-file: tidb-slow-overwrited.log

- host: 10.0.1.12

tikv_servers:

- host: 10.0.1.14

# ssh_port: 22

# port: 20160

# status_port: 20180

# deploy_dir: "/tidb-deploy/tikv-20160"

# data_dir: "/tidb-data/tikv-20160"

# log_dir: "/tidb-deploy/tikv-20160/log"

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.tikv` values.

config:

server.labels: { zone: "zone1", host: "host1" }

# server.grpc-concurrency: 4

- host: 10.0.1.15

config:

server.labels: { zone: "zone1", host: "host2" }

- host: 10.0.1.16

config:

server.labels: { zone: "zone2", host: "host3" }

tiflash_servers:

- host: 10.0.1.14

# ssh_port: 22

# tcp_port: 9000

# http_port: 8123

# flash_service_port: 3930

# flash_proxy_port: 20170

# flash_proxy_status_port: 20292

# metrics_port: 8234

# deploy_dir: /tidb-deploy/tiflash-9000

## With cluster version >= v4.0.9 and you want to deploy a multi-disk TiFlash node, it is recommended to

## check config.storage.* for details. The data_dir will be ignored if you defined those configurations.

## Setting data_dir to a ','-joined string is still supported but deprecated.

## Check https://docs.pingcap.com/tidb/stable/tiflash-configuration#multi-disk-deployment for more details.

# data_dir: /tidb-data/tiflash-9000

# log_dir: /tidb-deploy/tiflash-9000/log

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.tiflash` values.

# config:

# logger.level: "info"

# ## Deprecated multi-disks storage path setting. Deprecated since v4.0.9,

# ## check following storage.* configurations for new style.

# # path_realtime_mode: false

# #

# ## Multi-disks storage paths settings. These will overwrite the `data_dir`.

# ## If there are multiple SSD disks on the machine,

# ## specify the path list on `storage.main.dir` can improve TiFlash performance.

# # storage.main.dir: [ "/nvme_ssd0_512/tiflash", "/nvme_ssd1_512/tiflash" ]

# ## Store capacity of each path, i.e. max data size allowed.

# ## If it is not set, or is set to 0s, the actual disk capacity is used.

# # storage.main.capacity: [ 536870912000, 536870912000 ]

# #

# ## If there are multiple disks with different IO metrics (e.g. one SSD and some HDDs)

# ## on the machine,

# ## set `storage.latest.dir` to store the latest data on SSD (disks with higher IOPS metrics)

# ## set `storage.main.dir` to store the main data on HDD (disks with lower IOPS metrics)

# ## can improve TiFlash performance.

# # storage.main.dir: [ "/hdd0_512/tiflash", "/hdd1_512/tiflash", "/hdd2_512/tiflash" ]

# # storage.latest.dir: [ "/nvme_ssd0_100/tiflash" ]

# ## Store capacity of each path, i.e. max data size allowed.

# ## If it is not set, or is set to 0s, the actual disk capacity is used.

# # storage.main.capacity: [ 536870912000, 536870912000 ]

# # storage.latest.capacity = [ 107374182400 ]

#

# # The following configs are used to overwrite the `server_configs.tiflash-learner` values.

# learner_config:

# log-level: "info"

# raftstore.apply-pool-size: 4

# raftstore.store-pool-size: 4

- host: 10.0.1.15

- host: 10.0.1.16

# pump_servers:

# - host: 10.0.1.17

# ssh_port: 22

# port: 8250

# deploy_dir: "/tidb-deploy/pump-8249"

# data_dir: "/tidb-data/pump-8249"

# log_dir: "/tidb-deploy/pump-8249/log"

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.pump` values.

# config:

# gc: 7

# - host: 10.0.1.18

# - host: 10.0.1.19

# drainer_servers:

# - host: 10.0.1.17

# port: 8249

# data_dir: "/tidb-data/drainer-8249"

# # If drainer doesn't have a checkpoint, use initial commitTS as the initial checkpoint.

# # Will get a latest timestamp from pd if commit_ts is set to -1 (the default value).

# commit_ts: -1

# deploy_dir: "/tidb-deploy/drainer-8249"

# log_dir: "/tidb-deploy/drainer-8249/log"

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.drainer` values.

# config:

# syncer.db-type: "mysql"

# syncer.to.host: "127.0.0.1"

# syncer.to.user: "root"

# syncer.to.password: ""

# syncer.to.port: 3306

# syncer.ignore-table:

# - db-name: test

# tbl-name: log

# - db-name: test

# tbl-name: audit

# - host: 10.0.1.19

# cdc_servers:

# - host: 10.0.1.20

# ssh_port: 22

# port: 8300

# deploy_dir: "/tidb-deploy/cdc-8300"

# log_dir: "/tidb-deploy/cdc-8300/log"

# numa_node: "0,1"

# - host: 10.0.1.21

# - host: 10.0.1.22

# NOTE: Only 1 master node is supported for now

tispark_masters:

- host: 10.0.1.21

#spark_config:

# spark.driver.memory: "2g"

# spark.eventLog.enabled: "False"

# spark.tispark.grpc.framesize: 2147483647

# spark.tispark.grpc.timeout_in_sec: 100

# spark.tispark.meta.reload_period_in_sec: 60

# spark.tispark.request.command.priority: "Low"

# spark.tispark.table.scan_concurrency: 256

#spark_env:

# SPARK_EXECUTOR_CORES: 5

# SPARK_EXECUTOR_MEMORY: "10g"

# SPARK_WORKER_CORES: 5

# SPARK_WORKER_MEMORY: "10g"

# NOTE: multiple worker nodes on the same host is not supported

tispark_workers:

- host: 10.0.1.22

- host: 10.0.1.23

monitoring_servers:

- host: 10.0.1.11

# ssh_port: 22

# port: 9090

# deploy_dir: "/tidb-deploy/prometheus-8249"

# data_dir: "/tidb-data/prometheus-8249"

# log_dir: "/tidb-deploy/prometheus-8249/log"

grafana_servers:

- host: 10.0.1.11

# port: 3000

# deploy_dir: /tidb-deploy/grafana-3000

alertmanager_servers:

- host: 10.0.1.11

# ssh_port: 22

# web_port: 9093

# cluster_port: 9094

# deploy_dir: "/tidb-deploy/alertmanager-9093"

# data_dir: "/tidb-data/alertmanager-9093"

# log_dir: "/tidb-deploy/alertmanager-9093/log"