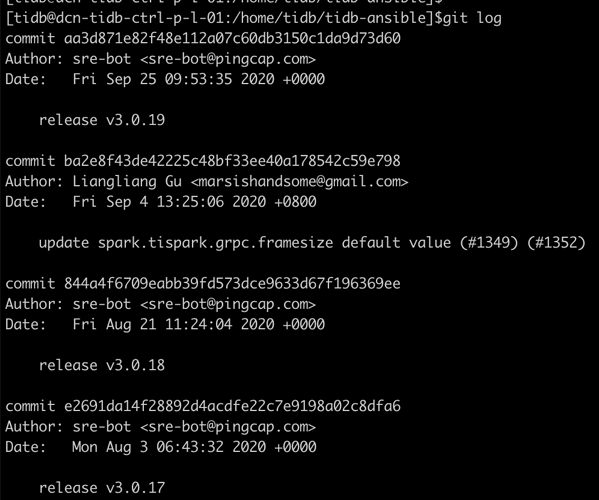

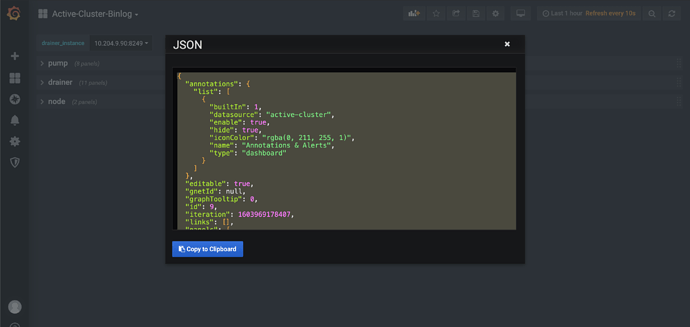

While upgrading TiDB from 2.1 to 3.0.19, the rolling update of the monitoring components failed, leaving the Grafana dashboards incomplete.

Grafana was upgraded successfully, and Prometheus was upgraded successfully as well.

Is there any way to fix this?

Problem

[10.204.9.17]: Ansible FAILED! => playbook: rolling_update_monitor.yml; TASK: import grafana dashboards - run import script; message: {"changed": true, "cmd": "python grafana-config-copy.py dests-10.204.9.17.json", "delta": "0:01:00.987482", "end": "2020-10-25 23:56:53.931952", "msg": "non-zero return code", "rc": 1, "start": "2020-10-25 23:55:52.944470", "stderr": "Traceback (most recent call last):

File "grafana-config-copy.py", line 142, in <module>

ret = import_dashboard_via_user_pass(dest['url'], dest['user'], dest['password'], dashboard)

File "grafana-config-copy.py", line 124, in import_dashboard_via_user_pass

resp = urllib2.urlopen(req)

File "/usr/lib64/python2.7/urllib2.py", line 154, in urlopen

return opener.open(url, data, timeout)

File "/usr/lib64/python2.7/urllib2.py", line 431, in open

response = self._open(req, data)

File "/usr/lib64/python2.7/urllib2.py", line 449, in _open

'_open', req)

File "/usr/lib64/python2.7/urllib2.py", line 409, in _call_chain

result = func(*args)

File "/usr/lib64/python2.7/urllib2.py", line 1244, in http_open

return self.do_open(httplib.HTTPConnection, req)

File "/usr/lib64/python2.7/urllib2.py", line 1217, in do_open

r = h.getresponse(buffering=True)

File "/usr/lib64/python2.7/httplib.py", line 1089, in getresponse

response.begin()

File "/usr/lib64/python2.7/httplib.py", line 444, in begin

version, status, reason = self._read_status()

File "/usr/lib64/python2.7/httplib.py", line 408, in _read_status

raise BadStatusLine(line)

httplib.BadStatusLine: ''", "stderr_lines": ["Traceback (most recent call last):", " File "grafana-config-copy.py", line 142, in <module>", " ret = import_dashboard_via_user_pass(dest['url'], dest['user'], dest['password'], dashboard)", " File "grafana-config-copy.py", line 124, in import_dashboard_via_user_pass", " resp = urllib2.urlopen(req)", " File "/usr/lib64/python2.7/urllib2.py", line 154, in urlopen", " return opener.open(url, data, timeout)", " File "/usr/lib64/python2.7/urllib2.py", line 431, in open", " response = self._open(req, data)", " File "/usr/lib64/python2.7/urllib2.py", line 449, in _open", " '_open', req)", " File "/usr/lib64/python2.7/urllib2.py", line 409, in _call_chain", " result = func(*args)", " File "/usr/lib64/python2.7/urllib2.py", line 1244, in http_open", " return self.do_open(httplib.HTTPConnection, req)", " File "/usr/lib64/python2.7/urllib2.py", line 1217, in do_open", " r = h.getresponse(buffering=True)", " File "/usr/lib64/python2.7/httplib.py", line 1089, in getresponse", " response.begin()", " File "/usr/lib64/python2.7/httplib.py", line 444, in begin", " version, status, reason = self._read_status()", " File "/usr/lib64/python2.7/httplib.py", line 408, in _read_status", " raise BadStatusLine(line)", "httplib.BadStatusLine: ''"], "stdout": "[load] from <node.json>:node

[import] <Pay-Cluster-Node_exporter> to [Pay-Cluster]\t… ", "stdout_lines": ["[load] from <node.json>:node", "[import] <Pay-Cluster-Node_exporter> to [Pay-Cluster]\t… "]}
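
The traceback ends in `httplib.BadStatusLine: ''`, which means the import script got an empty reply from Grafana while posting the first dashboard (the connection was closed before any HTTP status line arrived). One possible way to check whether Grafana itself is answering HTTP requests is sketched below in Python 3 (the playbook's own script runs on Python 2.7). This is only a diagnostic sketch under assumptions, not part of tidb-ansible: `call_with_retry` and `grafana_health` are hypothetical helper names, and `/api/health` is Grafana's health-check endpoint.

```python
import http.client
import json
import time
import urllib.request


def call_with_retry(fn, attempts=3, delay=2.0, sleep=time.sleep):
    """Call fn() and retry if the server drops the connection.

    BadStatusLine covers the "empty reply" case seen in the traceback;
    OSError covers refused connections and timeouts (URLError is an OSError).
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except (http.client.BadStatusLine, OSError) as exc:
            last_error = exc
            if attempt < attempts:
                sleep(delay)  # wait before the next attempt
    raise last_error


def grafana_health(base_url, timeout=10):
    """Return the parsed JSON body of Grafana's /api/health endpoint."""
    url = base_url.rstrip("/") + "/api/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `call_with_retry(lambda: grafana_health("http://10.204.9.17:3000"))` would query the host from the log above; port 3000 is only Grafana's default and is an assumption here, so use the port actually configured in your inventory. If the health check succeeds, re-running the `rolling_update_monitor.yml` playbook may be enough to import the remaining dashboards.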